AI Data Centers Are Set to Strain US Energy and Water Resources by 2030

The rapid rise of artificial intelligence has reshaped nearly every sector, but behind the convenience of AI assistants, generative models, and large-scale cloud tools lies a growing infrastructure challenge. A new study from researchers at Cornell University provides a detailed and sobering look at how fast-growing AI data centers could impact energy use, water resources, and carbon emissions across the United States by the end of this decade. Their work not only quantifies the scale of this environmental footprint but also offers a roadmap for how to drastically reduce it—if industry and policymakers act soon.

According to the research team, the current rate of AI expansion could drive annual emissions from AI data centers to 24–44 million metric tons of carbon dioxide by 2030. For scale, that is roughly the annual emissions of 5–10 million additional cars on American roads. On top of that, these facilities could consume 731–1,125 million cubic meters of water annually, a volume comparable to what 6–10 million Americans use in their homes each year. All of this stems from the energy-hungry and water-intensive nature of training and running large-scale AI models.
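The equivalences above can be sanity-checked with simple division. The sketch below uses only the figures quoted in this article; pairing the low end of each range with the low end of its comparison (and high with high) is an assumption made here for illustration, not something the study states.

```python
# Figures quoted in the article (2030 projections, low and high ends).
EMISSIONS_MT = (24, 44)          # million metric tons of CO2 per year
CARS_MILLION = (5, 10)           # million cars with comparable annual emissions
WATER_MILLION_M3 = (731, 1125)   # million cubic meters of water per year
PEOPLE_MILLION = (6, 10)         # million Americans with comparable home use


def per_unit(totals_in_millions, units_in_millions):
    """Divide low by low and high by high; the 'millions' cancel out.

    Assumption: the low ends of the two ranges correspond to each other,
    and likewise the high ends.
    """
    return tuple(t / u for t, u in zip(totals_in_millions, units_in_millions))


tons_per_car = per_unit(EMISSIONS_MT, CARS_MILLION)
m3_per_person = per_unit(WATER_MILLION_M3, PEOPLE_MILLION)

print(f"Implied CO2 per car: {tons_per_car[0]:.1f} and {tons_per_car[1]:.1f} t/year")
print(f"Implied home water use per person: "
      f"{m3_per_person[0]:.1f} and {m3_per_person[1]:.1f} m3/year")
```

Under that pairing, the comparison implies between 4 and 5 metric tons of CO₂ per car per year and a little over 110 cubic meters of household water per person per year.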

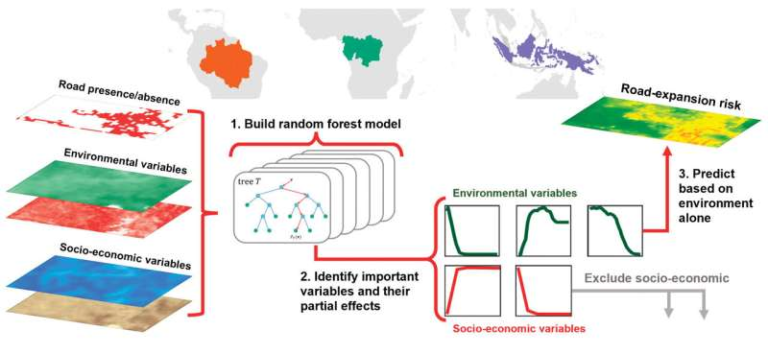

The team spent three years gathering what they describe as “multiple dimensions” of data: industry production and financial data, power-grid information, water-resource statistics, and climate-related variables. Because many companies don’t publicly report everything needed for this level of analysis, they also used AI-based modeling to fill in some of the gaps. This approach let them build a state-by-state map of projected AI server deployments and their environmental consequences.

Their findings suggest that continued growth without intervention could put the AI sector’s climate targets out of reach. Even if the electricity powering each server becomes cleaner over time, overall emissions will still rise if AI demand grows faster than the grid decarbonizes, and according to the study, that is exactly what is happening right now.

However, the study offers more than warnings. It shows that coordinated planning and updated strategies can significantly reduce the environmental burden. The researchers identified three major levers: where data centers are built, how fast local grids adopt clean energy, and how efficiently servers and cooling systems operate. If all three strategies are pursued together, the analysis suggests emissions could be cut by about 73%, and water consumption could be reduced by around 86% compared with the projected worst-case scenario.

One of the biggest takeaways is the importance of location. Many current AI server clusters are concentrated in areas with limited water resources. States like Nevada and Arizona, both of which suffer from significant water scarcity, have seen rapid data-center growth. Meanwhile, northern Virginia—already one of the world’s largest data-center hubs—faces infrastructure strain and potential local water issues.

In contrast, several regions stand out as far more sustainable choices. The Midwest and wind-belt states such as Texas, Montana, Nebraska, and South Dakota offer a much better combined water-and-carbon profile. Whether through abundant wind energy, lower water stress, or both, siting new AI facilities in these regions could dramatically reduce environmental impacts. The study also highlights New York state as a particularly favorable location thanks to its low-carbon electricity mix of nuclear, hydropower, and rapidly growing renewables, though water-efficient cooling would still be essential.

Still, even smart siting can only address part of the problem. The grid itself must continue to decarbonize—faster than it is now. If the grid doesn’t keep up, total emissions from AI will climb by approximately 20% even as electricity becomes cleaner per kilowatt-hour. That’s because demand from AI will surge at a much higher rate than the grid’s transition to wind, solar, and other clean sources.
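The reasoning here is multiplicative: total emissions are electricity demand times the grid's carbon intensity, so a cleaner grid lowers emissions only if intensity falls faster than demand rises. The sketch below illustrates this. The two input percentages are hypothetical, chosen only to show how a result of about 20% can arise; they are not figures from the study.

```python
def emissions_change(demand_growth, intensity_change):
    """Fractional change in total emissions.

    Emissions = electricity demand x carbon intensity (CO2 per kWh),
    so the two growth factors multiply.
    """
    return (1 + demand_growth) * (1 + intensity_change) - 1


# HYPOTHETICAL inputs for illustration only (not from the study):
# demand up 50%, carbon intensity per kWh down 20%.
HYPOTHETICAL_DEMAND_GROWTH = 0.50
HYPOTHETICAL_INTENSITY_CHANGE = -0.20

change = emissions_change(HYPOTHETICAL_DEMAND_GROWTH, HYPOTHETICAL_INTENSITY_CHANGE)
print(f"Change in total emissions: {change:+.0%}")
```

With these illustrative inputs, each kilowatt-hour is 20% cleaner, yet total emissions still end up about 20% higher because demand grew faster.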

Even the most ambitious clean-energy scenario won’t completely eliminate emissions by 2030. The research suggests that about 11 million tons of CO₂ would remain even under a high-renewables model, requiring large-scale offsets like 28 gigawatts of wind capacity or 43 gigawatts of solar power to achieve true net-zero status. That’s equivalent to dozens of large wind farms or solar installations dedicated solely to balancing the footprint of AI infrastructure.
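As a rough reading of those offset figures, the sketch below divides the residual emissions by each capacity number to get the implied CO₂ offset per gigawatt. It uses only the three numbers quoted above and treats "tons" as given, since the article does not say whether these are metric tons. The interpretation in the closing note is an assumption, not a claim from the study.

```python
# Figures quoted in the article for the high-renewables scenario.
RESIDUAL_TONS = 11e6   # tons of CO2 remaining per year
WIND_GW = 28           # wind capacity said to offset the residual
SOLAR_GW = 43          # solar capacity said to offset the residual

# Implied annual CO2 offset per gigawatt of installed capacity.
tons_per_gw_wind = RESIDUAL_TONS / WIND_GW
tons_per_gw_solar = RESIDUAL_TONS / SOLAR_GW

# How much more solar than wind capacity the same offset takes.
solar_to_wind_ratio = SOLAR_GW / WIND_GW

print(f"Wind:  {tons_per_gw_wind:,.0f} tons CO2 per GW per year")
print(f"Solar: {tons_per_gw_solar:,.0f} tons CO2 per GW per year")
print(f"Solar capacity needed relative to wind: {solar_to_wind_ratio:.2f}x")
```

The quoted figures imply that about 1.5 times as much solar capacity as wind capacity is needed for the same offset, which would be consistent with solar producing less energy per installed gigawatt.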

The third component—operational efficiency—offers smaller but still meaningful gains. Technologies such as advanced liquid cooling, improved server utilization, and better load management could collectively cut around 7% of carbon emissions and 29% of water use. When combined with smarter siting, this brings total water reductions to more than 32% and significantly helps reduce the overall carbon footprint.

These details underscore a larger point: the AI industry is at a pivotal moment. Companies like OpenAI, Google, and others are investing billions into new facilities, and utilities are racing to meet demand. Without coordinated long-term planning among the tech industry, regulators, and energy providers, the nation risks locking itself into infrastructure that overburdens local resources and slows climate progress.

The research team emphasizes that the window for responsible decision-making is right now. The AI boom is still early enough that strategic choices made this decade will shape its long-term environmental impact. Building in water-stressed areas, relying on slow-to-decarbonize grids, or ignoring cooling efficiency could create a legacy of environmental strain. On the other hand, adopting sustainable siting policies, rapidly expanding clean-energy production, and deploying efficient cooling can set the stage for AI growth that aligns with climate goals.

Beyond the specifics of the study, it’s worth understanding why AI data centers consume such massive amounts of resources. Training large AI models requires running an enormous number of mathematical operations across thousands of servers for weeks or months. Each server generates heat, so data centers consume vast amounts of electricity both for computation and for cooling systems. Water is often used to remove heat through evaporative or liquid cooling, especially in hotter climates. Running AI systems at scale also means keeping those servers on 24/7 to answer user requests, even when no new models are being trained. As AI becomes integrated into everyday tools, demand keeps increasing, all the more so as new models grow larger and more resource-hungry.

Different types of cooling also influence environmental impact. Traditional air cooling consumes more electricity but less water, while evaporative cooling uses much less energy but can require enormous amounts of water depending on climate conditions. Liquid immersion cooling, one of the newer methods, can dramatically improve efficiency, but it remains costly and difficult to deploy in older facilities. These trade-offs mean every data center must balance cost, sustainability, climate, and long-term operational plans.

This broader context helps explain why the Cornell study stresses that there is no single solution. Sustainable AI depends on integrating multiple strategies simultaneously—choosing the right location, improving technology, and transforming energy systems at a national scale.

The researchers’ message is clear: AI has the potential to accelerate climate solutions, optimize power grids, and model environmental systems. But if the infrastructure supporting AI is not built responsibly, it could create a new environmental burden that undermines those very benefits. The next few years will determine which path the industry takes.

Research Paper:

Environmental impact and net-zero pathways for sustainable artificial intelligence servers in the USA

https://www.nature.com/articles/s41893-025-01681-y