Generative Chatbots Aim to Personalize Learning at Scale but Accuracy Problems Create New Risks

Generative AI has sparked a wave of excitement in education, especially because of its potential to offer personalized learning to an unlimited number of students at once. In traditional classrooms, personalization is powerful but limited — a single instructor can only give so much one-on-one time. As classes get larger or shift online, individualized support becomes harder to maintain. This is where pedagogical chatbots enter the picture, promising to fill that gap by giving learners instant explanations, tailored guidance, and on-demand support.

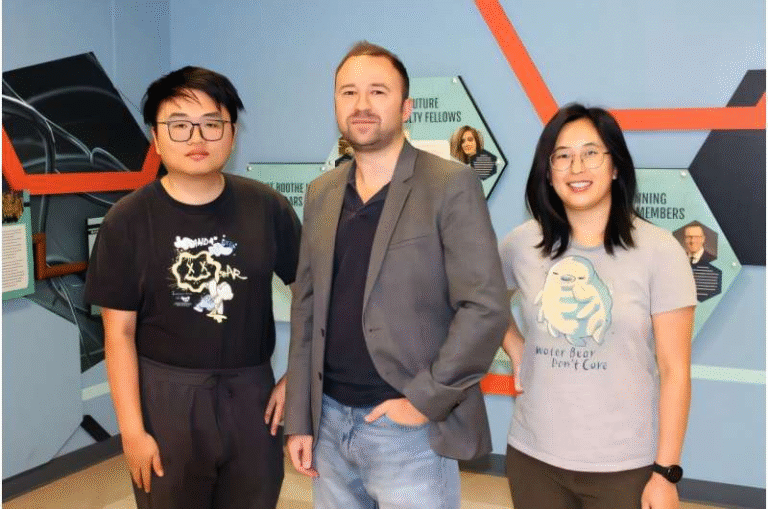

A research team led by Assistant Professor Tiffany Li, who specializes in human-computer interaction and education technology, set out to test how reliable and safe these chatbots are when used for learning. Their goal wasn’t to establish whether chatbots can teach, since they can already explain material well, but to examine what happens when they make mistakes, as generative systems are known to do. The team wanted to understand whether students can detect those errors, how often they miss them, and what effect the errors have on actual learning.

Li and her team built a chatbot designed specifically to teach introductory statistics, placing it inside a learning environment similar to what you might see on a large online course platform such as Coursera. Importantly, they programmed the chatbot to intentionally insert specific factual errors into some of its responses. These weren’t random hallucinations — they were carefully designed inaccuracies relevant to the content students were learning, ensuring the researchers could measure exactly when and where learners encountered incorrect information.

To conduct the study, the team recruited 177 participants, including both college students and adult learners. As they progressed through statistics practice problems and learning modules, participants were free to consult the chatbot whenever they needed help. To keep the setup fair, learners also had access to additional resources: an online textbook and search engines. They were therefore not forced to trust the chatbot and could verify anything they felt uncertain about.

Participants were also given a dedicated button for reporting chatbot errors. To encourage active checking, the researchers offered a small monetary bonus for correctly identifying faulty answers. With incentives, tools, and educational material all available, the study simulated a realistic but supportive environment where learners could verify the chatbot’s information.

Despite all of this support, the results were concerning. On average, learners had only about a 15% chance of reporting any given chatbot error. Even when errors were directly relevant to the topic being studied and could be checked with resources right in front of them, learners overwhelmingly failed to flag the mistakes.

This failure had serious consequences. When students encountered chatbot errors, their accuracy on practice problems fell to between 25% and 30%. By contrast, learners in the no-error control group, who interacted with the same chatbot minus the injected mistakes, averaged between 60% and 66% on the very same tasks. Taken at face value, those ranges imply a gap of at least 30 percentage points, underscoring how strongly incorrect chatbot responses can influence learners who implicitly trust the system.
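To make the size of that difference concrete, here is a minimal sketch of the arithmetic using only the accuracy ranges quoted above. The function names are hypothetical, and treating each range as simple lower and upper bounds that can be compared directly is an assumption; the article does not say exactly what the ranges span.

```python
# Accuracy ranges as quoted in the article (percent correct on practice problems).
error_group = (25.0, 30.0)    # learners exposed to injected chatbot errors
control_group = (60.0, 66.0)  # same chatbot, no injected errors


def midpoint(bounds):
    """Middle of a (low, high) range; an illustrative summary, not a reported figure."""
    low, high = bounds
    return (low + high) / 2


def gap_range(exposed, control):
    """Smallest and largest gap, in percentage points, consistent with the two ranges."""
    smallest = control[0] - exposed[1]  # lowest control score vs. highest exposed score
    largest = control[1] - exposed[0]   # highest control score vs. lowest exposed score
    return smallest, largest


smallest_gap, largest_gap = gap_range(error_group, control_group)
ratio = midpoint(control_group) / midpoint(error_group)

print(f"Gap: {smallest_gap:.0f} to {largest_gap:.0f} percentage points")
print(f"Control accuracy is roughly {ratio:.1f}x the error-exposed accuracy")
```

Under these assumptions the gap works out to between 30 and 41 percentage points, and the control group's midpoint accuracy is a little more than twice that of the error-exposed group.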

Li’s team didn’t stop at measuring outcomes; they also investigated why students struggled to catch errors. One factor was over-reliance on prior knowledge. Many learners only checked answers if something felt “off,” but if a student lacked foundational knowledge, they had no internal alarm telling them to double-check. On top of that, chatbots tend to respond with fluent, confident language, which creates the impression of expertise even when the content is wrong. This confidence effect subtly convinces learners that the system “knows better,” leading them to accept incorrect explanations without question.

The study also uncovered differences among participants. Some groups were more vulnerable to chatbot errors than others. For example, learners with little prior knowledge of statistics were less likely to detect mistakes and more likely to experience severe drops in their performance. Those with less experience using chatbots had similar difficulties; unfamiliarity with generative AI meant they trusted the system too readily. Non-native English speakers were also less likely to report errors, possibly because linguistic fluency shaped their confidence in challenging the chatbot’s answers. The researchers additionally found that female participants experienced greater declines in performance when exposed to incorrect chatbot responses.

These findings suggest that generative chatbots not only pose general learning risks but may also widen existing inequities among learners. If certain groups are consistently harmed more by chatbot mistakes, simply deploying AI tutors widely could unintentionally create unequal learning outcomes.

Given the results, Li recommends being strategic about when and how instructors incorporate chatbots into coursework. For example, she argues that chatbots might not be ideal when students are first encountering complex concepts, because they lack the foundation needed to evaluate explanations. Instead, chatbots may be more suitable later in a course, once students have developed a minimum level of prior knowledge that helps them verify information more effectively. In other words, building a base of understanding before introducing AI tools may serve as an important protective layer.

While chatbots are promising — especially for large-scale or self-paced learning — this study highlights a fundamental problem: their errors are too easy to miss, and those errors can significantly damage learning. Since generative systems inevitably produce mistakes, especially in technical subjects, educators need to understand the risks before integrating them into teaching environments.

This research adds to a broader conversation about the role of generative AI in education. Chatbots can certainly enhance learning, improve accessibility, and support personalization. But they also require careful oversight, meaningful guardrails, and thoughtful design. Students need training to critically evaluate chatbot responses, instructors must be aware of students’ vulnerability to AI-generated inaccuracies, and developers must prioritize reliability and transparency.

As chatbots continue to find their way into classrooms, online courses, and self-learning platforms, studies like this one remind us that generative AI is a helpful assistant but not an infallible teacher. Understanding how learners interact with imperfections in these systems — and how those imperfections shape learning outcomes — is essential as education moves deeper into the AI-assisted era.

Research Reference:

https://doi.org/10.1145/3698205.3729550