Yale Researchers Combine AI Learning and Control Theory to Help Robots Perform Complex, Multi-Step Movements

Robots have become remarkably good at individual physical feats. With the help of artificial intelligence, machines can now run, jump, balance, and even perform acrobatic tricks like backflips. However, teaching a robot to combine multiple advanced movements smoothly and reliably is still a major challenge. A new research effort from Yale University takes a meaningful step toward solving this problem by blending AI-based learning methods with classical control theory, allowing robots to perform complex, compound actions with greater precision and stability.

The research, led by Ian Abraham, an assistant professor of mechanical engineering at Yale, focuses on a core limitation of modern robotic learning. While AI techniques such as reinforcement learning excel at teaching robots single, specialized skills, their performance often drops when those skills need to be chained together. For example, training a robot to execute a backflip is one task. Training it to perform a backflip followed immediately by a stable handstand is far more difficult. When a single AI model is trained to handle multiple tasks at once, the resulting behavior is often less reliable than the behavior obtained by training on each task separately.

To address this issue, Abraham and his team turned to optimal control theory, a mathematically grounded framework that focuses on choosing actions that minimize errors, energy use, or other costs. More specifically, the team employed hybrid control theory, a branch of control science that deals with systems capable of switching between different control modes depending on the situation.
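For readers who want the notation, a generic textbook form of such a problem can be written as below. This is an illustration only, not the formulation used in the Yale paper, and every symbol is an assumption introduced here: $x$ is the robot state, $u$ the control input, $\ell$ a running cost (for example tracking error plus energy use), $\phi$ a terminal cost, $T$ the time horizon, and $f_{\sigma}$ the dynamics under control mode $\sigma$.

$$
\min_{u(\cdot),\,\sigma(\cdot)} \; J = \int_0^T \ell\big(x(t), u(t)\big)\,dt + \phi\big(x(T)\big)
\quad \text{subject to} \quad \dot{x}(t) = f_{\sigma(t)}\big(x(t), u(t)\big), \quad x(0) = x_0
$$

Here $\sigma(t) \in \{1, \dots, M\}$ selects which of $M$ control modes is active at time $t$. In a hybrid setting the optimizer chooses not only the inputs but also this switching signal.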

At the heart of this research is the idea that robots should not rely on a single learning method at all times. Instead, they should be able to switch intelligently between different learning and control strategies as a task unfolds. Some movements require careful planning and prediction, while others benefit from learned reflexes that respond instantly to feedback. Hybrid control theory provides the mathematical tools needed to decide when and how a robot should switch between these modes without losing stability or precision.

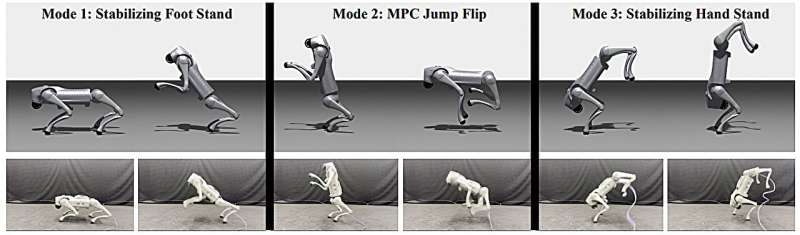

In this work, AI methods are used to learn the most demanding motor skills, particularly those involving whole-body coordination. These include balancing, flipping, and dynamic transitions that require precise timing and control. Once these skills are learned, hybrid control theory is used to schedule and combine them, ensuring smooth transitions between different behaviors. This approach allows robots to build compound motor skills from simpler components without sacrificing performance.

To test their ideas, the researchers conducted experiments using a dog-like quadruped robot, similar in form to commercially available robotic platforms. The robot was trained to perform a sequence of actions that included balancing and executing a controlled flip. The results demonstrated that hybrid control techniques enabled the robot to move seamlessly between different learned behaviors, maintaining stability throughout the process. The system was able to determine when to rely on model-based planning and when to fall back on learned motor policies, depending on the demands of each phase of the task.

A key inspiration behind this approach comes from how humans learn physical skills. When people first learn a new movement, they consciously think about how their body should move, predicting outcomes and adjusting carefully. Over time, repeated practice turns these actions into muscle memory, allowing the movement to happen automatically. The hybrid control framework mirrors this process by allowing robots to shift from deliberate planning to automatic execution as confidence in a skill increases.

The research team emphasizes that this flexibility is especially important for robots operating outside of tightly controlled environments. In real-world settings such as homes, workplaces, or disaster zones, robots cannot rely solely on pre-trained behaviors. They must be able to learn new skills after deployment, adapt to unexpected conditions, and prioritize safety. Hybrid control allows a robot to draw from multiple learning modalities, using planning and reasoning when uncertainty is high and switching to specialized learned skills once conditions are better understood.
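The idea of planning when uncertainty is high and switching to a learned skill once conditions are better understood can be sketched with a simple threshold rule. The example below is a toy illustration and not the researchers' method: the function names, the mode labels `"plan"` and `"learned"`, the scalar uncertainty score, and the two thresholds are all hypothetical. It uses two thresholds (hysteresis) so that the mode does not flip back and forth when the uncertainty hovers near a single cutoff.

```python
def select_mode(uncertainty, current_mode, high=0.6, low=0.3):
    """Pick a control mode from a scalar uncertainty score in [0, 1].

    Hypothetical rule: plan when uncertainty is high, use the learned
    skill when it is low, and otherwise keep the current mode.
    """
    if uncertainty > high:
        return "plan"      # deliberate, model-based planning
    if uncertainty < low:
        return "learned"   # fast, learned motor policy
    return current_mode    # inside the hysteresis band: no switch


def run_schedule(uncertainties, start_mode="plan"):
    """Apply select_mode step by step and return the mode used at each step."""
    modes = []
    mode = start_mode
    for value in uncertainties:
        mode = select_mode(value, mode)
        modes.append(mode)
    return modes
```

For the uncertainty trace `[0.9, 0.5, 0.2, 0.5, 0.7]`, `run_schedule` returns `["plan", "plan", "learned", "learned", "plan"]`: the value 0.5 keeps whichever mode was already active.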

The underlying technical contribution of this work is described in a paper titled "Sample-Based Hybrid Mode Control: Asymptotically Optimal Switching of Algorithmic and Non-Differentiable Control Modes," authored by Yilang Liu and colleagues. The paper was published as a preprint on the arXiv server in 2025. It introduces a framework for optimizing how and when a robot switches between control modes, even when those modes are non-differentiable and difficult to integrate into standard learning pipelines.

The method relies on sample-based optimization, which evaluates different switching strategies to identify those that approach optimal performance over time. By framing mode selection as an optimization problem, the researchers provide strong theoretical guarantees about the quality of the resulting behavior. Importantly, the approach is designed to work with real robots, not just simulations, and accounts for the practical constraints of physical systems.
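To make the idea of sampling switching strategies concrete, here is a minimal sketch using plain random search on a toy problem. It is not the algorithm from the paper. Everything in it is an assumption made for illustration: the 1D point mass, the two stand-in control modes, the cost function, and all parameter values. Note that the search only needs to evaluate each mode by rolling it out, so the modes do not have to be differentiable.

```python
import random


def mode_push(x, v):
    """Stand-in for a learned reflex: constant acceleration toward the goal."""
    return 1.0


def mode_settle(x, v):
    """Stand-in for a feedback controller: PD law that settles at x = 1."""
    return 4.0 * (1.0 - x) - 3.0 * v


MODES = [mode_push, mode_settle]


def rollout_cost(mode_sequence, dt=0.05, steps_per_segment=10):
    """Simulate a 1D point mass under a mode schedule and return its cost.

    Each entry of mode_sequence picks the active mode for one time segment.
    The cost penalizes distance from the goal x = 1 and control effort.
    """
    x, v, cost = 0.0, 0.0, 0.0
    for mode_index in mode_sequence:
        controller = MODES[mode_index]
        for _ in range(steps_per_segment):
            u = controller(x, v)
            v += u * dt
            x += v * dt
            cost += ((x - 1.0) ** 2 + 0.01 * u ** 2) * dt
    return cost


def sample_best_schedule(num_samples=200, num_segments=6, seed=0):
    """Sample random mode schedules and keep the one with the lowest cost."""
    rng = random.Random(seed)
    best_sequence, best_cost = None, float("inf")
    for _ in range(num_samples):
        sequence = tuple(rng.randrange(len(MODES)) for _ in range(num_segments))
        cost = rollout_cost(sequence)
        if cost < best_cost:
            best_sequence, best_cost = sequence, cost
    return best_sequence, best_cost
```

Calling `sample_best_schedule()` returns the best schedule found and its cost. With a fixed seed, raising `num_samples` can only keep or lower the best cost, which loosely mirrors the idea of approaching optimal switching as more samples are drawn.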

Beyond the immediate experiments, this research has broader implications for the future of robotics. As robots are increasingly expected to operate alongside humans, they will need to perform long sequences of coordinated actions, rather than isolated tricks. Tasks such as household assistance, warehouse automation, and search-and-rescue operations all require robots to combine perception, planning, and physical execution in real time. The ability to transition smoothly between different control strategies could make robots significantly more reliable and capable in these roles.

Why Hybrid Control Matters for Robotics

Hybrid control theory has been studied for decades, particularly in fields like aerospace and automotive engineering. What makes this research noteworthy is its integration with modern AI learning techniques. Traditionally, control theory prioritizes guarantees like stability and optimality, while machine learning focuses on adaptability and data-driven performance. By combining these perspectives, the Yale team demonstrates a practical way to pair the guarantees of control theory with the adaptability of learned behaviors.

The Role of AI in Learning Motor Skills

AI methods, especially reinforcement learning, are well suited for discovering complex motor behaviors through trial and error. However, they often lack guarantees about safety and consistency. Hybrid control provides a structured framework that ensures learned behaviors are deployed only when appropriate, reducing the risk of failure during execution.

Looking Ahead

This work points toward a future where robots can build skill libraries, refine them over time, and combine them intelligently to perform new tasks. Rather than retraining entire models for every new behavior, robots could reuse and recombine existing skills, much like humans do. This approach could dramatically reduce training time and improve adaptability across a wide range of applications.

As robotics continues to move out of the lab and into everyday environments, research like this highlights the importance of combining theoretical rigor with practical learning techniques. By bridging AI and control theory, the Yale team offers a promising path toward robots that are not only agile, but also dependable and versatile.

Research paper:

https://arxiv.org/abs/2510.19074