Open-Source Framework byLLM Lets Developers Add AI to Software Without Prompt Engineering

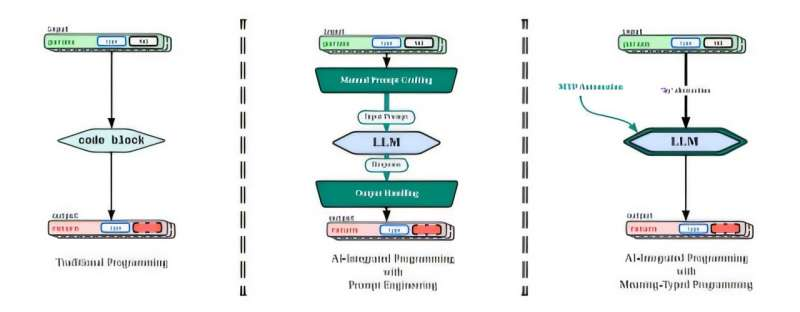

Developers working with large language models have spent the last few years juggling two very different worlds. On one side is traditional programming, where logic is explicit, types are well-defined, and functions behave predictably. On the other side are large language models, which operate on natural language, probabilistic reasoning, and loosely structured text. Bridging these two worlds has usually required prompt engineering, a manual and often frustrating process of carefully crafting text prompts to get usable outputs from AI models.

A new open-source framework called byLLM aims to change that. It lets developers integrate AI directly into software with a single line of code, automating prompt generation instead of leaving it to the programmer and, according to its authors, substantially reducing development effort. The work was presented at the SPLASH conference in Singapore in October 2025 and published in the Proceedings of the ACM on Programming Languages.

At its core, byLLM is about making AI feel like a native part of programming rather than an external system that developers have to constantly negotiate with.

Why Prompt Engineering Has Been a Problem

Traditional software operates on variables, functions, and types that are clearly defined. Large language models, by contrast, take natural language text as input and generate probabilistic outputs. To connect the two, developers have had to manually translate program state into text prompts and then interpret the model’s response back into structured data.
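To make this translation layer concrete, here is a hypothetical example of the hand-written glue code that byLLM aims to eliminate. Program state is formatted into a prompt string, and the model's free-text reply is parsed back into a typed value. The `fake_model` function, the `Sentiment` type, and the JSON reply format are all assumptions for illustration; a real application would call an actual LLM client.

```python
import json
from dataclasses import dataclass


@dataclass
class Sentiment:
    label: str      # e.g. "positive" or "negative"
    score: float    # confidence between 0.0 and 1.0


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; always returns a canned reply."""
    return 'Sure! Here is the result: {"label": "positive", "score": 0.92}'


def classify_review(review: str) -> Sentiment:
    # Step 1: manually translate program state into natural-language text.
    prompt = (
        "You are a sentiment classifier.\n"
        f"Review: {review!r}\n"
        'Reply with JSON of the form {"label": "positive"|"negative", "score": <0..1>}.'
    )
    reply = fake_model(prompt)
    # Step 2: manually translate the model's free text back into structured data.
    # Models often wrap JSON in chatty text, so slice out the outermost {...} span.
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end == -1:
        raise ValueError(f"unparseable reply: {reply!r}")
    data = json.loads(reply[start : end + 1])
    return Sentiment(label=str(data["label"]), score=float(data["score"]))


print(classify_review("The battery lasts all day."))
```

The prompt wording, the expected JSON shape, and the parsing heuristic all have to be maintained by hand, and any of them can break when the wording or the model's behavior changes.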

This translation layer, known as prompt engineering, is not only time-consuming but also fragile. Small changes in wording can lead to unexpected results, making applications harder to maintain and scale. As AI models evolve, developers often need to revisit and tweak prompts repeatedly, adding ongoing maintenance overhead.

The team behind byLLM observed that developers were repeatedly spending time on the same problem: working out how to phrase requests to AI models so that the answers match their program's intent.

What byLLM Does Differently

byLLM introduces a new language construct and runtime system that automates prompt generation based on the structure and meaning of the program itself. Instead of writing prompts by hand, developers write normal-looking code and let the system infer what the AI should do.

The key innovation is the “by” operator, which acts as a bridge between conventional programming logic and large language model execution. When a function or operation is marked with this operator, the system understands that the result should be produced by an LLM rather than traditional code.
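Python has no `by` keyword, so the following is only a rough approximation of the idea using a decorator, not byLLM's actual API. The function body is left empty, and calls are routed to a model instead of executing code. The names `by` and `canned_model` are hypothetical, and the model is a stub returning a fixed answer.

```python
import functools
from typing import Callable


def by(model: Callable[[str], str]):
    """Hypothetical decorator: mark a function as implemented by a model, not by its body."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            # fn's body is never executed; the model's reply becomes the result.
            prompt = f"{fn.__name__}{args!r} {kwargs!r}: {fn.__doc__}"
            return model(prompt)
        return wrapper
    return decorator


def canned_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM; returns a fixed translation."""
    return "Bonjour le monde"


@by(canned_model)
def translate_to_french(text: str) -> str:
    """Translate English text into French."""
    ...


print(translate_to_french("Hello world"))  # → Bonjour le monde
```

The hard-coded prompt template in this toy version is exactly the part byLLM automates: its compiler and runtime derive the prompt from the program's semantics instead.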

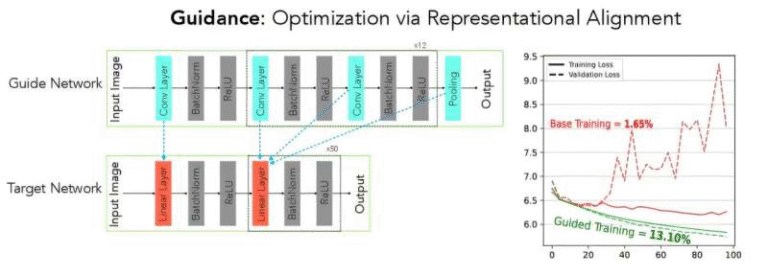

Behind the scenes, a compiler built on a meaning-typed intermediate representation analyzes the program. It gathers semantic information such as variable types, function signatures, and programmer intent. This information is then passed to a runtime engine that automatically generates focused, context-aware prompts for the language model.
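byLLM performs this analysis in its compiler, over a meaning-typed intermediate representation. Python can only approximate the idea at runtime. The sketch below uses the standard `inspect` and `typing` modules to collect similar information (function name, docstring, parameter types, return type) and assemble it into a prompt. The `build_prompt` helper and its prompt layout are hypothetical illustrations, not byLLM's actual prompt format.

```python
import inspect
from dataclasses import dataclass, fields, is_dataclass
from typing import get_type_hints


@dataclass
class Person:
    name: str
    birth_year: int


def describe_type(tp) -> str:
    """Render a type; dataclasses are expanded into their field names and types."""
    if is_dataclass(tp):
        hints = get_type_hints(tp)
        inner = ", ".join(f"{f.name}: {hints[f.name].__name__}" for f in fields(tp))
        return f"{tp.__name__}({inner})"
    return getattr(tp, "__name__", repr(tp))


def build_prompt(fn, *args) -> str:
    """Hypothetical prompt builder using the function's name, docstring, and type hints."""
    hints = get_type_hints(fn)
    bound = inspect.signature(fn).bind(*args)
    lines = [f"Task: {fn.__name__}"]
    if fn.__doc__:
        lines.append(f"Purpose: {inspect.cleandoc(fn.__doc__)}")
    for name, value in bound.arguments.items():
        lines.append(f"Input {name} ({describe_type(hints[name])}): {value!r}")
    lines.append(f"Return a value of type {describe_type(hints['return'])}.")
    return "\n".join(lines)


def age_in(person: Person, year: int) -> int:
    """Compute how old the person is in the given year."""
    ...


print(build_prompt(age_in, Person("Ada", 1815), 1852))
```

Because every line of the prompt comes from the code itself, renaming a parameter or changing a type automatically changes what the model is told, with no hand-written prompt to keep in sync.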

The result is that developers can integrate AI behavior without ever writing a single prompt manually.

Meaning-Typed Programming and the MTP Paradigm

The framework is based on a new concept called Meaning-Typed Programming (MTP). Unlike traditional type systems that focus only on data shape, MTP captures the semantic meaning of code elements. This allows the system to understand not just what data is being passed around, but what that data represents and how it should be used.
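To illustrate the distinction between shape and meaning: both parameters below have the same shape (`float`) but very different meanings. The sketch uses Python's `typing.Annotated` to attach a meaning string to each type, as a loose analogue of what MTP captures. It is an assumption for illustration and does not reflect how byLLM or its host language actually expresses meaning.

```python
from typing import Annotated, get_args, get_origin, get_type_hints

# Same data shape (float), different semantic meaning.
Celsius = Annotated[float, "temperature in degrees Celsius"]
Probability = Annotated[float, "probability between 0 and 1"]


def meaning_of(tp) -> str:
    """Return 'shape: meaning' for Annotated types, or just the shape otherwise."""
    if get_origin(tp) is Annotated:
        base, *notes = get_args(tp)
        return f"{base.__name__}: {'; '.join(str(n) for n in notes)}"
    return tp.__name__


def will_it_freeze(temp: Celsius, rain_chance: Probability) -> bool:
    """Decide whether roads are likely to freeze."""
    ...


# include_extras=True keeps the Annotated metadata instead of stripping it.
hints = get_type_hints(will_it_freeze, include_extras=True)
for name, tp in hints.items():
    print(f"{name} -> {meaning_of(tp)}")
```

A plain type checker sees two interchangeable floats here; a meaning-aware system can tell a model that one is a temperature and the other a probability.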

In practice, this means the compiler can infer developer intent directly from code structure. The runtime then translates that intent into precise instructions for the language model. This approach reduces ambiguity and removes much of the trial and error that characterizes prompt engineering.

The researchers describe this as a third paradigm of programming, sitting alongside traditional deterministic programming and prompt-based AI integration. It allows AI to be treated as a first-class citizen within software systems.

Performance and Developer Productivity Results

The byLLM team evaluated their approach against existing prompt engineering frameworks such as DSPy, and byLLM came out ahead on several measures.

Applications built using byLLM achieved higher accuracy, better runtime performance, and greater robustness. Because prompts are automatically generated and tightly scoped to program semantics, the system avoids many of the inconsistencies seen in manually written prompts.

A user study further highlighted the productivity gains. Developers using byLLM completed tasks more than three times faster than those relying on traditional prompt engineering. They also wrote 45% fewer lines of code, indicating a substantial reduction in boilerplate and glue logic.

These results suggest that byLLM does not just simplify AI integration but also makes it more reliable and maintainable over time.

Open Source and Rapid Adoption

The team released byLLM as an open-source project, making it accessible to developers, researchers, and companies of all sizes. Within just one month of release, the framework saw over 14,000 downloads, signaling strong interest from the developer community.

There has also been notable interest from industry partners across sectors such as finance, customer support, health care, and education. These are areas where AI integration can provide immediate value but where prompt engineering complexity has often slowed adoption.

The open-source nature of byLLM lowers the barrier to entry, allowing smaller teams and even non-expert programmers to experiment with advanced AI-powered features.

How Developers Actually Use byLLM

From a developer’s perspective, byLLM is designed to feel natural. AI-powered functionality can often be added with just a single line of code. The framework works by associating functions or expressions with a language model, letting the runtime handle prompt creation and response parsing.
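On the response side, a runtime has to turn the model's text back into the type the function promises. The following is a minimal sketch, assuming the model has been asked to answer in JSON: `coerce` walks the declared return type and converts each field, rejecting values that do not fit. The `Invoice` type and the JSON-reply convention are assumptions for illustration; this is not a description of byLLM's actual parsing strategy.

```python
import json
from dataclasses import dataclass, fields, is_dataclass
from typing import Any, get_type_hints


@dataclass
class Invoice:
    vendor: str
    total: float
    paid: bool


def coerce(tp: type, value: Any) -> Any:
    """Convert a decoded JSON value into tp, recursing into dataclasses."""
    if is_dataclass(tp):
        hints = get_type_hints(tp)
        return tp(**{f.name: coerce(hints[f.name], value[f.name]) for f in fields(tp)})
    if tp is bool:
        # bool("no") is True in Python, so never coerce strings to bool silently.
        if not isinstance(value, bool):
            raise TypeError(f"expected bool, got {value!r}")
        return value
    return tp(value)  # e.g. float("129.50") -> 129.5


def parse_reply(tp: type, reply: str) -> Any:
    """Parse a model's JSON reply into the function's declared return type."""
    return coerce(tp, json.loads(reply))


reply = '{"vendor": "Acme Corp", "total": "129.50", "paid": false}'
print(parse_reply(Invoice, reply))  # → Invoice(vendor='Acme Corp', total=129.5, paid=False)
```

Driving the parser from the declared type, rather than from ad hoc string handling, means a malformed reply fails loudly instead of leaking a wrong value into the program.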

The system supports integration with multiple large language models, making it flexible for different deployment needs. Developers can focus on defining what their program should do, rather than how to phrase instructions for an AI.
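One common way to keep program logic independent of the model provider is to code against a small interface. The sketch below uses `typing.Protocol`; the `LanguageModel` interface and the two stub backends are hypothetical, and real backends would wrap a provider's client library instead. It does not depict how byLLM itself configures or selects models.

```python
from typing import Protocol


class LanguageModel(Protocol):
    """Anything that can turn a prompt into a completion."""

    def complete(self, prompt: str) -> str: ...


class UppercaseStub:
    """Hypothetical offline backend, handy for tests."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


class ReverseStub:
    """A second hypothetical backend with different behavior."""

    def complete(self, prompt: str) -> str:
        return prompt[::-1]


def summarize(text: str, model: LanguageModel) -> str:
    # The program logic stays the same; only the injected backend changes.
    return model.complete(f"Summarize: {text}")


for backend in (UppercaseStub(), ReverseStub()):
    print(type(backend).__name__, "->", summarize("abc", backend))
```

Because `Protocol` uses structural typing, any class with a matching `complete` method qualifies, so swapping providers requires no change to `summarize`.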

This abstraction shifts attention back to software design and problem-solving, instead of prompt tweaking and debugging.

Broader Implications for AI-Integrated Software

byLLM reflects a broader trend in AI tooling: moving away from ad hoc solutions toward structured, principled integration. As AI systems become more capable, the challenge is no longer just model performance but how seamlessly those models fit into real-world software.

Automating prompt engineering helps address one of the biggest friction points in AI adoption. However, it does not eliminate all challenges. Developers still need to consider issues such as model reliability, latency, cost, and responsible usage. byLLM makes AI easier to use, but it does not remove the need for thoughtful system design.

That said, frameworks like byLLM point toward a future where AI capabilities are embedded directly into programming languages and runtimes, rather than bolted on as external services.

Why This Matters for the Future of Programming

The introduction of meaning-typed programming suggests a shift in how developers think about computation. Instead of explicitly specifying every step, programmers can increasingly describe intent, allowing AI systems to fill in the details.

This approach could lead to a new generation of applications that are more adaptive, personalized, and easier to build. Educational tools, research software, and interactive systems are especially well-positioned to benefit from this model.

By reducing the cognitive load associated with AI integration, byLLM helps make advanced language models accessible to a wider audience, potentially accelerating innovation across many domains.

Research Reference

MTP: A Meaning-Typed Language Abstraction for AI-Integrated Programming

https://arxiv.org/abs/2405.08965