University of Utah Engineers Use AI to Make Robotic Prosthetic Hands More Natural and Intuitive

Engineers at the University of Utah have taken a major step toward making robotic prosthetic hands feel less like machines and more like natural extensions of the human body. By combining artificial intelligence, advanced sensors, and a carefully balanced system of shared control between humans and machines, the research team has shown that prosthetic users can achieve better dexterity, stronger grip security, and lower mental effort while performing everyday tasks.

The work focuses on a long-standing challenge in prosthetics: while modern bionic hands look impressive and offer multiple grip modes, they often demand constant conscious control from the user. Simple actions that most people perform without thinking—like picking up a cup, holding a pencil, or grasping a fragile object—can become mentally exhausting for amputees using traditional prosthetic devices. The Utah team’s approach aims to change that by giving the prosthetic hand a form of intelligent autonomy, allowing it to assist the user in subtle but meaningful ways.

Why Controlling Prosthetic Hands Is Still So Hard

For people with intact limbs, grasping objects relies on a combination of touch, anticipation, and subconscious motor control. The brain constantly predicts how objects will feel and adjusts finger positions and grip force automatically. Most commercial prosthetic hands, however, lack true sensory input and predictive control. Users often rely on visual feedback and deliberate muscle signals to open and close the prosthetic fingers, which increases cognitive burden.

This difficulty helps explain why nearly half of prosthetic users eventually abandon their devices, often citing poor control and mental fatigue. The Utah researchers recognized that solving this problem would require more than just better motors or stronger grips—it would require intelligence built directly into the hand itself.

A Smarter Prosthetic Hand with “Senses”

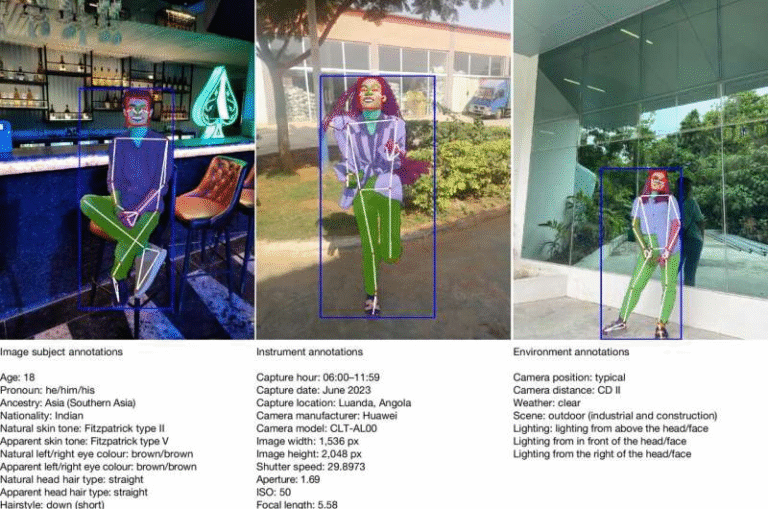

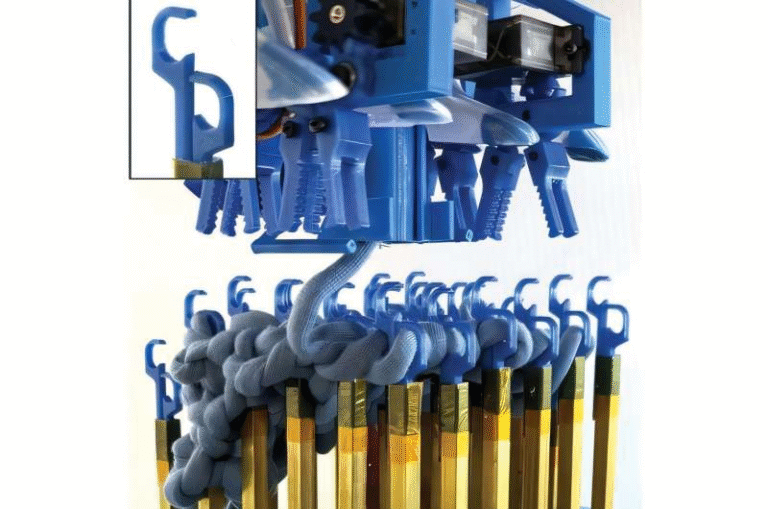

To tackle this issue, the researchers modified a commercial bionic hand manufactured by TASKA Prosthetics. They replaced the standard fingertips with custom-designed ones that include two key types of sensors:

- Pressure sensors, which detect how much force is being applied during contact

- Optical proximity sensors, which can detect objects before the fingers actually touch them

These proximity sensors are remarkably sensitive. In testing, the fingertips could detect something as light as a cotton ball falling onto them. This capability gives the prosthetic hand a form of artificial “awareness,” allowing it to sense an object’s position and distance in real time.

Each finger is equipped with its own sensors, so the digits can respond independently and in parallel, much like those of a biological hand.

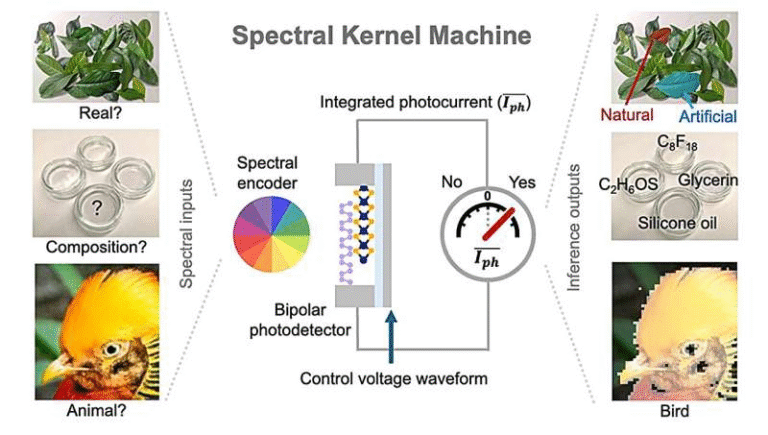

Training AI to Understand How Humans Grasp Objects

The sensor data alone is not enough. To make sense of this information, the team trained an artificial neural network using proximity data associated with different grasping postures. The goal was to teach the prosthetic fingers how far to move and how to position themselves to form a stable, secure grip.

Instead of waiting for the user to manually close each finger, the AI predicts the optimal finger placement based on how close the object is and how the hand is oriented. This allows the prosthetic hand to naturally move into the right grasping position, reducing the need for constant user input.

In effect, the AI handles the fine adjustments that human hands usually perform automatically.
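The study trained a neural network to map fingertip proximity readings to grasp postures. As a much simpler stand-in, the Python sketch below fits one ordinary least-squares line per finger, mapping that finger's proximity distance to a target closure value. It only illustrates the idea of learning such a mapping from demonstration data. The function names, the one-reading-per-finger setup, and the toy numbers are hypothetical and are not taken from the paper.

```python
# Hypothetical stand-in for the study's neural network: one ordinary
# least-squares line per finger, mapping proximity distance (mm) to a
# target closure value (0.0 = fully open, 1.0 = fully closed).


def fit_line(xs, ys):
    """Fit y = a * x + b by ordinary least squares; return (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        # All distances identical: the best constant predictor is the mean.
        return 0.0, mean_y
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, mean_y - a * mean_x


def train(samples):
    """samples: list of (distances, closures), one value per finger in each."""
    n_fingers = len(samples[0][0])
    models = []
    for f in range(n_fingers):
        xs = [distances[f] for distances, _ in samples]
        ys = [closures[f] for _, closures in samples]
        models.append(fit_line(xs, ys))
    return models


def predict(models, distances):
    """Predict each finger's target closure, clamped to the 0..1 range."""
    return [min(1.0, max(0.0, a * d + b)) for (a, b), d in zip(models, distances)]


# Made-up demonstration data: two fingers that close further as the
# object gets nearer, recorded over four approaches.
samples = [
    ([0.0, 0.0], [1.0, 0.9]),
    ([10.0, 10.0], [0.75, 0.7]),
    ([20.0, 20.0], [0.5, 0.5]),
    ([30.0, 30.0], [0.25, 0.3]),
]
models = train(samples)
print(predict(models, [15.0, 15.0]))
```

A per-finger line cannot capture interactions between fingers or nonlinear sensor responses; a neural network can, given enough training data.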

Shared Control Between Human and Machine

One of the most important aspects of this research is the idea of shared human-machine control. The researchers were careful to avoid giving full control to the AI, which could lead to frustration if the prosthesis acted against the user’s intentions.

Instead, the system is designed so that:

- The user remains in control of intent, such as when to grasp, release, or reposition the hand

- The AI assists with precision, improving finger placement and grip stability

This balance ensures that users are not “fighting” the machine. Rather, the AI augments the user’s natural control, making movements smoother and less mentally demanding.

For example, if a user wants to pick up a cup, the AI helps ensure the fingers close at the correct distance and apply the right amount of force. If the user wants to let go, the system responds immediately to that intention.
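The division of labor described above can be sketched as a single control tick: the decoded user intent decides whether the hand opens, holds, or closes, and only during a grasp do per-finger AI targets decide how far each finger goes. This is an illustrative assumption about how such arbitration might look, not the controller from the paper. The `Intent` values, `shared_control_step`, and the `max_step` speed limit are hypothetical, and decoding intent from muscle signals is not shown.

```python
from enum import Enum


class Intent(Enum):
    """User intent, assumed to be decoded from muscle signals elsewhere."""
    OPEN = "open"
    HOLD = "hold"
    GRASP = "grasp"


def shared_control_step(intent, positions, ai_targets, max_step=0.1):
    """Advance every finger by one control tick under shared control.

    The user's intent decides whether the hand moves; the AI targets decide
    where each finger goes during a grasp. Positions and targets are closure
    values from 0.0 (fully open) to 1.0 (fully closed). `max_step` is a
    hypothetical per-tick speed limit.
    """
    if intent is Intent.OPEN:
        # Release bypasses the AI entirely so it takes effect immediately.
        return [0.0] * len(positions)
    if intent is Intent.HOLD:
        # Keep the current posture; the AI does not move fingers on its own.
        return list(positions)
    # GRASP: each finger moves independently toward its own predicted target.
    new_positions = []
    for pos, target in zip(positions, ai_targets):
        delta = max(-max_step, min(max_step, target - pos))
        new_positions.append(pos + delta)
    return new_positions


# Hypothetical tick: three fingers start open, AI targets differ per finger.
fingers = [0.0, 0.0, 0.0]
targets = [0.3, 0.05, 0.0]
fingers = shared_control_step(Intent.GRASP, fingers, targets)
print(fingers)  # [0.1, 0.05, 0.0]
```

Because an `OPEN` intent returns without consulting the AI targets, a release request is never delayed or overridden, which mirrors the point that the user stays in charge of intent.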

Testing with Real Prosthetic Users

The research team tested the system with four transradial amputees, meaning participants had amputations between the elbow and the wrist. These individuals used the AI-assisted prosthetic hand to complete both standardized performance tests and real-world tasks.

The tasks included:

- Picking up small and delicate objects

- Lifting and holding a plastic cup

- Performing fine motor actions that require precise grip control

These tasks are particularly challenging for amputees because applying too little force can cause objects to slip, while applying too much force can crush or break them.
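The slip-versus-crush trade-off can be made concrete with a standard Coulomb friction model, which the article does not describe and which is used here only for illustration. In a two-finger pinch, each contact can supply at most μN of friction, so holding an object of mass m requires 2μN ≥ mg, that is N ≥ mg / (2μ). The upper bound is an object-specific crush limit. The sketch below turns this into an allowed force window and clamps a requested grip force into it; the safety factor, friction coefficient, and crush limit are hypothetical parameters.

```python
# Illustrative only: textbook Coulomb friction, not the study's force controller.
G = 9.81  # gravitational acceleration in m/s^2


def grip_force_window(mass_kg, mu, crush_limit_n, safety=1.5):
    """Return the (min, max) normal force in newtons for a two-finger pinch.

    Two contacts each supply at most mu * N of friction, so holding the
    object needs 2 * mu * N >= m * g, i.e. N >= m * g / (2 * mu).
    `safety` (hypothetical) adds margin against slip; `crush_limit_n`
    (hypothetical) is the largest force the object tolerates.
    """
    min_force = safety * mass_kg * G / (2.0 * mu)
    if min_force > crush_limit_n:
        raise ValueError("no grip force both prevents slip and avoids crushing")
    return min_force, crush_limit_n


def command_force(requested_n, window):
    """Clamp a requested grip force into the allowed window."""
    low, high = window
    return max(low, min(high, requested_n))


# Hypothetical example: a 20 g plastic cup, mu = 0.5, crush limit 2 N.
window = grip_force_window(0.02, 0.5, 2.0)
print(command_force(0.1, window), command_force(5.0, window))
```

A too-gentle request is raised to the slip threshold and a too-hard one is capped at the crush limit; if the two bounds cross, no safe grip exists and the function raises instead of guessing.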

When using the AI-assisted system, participants demonstrated:

- Greater grip security

- Improved grip precision

- Reduced cognitive effort during tasks

Importantly, participants were able to perform these actions without extensive training or long practice sessions, suggesting that the system is intuitive and adaptable.

Why Reduced Cognitive Burden Matters

One of the most significant findings of the study is the reduction in mental workload. Traditional prosthetic control often forces users to focus intensely on every movement, which can be exhausting over time.

By offloading part of the grasping process to the prosthesis itself, the AI allows users to focus on the task rather than the mechanics of the hand. This shift could play a major role in reducing prosthesis abandonment rates and improving overall quality of life for amputees.

The Bigger Picture: Smarter Prosthetics and the Brain

This work is part of the Utah NeuroRobotics Lab’s broader vision to create prosthetic devices that integrate seamlessly with the human nervous system. Beyond external sensors and AI, the team is also exploring implanted neural interfaces.

These interfaces could allow users to:

- Control prosthetic limbs directly with their thoughts

- Potentially receive sensations of touch transmitted back from the prosthesis

The long-term goal is to blend intelligent sensors, AI-driven control, and neural interfaces into a single system that feels truly natural.

How This Research Fits into the Future of Prosthetics

AI-powered prosthetics are becoming an increasingly important area of research. Across the field, scientists are exploring ways to:

- Improve sensory feedback

- Reduce training time for users

- Personalize prosthetic behavior based on individual habits

The University of Utah’s approach stands out because it emphasizes collaboration between human and machine, rather than automation for its own sake. The prosthetic hand does not replace the user’s decision-making—it supports it.

What Comes Next

The researchers plan to further refine the system by:

- Enhancing tactile sensing capabilities

- Improving AI models to adapt over time

- Integrating thought-based neural control with intelligent grasping

If successful, these advances could lead to prosthetic hands that are not only more functional but also more comfortable, intuitive, and emotionally acceptable for users.

Research Reference:

https://www.nature.com/articles/s41467-025-65965-9