Penn State Researchers Build an AI Vision System That Helps Robots Identify and Track Weeds in Apple Orchards

Weed control has always been one of the least glamorous but most critical parts of running an apple orchard. Weeds compete directly with apple trees for nutrients, water, and sunlight, and if they are not managed properly, fruit yields can suffer significantly. Traditionally, growers have relied on either manual removal or chemical herbicides, both of which come with serious drawbacks. Now, a research team at Penn State University has developed an AI-powered machine vision system designed to help agricultural robots detect, track, and measure weeds accurately in apple orchards.

This system represents an important early step toward automated robotic precision weed management, where herbicides are applied only where they are needed, instead of being sprayed broadly across an orchard.

Why Weed Control in Apple Orchards Is So Challenging

Apple orchards present a uniquely complex environment. Unlike open crop fields, orchards have tree trunks, low-hanging branches, and dense canopies that block visibility. Weeds often grow close to tree bases, partially hidden by shadows, trunks, or other plants.

Manual weed removal is not just labor-intensive; it can also damage soil structure and tree roots, creating long-term problems for orchard health. Chemical spraying, while faster, can lead to environmental pollution, herbicide resistance, and chemical residues on apples, which are growing concerns for both growers and consumers.

This is where precision weed management comes in. The idea is simple but powerful: accurately detect weeds, measure how many there are, and apply small, targeted amounts of herbicide only where necessary. Doing this effectively, however, requires extremely reliable weed detection technology.

Moving Beyond Top-Down Cameras

Most agricultural imaging systems rely on top-view imaging, captured from drones or other overhead sensors. In orchards, this approach does not work well. The tree canopy blocks the camera’s view, making it nearly impossible to see weeds clearly from above.

The Penn State team addressed this by using side-view cameras, positioned to look across the orchard floor rather than down onto it. This setup makes it easier to see weeds near tree trunks, but it introduces new challenges. Weeds may be partially visible, occluded by trees, or hidden behind other weeds, increasing the chances of misidentification or losing track of a weed as the robot moves.

Solving these problems required a more sophisticated approach to machine vision and deep learning.

Improving AI Models for Weed Detection

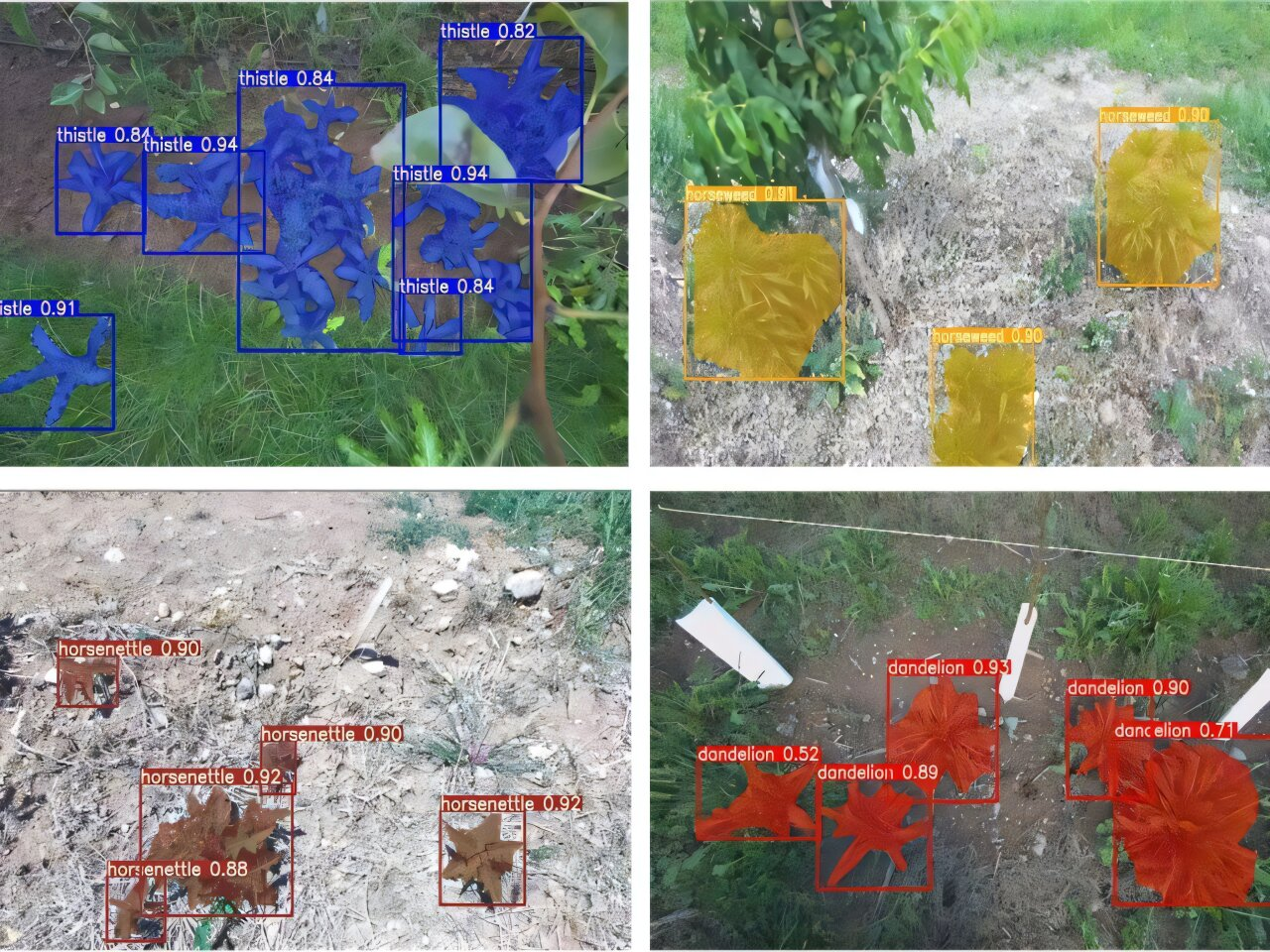

The research team, led by Long He, an associate professor of agricultural and biological engineering, focused on improving a commercially available deep-learning model used for object detection and segmentation. The model they worked with is capable of identifying objects in real time and outlining them pixel by pixel, which is crucial for precise herbicide application.

To improve performance in orchard conditions, the researchers enhanced the model by adding a Convolutional Block Attention Module, often referred to as CBAM. This module helps the AI focus on the most important visual features in an image while suppressing irrelevant background information. As a result, the system performs better when weeds are partially hidden or difficult to distinguish from their surroundings.

In addition to detection, the team integrated a tracking algorithm known as DeepSORT, along with a filtering mechanism. This allows the system to maintain the identity of each weed across multiple video frames. In practical terms, this means the AI does not count the same weed multiple times and can continue tracking weeds even if they briefly disappear behind tree trunks or other objects.
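The idea of keeping one identity per weed across frames can be illustrated with a much simpler tracker than the one used in the study. The sketch below is not DeepSORT, which additionally relies on Kalman-filter motion prediction and learned appearance features; it only matches bounding boxes between frames by overlap (IoU) and keeps a track alive for a few missed frames so a briefly hidden weed is not counted twice. The class name `SimpleWeedTracker`, the thresholds, and the box coordinates are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    track_id: int
    box: Box
    missed: int = 0  # consecutive frames without a matching detection


class SimpleWeedTracker:
    """Greedy IoU tracker (illustrative stand-in, not DeepSORT)."""

    def __init__(self, iou_threshold: float = 0.3, max_missed: int = 5):
        self.iou_threshold = iou_threshold  # assumed value
        self.max_missed = max_missed        # assumed value
        self.tracks: list[Track] = []
        self.next_id = 1

    def update(self, detections: list[Box]) -> list[int]:
        """Assign a track id to each detection in one frame."""
        ids = [0] * len(detections)
        # All track/detection pairs, best overlap first.
        pairs = sorted(
            ((iou(t.box, d), ti, di)
             for ti, t in enumerate(self.tracks)
             for di, d in enumerate(detections)),
            reverse=True,
        )
        matched_tracks, matched_dets = set(), set()
        for score, ti, di in pairs:
            if score < self.iou_threshold:
                break
            if ti in matched_tracks or di in matched_dets:
                continue
            matched_tracks.add(ti)
            matched_dets.add(di)
            self.tracks[ti].box = detections[di]
            self.tracks[ti].missed = 0
            ids[di] = self.tracks[ti].track_id
        # Tracks with no detection this frame may be occluded; age them.
        for ti, track in enumerate(self.tracks):
            if ti not in matched_tracks:
                track.missed += 1
        # Unmatched detections start new tracks, i.e. newly seen weeds.
        for di, det in enumerate(detections):
            if di not in matched_dets:
                self.tracks.append(Track(self.next_id, det))
                ids[di] = self.next_id
                self.next_id += 1
        # Drop tracks that have been missing for too long.
        self.tracks = [t for t in self.tracks if t.missed <= self.max_missed]
        return ids

    @property
    def total_count(self) -> int:
        """Number of distinct weeds seen so far."""
        return self.next_id - 1


tracker = SimpleWeedTracker()
frames = [
    [(10, 10, 50, 50)],                       # weed appears
    [(12, 10, 52, 50)],                       # same weed, robot moved slightly
    [],                                       # weed hidden behind a trunk
    [(14, 10, 54, 50), (200, 10, 240, 50)],   # weed reappears, second weed enters
]
frame_ids = [tracker.update(f) for f in frames]
print(frame_ids, tracker.total_count)
```

In this toy sequence the first weed keeps its identity through the empty frame, so the running count increases only when a genuinely new weed enters the view.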

Training and Testing the System in Real Orchards

To build and evaluate the system, the researchers collected data at Penn State’s Fruit Research and Extension Center in Biglerville, Pennsylvania, as well as nearby commercial apple orchards. They focused on several common weed species found in these environments, including dandelion, common sow thistle, horseweed, and Carolina horsenettle.

High-resolution images of these weeds were used to create a training and testing dataset for the AI model. By working with real orchard conditions instead of controlled lab environments, the researchers aimed to ensure that the system could handle the complexity and unpredictability of real-world agriculture.

Accuracy and Performance Results

The results reported in the journal Computers and Electronics in Agriculture show that the system performs at a high level across several key metrics.

For weed detection and segmentation, the model achieved 84.9% average precision, meaning it could accurately find weeds and outline their shapes. For localization, which focuses on identifying the exact position of weeds, the system reached 83.6% average precision.

Tracking performance was also strong. The model achieved 82% multiple object tracking accuracy, indicating reliable tracking of multiple weeds at the same time. It recorded 78% multiple object tracking precision, showing good accuracy in estimating weed positions, and an 88% identification score, demonstrating a strong ability to maintain weed identities across video frames. Notably, the system recorded only six identity switches, meaning it rarely confused one weed for another while tracking them.
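For readers unfamiliar with the metric, multiple object tracking accuracy (MOTA) is commonly defined as one minus the ratio of all tracking errors (missed objects, false detections, and identity switches) to the total number of ground-truth objects across all frames. The short sketch below applies that standard definition; the counts passed in are hypothetical and are not taken from the study, which reports only the final percentages and the number of identity switches.

```python
def mota(false_negatives: int, false_positives: int,
         id_switches: int, ground_truth_objects: int) -> float:
    """Standard MOTA: 1 - (FN + FP + IDSW) / GT, summed over all frames."""
    if ground_truth_objects <= 0:
        raise ValueError("ground_truth_objects must be positive")
    errors = false_negatives + false_positives + id_switches
    return 1.0 - errors / ground_truth_objects


# Hypothetical counts, for illustration only (not the paper's data).
example_score = mota(false_negatives=40, false_positives=30,
                     id_switches=5, ground_truth_objects=500)
print(f"MOTA = {example_score:.2%}")
```

Because every identity switch counts as an error in the numerator, a tracker that rarely swaps identities loses very little accuracy to that term.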

The researchers also added a density estimation component, which calculates how much of a given area is covered by weeds. This feature provides growers with actionable data that can guide site-specific weed management decisions.
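One simple way to express weed density is the fraction of image pixels covered by any weed mask. The sketch below assumes exactly that definition; the article does not spell out how the researchers compute density, so the function names and the pixel-ratio approach are assumptions made for illustration. Overlapping masks are merged first so that a pixel covered by two weeds is counted once.

```python
Mask = list[list[int]]  # 1 = weed pixel, 0 = background


def union_mask(masks: list[Mask]) -> Mask:
    """Combine per-weed masks so overlapping pixels are counted once."""
    if not masks:
        raise ValueError("at least one mask is required")
    rows, cols = len(masks[0]), len(masks[0][0])
    return [
        [1 if any(m[r][c] for m in masks) else 0 for c in range(cols)]
        for r in range(rows)
    ]


def weed_density(masks: list[Mask]) -> float:
    """Fraction of the image area covered by weeds (assumed definition)."""
    combined = union_mask(masks)
    total_pixels = sum(len(row) for row in combined)
    weed_pixels = sum(sum(row) for row in combined)
    return weed_pixels / total_pixels


# Two tiny hypothetical instance masks that overlap in one pixel.
dandelion = [[1, 1, 0, 0],
             [1, 1, 0, 0]]
thistle = [[0, 1, 1, 0],
           [0, 0, 0, 0]]
density = weed_density([dandelion, thistle])
print(f"weed coverage: {density:.1%}")
```

A per-frame coverage value like this could then be compared against a grower-chosen threshold to decide whether a zone warrants spraying.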

Why This Matters for Growers and the Environment

By combining improved detection, robust tracking, and weed density estimation, this AI system lays the groundwork for robotic precision herbicide spraying in orchards. Such systems could dramatically reduce the amount of chemicals used, lower costs for growers, and minimize environmental impact.

Instead of blanket spraying, future robots guided by this technology could apply herbicides only where weeds are present, reducing waste and limiting exposure to non-target plants. This approach could also help slow the development of herbicide-resistant weeds, a growing issue in modern agriculture.

The Bigger Picture of AI in Agriculture

This research fits into a broader trend toward AI-driven precision agriculture, where data and automation are used to improve efficiency, sustainability, and productivity. While much of the early work in this field focused on large row crops, this study shows how advanced AI can be adapted to specialty crops like apples, which have more complex growing environments.

The Penn State team’s work highlights how combining computer vision, deep learning, and robotics can solve problems that once seemed too difficult for automation. Although the system is still under development, it represents a meaningful step toward fully autonomous weed management in orchards.

Research Team and Contributions

The study’s first author is Lawrence Arthur, a doctoral candidate in agricultural and biological engineering, with Long He serving as senior author. Other contributors include Caio Brunharo, assistant professor of weed science; Paul Heinemann, professor of agricultural and biological engineering; Magni Hussain, assistant research professor of electronics, instrumentation, and control systems; and Sadjad Mahnan, a graduate assistant in agricultural and biological engineering.

Their combined expertise spans weed science, robotics, electronics, and AI, reflecting the interdisciplinary nature of modern agricultural research.

Research paper:

https://doi.org/10.1016/j.compag.2025.111071