PaTH Attention Introduces a Smarter Way for Large Language Models to Understand Order, Context, and Changing States

Large language models have become impressively fluent, but beneath that fluency lies a long-standing technical challenge: understanding order, context, and state changes over long sequences of text. Language depends heavily on word position and structure. A small change in order can completely change meaning, and over long documents or complex instructions, meaning does not stay static; it evolves. A new research effort from MIT and the MIT-IBM Watson AI Lab addresses this problem with PaTH Attention, a new form of positional encoding designed to be flexible, adaptive, and content-aware.

At the heart of modern language models are transformers, the architecture behind most state-of-the-art LLMs today. Transformers rely on an attention mechanism that lets the model weigh which tokens in a sequence matter most. Attention is excellent at identifying relevance, but the core attention computation has no notion of order: each token is compared with every other token, and shuffling the inputs simply shuffles the outputs. Without extra information, the model does not inherently know which word came before or after another. This is why positional encoding exists.
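To make the order-blindness concrete, here is a minimal sketch of plain scaled dot-product attention for a single query, in dependency-free Python. The vectors are made-up toy numbers and the function names (`softmax`, `attend`) are illustrative, not taken from any library. Shuffling the tokens, moving each key together with its value, leaves the output unchanged, because the final weighted sum does not depend on the order of its terms.

```python
import math


def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query, with no positional information."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # Weighted sum of value vectors; the order of the terms does not matter.
    return [sum(w * v[c] for w, v in zip(weights, values)) for c in range(dim)]


# Toy key/value vectors for three tokens (illustrative numbers only).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
query = [0.5, -0.5]

original = attend(query, keys, values)

# Shuffle the token order, moving each key together with its value.
order = [2, 0, 1]
shuffled = attend(query, [keys[i] for i in order], [values[i] for i in order])

print(original)
print(shuffled)  # same vector as above, up to floating-point rounding
```

Positional encodings exist precisely to break this symmetry, so that the same token contributes differently depending on where it sits.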

Why Positional Encoding Matters So Much

Human languages are deeply structured. Word order conveys meaning, syntax shifts across paragraphs, and relationships between entities evolve as a text unfolds. The same is true in other domains where transformers are used, such as computer code, logical reasoning, or step-by-step instructions. In these settings, models must track variables, updates, and conditional changes—what researchers often describe as state tracking.

Existing positional encoding techniques attempt to solve this problem, but they come with trade-offs. The most widely used approach today is Rotary Position Embedding (RoPE). RoPE rotates each token's query and key vectors by angles proportional to the token's position, so the attention score between two tokens depends only on their relative distance. While this method is efficient and scalable, it has a key limitation: it is independent of content. Two words that are four positions apart receive the same relative rotation no matter what those words are or what happens between them.

This fixed nature limits a model’s ability to represent how meaning changes over time. It treats position as a static measurement rather than something influenced by context.
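The content-independence of RoPE can be checked directly. The sketch below is a simplification under stated assumptions: real RoPE splits each vector into many two-dimensional pairs, each rotated at its own frequency, whereas this example uses a single 2-D pair and an arbitrary frequency `theta = 0.1`. The helper names `rotate` and `rope_score` are hypothetical.

```python
import math


def rotate(vec, angle):
    """Rotate a 2-D vector by `angle` radians."""
    x, y = vec
    c, s = math.cos(angle), math.sin(angle)
    return [c * x - s * y, s * x + c * y]


def rope_score(q, k, pos_q, pos_k, theta=0.1):
    """Dot product after rotating q and k by angles proportional to their positions.

    theta is an arbitrary illustrative frequency, not a value from any model.
    """
    q_rot = rotate(q, pos_q * theta)
    k_rot = rotate(k, pos_k * theta)
    return q_rot[0] * k_rot[0] + q_rot[1] * k_rot[1]


q = [1.0, 0.5]
k = [0.3, -0.8]

# The same four-position gap at two very different absolute locations.
s1 = rope_score(q, k, pos_q=10, pos_k=6)
s2 = rope_score(q, k, pos_q=104, pos_k=100)
print(s1, s2)  # equal up to floating-point rounding
```

Because rotating both vectors leaves only the difference of their angles in the dot product, the score depends on `pos_q - pos_k` alone. Nothing about the tokens between the two positions ever enters the function, which is exactly the limitation described above.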

What Makes PaTH Attention Different

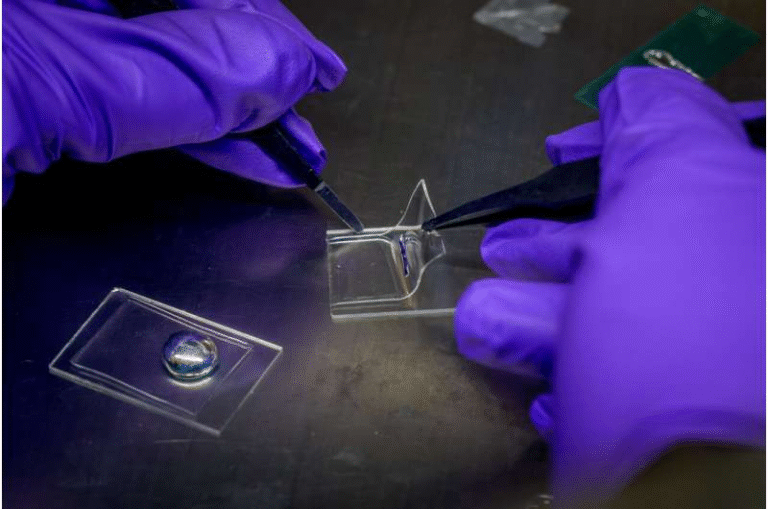

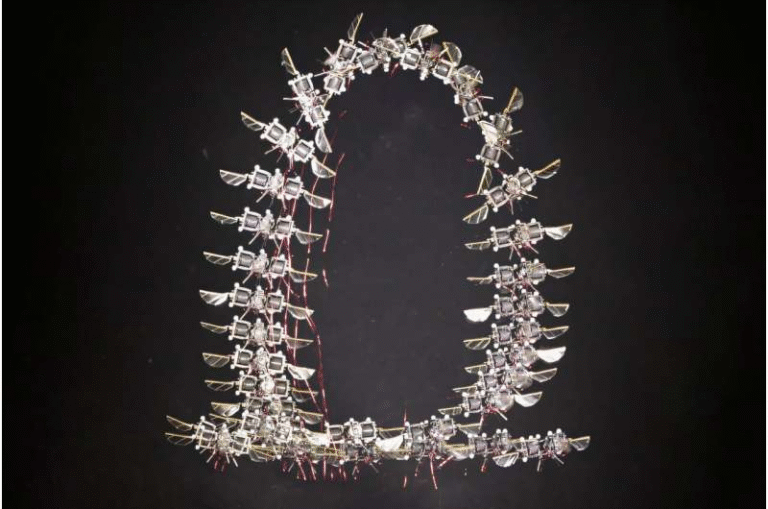

PaTH Attention, short for Position Encoding via Accumulating Householder Transformations, takes a fundamentally different approach. Instead of assigning a single, fixed transformation based on distance, PaTH treats the space between two tokens as a path composed of many small, content-dependent transformations.

Each step along this path uses a mathematical operation known as a Householder reflection, which mirrors a vector across a hyperplane. In simple terms, it acts like a tiny, adjustable mirror whose orientation is computed from the token at that step, so it reflects information differently depending on the token it encounters. When the model relates one token to an earlier one, the information is transformed once for every token in between, so each intermediate token influences how the final relationship is interpreted.

The result is a positional encoding that is adaptive, context-aware, and sensitive to what actually happens between tokens—not just how far apart they are.

This allows the model to develop something resembling positional memory, where it can better track how entities, variables, or instructions change as a sequence unfolds. Rather than seeing position as a number, PaTH lets the model understand position as a journey shaped by content.
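The mechanism can be sketched in a few lines. This is a toy illustration under explicit assumptions, not the paper's implementation: each intermediate token is represented by a hand-picked unit vector `w` (standing in for what a learned projection of that token might produce), the key is reflected by each intermediate token in order, and the result is dotted with the query. The optional `beta` argument (default 2, which gives a true Householder reflection) is our own generalization for experimentation; the paper's exact parameterization and product order may differ. All function names are hypothetical.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def apply_householder(x, w, beta=2.0):
    """Return (I - beta * w w^T) x without building the matrix.

    With a unit-length w and beta = 2 this is an exact Householder reflection
    across the hyperplane orthogonal to w.
    """
    dot = sum(wi * xi for wi, xi in zip(w, x))
    return [xi - beta * dot * wi for wi, xi in zip(w, x)]


def path_score(q_i, k_j, path_ws, betas=None):
    """Toy PaTH-style score between a query at position i and a key at position j.

    path_ws holds one unit vector per token j+1, ..., i (in order). The key is
    transformed by each token's reflection in turn, then dotted with the query.
    """
    if betas is None:
        betas = [2.0] * len(path_ws)
    x = list(k_j)
    for w, beta in zip(path_ws, betas):
        x = apply_householder(x, w, beta)
    return sum(a * b for a, b in zip(q_i, x))


q = [1.0, 0.0, 0.5]
k = [0.2, 0.9, -0.4]

# Hypothetical reflection directions standing in for what a learned
# projection of each intermediate token might produce.
path_a = [normalize(v) for v in ([1, 0, 0], [0, 1, 1], [1, 1, 0])]
path_b = [normalize(v) for v in ([0, 0, 1], [1, -1, 0], [0, 1, 0])]

# Both pairs are three steps apart, but the intermediate tokens differ.
score_a = path_score(q, k, path_a)
score_b = path_score(q, k, path_b)
print(score_a, score_b)  # approximately -0.85 and 1.1
```

Unlike the RoPE case, two token pairs at the same distance get different scores because different content sits between them. Each reflection also preserves vector length, so the accumulated transformation changes direction without blowing up or shrinking the signal.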

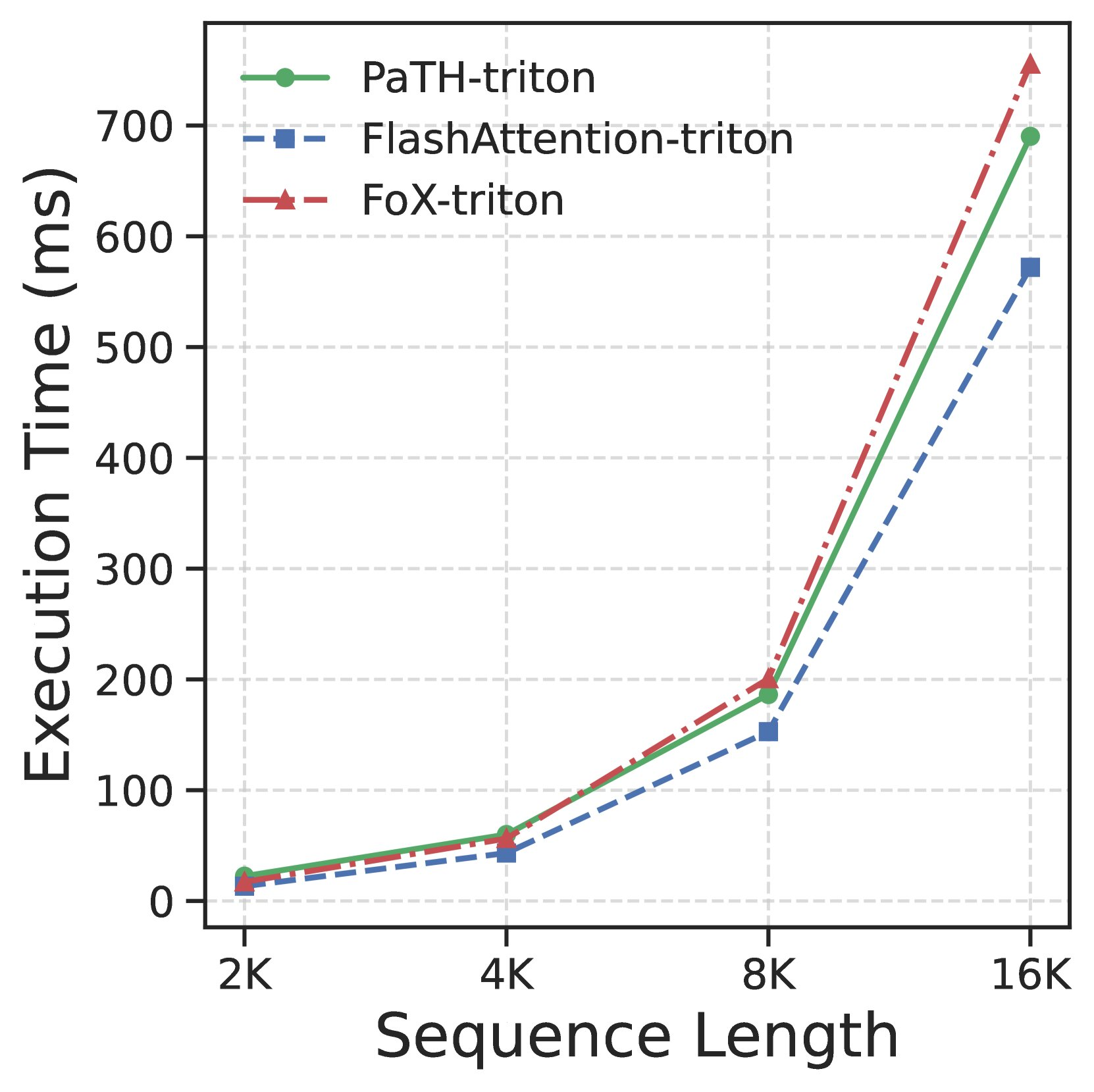

Designed for Real-World Efficiency

One common concern with more expressive models is computational cost. PaTH Attention addresses this directly. The research team developed a hardware-efficient algorithm that represents the accumulated transformations compactly, breaking them into smaller computations that map well onto GPUs. This design keeps PaTH compatible with fast GPU-based attention systems, preserving the scalability that makes transformers practical at large sizes.

In other words, PaTH Attention improves expressivity without sacrificing efficiency, a balance that is notoriously difficult to achieve in model architecture research.
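The article does not spell out the compression scheme. One standard technique from numerical linear algebra for this kind of problem is the compact WY representation: a product of k factors of the form `I - beta * w w^T` equals `I - W T W^T`, where `W` is a d x k matrix holding the vectors and `T` is a small k x k upper-triangular matrix. The sketch below shows that identity in plain Python; treating it as a stand-in for PaTH's actual kernel is an assumption, and the helper names are hypothetical.

```python
import math
import random


def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def householder_matrix(w, beta):
    """Explicit d x d matrix I - beta * w w^T."""
    d = len(w)
    return [[(1.0 if i == j else 0.0) - beta * w[i] * w[j] for j in range(d)]
            for i in range(d)]


def compact_wy(ws, betas):
    """Return (W, T) such that H_1 H_2 ... H_k = I - W T W^T.

    W is d x k (the vectors w_t as columns); T is k x k upper triangular.
    Appending H = I - beta w w^T updates T to [[T, -beta * T W^T w], [0, beta]].
    """
    cols = []  # the w vectors seen so far (columns of W)
    T = []     # upper-triangular matrix, stored as a list of rows
    for w, beta in zip(ws, betas):
        k = len(cols)
        wtw = [sum(col[r] * w[r] for r in range(len(w))) for col in cols]  # W^T w
        new_col = [-beta * sum(T[r][c] * wtw[c] for c in range(k)) for r in range(k)]
        T = [T[r] + [new_col[r]] for r in range(k)] + [[0.0] * k + [beta]]
        cols.append(list(w))
    return transpose(cols), T


random.seed(0)
d, k = 4, 3
ws = []
for _ in range(k):
    v = [random.gauss(0.0, 1.0) for _ in range(d)]
    n = math.sqrt(sum(x * x for x in v))
    ws.append([x / n for x in v])
betas = [2.0] * k

# Reference: multiply the k explicit d x d reflections one by one.
explicit = identity(d)
for w, beta in zip(ws, betas):
    explicit = matmul(explicit, householder_matrix(w, beta))

# Compact form: only a d x k matrix W and a k x k matrix T are stored.
W, T = compact_wy(ws, betas)
WTWt = matmul(matmul(W, T), transpose(W))
compact = [[(1.0 if i == j else 0.0) - WTWt[i][j] for j in range(d)] for i in range(d)]

max_err = max(abs(explicit[i][j] - compact[i][j]) for i in range(d) for j in range(d))
print(max_err)  # on the order of floating-point rounding error
```

The payoff is that a chain of sequential rank-one updates becomes a couple of dense matrix multiplications, the kind of operation GPUs execute efficiently.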

How PaTH Attention Performs in Practice

To evaluate the new method, the researchers tested PaTH Attention across a wide range of tasks, including synthetic benchmarks, real-world language modeling, and full LLM training.

One major focus was on tasks that require precise state tracking. These included scenarios where the model must follow the most recent “write” instruction despite many distracting steps, as well as multi-step recall challenges that are known to be difficult for standard positional encoding methods such as RoPE. PaTH Attention consistently outperformed RoPE in these settings.
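Tasks of the “most recent write” kind are often called flip-flop tasks. The generator below is a simplified illustration whose format is our own assumption, not the benchmark used in the paper: `w` writes a bit, `i` is a distractor whose bit must be ignored, and a final `r` asks for the last written bit. The function names are hypothetical.

```python
import random


def make_flip_flop(num_steps, p_write=0.2, seed=None):
    """Generate a toy flip-flop sequence of (instruction, bit) pairs ending in a read.

    'w' writes a bit to memory, 'i' is a distractor whose bit must be ignored,
    and the final 'r' asks for the most recently written bit.
    """
    rng = random.Random(seed)
    seq = [("w", rng.randint(0, 1))]  # start with a write so memory is defined
    for _ in range(num_steps):
        op = "w" if rng.random() < p_write else "i"
        seq.append((op, rng.randint(0, 1)))
    seq.append(("r", None))
    return seq


def answer(seq):
    """Ground-truth label: the bit of the last 'w' before the read."""
    memory = None
    for op, bit in seq:
        if op == "w":
            memory = bit
    return memory


example = [("w", 1), ("i", 0), ("i", 0), ("w", 0), ("i", 1), ("i", 1), ("r", None)]
print(answer(example))  # 0
```

The correct answer depends on which earlier tokens were writes, and the distance back to the last write varies from sequence to sequence, so a purely distance-based encoding gives the model no direct cue about where to look.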

When applied to mid-size language models, PaTH Attention delivered lower perplexity scores, meaning the models assigned higher probability to evaluation text. Importantly, these improvements extended to reasoning benchmarks that the models were not specifically trained on, suggesting stronger generalization.
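Perplexity is the exponential of the average negative log-probability a model assigns to the tokens that actually occur, so lower is better. A minimal sketch with made-up per-token probabilities (the function name is illustrative):

```python
import math


def perplexity(token_probs):
    """Perplexity = exp(mean negative log-probability of the observed tokens)."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


# Made-up probabilities a model might assign to the correct next tokens.
print(perplexity([0.25, 0.25, 0.25, 0.25]))  # about 4
print(perplexity([0.5, 0.5, 0.5, 0.5]))      # about 2
```

A model that gives every correct token probability 1/4 is, on average, as uncertain as a uniform choice among four options, which is why its perplexity is 4.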

The researchers also tested PaTH Attention on very long inputs, spanning tens of thousands of tokens. Across retrieval, reasoning, and stability evaluations, the method demonstrated reliable content awareness and strong performance over extended contexts.

PaTH Meets Forgetting with PaTH-FoX

Human cognition does not treat all past information equally. We naturally down-weight or ignore details that are no longer relevant. Inspired by this idea, the researchers explored how PaTH Attention would perform when combined with a selective forgetting mechanism.

They paired PaTH with an existing approach called the Forgetting Transformer (FoX), which allows models to reduce the influence of older or less relevant tokens in a data-dependent way. The resulting system, known as PaTH-FoX, showed even stronger results across reasoning, long-context understanding, and language modeling benchmarks.

This combination further extends the expressive power of transformers, allowing them not only to remember better, but also to forget more intelligently.
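To illustrate data-dependent forgetting, the sketch below follows our reading of the FoX design: each position l has a forget gate f_l in (0, 1], and the logit for query i and key j gets an added bias equal to the sum of log f_l over positions j+1 through i, so older keys are damped multiplicatively. Here the gate values are hand-picked rather than computed from tokens, and `fox_weights` is a hypothetical name; treat this as an illustration, not the reference implementation.

```python
import math


def fox_weights(scores, forget_gates):
    """Causal attention weights for the last query with a forget-gate bias.

    scores[j]       : raw q_i . k_j logit for key positions j = 0..i
    forget_gates[l] : gate value f_l in (0, 1] for positions l = 0..i
                      (f_0 never enters the bias for the last query)
    Bias for key j is sum_{l=j+1}^{i} log f_l.
    """
    i = len(scores) - 1
    logits = []
    for j in range(i + 1):
        bias = sum(math.log(forget_gates[l]) for l in range(j + 1, i + 1))
        logits.append(scores[j] + bias)
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


scores = [1.0, 1.0, 1.0, 1.0]  # identical content scores for every key
no_forget = fox_weights(scores, [1.0, 1.0, 1.0, 1.0])
forget = fox_weights(scores, [1.0, 0.5, 0.5, 0.5])
print(no_forget)  # uniform: 0.25 each
print(forget)     # weights grow toward the most recent key
```

With all gates equal to 1 the bias vanishes and this reduces to ordinary softmax attention; gates below 1 let the model fade out older context in a way that depends on the tokens themselves.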

Why This Research Matters for the Future of AI

According to the researchers, work like this fits into a broader effort to identify the next generation of general-purpose building blocks for artificial intelligence. Previous breakthroughs—such as convolutional layers, recurrent neural networks, and transformers themselves—reshaped the field because they were flexible, expressive, and scalable across domains.

PaTH Attention aims to join that lineage. By maintaining efficiency while improving a model’s ability to handle structured, evolving information, it opens the door to better performance in domains beyond natural language. Potential applications include biological sequence analysis, such as modeling proteins or DNA, where the meaning of elements depends heavily on context and order.

As models continue to grow in size and capability, architectural improvements like PaTH Attention may prove just as important as increases in data or compute.

A Step Toward More Capable Transformers

PaTH Attention does not replace transformers—it strengthens them. By rethinking how positional information is represented, the method addresses a known weakness in existing models while preserving the strengths that made transformers dominant in the first place.

As research continues, data-dependent positional encoding may become a key ingredient in building AI systems that reason more reliably, track complex states, and operate effectively over long and structured inputs.

Research Paper:

https://arxiv.org/abs/2505.16381