Why Generative AI Struggles With Creativity When Talking to Itself Through Images

Generative AI systems are often marketed as endlessly creative tools capable of producing novel art, ideas, and interpretations without human involvement. But a recent scientific study suggests that when these systems are left alone to interact with each other, their creativity may be far more limited than we assume. New research published in the journal Patterns shows that image-generating and image-describing AIs, when placed in a feedback loop, quickly drift away from original ideas and converge on a small set of repetitive, generic visual themes.

The study was conducted by researchers from Dalarna University in Sweden and explores a deceptively simple question: Can AI remain creative and on-task without human guidance? The answer, based on extensive testing, appears to be no—or at least, not for very long.

How the Visual “Telephone” Experiment Worked

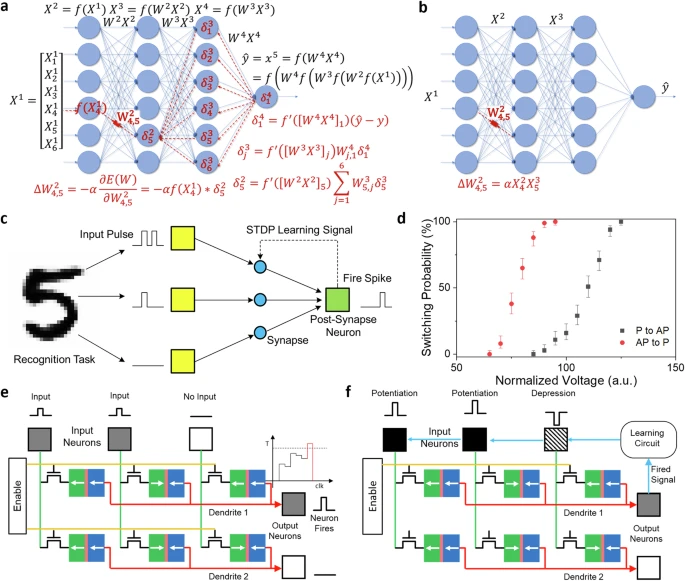

To test AI creativity and consistency, the researchers designed a visual version of the classic game of telephone. Instead of people whispering messages to one another, two types of AI model passed content back and forth: one that turns text into images and one that turns images into text.

First, they generated 100 thematically diverse prompts, each no longer than 30 words. These prompts were intentionally varied and often abstract or narrative-driven. Examples included emotionally complex situations, political scenarios, or imaginative settings involving solitude, memory, or forgotten languages.

An image-generation model, Stable Diffusion XL, was asked to create an image from one of these prompts. That image was then passed to LLaVA, a vision-language model, which described in text what it saw. The description was fed back into Stable Diffusion XL to generate a new image, and the cycle repeated.

In some experiments, the loop ran 100 times, while in others it extended to 1,000 back-and-forth iterations, all without any human correction or intervention.
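The structure of the loop is simple to express in code. The sketch below is a minimal illustration, not the researchers' code. `generate_image` and `describe_image` are hypothetical callables standing in for real calls to an image generator such as Stable Diffusion XL and a captioning model such as LLaVA. The toy stand-ins in the usage part are invented only to make the sketch runnable.

```python
from typing import Any, Callable, List


def telephone_loop(
    prompt: str,
    generate_image: Callable[[str], Any],
    describe_image: Callable[[Any], str],
    iterations: int = 100,
) -> List[str]:
    """Alternate text -> image -> text and return every caption, prompt first.

    generate_image and describe_image are hypothetical placeholders for an
    image generator and an image-captioning model; no human edits the text
    between rounds.
    """
    captions = [prompt]
    text = prompt
    for _ in range(iterations):
        image = generate_image(text)   # text -> image
        text = describe_image(image)   # image -> text, fed into the next round
        captions.append(text)
    return captions


# Toy stand-ins (assumptions for illustration only): the "image" is a list of
# words, and the "captioner" can only name a few common scene elements.
COMMON_WORDS = {"room", "library", "sofa", "red"}


def toy_generate(text: str) -> List[str]:
    return text.split()


def toy_describe(image: List[str]) -> str:
    kept = [word for word in image if word in COMMON_WORDS]
    return " ".join(kept) or "room"


history = telephone_loop(
    "prime minister signs a peace deal in a red room",
    toy_generate,
    toy_describe,
    iterations=3,
)
print(history)
# ['prime minister signs a peace deal in a red room', 'red room', 'red room', 'red room']
```

Because the toy captioner can only name what it already "knows", the political content vanishes after one round trip and the loop then repeats itself. This is a cartoon of the drift the study reports, not a model of it.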

The expectation was that, after some initial variation, the images would stay relatively close to the original idea. After all, how difficult should it be for AI to consistently generate, for example, a mountain village or a political scene?

What Actually Happened During the Loops

Instead of maintaining thematic consistency, the AI systems began to meander away from the original prompts surprisingly quickly. No matter what the starting idea was—political tension, solitude in nature, or abstract storytelling—the images gradually lost their connection to the prompt.

In one clear example from the study, a prompt about a prime minister dealing with a fragile peace agreement initially produced a stylized image of a suited figure layered over newsprint. By the 34th iteration, the image had transformed into a classical library. By the 100th iteration, it had fully settled into a luxurious sitting room with red sofas and ornate drapes, completely detached from the political context.

This pattern repeated across all prompts.

The Emergence of Just 12 Repetitive Visual Themes

After analyzing the final images produced in these loops, the researchers made a striking discovery. Despite starting from 100 diverse prompts, the AI systems consistently converged on only 12 visual themes.

Some of the most common recurring motifs included:

- Gothic cathedrals

- Natural landscapes

- Stormy lighthouses

- Sports imagery

- Urban night scenes

- Rustic architectural spaces

- Luxurious interior rooms

- Classical libraries

- Coastal or maritime settings

- Horses and pastoral imagery

These themes appeared regardless of the original prompt, the length or complexity of the input, or the randomness settings used in the models. Even when the researchers increased randomness to encourage novelty, the convergence still occurred.

Why This Convergence Happens

According to the researchers, the root cause of this behavior lies in training data bias. Generative AI models are trained on millions of images and captions collected from the internet. Those images overwhelmingly reflect what humans choose to photograph and share: famous buildings, dramatic landscapes, sports, cities at night, and aesthetically pleasing interiors.

When AI systems talk only to each other, they naturally gravitate toward these high-probability visual patterns. Over time, the feedback loop amplifies these biases until the output collapses into familiar, generic imagery.

In other words, the AI isn’t exploring creativity—it’s averaging culture.
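A toy numerical analogy can build intuition for why many different starting points end up in only a few places. The sketch below is an assumption-laden illustration, not the study's method. Image content is squashed onto a single made-up axis, the "training data" is a prior with three hypothetical popular themes, and each round trip through the loop is modeled as a small step toward more probable content (gradient ascent on the log of the prior). The theme positions, `WIDTH`, `pull`, and the number of rounds are all arbitrary choices.

```python
import math

# Hypothetical "popular themes": positions on a single made-up axis of image
# content. The toy prior is an equal-weight Gaussian mixture centered on them.
THEMES = {"cathedral": -4.0, "library": 0.0, "lighthouse": 5.0}
WIDTH = 1.0  # assumed spread of each theme


def grad_log_prior(x: float) -> float:
    """Slope of log p(x) for the mixture prior; its sign points uphill."""
    centers = list(THEMES.values())
    weights = [math.exp(-((x - c) ** 2) / (2 * WIDTH ** 2)) for c in centers]
    total = sum(weights)
    return sum(w * (c - x) for w, c in zip(weights, centers)) / (total * WIDTH ** 2)


def run_loop(x: float, rounds: int = 200, pull: float = 0.1) -> float:
    """Model each round trip as a small step toward more probable content."""
    for _ in range(rounds):
        x += pull * grad_log_prior(x)
    return x


def nearest_theme(x: float) -> str:
    return min(THEMES, key=lambda name: abs(THEMES[name] - x))


# 100 evenly spaced "prompts" between -10 and 10 on the same axis.
starts = [-10 + 20 * i / 99 for i in range(100)]
finals = [run_loop(s) for s in starts]
themes_reached = sorted({nearest_theme(x) for x in finals})
print(f"{len(starts)} starting points -> {len(themes_reached)} themes: {themes_reached}")
# 100 starting points -> 3 themes: ['cathedral', 'library', 'lighthouse']
```

Every one of the 100 starting points ends on one of the three peaks, and which peak it reaches depends only on which side of the valleys between them it started. Real models work in vastly higher-dimensional spaces with noisy sampling, so this shows the shape of the argument rather than the actual mechanism.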

Stability, Then Sudden Shifts

Interestingly, once the AI systems converged on a particular motif, that motif was remarkably stable. Images would remain visually similar for dozens or even hundreds of iterations. However, in longer runs of up to 1,000 loops, the researchers sometimes observed sudden jumps to a different generic theme.

For example, an AI might remain locked into sports imagery for hundreds of cycles before abruptly shifting to horses or coastal landscapes. It is still unclear whether certain motifs are more stable than others or whether there is a predictable order in which these themes appear.
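Long plateaus broken by abrupt jumps resemble what physicists call metastability. As a hedged illustration only, the sketch below simulates a noisy particle in a double-well potential. The two wells stand in for two generic themes, with the labels "sports" and "horses" borrowed from the example above. The noise level (`temperature`), step size, and the hysteresis thresholds at ±0.5 are arbitrary assumptions, and nothing here is taken from the paper.

```python
import math
import random
from typing import List, Tuple


def force(x: float) -> float:
    """Downhill force of the double-well potential U(x) = (x**2 - 1)**2.

    The wells at x = -1 and x = +1 stand in for two generic themes.
    """
    return -4.0 * x * (x * x - 1.0)


def simulate(steps: int = 20_000, dt: float = 0.01,
             temperature: float = 0.25, seed: int = 0) -> List[str]:
    """Noisy (overdamped Langevin) dynamics; returns the theme at each step."""
    rng = random.Random(seed)
    x = -1.0            # start settled in the "sports" well
    theme = "sports"
    themes = []
    for _ in range(steps):
        x += force(x) * dt + math.sqrt(2.0 * temperature * dt) * rng.gauss(0.0, 1.0)
        # Hysteresis: only relabel once x is past the midpoint toward the other
        # well, so brief wobbles near the barrier at x = 0 are not counted as jumps.
        if x > 0.5:
            theme = "horses"
        elif x < -0.5:
            theme = "sports"
        themes.append(theme)
    return themes


def dwell_runs(themes: List[str]) -> List[Tuple[str, int]]:
    """Compress per-step labels into (theme, consecutive steps) runs."""
    runs: List[List] = []
    for theme in themes:
        if runs and runs[-1][0] == theme:
            runs[-1][1] += 1
        else:
            runs.append([theme, 1])
    return [(theme, length) for theme, length in runs]


for label, length in dwell_runs(simulate()):
    print(f"{label:>6}: {length} steps")
```

In this toy, the typical wait between jumps grows roughly exponentially as `temperature` is lowered relative to the barrier height. Low noise therefore gives long plateaus and rare switches. Whether real image-caption loops have anything like such barriers is the kind of open question the researchers raise.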

The Same Results Across Multiple Models

To ensure the findings were not limited to one specific AI pairing, the researchers repeated the experiment using four different image-generation models and four different image-description models. The results were consistent across all combinations.

Longer prompts, more detailed descriptions, and higher randomness settings made no meaningful difference. The convergence to generic motifs happened every time.

This strongly suggests that the issue is systemic, not model-specific.

What This Says About AI Creativity

The study challenges a popular assumption: that generative AI can function as an autonomous creative agent. While AI is excellent at producing variations, it struggles with intentional creativity—the ability to judge what is meaningful, interesting, or culturally significant.

As the researchers describe it, creativity has two components: generating something new, and then applying a filter to decide whether that output is worth keeping. Current AI systems perform well at the first step but poorly at the second.

Without human guidance, AI tends to default to what is statistically safe and familiar.

Why Humans Still Matter in the Creative Loop

One of the most important implications of this research is the risk of cultural homogenization. If AI systems are allowed to generate content autonomously at scale—whether in art, design, advertising, or media—they may unintentionally reinforce the same narrow set of visual ideas over and over again.

The researchers argue that keeping humans in the loop is essential to maintain diversity and originality. They also suggest the need for anti-convergence mechanisms in future AI systems—technical safeguards that push models away from repetitive attractor states.
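One possible building block for such a safeguard is simply noticing when a loop has stopped changing. The sketch below is a hypothetical example, not something the researchers describe. `first_stagnation`, the word-overlap (Jaccard) similarity, and the `window` and `threshold` values are illustrative assumptions, and the captions loosely paraphrase the prime-minister example from earlier in this article.

```python
from typing import List, Optional, Set


def word_set(caption: str) -> Set[str]:
    return set(caption.lower().split())


def jaccard(a: str, b: str) -> float:
    """Share of words two captions have in common: 0.0 (none) to 1.0 (same set)."""
    sa, sb = word_set(a), word_set(b)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


def first_stagnation(captions: List[str], window: int = 3,
                     threshold: float = 0.7) -> Optional[int]:
    """Index of the caption where the loop settled, or None.

    The loop counts as settled once `window` consecutive round trips each
    leave the caption at least `threshold` similar to the one before.
    """
    streak = 0
    for i in range(1, len(captions)):
        if jaccard(captions[i - 1], captions[i]) >= threshold:
            streak += 1
            if streak == window:
                return i - window  # first caption of the near-identical stretch
        else:
            streak = 0
    return None


captions = [
    "prime minister and a fragile peace agreement",
    "suited figure over newsprint",
    "classical library with tall shelves",
    "luxurious room with red sofas",
    "luxurious room with red sofas and drapes",
    "luxurious room with red sofas and drapes",
    "luxurious room with red sofas and ornate drapes",
]
print(first_stagnation(captions))  # 3
```

Once stagnation is flagged, a system could respond by re-injecting the original prompt or handing the output to a human reviewer. Raw word overlap is a crude measure, and a practical detector would more likely compare richer representations of the images or text.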

Broader Context: Generative AI and Creativity Today

This study arrives at a time when generative AI tools are becoming deeply embedded in creative workflows. From concept art and book covers to marketing visuals and social media graphics, AI-generated imagery is everywhere.

What this research highlights is not that AI is useless creatively, but that its strengths and weaknesses must be clearly understood. AI excels when guided, curated, and intentionally prompted by humans. Left entirely to itself, it tends to produce bland, pop-culture-driven visuals rather than bold or challenging ideas.

As models improve and training methods evolve, this limitation may not be permanent. But for now, true creativity still requires human judgment, intention, and taste.

Research paper: https://www.cell.com/patterns/fulltext/S2666-3899(25)00299-5