Reinforcement Learning Speeds Up Model-Free Training of Optical AI Systems

Optical computing has been gaining attention as a promising path toward faster, more energy-efficient artificial intelligence, and a new study from researchers at the University of California, Los Angeles (UCLA) tackles one of its central obstacles. The work shows how reinforcement learning can train optical AI hardware directly on the physical setup, sidestepping the mismatch between simulated models and real hardware that has held this field back for years.

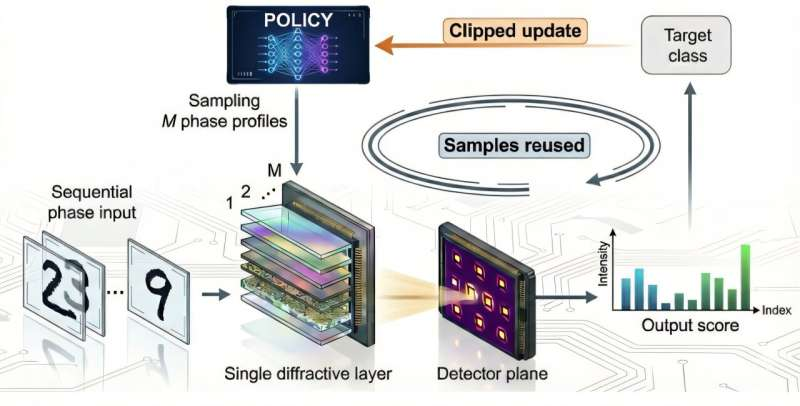

At the center of this research is a simple but powerful idea: instead of trying to perfectly simulate how light behaves inside complex optical systems, let the system learn directly from physical experiments. The UCLA team demonstrates that this approach is not only possible but also highly effective when combined with a reinforcement learning algorithm called proximal policy optimization (PPO).

Why Optical AI Is So Hard to Train

Optical computing relies on light rather than electronic signals to process information. One especially promising class of optical hardware is diffractive optical processors, which use carefully designed phase masks to shape how light propagates and interferes. These systems can perform large-scale parallel computations at the speed of light and with extremely low energy consumption.
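To make "shaping how light propagates and interferes" concrete, here is a minimal sketch of a single diffractive layer in NumPy. It assumes the standard scalar angular-spectrum model of free-space propagation: the field picks up the phase of the mask, then travels a distance `z` to the next plane. The function names, grid size, wavelength, pixel pitch, and layer spacing are illustrative assumptions, not parameters from the UCLA study.

```python
import numpy as np

def angular_spectrum_propagate(field, wavelength, dx, z):
    """Propagate a 2D complex field a distance z through free space.

    Scalar angular spectrum method; evanescent components are dropped.
    All lengths must share one unit (here, meters).
    """
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=dx)          # spatial frequencies along x
    fy = np.fft.fftfreq(ny, d=dx)          # spatial frequencies along y
    FX, FY = np.meshgrid(fx, fy)           # both have shape (ny, nx)
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    H = np.where(arg > 0, np.exp(1j * kz * z), 0.0)  # free-space transfer function
    return np.fft.ifft2(np.fft.fft2(field) * H)

def diffractive_layer(field, phase_mask, wavelength, dx, z):
    """Imprint a phase-only mask on the field, then propagate to the next plane."""
    return angular_spectrum_propagate(field * np.exp(1j * phase_mask), wavelength, dx, z)

# Example: a plane wave passing through one random phase mask (toy THz-scale numbers).
rng = np.random.default_rng(0)
n = 64
plane_wave = np.ones((n, n), dtype=complex)
mask = rng.uniform(0, 2 * np.pi, size=(n, n))
out = diffractive_layer(plane_wave, mask, wavelength=0.75e-3, dx=0.4e-3, z=10e-3)
intensity = np.abs(out) ** 2
```

Chaining several calls to `diffractive_layer` gives a multi-layer processor. Training in simulation means tuning each `phase_mask` through a model like this one, which is only as good as its match to the real hardware.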

However, training these systems has been a long-standing challenge. Traditionally, researchers design and train optical processors in model-based simulations, often referred to as “digital twins.” These simulations attempt to capture the physics of light propagation and device behavior. While this works well on paper, real optical hardware rarely behaves exactly like the model.

In practice, experimental systems suffer from misalignments, manufacturing imperfections, environmental noise, and unknown distortions. Capturing all of these effects accurately in a simulation is extremely difficult. As a result, optical systems that perform well in simulations often fall short when deployed in real experiments.

This gap between simulation and reality has been one of the biggest roadblocks to scaling optical AI beyond controlled laboratory demonstrations.

Moving to Model-Free, In-Situ Training

The UCLA researchers take a different approach by abandoning detailed physical modeling altogether. Instead of training a diffractive optical processor in simulation, they train it directly on the physical hardware, using real optical measurements as feedback.

This is known as model-free in-situ training. The system does not need to know the underlying physics of the setup, nor does it rely on an approximate digital model. It treats the optical hardware as a black box and learns how to control it purely through interaction and feedback.

To make this work efficiently, the team uses proximal policy optimization, a reinforcement learning algorithm known for its stability and sample efficiency. PPO has been widely used in robotics and control tasks, but this study shows that it is also well-suited for physical optical systems.

How PPO Helps Optical Systems Learn

In reinforcement learning, an agent takes actions, observes outcomes, and receives rewards that guide future behavior. In this case, the “agent” controls the diffractive features of the optical processor, while the “environment” is the physical optical setup itself.
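A toy version of this agent/environment split might look like the following sketch. It assumes a deliberately simple, hypothetical setup: `HiddenDiffuserEnv` stands in for the optical bench, the action is a vector of phase values for the controllable pixels, and the reward is the intensity measured at one target detector. The hidden coefficients `_t` play the role of the unknown physics.

```python
import numpy as np

class HiddenDiffuserEnv:
    """Toy 'environment': a black box the agent can only probe with actions.

    Hypothetical model: each of n_pixels controllable phase pixels reaches the
    target detector through an unknown complex coefficient. The agent never
    reads these coefficients; it only sees the measured intensity (reward).
    """

    def __init__(self, n_pixels=16, seed=0, noise_std=0.0):
        self._rng = np.random.default_rng(seed)
        self._t = (self._rng.normal(size=n_pixels)
                   + 1j * self._rng.normal(size=n_pixels)) / np.sqrt(2)
        self.n_pixels = n_pixels
        self.noise_std = noise_std          # optional detector noise

    def step(self, phases):
        """Action: a phase pattern. Reward: measured intensity at the target."""
        field = np.sum(self._t * np.exp(1j * np.asarray(phases)))
        return float(np.abs(field) ** 2 + self.noise_std * self._rng.normal())

env = HiddenDiffuserEnv(n_pixels=16, seed=1)
reward = env.step(np.zeros(env.n_pixels))   # one "experiment" with a flat phase pattern
```

The agent's only interface is `step`, which is what treating the hardware as a black box means in practice. On a real setup, `step` would write the pattern to the device and read back a detector value.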

PPO plays a critical role because it allows the system to:

- Reuse measured experimental data for multiple training updates

- Constrain how much the control policy changes during each update

- Avoid unstable or erratic behavior during training

These properties are especially important in optical experiments, where each measurement can be time-consuming and noisy. PPO significantly reduces the number of physical experiments needed while maintaining stable learning behavior.
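The first two properties come from PPO's clipped surrogate objective, L = E[min(r * A, clip(r, 1 - eps, 1 + eps) * A)], where r is the ratio between the new and old policy's probability of an action that was already measured, and A is that action's advantage (how much better it scored than a baseline). Because r reweights old samples, one batch of measurements can drive several updates, while the clip limits how far each batch can move the policy. Below is a minimal NumPy sketch of that objective; `eps = 0.2` is a common default in PPO implementations, not a value reported for this study.

```python
import numpy as np

def ppo_clip_objective(ratio, advantage, eps=0.2):
    """PPO clipped surrogate objective (to be maximized), averaged over samples.

    ratio:     pi_new(a) / pi_old(a) for each previously measured action
    advantage: how much better each action scored than a baseline
    """
    ratio = np.asarray(ratio, dtype=float)
    advantage = np.asarray(advantage, dtype=float)
    unclipped = ratio * advantage
    clipped = np.clip(ratio, 1.0 - eps, 1.0 + eps) * advantage
    # The minimum removes any incentive to push the ratio outside [1 - eps, 1 + eps].
    return float(np.mean(np.minimum(unclipped, clipped)))

# An action that became much more likely and had positive advantage:
print(ppo_clip_objective([1.5], [1.0]))   # 1.2: gain beyond 1 + eps is cut off
# An action that became less likely and had negative advantage:
print(ppo_clip_objective([0.5], [-1.0]))  # -0.8: the pessimistic (clipped) value is kept
```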

The result is a training process that is both faster and more robust than one based on standard (vanilla) policy-gradient updates.

Experimental Proof Across Multiple Optical Tasks

To demonstrate the effectiveness of their approach, the UCLA researchers tested PPO-based in-situ training across a wide range of optical tasks.

One key experiment involved focusing optical energy through a random, unknown diffuser. Diffusers scramble incoming light in complex ways that are extremely difficult to model. Despite having no prior knowledge of the diffuser’s properties, the optical processor learned how to focus light efficiently. Importantly, it achieved this faster than standard policy-gradient optimization methods, highlighting PPO’s superior exploration and learning efficiency.
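The diffuser experiment can be mimicked with a self-contained toy simulation. This is not the authors' code: the "diffuser" is a hidden random complex vector coupling each phase pixel to one target spot (a common simplified model of focusing through scattering media), the policy is a Gaussian over phase values with a fixed width, and all hyperparameters are illustrative guesses. Each batch of simulated measurements is reused for several clipped PPO updates.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 16  # number of controllable phase pixels (toy size)
# Hidden diffuser: unknown complex coupling from each pixel to the target spot.
t = (rng.normal(size=N) + 1j * rng.normal(size=N)) / np.sqrt(2)

def measure(phases):
    """Black-box experiment: target intensity for a phase pattern (or a batch of them)."""
    return np.abs(np.exp(1j * phases) @ t) ** 2

def ppo_focus(iterations=150, batch=32, epochs=4, sigma=0.3, lr=0.05, eps=0.2):
    """Gaussian policy over phases; PPO clipped updates, each batch reused `epochs` times."""
    mu = np.zeros(N)  # policy mean = current phase pattern on the hardware
    for _ in range(iterations):
        actions = mu + sigma * rng.normal(size=(batch, N))  # perturbed patterns to test
        rewards = measure(actions)                           # one batch of measurements
        adv = (rewards - rewards.mean()) / (rewards.std() + 1e-8)
        # Gaussian log-probabilities up to a constant (it cancels in the ratio).
        logp_old = -np.sum((actions - mu) ** 2, axis=1) / (2 * sigma**2)
        for _ in range(epochs):  # reuse the same measurements for several updates
            logp = -np.sum((actions - mu) ** 2, axis=1) / (2 * sigma**2)
            ratio = np.exp(logp - logp_old)
            # The clipped surrogate has zero gradient where the clipped term is active.
            clipped = ((adv > 0) & (ratio > 1 + eps)) | ((adv < 0) & (ratio < 1 - eps))
            grad_logp = (actions - mu) / sigma**2  # d log pi(a) / d mu
            grad = np.mean(((~clipped) * ratio * adv)[:, None] * grad_logp, axis=0)
            mu = mu + lr * grad  # gradient ascent on the surrogate
    return mu

mu = ppo_focus()
print(f"flat phase pattern: {measure(np.zeros(N)):.1f}")
print(f"after PPO training: {measure(mu):.1f}")
print(f"ideal (all paths in phase): {np.sum(np.abs(t)) ** 2:.1f}")
```

With `epochs=1` the ratio is exactly 1 on the only update, clipping never triggers, and the loop reduces to a plain policy-gradient (REINFORCE-style) update with a mean-reward baseline. That makes it a convenient way to set up the kind of baseline comparison the study describes, although this toy says nothing about the speedup measured on real hardware.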

The team also applied the same framework to hologram generation, where the system learned to shape light into desired patterns, and to aberration correction, where optical distortions were compensated directly through hardware learning rather than analytical correction models.
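The article does not say which measured quantities served as rewards for these two tasks. Two plausible, hypothetical choices are sketched below: for hologram generation, the Pearson correlation between the camera image and the target pattern, which ignores unknown detector gain and offset; for aberration correction, the fraction of detected power that lands within a small radius of the intended focus. Function names and parameters are illustrative.

```python
import numpy as np

def hologram_reward(measured, target):
    """Pearson correlation between a measured intensity image and the target pattern."""
    m = np.asarray(measured, dtype=float).ravel()
    g = np.asarray(target, dtype=float).ravel()
    m = m - m.mean()
    g = g - g.mean()
    return float(m @ g / (np.linalg.norm(m) * np.linalg.norm(g) + 1e-12))

def power_in_bucket(image, center, radius):
    """Fraction of detected power within `radius` pixels of `center` (row, col)."""
    img = np.asarray(image, dtype=float)
    rows, cols = np.indices(img.shape)
    inside = (rows - center[0]) ** 2 + (cols - center[1]) ** 2 <= radius ** 2
    return float(img[inside].sum() / img.sum())
```

Either function could serve as the scalar reward returned after each measurement; because both are normalized, they stay comparable if the overall laser power drifts between experiments.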

Another striking demonstration involved handwritten digit classification performed entirely in the optical domain. During training, the diffractive processor was adjusted based on real measurements from the optical hardware. As learning progressed, the output patterns corresponding to different digits became clearer and more distinct. The system successfully classified digits without any digital post-processing, showing that meaningful AI tasks can be executed directly in optical hardware.
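In many diffractive classifiers, each class is assigned its own detector region on the output plane and the predicted class is whichever region collects the most light. Assuming this study's readout works the same way (the article does not specify it), the decision and one possible training reward can be sketched as follows; the region layout and `class_reward` are hypothetical.

```python
import numpy as np

def detector_powers(intensity, regions):
    """Total optical power collected by each class detector region."""
    return np.array([intensity[rows, cols].sum() for rows, cols in regions])

def predict(intensity, regions):
    """Predicted digit = index of the brightest detector region."""
    return int(np.argmax(detector_powers(intensity, regions)))

def class_reward(intensity, regions, label):
    """Hypothetical training signal: fraction of detector power on the correct class."""
    p = detector_powers(intensity, regions)
    return float(p[label] / (p.sum() + 1e-12))

# Hypothetical layout: ten 4x4 detector regions in a row on a 4x40 output plane.
regions = [(slice(0, 4), slice(4 * k, 4 * k + 4)) for k in range(10)]
```

As training concentrates light on the correct detector, `class_reward` rises toward 1, which matches the article's description of output patterns becoming clearer and more distinct.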

Why This Approach Is a Big Deal

The advantages of PPO-based model-free training extend well beyond the specific experiments demonstrated in the study.

First, it dramatically reduces dependence on accurate physical models, which are often the weakest link in experimental optical AI. Second, PPO’s data efficiency lowers the experimental cost and time required for training. Third, the stability of the algorithm makes it well-suited for noisy and imperfect real-world environments, which are unavoidable in physical systems.

Perhaps most importantly, this framework allows optical systems to adapt autonomously to changes in their environment. If conditions drift over time or hardware components age, the system can continue learning and correcting itself through ongoing interaction.

Broader Implications Beyond Diffractive Optics

While the study focuses on diffractive optical processors, the underlying idea is far more general. Any physical system that provides measurable feedback and allows real-time adjustment of parameters could potentially benefit from this approach.

This includes photonic accelerators, nanophotonic processors, adaptive imaging systems, and other forms of emerging optical and physical AI hardware. In all of these cases, building accurate models is challenging, making model-free reinforcement learning an attractive alternative.

The work also fits into a broader trend toward intelligent physical systems that can learn, adapt, and compute directly in the hardware layer, rather than relying entirely on digital electronics and software abstractions.

A Step Toward Autonomous Physical Intelligence

What makes this research particularly exciting is not just the technical performance, but the direction it points toward. By combining optical hardware with reinforcement learning, the researchers demonstrate a path toward systems that learn from experience, much like biological systems do, rather than from carefully engineered simulations.

As optical AI continues to evolve, approaches like this could help unlock its full potential, bringing ultra-fast, energy-efficient computation closer to practical, real-world deployment.

Research Paper:

Yuhang Li et al., Model-free Optical Processors Using In Situ Reinforcement Learning with Proximal Policy Optimization, Light: Science & Applications (2026).

https://www.nature.com/articles/s41377-025-02148-7