From Brain Scans to Alloys: Teaching AI to Make Sense of Complex Research Data

Artificial intelligence is already deeply woven into modern scientific research. From analyzing brain scans and medical images to predicting the properties of new materials, AI tools promise faster insights and better predictions. But there is a persistent problem that researchers across fields keep running into: real-world data is messy. It rarely looks like the clean, perfectly labeled datasets that most machine-learning models are trained on.

Researchers at Pennsylvania State University believe they have found a way to tackle this challenge. Their newly developed AI framework, called ZENN, is designed to help machines understand complex, heterogeneous data instead of being confused by it. The work was featured as a PNAS Showcase study in the Proceedings of the National Academy of Sciences, highlighting its potential impact across science and engineering.

Why Real-World Data Is So Hard for AI

Most traditional machine-learning systems quietly assume that all data points are created equal. In practice, that assumption almost never holds. Scientific data often comes from multiple sources: high-precision computer simulations, noisy experimental measurements, medical scanners with varying resolutions, or sensors operating under different conditions.

These differences introduce noise, uncertainty, and inconsistency. Standard AI models typically treat these variations as minor imperfections rather than fundamental properties of the data. As a result, predictions may look accurate on paper but fail when applied to real-world problems. This limits not only performance but also trust in AI-driven scientific discovery.

ZENN was built specifically to confront this issue head-on instead of ignoring it.

What Exactly Is ZENN?

ZENN stands for Zentropy-Embedded Neural Networks. It is an AI framework that embeds principles from thermodynamics directly into neural networks. Rather than treating data as uniformly reliable, ZENN teaches models to recognize that different datasets carry different levels of certainty and disorder.

The framework was developed by Shun Wang, Wenrui Hao, Zi-Kui Liu, and Shunli Shang, all affiliated with Penn State’s Department of Materials Science and Engineering or its Department of Mathematics. Their goal was not just to improve accuracy, but also to make AI systems more interpretable and scientifically grounded.

The Science Behind Zentropy

At the heart of ZENN is a concept called zentropy, an advanced theory of entropy developed by Zi-Kui Liu. In classical physics, entropy is often described as a measure of disorder. Zentropy goes further by unifying ideas from quantum mechanics, thermodynamics, and statistical mechanics into a single predictive framework.

Zentropy views real systems as constantly balancing order and disorder. Without energy input, systems naturally drift toward randomness. This idea turns out to be surprisingly useful for AI, especially when dealing with imperfect data.
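For readers who want the underlying formulas, zentropy theory is usually written in terms of a set of configurations k, each with its own energy E^k, intrinsic entropy S^k, and free energy F^k. The summary below uses our own notation, which may differ from the paper's. The total entropy splits into a probability-weighted sum of the configurations' intrinsic entropies plus a configurational term that measures disorder among the configurations themselves.

```latex
\begin{aligned}
F^k &= E^k - T S^k, \qquad Z = \sum_k e^{-F^k / k_B T}, \\
p^k &= \frac{e^{-F^k / k_B T}}{Z}, \\
F &= -k_B T \ln Z = \sum_k p^k F^k + k_B T \sum_k p^k \ln p^k, \\
S &= \sum_k p^k S^k - k_B \sum_k p^k \ln p^k .
\end{aligned}
```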

ZENN uses this framework to decompose data into two key components:

- Energy, which represents meaningful patterns and signals

- Intrinsic entropy, which captures noise, uncertainty, and disorder

By explicitly modeling both, ZENN can learn what parts of the data are trustworthy and what parts should be treated with caution.
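To make the energy/entropy split concrete, here is a minimal sketch of how such a decomposition could feed a classifier. It assumes, hypothetically, that a network outputs an energy E_k and an intrinsic entropy S_k for each class k, combines them into a free energy F_k = E_k - T*S_k, and borrows the zentropy weighting p_k ∝ exp(-F_k/T) with k_B = 1. The function names `free_energy_probs` and `decompose` are our own illustrations, not ZENN's actual architecture or code.

```python
import math


def free_energy_probs(energies, entropies, temperature):
    """Turn per-class energies and intrinsic entropies into class probabilities.

    Hypothetical ZENN-style output layer (an illustrative assumption, not the
    paper's code): F_k = E_k - T * S_k and p_k = exp(-F_k / T) / Z, with k_B = 1.
    Returns the free energies and the probabilities.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    free = [e - temperature * s for e, s in zip(energies, entropies)]
    f_min = min(free)  # shift by the minimum so exp() cannot overflow
    weights = [math.exp(-(f - f_min) / temperature) for f in free]
    z = sum(weights)
    return free, [w / z for w in weights]


def decompose(energies, entropies, temperature):
    """Split each class's free energy into its energy term E_k and entropy term -T*S_k."""
    return [(e, -temperature * s) for e, s in zip(energies, entropies)]


# Two hypothetical classes: class 0 is favoured by energy, class 1 by entropy.
E = [0.0, 1.0]
S = [0.0, 2.0]
for T in (0.2, 1.0, 5.0):
    _, p = free_energy_probs(E, S, T)
    print(f"T={T}: p={[round(x, 3) for x in p]}, terms={decompose(E, S, T)}")
```

With these made-up numbers the two free energies are equal at T = 0.5. Below that temperature the energy-favoured class 0 dominates; above it the entropy-favoured class 1 takes over. Reading the energy and entropy terms separately is one way such a split could make a prediction easier to inspect.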

How ZENN Differs From Traditional Neural Networks

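Many neural networks, particularly classifiers, are trained with cross-entropy loss, which measures how far a model’s predicted probabilities are from the true labels. It works well when training data is clean, consistent, and homogeneous. But when datasets are mixed, for example when experimental measurements are combined with theoretical simulations, cross-entropy struggles because it treats every data point the same way.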
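For comparison, standard cross-entropy loss averages the negative log-probability assigned to the correct label over all samples. The sketch below, in plain Python with made-up predictions, shows that uniform averaging: nothing in the formula distinguishes a point from a clean simulation from one taken in a noisy experiment.

```python
import math


def cross_entropy(probs, labels):
    """Mean cross-entropy: -(1/N) * sum_i log p_i[y_i].

    `probs` is a list of per-sample probability vectors and `labels` holds
    the index of the true class for each sample. Every sample contributes
    with the same weight, regardless of where it came from.
    """
    eps = 1e-12  # guard against log(0)
    losses = [-math.log(max(p[y], eps)) for p, y in zip(probs, labels)]
    return sum(losses) / len(losses)


# Made-up predictions for three samples; whether a sample came from a
# simulation or an experiment plays no role in the loss.
probs = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]]
labels = [0, 1, 1]
loss = cross_entropy(probs, labels)
```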

ZENN replaces this one-size-fits-all approach with a thermodynamics-inspired learning process. One of its most important features is a tunable temperature parameter. This parameter helps the model infer hidden differences between datasets, such as whether a measurement comes from a highly controlled simulation or a noisy real-world experiment.

In simple terms, ZENN learns how cautious it should be with different data sources. This allows it to focus on the true signal while still accounting for uncertainty.
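As a rough illustration of why a temperature can encode caution, the sketch below uses ordinary temperature-scaled softmax, a standard technique and not ZENN's published formulation. Dividing the same scores by a larger temperature flattens the predicted distribution and raises its Shannon entropy. The per-source values in `SOURCE_TEMPERATURE` are hypothetical and fixed by hand, whereas ZENN is described as inferring such differences from the data.

```python
import math


def softmax(scores, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives a flatter distribution."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    z = sum(exps)
    return [e / z for e in exps]


def shannon_entropy(p):
    """Entropy in nats; larger values mean a less certain prediction."""
    return -sum(x * math.log(x) for x in p if x > 0)


# Hypothetical per-source temperatures: trust clean simulations more (low T)
# and noisy experiments less (high T). Values are illustrative only.
SOURCE_TEMPERATURE = {"simulation": 0.5, "experiment": 2.0}

scores = [2.0, 0.5, 0.1]  # the same raw model scores for both sources
for source, T in SOURCE_TEMPERATURE.items():
    p = softmax(scores, T)
    print(source, [round(x, 3) for x in p], round(shannon_entropy(p), 3))
```

The same scores yield a confident prediction for the low-temperature source and a more hedged one for the high-temperature source.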

Making AI Less of a Black Box

Another major advantage of ZENN is interpretability. Many AI systems are criticized for being black boxes that produce predictions without explaining how they arrived at them. ZENN’s structure provides insight into why a model behaves the way it does.

By separating energy from entropy, researchers can analyze how uncertainty influences predictions. This is especially important in scientific fields where understanding the underlying mechanism matters as much as the final result.

Testing ZENN in Materials Science

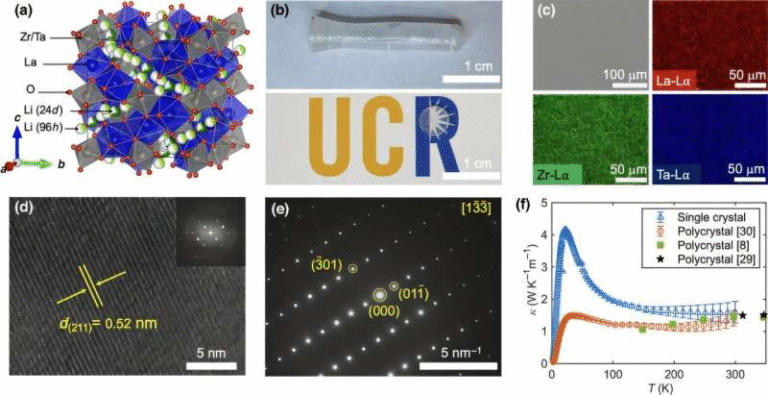

To demonstrate the framework, the researchers applied ZENN to a materials science case study involving an iron-rich iron-platinum (Fe-Pt) alloy, a material known for the rare property of negative thermal expansion: it contracts when heated instead of expanding.

Using ZENN, the team reconstructed the material’s free-energy landscape, a fundamental concept in thermodynamics that describes how a system’s free energy varies with conditions such as temperature and volume. The model not only matched the performance of larger, more complex neural networks but also revealed the thermodynamic mechanisms responsible for the alloy’s unusual behavior.

This result showed that ZENN is not just a predictive tool but also a way to uncover physical insights hidden within complex datasets.
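One way zentropy-style reasoning can account for negative thermal expansion is a competition between configurations with different volumes. The deliberately simplified sketch below assumes two hypothetical configurations (made-up numbers in arbitrary units, k_B = 1): a low-energy ground state with a larger volume, and a higher-energy, higher-entropy state with a smaller volume. Weighting their volumes by p_k ∝ exp(-F_k/T), with F_k = E_k - T*S_k, makes the average volume shrink as temperature rises. It ignores each configuration's ordinary thermal expansion and is not the paper's Fe-Pt calculation.

```python
import math

# Hypothetical configurations (arbitrary units, k_B = 1). Numbers are made up
# purely to illustrate the mechanism; they are not Fe-Pt data.
CONFIGS = [
    {"name": "ground", "E": 0.0, "S": 0.0, "V": 1.00},
    {"name": "excited", "E": 1.0, "S": 1.5, "V": 0.95},
]


def probabilities(configs, T):
    """Zentropy-style weights p_k = exp(-F_k / T) / Z with F_k = E_k - T * S_k."""
    free = [c["E"] - T * c["S"] for c in configs]
    f_min = min(free)  # shift for numerical stability
    w = [math.exp(-(f - f_min) / T) for f in free]
    z = sum(w)
    return [x / z for x in w]


def mean_volume(configs, T):
    """Thermal average of the volume; each configuration's own expansion is ignored."""
    return sum(p * c["V"] for p, c in zip(probabilities(configs, T), configs))


def total_free_energy(configs, T):
    """F = -T ln Z, equivalent to sum_k p_k F_k + T * sum_k p_k ln p_k."""
    return -T * math.log(sum(math.exp(-(c["E"] - T * c["S"]) / T) for c in configs))


for T in (0.1, 0.5, 1.0, 2.0):
    print(T, round(mean_volume(CONFIGS, T), 4))
```

With these numbers the average volume falls from about 1.00 at T = 0.1 to about 0.963 at T = 2.0, because the smaller, higher-entropy configuration becomes increasingly populated as the temperature rises.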

Potential Impact on Biomedical Research

The researchers believe ZENN could be especially valuable in biomedical science, where data heterogeneity is the norm rather than the exception. Alzheimer’s disease, for example, involves a wide range of data types, including brain imaging, genetic profiles, molecular markers, and clinical records.

Integrating these datasets is notoriously difficult. ZENN’s ability to balance signal and uncertainty could help identify disease subtypes, track progression, and detect key transition points in disease development. Similar benefits could apply to cryo-electron microscopy studies of amyloid structures, where data quality often varies across samples.

Applications Beyond Medicine and Materials

ZENN’s flexibility opens doors in many other research areas. The team highlighted potential applications in:

- Climate research, including fossil pollen analysis

- Environmental monitoring, combining GIS data with sensor measurements like PM2.5 levels

- Urban studies, integrating housing price data and mental health indicators

- Advanced imaging systems, where multiple data streams must be aligned

Penn State researchers are already establishing cross-disciplinary collaborations to explore these possibilities.

Bridging Simulations and Reality

One of the most exciting prospects of ZENN lies in its ability to bridge the gap between idealized simulations and real-world experiments. In materials science and engineering, simulations often predict remarkable properties that are difficult to reproduce in practice.

By learning from both simulations and experiments simultaneously, ZENN could guide the design of materials that are not only theoretically impressive but also manufacturable. Potential applications include medical implants for bone repair and advanced data platforms like ULTERA, which manages and analyzes large, complex datasets.

Looking Toward Quantum Computing

The researchers also see promise in quantum computing, a field where uncertainty is not a flaw but a fundamental feature. Embedding zentropy-aware reasoning into AI models could provide new tools for interpreting quantum information and managing probabilistic states more effectively.

Challenges and the Road Ahead

Despite its promise, ZENN is not without challenges. Scaling the framework to extremely large or highly complex systems remains an open problem. However, the team views this work as part of a broader shift in how AI is used in science—not just as a pattern-finding tool, but as a way to understand mechanisms.

This approach reflects a growing belief that the future of scientific AI lies in models that respect the complexity of real data rather than pretending it does not exist.

Research paper: https://www.pnas.org/doi/10.1073/pnas.2511227122