Unearthing Experimental Materials Data Buried in Scientific Papers Using Large Language Models

Technologies that shape modern life — from smartphones and electric vehicles to renewable energy systems — depend on a vast range of functional materials. Developing and improving these materials is a central goal of materials science, but predicting how a material will behave is far from straightforward. Even small differences in composition or synthesis methods can dramatically change properties like conductivity, magnetism, or thermal performance. Because of this complexity, researchers have long relied on painstaking experiments and human intuition built through years of experience.

A growing body of work now suggests that data science and artificial intelligence can help reduce this dependence on slow experimentation and intuition. In particular, researchers are turning to large language models (LLMs) to uncover experimental materials data that has been effectively hidden for decades inside scientific papers. A recent study led by Dr. Yukari Katsura at Japan’s National Institute for Materials Science (NIMS) demonstrates how LLM-assisted tools can substantially accelerate the collection and organization of experimental data from published research, laying the groundwork for more powerful materials discovery in the future.

Why Experimental Data in Materials Science Is Hard to Use

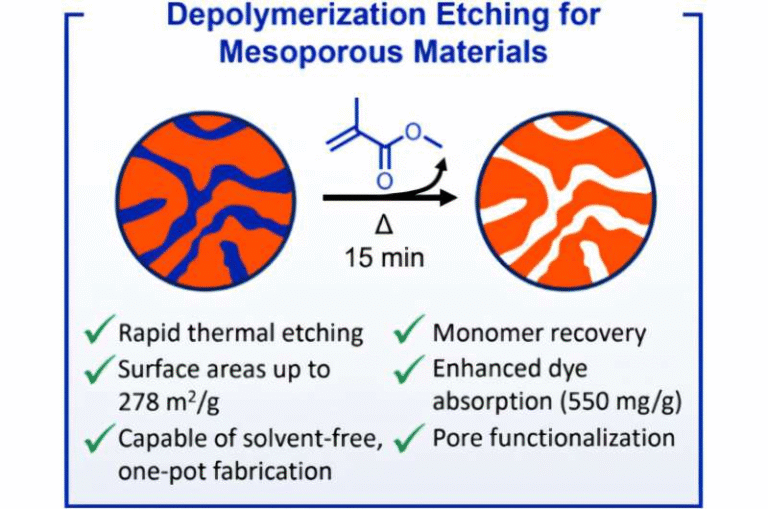

Materials science faces a unique challenge when it comes to data. Unlike some scientific fields where results are already well standardized, materials research data often appears in free-form text, tables, and graphs scattered across millions of papers. These papers are written to communicate scientific claims, not to serve as machine-readable data sources.

As a result, much of the world’s experimental materials data remains locked inside PDFs, out of reach of large-scale analysis. That data matters: traditional theoretical models struggle to capture the complexity of real materials, so experimental results are essential. Yet extracting them by hand is slow, expensive, and difficult to scale.

This bottleneck has become more visible as machine learning methods have advanced. Machine learning thrives on large, well-structured datasets, but building such datasets in materials science has historically required enormous human effort.

How Machine Learning and LLMs Can Help

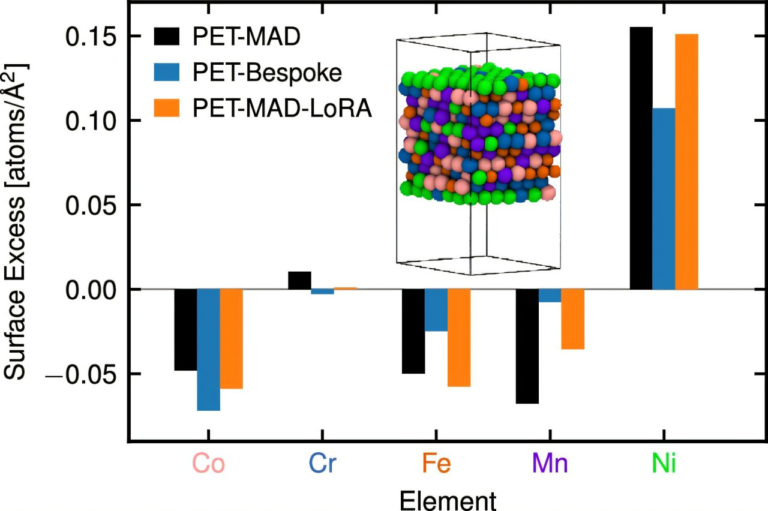

Machine learning differs from traditional theory-driven approaches by learning empirical trends directly from data. If enough high-quality experimental data can be gathered, machine learning models can begin to replicate — and sometimes surpass — human intuition in predicting material properties.

This is where large language models come in. Modern LLMs can read and interpret complex technical text while considering context and background knowledge. Instead of relying on rigid rules, they can flexibly extract relevant information from dense scientific writing. This capability opens the door to automating the transformation of papers into structured datasets.

With sufficiently large datasets, researchers can gain a bird’s-eye view of experimental results across entire fields, enabling new insights and more accurate property predictions.

The Starrydata Project: Building a Materials Data Backbone

To address this challenge, Dr. Katsura launched the Starrydata project in 2015. Starrydata is an open materials property database built by collecting experimental data directly from scientific papers. From the beginning, the project focused on high-quality, manually curated data, supported by a custom web system known as Starrydata2.

This approach succeeded in assembling an unprecedented volume of experimental materials data, particularly in a few domains such as thermoelectric and magnetic materials. However, manual curation alone could not keep pace with the growing volume of published research. To scale up, the team began exploring how LLMs could assist data collection without sacrificing accuracy.

New LLM-Assisted Tools for Faster Data Curation

The NIMS team developed two new tools designed to streamline and accelerate the Starrydata workflow. Their findings were published in the journal Science and Technology of Advanced Materials: Methods.

The first tool, Starrydata Auto-Suggestion for Sample Information, focuses on targeted assistance. When a user pastes text from a paper’s abstract or experimental methods section into the Starrydata2 system, the text is sent to an LLM via the OpenAI API. The model then suggests candidate entries for predefined data fields specific to each materials domain. These suggestions appear directly below each input field, allowing curators to quickly review and refine the extracted information. This tool is already fully integrated into the Starrydata2 platform.
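To make this workflow more concrete, here is a minimal Python sketch of the two steps around the LLM call: packaging pasted text into a chat-style message list, and turning the model's JSON reply into one suggestion per data field. The field names, the prompt wording, and the functions `build_messages` and `parse_suggestions` are hypothetical illustrations, not the Starrydata2 implementation, and the network call itself is left out. In a real setup the message list would be sent with the OpenAI Python client (for example via `client.chat.completions.create(model=..., messages=...)`).

```python
import json
from typing import Optional

# Hypothetical field list for one materials domain; the actual
# Starrydata2 fields are not listed in the article.
THERMOELECTRIC_FIELDS = ["composition", "form", "synthesis_method", "measurement_temperature"]


def build_messages(paper_text: str, fields: list[str]) -> list[dict]:
    """Build a chat-style message list asking for one JSON object
    whose keys are exactly the requested fields."""
    instructions = (
        "Extract sample information from the text below. "
        "Reply with a single JSON object whose keys are exactly: "
        + ", ".join(fields)
        + ". Use null when the text does not state a value."
    )
    return [
        {"role": "system", "content": instructions},
        {"role": "user", "content": paper_text},
    ]


def parse_suggestions(reply: str, fields: list[str]) -> dict[str, Optional[str]]:
    """Map the model's JSON reply to one suggestion per field.

    Keys outside `fields` are ignored; malformed or non-object replies
    produce None for every field, leaving it for the curator to fill in.
    """
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        data = {}
    if not isinstance(data, dict):
        data = {}
    suggestions: dict[str, Optional[str]] = {}
    for field in fields:
        value = data.get(field)
        suggestions[field] = None if value is None else str(value)
    return suggestions
```

Returning `None` instead of guessing keeps an input box empty when the text is silent, which fits a tool whose output is a suggestion shown below each field for a curator to accept or correct.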

The second tool, Starrydata Auto-Summary GPT, takes a broader approach. Users can upload an entire open-access paper PDF, which the system then deconstructs. The LLM automatically extracts and summarizes descriptions of figures, tables, and samples, outputting the results as structured JSON data. This JSON output can be displayed as a clear, readable table in a web browser, making it much easier for human curators to locate and enter relevant experimental data.
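The step from JSON to a browser table can be sketched in a few lines of Python. The JSON layout assumed here (a list of objects with keys such as `kind`, `label`, and `description`) is a hypothetical stand-in, since the article does not publish the tool's schema; the function name `summary_to_html_table` is likewise illustrative.

```python
import html
import json


def summary_to_html_table(summary_json: str) -> str:
    """Render a JSON list of flat objects as an HTML table.

    Columns follow the order in which keys first appear across all
    items; missing keys become empty cells. All text is HTML-escaped.
    """
    items = json.loads(summary_json)
    columns: list[str] = []
    for item in items:
        for key in item:
            if key not in columns:
                columns.append(key)

    header = "".join(f"<th>{html.escape(c)}</th>" for c in columns)
    rows = []
    for item in items:
        cells = "".join(
            f"<td>{html.escape(str(item.get(c, '')))}</td>" for c in columns
        )
        rows.append(f"<tr>{cells}</tr>")
    return (
        f"<table><thead><tr>{header}</tr></thead>"
        f"<tbody>{''.join(rows)}</tbody></table>"
    )


# Hypothetical example input, not real Auto-Summary output.
example = json.dumps([
    {"kind": "figure", "label": "Fig. 3", "description": "Seebeck coefficient vs temperature"},
    {"kind": "sample", "label": "S1", "description": "Bi2Te3 pellet, x < 0.1"},
])
```

Escaping matters here because summaries of scientific text routinely contain characters such as `<` and `&` that would otherwise break the page.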

Although the extracted data is not yet automatically merged into the main Starrydata database, these tools significantly reduce the time required for data collection by highlighting where key information resides.

Practical Constraints and Ongoing Challenges

Despite their promise, LLM-assisted tools still face important limitations. One major challenge is graph interpretation. While LLMs can summarize text effectively, they struggle to accurately read numerical values directly from plotted images. For this reason, extracting data points from graphs remains a task handled by human curators using a separate semi-automated tool developed by the Starrydata team.
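The article does not describe how the Starrydata team's graph tool works. As general background, semi-automated plot digitizers commonly rely on axis calibration: a curator marks two known ticks on each axis, and every clicked point is converted from pixel coordinates to data values by linear interpolation (on log10 of the values for a logarithmic axis). The Python sketch below illustrates that generic technique under those assumptions; `make_axis` is a hypothetical name, not part of any Starrydata software.

```python
import math
from typing import Callable


def make_axis(p1: float, v1: float, p2: float, v2: float,
              log: bool = False) -> Callable[[float], float]:
    """Return a pixel -> value mapping for one axis, calibrated from two
    reference ticks: pixel p1 shows value v1, pixel p2 shows value v2."""
    if p1 == p2:
        raise ValueError("reference pixels must differ")
    if log:
        if v1 <= 0 or v2 <= 0:
            raise ValueError("log-axis values must be positive")
        a, b = math.log10(v1), math.log10(v2)
    else:
        a, b = v1, v2

    def to_value(p: float) -> float:
        # Linear interpolation in (possibly log-transformed) value space
        t = a + (p - p1) * (b - a) / (p2 - p1)
        return 10 ** t if log else t

    return to_value


# Hypothetical calibration: x pixel 100 -> 300 K, x pixel 500 -> 700 K.
to_x = make_axis(100, 300.0, 500, 700.0)
# Image y pixels grow downward, so pixel 400 -> 0 and pixel 100 -> 300 (e.g. uV/K).
to_y = make_axis(400, 0.0, 100, 300.0)
```

Because the mapping only needs two reference points per axis, the same function handles inverted image coordinates and log scales, while a human still decides which pixels correspond to real data points.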

Another constraint involves publisher policies. Many academic publishers prohibit automated AI processing of paper PDFs. To stay within legal and ethical boundaries, the current system targets open-access papers, which are freely available for analysis.

Data quality is also a concern. Automated extraction can introduce inconsistencies, ambiguous labels, or subtle errors. The Starrydata project addresses this by keeping humans firmly in the loop, using LLMs as assistants rather than replacements.
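One simple way to support a human-in-the-loop workflow is to tell curators which suggestions deserve the closest look. The Python sketch below is a hypothetical heuristic, not something the article attributes to Starrydata: it checks whether each suggested value appears in the source text after collapsing whitespace and ignoring case, and labels it `grounded`, `check`, or `empty`. The function name `review_queue` and the labels are illustrative assumptions.

```python
import re
from typing import Optional


def _normalize(s: str) -> str:
    # Collapse whitespace (including PDF line breaks) and ignore case
    return re.sub(r"\s+", " ", s).strip().lower()


def review_queue(suggestions: dict[str, Optional[str]],
                 source_text: str) -> dict[str, str]:
    """Label each suggested field for human review.

    'grounded': the value occurs verbatim (after normalization) in the text
    'check':    the value does not occur, so it may be paraphrased or wrong
    'empty':    no value was suggested
    """
    text = _normalize(source_text)
    status: dict[str, str] = {}
    for field, value in suggestions.items():
        if value is None or not value.strip():
            status[field] = "empty"
        elif _normalize(value) in text:
            status[field] = "grounded"
        else:
            status[field] = "check"
    return status


# Hypothetical example data.
source = "Bi2Te3 thin films were deposited by RF\nsputtering at 573 K."
suggested = {
    "composition": "Bi2Te3",
    "synthesis_method": "RF sputtering",
    "form": "bulk",
    "measurement_temperature": None,
}
```

A substring match cannot prove a value is correct, and a correct value may be worded differently from the paper, so a check like this only orders the curator's attention; every field would still be reviewed by a person.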

Why Deconstructing Papers Matters

Scientific papers are carefully structured narratives designed to support specific claims. However, once experiments are complete, the underlying data often has value far beyond its original context. By deconstructing papers back into experimental data, researchers make it possible for others to reuse results in entirely new ways.

Dr. Katsura’s long-term vision is a future where experimental data from all areas of materials science is shared digitally and explored from a global perspective. Such a system would not only support faster materials discovery but also help establish data curation itself as a recognized form of scientific research.

Current Scope and Future Expansion

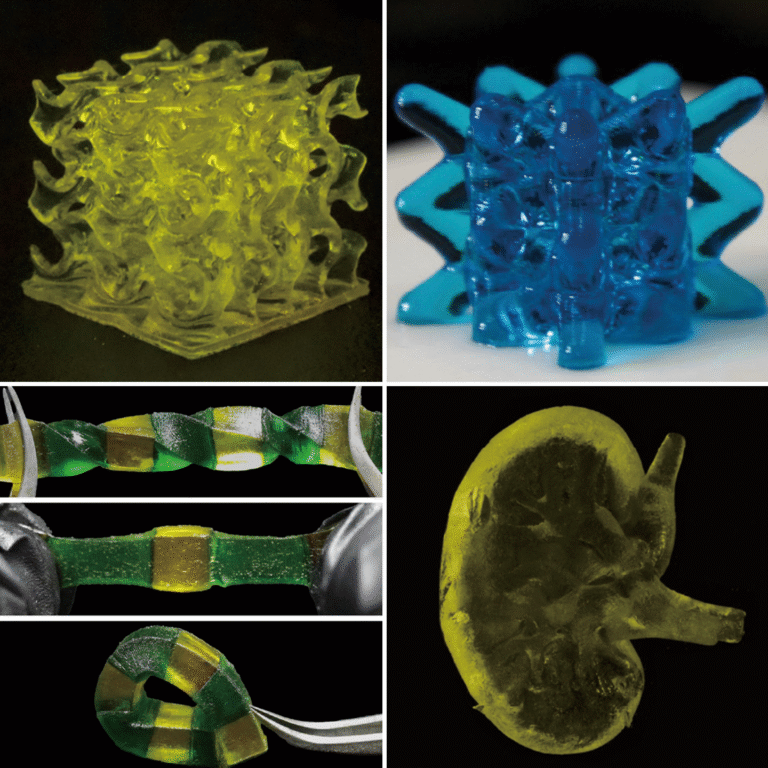

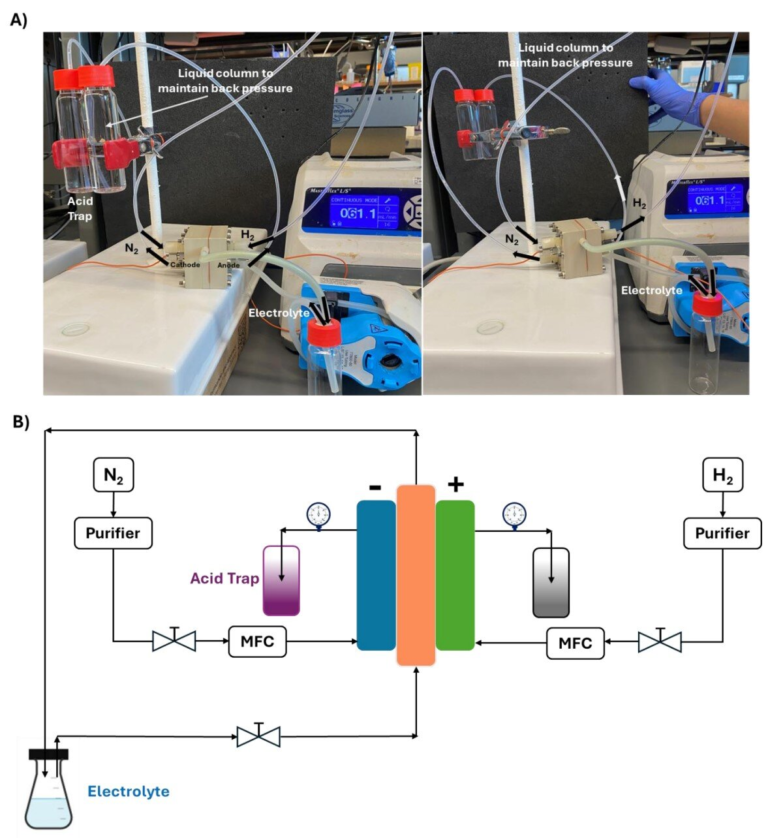

At present, Starrydata has made the most progress in areas such as thermoelectric materials, which convert heat into electricity, and magnetic materials. While the database is still limited in scope, it is already being used by leading researchers around the world as an open resource for materials development.

The research team is continuing to refine their tools, expand coverage to additional materials domains, and raise awareness of the importance of large-scale experimental datasets. As more open-access research becomes available and AI tools improve, the potential impact of projects like Starrydata is expected to grow substantially.

Broader Implications for Materials Science

The integration of LLMs, machine learning, and experimental data curation represents a shift toward a more data-driven materials science ecosystem. Instead of relying solely on intuition and isolated experiments, researchers can increasingly draw insights from collective experimental knowledge accumulated over decades.

While challenges remain, the work behind Starrydata demonstrates that AI-assisted data extraction is not just a theoretical idea but a practical tool already reshaping how scientific knowledge is reused.

Research paper reference:

https://doi.org/10.1080/27660400.2025.2590811