AI Breakthrough Solves a Century-Old Physics Puzzle Using THOR Framework

Researchers from the University of New Mexico (UNM) and Los Alamos National Laboratory (LANL) have developed an artificial intelligence framework called THOR, short for Tensors for High-dimensional Object Representation, that cracks one of the most notoriously difficult computational challenges in physics. According to the team, it solves high-dimensional physics integrals that previously took weeks or even months to compute, and it does so in seconds.

The THOR framework represents a major leap forward in statistical physics, materials science, and computational thermodynamics, all fields that depend on understanding how materials behave under different temperatures, pressures, and atomic arrangements. Let’s unpack what this means, how it works, and why it’s such a big deal.

Understanding the Problem: The Configurational Integral

In statistical mechanics, the configurational integral captures how the interactions between particles shape a material's thermodynamics. It adds up a statistical (Boltzmann) weight for every possible arrangement of the atoms in a system, with low-energy arrangements counting the most. Once you can compute it, you can derive important material properties such as heat capacity, pressure, phase transitions, and structural stability.
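For reference, here is the standard textbook definition. The notation is general and not specific to the THOR paper. It covers N atoms at positions r_1, …, r_N with potential energy U at temperature T:

$$
Z_{\mathrm{conf}} = \int \exp\!\left[-\beta\, U(\mathbf{r}_1, \ldots, \mathbf{r}_N)\right] d\mathbf{r}_1 \cdots d\mathbf{r}_N, \qquad \beta = \frac{1}{k_B T}
$$

$$
F_{\mathrm{conf}} = -k_B T \ln Z_{\mathrm{conf}}, \qquad P = -\left(\frac{\partial F}{\partial V}\right)_{T}, \qquad C_V = -T \left(\frac{\partial^2 F}{\partial T^2}\right)_{V}
$$

In these formulas, F_conf is the configurational part of the Helmholtz free energy F. The kinetic part is known analytically, so all of the information about interatomic interactions sits in the integral. Each position r_i has three components, so the integral runs over 3N dimensions.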

The problem? This integral is incredibly complex. Each atom contributes three coordinates, so a system of N atoms calls for an integral over 3N dimensions, and the cost of evaluating it directly grows exponentially with each added particle. Scientists call this the “curse of dimensionality.” Even the most advanced supercomputers can’t keep up with that growth. In fact, solving these integrals directly for realistic systems with conventional methods could take longer than the age of the universe.
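A back-of-the-envelope count makes that growth concrete. The sketch below rests on illustrative assumptions, none of which come from the THOR study:

- a crude grid of 10 points per coordinate;
- 100 atoms;
- a hypothetical machine that evaluates 10^18 energies per second. This is roughly an exascale operation rate, generously assuming one energy per operation.

```python
# Back-of-the-envelope cost of brute-force grid integration over 3N coordinates.
# All numbers are illustrative assumptions, not values from the THOR study.

SECONDS_PER_YEAR = 365.25 * 24 * 3600
AGE_OF_UNIVERSE_YEARS = 13.8e9


def grid_evaluations(points_per_coordinate: int, n_atoms: int) -> int:
    """Energy evaluations needed for a full tensor-product grid in 3N dimensions."""
    return points_per_coordinate ** (3 * n_atoms)


def runtime_in_universe_ages(evaluations: int, evals_per_second: float) -> float:
    """Wall-clock time expressed in multiples of the age of the universe."""
    years = evaluations / evals_per_second / SECONDS_PER_YEAR
    return years / AGE_OF_UNIVERSE_YEARS


n_evals = grid_evaluations(points_per_coordinate=10, n_atoms=100)
print(len(str(n_evals)) - 1)                                # 300, i.e. 10**300 evaluations
print(f"{runtime_in_universe_ages(n_evals, 1e18):.1e}")    # about 2.3e+264
```

Refining the grid or adding atoms only makes the exponent larger. Faster hardware cannot close a gap of this size.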

For decades, physicists have instead relied on indirect techniques such as Monte Carlo simulations, which sample random atomic configurations, and molecular dynamics, which follows particle motion over time. Both estimate thermodynamic quantities statistically rather than evaluating the integral itself. While valuable, they’re slow, carry statistical uncertainty, and require enormous computing power. That’s why what THOR achieves, solving the configurational integral directly, is revolutionary.
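To see what “statistical uncertainty” means in practice, here is plain Monte Carlo integration. It is the simplest relative of the Metropolis sampling used in real simulations, not that algorithm itself. The sketch applies it to a toy system of independent harmonic coordinates whose configurational integral is known exactly. The following choices are arbitrary and made only for illustration:

- the potential U = |x|²/2;
- the box half-width;
- the sample count.

```python
import numpy as np


def mc_configurational_integral(dim, box_half_width, n_samples, beta=1.0, seed=0):
    """Plain (uniform-sampling) Monte Carlo estimate of the integral of exp(-beta*U)
    for the toy potential U(x) = |x|^2 / 2 over the box [-L, L]^dim.

    Returns (estimate, standard_error)."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(-box_half_width, box_half_width, size=(n_samples, dim))
    weights = np.exp(-beta * 0.5 * np.sum(x**2, axis=1))   # Boltzmann factors
    volume = (2.0 * box_half_width) ** dim
    estimate = volume * weights.mean()
    std_error = volume * weights.std(ddof=1) / np.sqrt(n_samples)
    return estimate, std_error


def exact_harmonic_integral(dim, beta=1.0):
    """Closed form over all space: (2*pi/beta)^(dim/2)."""
    return (2.0 * np.pi / beta) ** (dim / 2)


est, err = mc_configurational_integral(dim=3, box_half_width=5.0, n_samples=200_000)
print(f"MC: {est:.3f} +/- {err:.3f}   exact: {exact_harmonic_integral(3):.3f}")
```

The standard error shrinks only as one over the square root of the sample count, so halving the error costs four times as many samples. With uniform sampling, the variance also balloons as the dimension grows. This is why practical codes use importance sampling and still need long runs.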

The Birth of THOR: AI Meets Tensor Networks

THOR was designed by Boian Alexandrov, a senior AI scientist at Los Alamos, working alongside Dimiter Petsev, a chemical and biological engineering professor at the University of New Mexico. Their idea was to merge two powerful concepts: machine learning and tensor network mathematics.

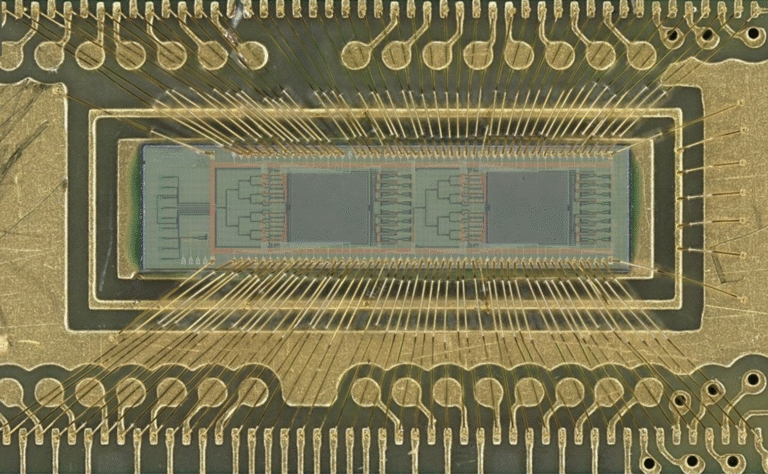

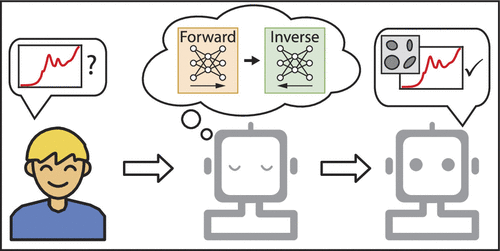

At its core, THOR uses the tensor-train (TT) format. This mathematical structure represents extremely high-dimensional data as a chain of small, linked pieces, compressing it while keeping the approximation error under control. Think of it like breaking an impossibly large Rubik’s cube into smaller, more manageable sections while still capturing the overall structure.

In this framework, THOR doesn’t need to handle every possible atomic configuration individually. Instead, it compresses them into a manageable form using tensor-train cross interpolation. This technique builds the compressed representation by evaluating the integrand only at a carefully chosen subset of the most informative points.

THOR combines these tensor techniques with machine learning interatomic potentials, which are models that predict the energy of any given arrangement of atoms. This lets it evaluate how atoms interact without computing every interaction from first principles. The potentials are trained on accurate reference data from physics simulations or experiments, which lets them fill in the gaps between the configurations they were trained on.
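Real machine learning potentials are neural networks or kernel models built on many-atom descriptors, far richer than anything shown here. The sketch below only illustrates the “trained on reference data” idea with the simplest possible learned model: a linear least-squares fit of a pair energy E(r) ≈ a/r¹² + b/r⁶. The reference energies are synthetic, generated from a Lennard-Jones potential with ε = σ = 1. That potential is an assumption standing in for expensive first-principles data.

```python
import numpy as np


def lennard_jones(r, epsilon=1.0, sigma=1.0):
    """Reference pair energy; a stand-in for costly first-principles data (assumption)."""
    return 4.0 * epsilon * ((sigma / r) ** 12 - (sigma / r) ** 6)


def fit_pair_potential(r_train, e_train):
    """Least-squares fit of E(r) = a * r**-12 + b * r**-6; returns [a, b]."""
    features = np.column_stack([r_train**-12, r_train**-6])   # design matrix
    coeffs, *_ = np.linalg.lstsq(features, e_train, rcond=None)
    return coeffs


def predict(coeffs, r):
    """Evaluate the fitted model at new interatomic separations."""
    return coeffs[0] * r**-12 + coeffs[1] * r**-6


r_train = np.linspace(0.95, 2.5, 40)            # "training" separations
coeffs = fit_pair_potential(r_train, lennard_jones(r_train))
print(coeffs)                                   # close to [4, -4]
print(predict(coeffs, np.array([1.3])), lennard_jones(np.array([1.3])))
```

Because the synthetic data are noise-free and the model form is exact, the fit recovers the generating coefficients. Real training data are noisier and the model form is never exact, which is why potential quality matters for everything downstream.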

The result, according to the researchers: calculations that once required weeks of supercomputer time can now be completed in seconds, without sacrificing accuracy.

Testing the Framework: Copper, Argon, and Tin

The research team validated THOR’s capabilities through a series of benchmark tests. They applied the framework to systems like:

- Crystalline copper (Cu) under varying pressures

- Solid argon (Ar) under high pressure

- Tin (Sn) undergoing a solid-solid phase transition, which involves changing from one crystal structure to another

In all these tests, THOR produced results consistent with traditional simulations from Los Alamos — but it did so over 400 times faster.

To put this in perspective, simulations that might take 2,000–3,000 hours with classical molecular dynamics methods can be finished in a small fraction of that time with THOR. That’s a performance leap that redefines what’s computationally possible in materials science.

Why This Matters: From Supercomputers to Real-Time Discovery

Before THOR, solving complex materials problems often meant weeks of waiting on expensive supercomputers. Now, these same calculations can be run on ordinary workstations. This makes high-level computational materials research more accessible, faster, and significantly more energy-efficient.

Accurately computing the configurational integral helps scientists explore thermodynamic stability, phase boundaries, and mechanical behavior of materials with unprecedented precision. This could lead to breakthroughs in fields like metallurgy, semiconductor design, planetary science, and even fusion energy research.

With faster and more scalable tools, researchers can now explore how materials behave under extreme conditions — such as the high pressures found in planetary cores or the extreme temperatures in nuclear reactors — without waiting weeks for results.

And because THOR integrates seamlessly with modern machine learning potentials, it can evolve as new, more accurate atomic models emerge. This adaptability makes it a future-proof tool in the ever-growing intersection of AI and physical science.

How Tensor Networks Tame Complexity

The secret sauce of THOR lies in how it tackles the “curse of dimensionality.” A tensor is a generalization of vectors and matrices to any number of dimensions. Here, imagine a grid with one axis for every atomic coordinate, where each entry stores the energy (or Boltzmann weight) of the corresponding atomic arrangement.

Filling in every element of that grid directly is a computational nightmare, because the number of entries grows exponentially with the number of coordinates. THOR instead uses tensor-train decomposition, which represents the massive tensor as a chain of much smaller, interconnected tensors (called cores), one per coordinate.
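The decomposition itself can be illustrated with the standard TT-SVD algorithm, which compresses a tensor by a left-to-right sweep of truncated singular value decompositions. TT-SVD needs the full tensor as input, so it only works for small toy cases. THOR’s point is to avoid ever forming that tensor, which is where cross interpolation comes in. The test function sin(x1 + x2 + x3 + x4) is an arbitrary choice whose TT ranks are known to be 2.

```python
import numpy as np


def tt_svd(tensor, rel_eps=1e-10):
    """TT-SVD: split a d-way tensor into cores of shape (r_{k-1}, n_k, r_k)
    by successive truncated SVDs. Singular values below rel_eps * largest are dropped."""
    shape = tensor.shape
    cores, r_prev = [], 1
    remainder = tensor
    for n_k in shape[:-1]:
        mat = remainder.reshape(r_prev * n_k, -1)            # unfold: (left index, rest)
        u, s, vt = np.linalg.svd(mat, full_matrices=False)
        r = max(1, int(np.sum(s > rel_eps * s[0])))          # truncated TT rank
        cores.append(u[:, :r].reshape(r_prev, n_k, r))
        remainder = s[:r, None] * vt[:r, :]                  # pass the rest to the right
        r_prev = r
    cores.append(remainder.reshape(r_prev, shape[-1], 1))
    return cores


def tt_to_full(cores):
    """Contract the chain of cores back into a dense tensor (for checking only)."""
    result = cores[0]
    for core in cores[1:]:
        result = np.tensordot(result, core, axes=([-1], [0]))
    return result.reshape(result.shape[1:-1])


# Toy 4-D grid: f(x1, ..., x4) = sin(x1 + x2 + x3 + x4), 10 points per axis.
x = np.linspace(0.0, 1.0, 10)
grid = np.sin(x[:, None, None, None] + x[None, :, None, None]
              + x[None, None, :, None] + x[None, None, None, :])
cores = tt_svd(grid)
print([c.shape[2] for c in cores[:-1]])    # TT ranks: [2, 2, 2]
print(grid.size, sum(c.size for c in cores))   # 10000 vs 120 stored numbers
```

With fixed ranks, storage grows linearly with the number of coordinates rather than exponentially. That property is what makes the format attractive for 3N-dimensional integrands.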

To make this work efficiently, the researchers introduced a specialized version of tensor train cross interpolation, which selects only the most informative points in the dataset. It’s like being able to describe a whole 3D landscape by looking at a few strategic points rather than mapping every grain of sand.
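The “few strategic points” idea is easiest to see in two dimensions. There, cross (skeleton) approximation rebuilds a low-rank matrix from a handful of its rows and columns: A ≈ A[:, J] · A[I, J]⁻¹ · A[I, :]. TT cross interpolation applies the same idea link by link along the tensor chain. The sketch below is only the matrix version, not THOR’s specialized algorithm.

For clarity, it picks pivots by scanning the whole residual (greedy complete pivoting), which a practical method avoids. What matters is that the final representation uses only the selected rows and columns. The test matrix is a hypothetical example built from three separable terms, so its rank is 3.

```python
import numpy as np


def greedy_cross_pivots(A, max_rank, tol=1e-10):
    """Pick row/column pivots by Gaussian elimination with complete pivoting.
    Returns index lists I, J such that A ~= A[:, J] @ inv(A[I, J]) @ A[I, :]."""
    residual = np.array(A, dtype=float)
    rows, cols = [], []
    scale = np.abs(residual).max()
    for _ in range(max_rank):
        i, j = np.unravel_index(np.argmax(np.abs(residual)), residual.shape)
        if abs(residual[i, j]) <= tol * scale:     # residual is numerically zero
            break
        rows.append(int(i))
        cols.append(int(j))
        # Subtract the rank-1 "cross" through the pivot (one elimination step).
        residual -= np.outer(residual[:, j], residual[i, :]) / residual[i, j]
    return rows, cols


def skeleton(A, rows, cols):
    """Rebuild A from the chosen columns, rows, and their intersection only."""
    C = A[:, cols]
    U = A[np.ix_(rows, cols)]
    R = A[rows, :]
    return C @ np.linalg.solve(U, R)


x = np.linspace(0.0, 1.0, 200)
y = np.linspace(0.0, 2.0, 200)
A = np.sin(x[:, None] + y[None, :]) + (x**2)[:, None] * y[None, :]   # rank 3
rows, cols = greedy_cross_pivots(A, max_rank=10)
approx = skeleton(A, rows, cols)
print(len(rows), np.abs(approx - A).max())   # 3 pivots, error near machine precision
```

Three rows and three columns (1,200 numbers) reproduce all 40,000 entries. Cross methods exploit this kind of saving at every link of a tensor train.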

This process is further enhanced by identifying crystal symmetries, allowing THOR to ignore redundant configurations that behave identically. The end result: precise integrals computed in a fraction of the time, without any noticeable loss in accuracy.
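Once an integrand is in TT form, integrating it is cheap and deterministic. You weight each core by quadrature weights along its own coordinate, then multiply the resulting small matrices together. The sketch rests on several assumptions:

- a hypothetical 1-D harmonic chain, U = Σ xₖ²/2 + Σ (xₖ₊₁ − xₖ)²/2, whose Boltzmann factor happens to have exact TT cores (the classic transfer-matrix trick);
- a trapezoidal grid;
- d = 4 coordinates, small enough that the result can be checked against both brute-force summation and the closed-form Gaussian integral.

In THOR, the cores would instead come from cross interpolation of a machine learning potential.

```python
import numpy as np


def chain_tt_cores(x, d, beta=1.0):
    """Exact TT cores of exp(-beta*U) for the toy chain
    U = sum_k x_k^2/2 + sum_k (x_{k+1} - x_k)^2/2 on a 1-D grid x (needs d >= 2)."""
    n = len(x)
    onsite = np.exp(-beta * x**2 / 2)                            # g(x_i)
    pair = np.exp(-beta * (x[:, None] - x[None, :]) ** 2 / 2)    # K(x_a, x_i)
    eye = np.eye(n)
    cores = [(onsite[:, None] * eye)[None, :, :]]                # shape (1, n, n)
    for _ in range(d - 2):                                       # shape (n, n, n)
        cores.append(pair[:, :, None] * onsite[None, :, None] * eye[None, :, :])
    cores.append((pair * onsite[None, :])[:, :, None])           # shape (n, n, 1)
    return cores


def tt_integrate(cores, w):
    """Integrate a TT tensor: contract each core with quadrature weights w."""
    result = np.ones((1, 1))
    for core in cores:
        result = result @ np.einsum("aib,i->ab", core, w)
    return result[0, 0]


def brute_force_integrate(x, w, d, beta=1.0):
    """Form the full n**d grid (feasible only for tiny d) and sum it."""
    g = np.meshgrid(*([x] * d), indexing="ij")
    U = sum(gk**2 / 2 for gk in g) + sum((g[k + 1] - g[k]) ** 2 / 2 for k in range(d - 1))
    f = np.exp(-beta * U)
    for _ in range(d):
        f = np.tensordot(f, w, axes=([0], [0]))   # integrate out the leading axis
    return float(f)


def exact_integral(d, beta=1.0):
    """Gaussian integral (2*pi/beta)^(d/2) / sqrt(det A), where U = x^T A x / 2."""
    laplacian = 2 * np.eye(d) - np.eye(d, k=1) - np.eye(d, k=-1)
    laplacian[0, 0] = laplacian[-1, -1] = 1.0     # path-graph Laplacian
    A = np.eye(d) + laplacian
    return (2 * np.pi / beta) ** (d / 2) / np.sqrt(np.linalg.det(A))


x = np.linspace(-6.0, 6.0, 31)                   # grid per coordinate (assumption)
h = x[1] - x[0]
w = np.full_like(x, h)
w[0] = w[-1] = h / 2                             # trapezoidal weights
d = 4
z_tt = tt_integrate(chain_tt_cores(x, d), w)
z_brute = brute_force_integrate(x, w, d)
z_exact = exact_integral(d)
print(z_tt, z_brute, z_exact)                    # all three about 8.6149
```

The TT contraction multiplies d small n × n matrices, so its cost grows linearly with d. The brute-force sum needs n**d grid points, which here is 31**4, already close to a million.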

Beyond Physics: What This Means for AI and Science

The implications of THOR extend well beyond statistical mechanics. It represents a broader shift in how artificial intelligence can merge with theoretical physics to handle complex mathematical problems that once seemed unsolvable.

THOR’s approach — compressing high-dimensional problems through tensor networks — could be applied to quantum chemistry, neuroscience, climate modeling, and even financial forecasting, where high-dimensional relationships are common.

In quantum many-body physics, for example, tensor networks are already used to simulate entangled quantum systems. THOR demonstrates that these same mathematical ideas can power breakthroughs in classical systems too, opening a bridge between AI, quantum computing, and statistical physics.

The Road Ahead: Opportunities and Challenges

Despite its success, THOR is still in its early stages. It performs exceptionally well on crystalline solids, where atomic positions and symmetries are well-defined. However, extending it to more disordered systems — like liquids, glasses, or amorphous materials — remains an open challenge.

As the complexity of the material increases, the tensor-train ranks may grow too quickly, limiting the compression efficiency. These ranks are the sizes of the links between neighboring cores, and they set how much data must be stored. Researchers are exploring ways to control this “rank explosion” while maintaining accuracy.
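TT ranks are the ranks of the matrices obtained by splitting a tensor’s coordinates into a “left” and a “right” group. A two-variable toy therefore already shows why structure matters. In the sketch below, a smooth correlation kernel stands in, very loosely and as an assumption, for well-ordered crystalline data. A random matrix caricatures data with no exploitable structure. The tolerance 1e-8 is an arbitrary choice.

```python
import numpy as np


def numerical_rank(matrix, rel_tol=1e-8):
    """Count singular values above rel_tol times the largest one."""
    s = np.linalg.svd(matrix, compute_uv=False)
    return int(np.sum(s > rel_tol * s[0]))


n = 200
x = np.linspace(0.0, 1.0, n)
smooth = np.exp(-(x[:, None] - x[None, :]) ** 2)                 # smooth, structured
disordered = np.random.default_rng(0).standard_normal((n, n))    # no structure
print(numerical_rank(smooth), numerical_rank(disordered))        # small vs. n
```

The smooth kernel needs only a handful of ranks, while the random matrix needs all 200. In a tensor train, storage at every link scales with the square of such ranks, so this difference decides whether compression pays off.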

Another challenge is the dependence on machine learning potentials. These models must be trained carefully on high-quality data; otherwise, inaccuracies in the potential will propagate into the final results. Future work may combine THOR with adaptive learning, where the system can improve its own potentials as it encounters new configurations.

Nonetheless, the research community is optimistic. By combining first-principles physics, tensor mathematics, and AI, THOR has already achieved something thought impossible for over a century.

A Historical Perspective: From Monte Carlo to Machine Learning

To appreciate how big this leap is, it’s worth looking back. The Monte Carlo method was developed at Los Alamos in the late 1940s by Stanislaw Ulam, John von Neumann, Nicholas Metropolis, and colleagues, in the wake of the Manhattan Project. It was a foundational revolution in computational statistical physics, allowing scientists to approximate difficult integrals using random sampling, a method still used everywhere today.

Now, almost 80 years later, Los Alamos scientists have once again changed the game. By fusing AI with tensor networks, they’ve replaced stochastic approximation with direct, deterministic computation of the configurational integral.

This could eventually reshape how we approach not just material simulations but any field that suffers from the curse of dimensionality — from cosmology to drug discovery.

Open Access and Collaboration

The THOR framework is open-source and publicly available on GitHub, encouraging other researchers to experiment, validate, and expand upon it. The team also plans to extend THOR to systems involving defects, impurities, and multi-component alloys.

Because it works efficiently with machine learning interatomic models, THOR could soon integrate into widely used simulation packages, making it a powerful plug-in for computational materials scientists worldwide.

In short, THOR has the potential to democratize high-end materials computation, putting once-impossible calculations into the hands of any researcher with a decent workstation.

Final Thoughts

The development of THOR isn’t just a technical achievement — it’s a glimpse into a new era of computational science. By breaking a century-old barrier in statistical mechanics, it shows how AI can do more than recognize patterns or write text — it can help us unlock the deepest mathematical problems of nature.

As computing and machine learning continue to advance, frameworks like THOR could become central to everything from designing advanced alloys to predicting material performance in extreme environments. It’s an exciting moment for physics, and for science as a whole.

Research Reference:

Breaking the curse of dimensionality: Solving configurational integrals for crystalline solids by tensor networks

Published in Physical Review Materials (2024)

https://link.aps.org/doi/10.1103/PhysRevMaterials.8.095401