AI Learns to Spot Exploding Stars with Just 15 Examples

A new study published in Nature Astronomy (2025) has shown that Google’s Gemini, a large language model (LLM), can successfully identify exploding stars and other celestial events after seeing only 15 examples. This breakthrough could reshape how astronomers detect cosmic events, making the process faster, cheaper, and more transparent.

The research, led by Fiorenzo Stoppa, Turan Bulmus, Stephen Smartt, and colleagues from the University of Oxford, Radboud University, and Google DeepMind, demonstrates how a general-purpose AI can handle a specialized scientific task with minimal training data.

What the Researchers Did

The team tested Gemini on three major astronomical survey datasets:

- Pan-STARRS (Panoramic Survey Telescope and Rapid Response System)

- MeerLICHT (a South African-Dutch telescope whose name means “more light”)

- ATLAS (Asteroid Terrestrial-impact Last Alert System)

Each of these telescopes scans the night sky continuously, capturing enormous numbers of images. Astronomers then need to figure out which of these images show something real — like a supernova or variable star — and which ones are just false signals caused by noise, cosmic rays, or satellite trails.

Traditionally, this requires specialized neural networks that must be trained on thousands of examples, often tailored to each telescope’s data. This new study took a different route: instead of building another domain-specific neural net, the researchers prompted Gemini, a general LLM that already understands images and text, with just 15 labeled examples.

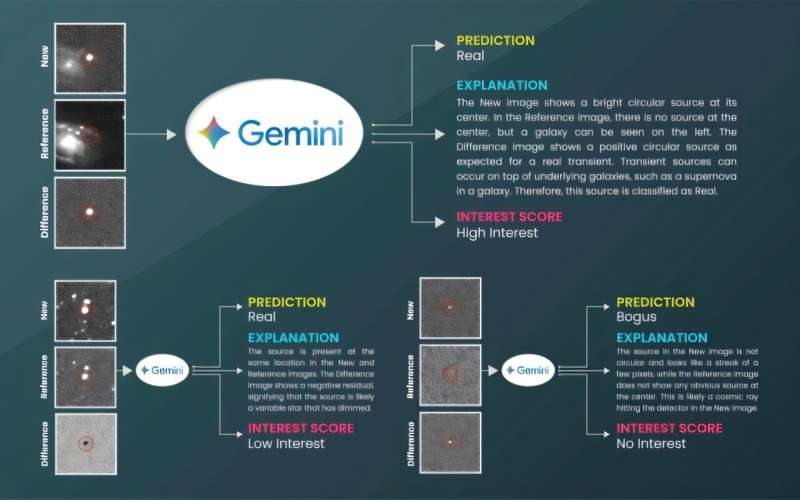

Each example included three images:

- A new image (showing a possible transient),

- A reference image (of the same patch of sky taken earlier), and

- A difference image (created by subtracting one from the other to reveal changes).
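
To make the idea of a difference image concrete, here is a toy sketch in plain Python. It assumes the subtraction is "new minus reference" on tiny hand-written pixel grids; the function name and the pixel values are invented for illustration, and real survey pipelines also align the images and match their blur before subtracting.

```python
# Toy illustration of difference imaging on tiny 2-D pixel grids.
# Assumption: difference = new - reference, with no alignment or
# blur matching (real pipelines do both before subtracting).

def difference_image(new, reference):
    """Subtract the reference from the new image, pixel by pixel."""
    return [
        [n - r for n, r in zip(new_row, ref_row)]
        for new_row, ref_row in zip(new, reference)
    ]


reference = [
    [10, 10, 10],
    [10, 50, 10],  # a galaxy that is present in both images
    [10, 10, 10],
]
new = [
    [10, 10, 90],  # a new bright source in the top-right corner
    [10, 50, 10],
    [10, 10, 10],
]

diff = difference_image(new, reference)
for row in diff:
    print(row)
# [0, 0, 80]
# [0, 0, 0]
# [0, 0, 0]
```

The unchanged galaxy cancels out, and only the new source survives in the difference image.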

The prompts told Gemini to classify each candidate as “No interest”, “Low interest”, or “High interest”, and to describe why it made that choice.
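
A few-shot prompt of this kind could be assembled roughly as follows. This is a sketch under stated assumptions, not the authors' actual prompt or the Gemini API: the `Example` class, the file names, the instruction wording, and the dictionary layout of each prompt part are all hypothetical. Only the three labels and the three-image structure come from the study as described above.

```python
from dataclasses import dataclass

# The three labels named in the article.
LABELS = ("No interest", "Low interest", "High interest")


@dataclass
class Example:
    """One labelled candidate; all field names are hypothetical."""
    new_path: str
    reference_path: str
    difference_path: str
    label: str
    explanation: str


def image_parts(new_path, reference_path, difference_path):
    """Return the three images of a candidate as tagged prompt parts."""
    return [
        {"type": "image", "role": "new", "path": new_path},
        {"type": "image", "role": "reference", "path": reference_path},
        {"type": "image", "role": "difference", "path": difference_path},
    ]


def build_prompt(examples, candidate):
    """Assemble instruction, labelled examples, then the unlabelled candidate."""
    for ex in examples:
        if ex.label not in LABELS:
            raise ValueError(f"unknown label: {ex.label!r}")
    parts = [{
        "type": "text",
        "text": "Classify the final candidate as one of: "
                + ", ".join(LABELS) + ". Explain your choice.",
    }]
    for i, ex in enumerate(examples, start=1):
        parts.append({"type": "text", "text": f"Example {i}"})
        parts.extend(image_parts(ex.new_path, ex.reference_path, ex.difference_path))
        parts.append({"type": "text",
                      "text": f"Label: {ex.label}\nReason: {ex.explanation}"})
    parts.append({"type": "text", "text": "Candidate to classify"})
    parts.extend(image_parts(*candidate))
    return parts


# Two examples for brevity; the study used 15.
examples = [
    Example("ex1_new.png", "ex1_ref.png", "ex1_diff.png",
            "High interest", "Point source in the difference image only."),
    Example("ex2_new.png", "ex2_ref.png", "ex2_diff.png",
            "No interest", "Streak consistent with a satellite trail."),
]
prompt = build_prompt(examples, ("cand_new.png", "cand_ref.png", "cand_diff.png"))
print(len(prompt))  # 1 instruction + 2 * 5 example parts + 4 candidate parts = 15
```

Keeping the prompt as an ordered list of parts makes it easy to swap examples in and out without touching the instruction text.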

Accuracy and Results

The results were striking. Even with just 15 examples, Gemini reached an average accuracy of about 93% across the three datasets. Specifically:

- ATLAS: 91.9% accuracy

- MeerLICHT: 93.4% accuracy

- Pan-STARRS: 94.1% accuracy
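
As a quick arithmetic check, the three figures above can be averaged directly. This assumes a simple unweighted mean across datasets; the article does not say whether the reported average weights each dataset by its size.

```python
# Per-dataset accuracies as quoted in the article (percent).
accuracies = {"ATLAS": 91.9, "MeerLICHT": 93.4, "Pan-STARRS": 94.1}

# Assumption: unweighted mean, each dataset counted equally.
mean_accuracy = sum(accuracies.values()) / len(accuracies)
print(f"{mean_accuracy:.1f}%")  # 93.1%
```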

For context, traditional deep-learning models trained on thousands of images achieve similar performance but require extensive training and computational power.

Six months later, after Gemini had received algorithmic updates, the researchers ran the same tests again — and it maintained similar or even slightly improved accuracy. This consistency suggests that LLMs can adapt and remain robust across updates, an encouraging sign for long-term use in astronomy.

Why This Matters

Astronomical sky surveys generate millions of alerts each night, and only a small fraction correspond to genuine cosmic events like supernovae, tidal disruption events, or variable stars. The rest are artefacts. Sorting through them quickly is a massive challenge.

For years, astronomers have been training machine learning models to separate real from bogus signals, but these models tend to act as black boxes — they output predictions without clear explanations. Gemini’s approach changes that.

Not only did it classify images correctly, but it also provided natural-language explanations describing what it saw. For instance, it might say that a bright circular object appeared in the difference image while the reference image showed nothing, implying a real transient event.

This kind of explainability is rare in AI-based astronomy. It allows scientists to trust and verify the AI’s reasoning, reducing the mystery behind the decision-making process.

A Built-In Self-Check System

Another clever feature was how the researchers used a second LLM to act as a “reviewer.” After Gemini classified and explained each event, the second model assessed whether Gemini’s reasoning was coherent. If it wasn’t, that classification could be flagged for human review.

This two-model setup supports a human-in-the-loop workflow: classifications with coherent reasoning pass through automatically, while flagged ones go to an astronomer. That balance is crucial for astronomy, where false positives can waste precious telescope time.
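
The routing logic behind such a reviewer step might look like the sketch below. The reviewer here is a deliberately crude placeholder (a word-count rule) standing in for the second LLM, and the class, function names, and candidate IDs are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Classification:
    candidate_id: str
    label: str
    explanation: str


def toy_reviewer(result):
    """Placeholder for the second model: a crude word-count check.

    A real reviewer would be another LLM call; this rule is invented
    purely so the example runs.
    """
    return len(result.explanation.split()) >= 5


def triage(results, reviewer):
    """Split results into accepted ones and ones flagged for a human."""
    accepted, flagged = [], []
    for result in results:
        if reviewer(result):
            accepted.append(result)
        else:
            flagged.append(result)
    return accepted, flagged


results = [
    Classification("cand-001", "High interest",
                   "New point source in the difference image, absent in reference."),
    Classification("cand-002", "No interest", "Looks bad."),
]
accepted, flagged = triage(results, toy_reviewer)
print([r.candidate_id for r in accepted])  # ['cand-001']
print([r.candidate_id for r in flagged])   # ['cand-002']
```

Passing the reviewer in as a function keeps the routing independent of whichever model does the reviewing.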

The Power of Few-Shot Learning

The real highlight of this study is the success of few-shot learning — getting powerful results with only a handful of examples.

Instead of months of data labeling and model training, Gemini learned from a few annotated samples and some carefully written prompts. The researchers found that the phrasing and structure of prompts mattered — reordering examples or slightly changing the wording affected accuracy.
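
One way to probe this kind of order sensitivity is to score the same test set under every ordering of the examples. The sketch below does that with a toy classifier that is order-sensitive by construction: it simply repeats the label of the last example it saw. That stand-in, the example names, and the tiny test set are all invented for illustration and say nothing about how Gemini itself behaves.

```python
from itertools import permutations

# Three labelled examples (hypothetical) and a small labelled test set.
examples = [("ex-a", "No interest"), ("ex-b", "Low interest"), ("ex-c", "High interest")]
test_labels = ["High interest", "High interest", "No interest", "Low interest"]


def toy_classifier(ordered_examples, n_candidates):
    """Deliberately order-sensitive stand-in for an LLM call.

    It repeats the label of the last example it was shown, mimicking an
    exaggerated recency bias.
    """
    last_label = ordered_examples[-1][1]
    return [last_label] * n_candidates


def accuracy(predictions, truth):
    """Fraction of predictions that match the true labels."""
    correct = sum(p == t for p, t in zip(predictions, truth))
    return correct / len(truth)


# Score every possible ordering of the examples.
scores = {}
for order in permutations(examples):
    names = tuple(name for name, _ in order)
    scores[names] = accuracy(toy_classifier(order, len(test_labels)), test_labels)

for names, score in scores.items():
    print(names, score)
print("spread:", max(scores.values()) - min(scores.values()))  # spread: 0.25
```

With 15 examples there are far too many orderings to enumerate, so a real test would sample a handful of random shuffles instead.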

This finding opens up possibilities for quickly adapting AI models to new telescopes or different types of celestial events without retraining from scratch.

Beyond Astronomy: Why This Matters for AI

While this research focuses on stars and galaxies, its implications stretch far beyond astronomy. It shows that general-purpose AI models can be repurposed for scientific discovery — provided they are guided with precise examples and clear instructions.

This could apply to other data-heavy fields, such as medical imaging, environmental monitoring, or particle physics, where scientists often face the same problem: separating meaningful signals from background noise.

Gemini’s success shows that with proper prompting, multimodal LLMs — models that process both images and text — can handle specialized scientific problems previously thought to require custom-built systems.

What Are Exploding Stars Anyway?

To appreciate why this matters, it helps to understand what these “exploding stars” are. In astronomy, the term usually refers to supernovae — cataclysmic explosions marking the death of massive stars.

When a star runs out of nuclear fuel, its core collapses under gravity. If it’s massive enough, this collapse triggers a shockwave that blasts the star’s outer layers into space, creating a supernova that can outshine entire galaxies for weeks.

Supernovae are crucial to astronomy because they:

- Forge heavy elements like gold, silver, and iron.

- Help measure cosmic distances (Type Ia supernovae serve as “standard candles”).

- Influence the formation of new stars and planets.

Identifying them quickly is essential, since astronomers want to capture their evolution, from the first burst of light to the fading remnants. That’s where AI steps in. With surveys like the Vera C. Rubin Observatory’s LSST expected to generate millions of alerts nightly, automated classification systems are not just helpful; they’re necessary.

The Bigger Picture for Astronomy

This research marks a step toward interpretable and scalable AI tools for space science. Future applications could include:

- Prioritizing telescope time by ranking events by “interest level.”

- Detecting entirely new types of transients that don’t fit known patterns.

- Integrating LLMs with robotic telescopes to enable faster follow-ups.

- Using AI onboard spacecraft or lunar robots to make quick decisions without Earth’s guidance.
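
The first item, ranking events by interest level, is simple to sketch. The candidate IDs and the priority mapping below are hypothetical; only the three label names come from the study.

```python
# Lower number = higher priority for follow-up (assumed ordering).
PRIORITY = {"High interest": 0, "Low interest": 1, "No interest": 2}

candidates = [
    ("cand-1", "Low interest"),
    ("cand-2", "High interest"),
    ("cand-3", "No interest"),
    ("cand-4", "High interest"),
]

# sorted() is stable, so candidates with the same label keep their arrival order.
ranked = sorted(candidates, key=lambda c: PRIORITY[c[1]])
print([name for name, _ in ranked])  # ['cand-2', 'cand-4', 'cand-1', 'cand-3']
```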

Because the model can reason in natural language, it could also assist citizen scientists, allowing non-experts to help in classifying astronomical data using AI explanations they can actually understand.

Challenges Ahead

Despite its success, the method has limitations. Gemini’s performance was tested only on specific datasets with familiar types of transients. Unusual or never-before-seen phenomena could still confuse the model.

There’s also the issue of dataset differences — telescopes have varying resolutions and noise levels. The study noted that the model handled these differences surprisingly well, but scaling to all telescopes will require further work.

Finally, while the AI can explain its decisions, those explanations still come from statistical pattern recognition — not true understanding. Human astronomers will continue to play a key role in confirming discoveries and interpreting the science behind them.

The Takeaway

This research is a powerful example of how AI is transforming astronomy. With only 15 examples, a general-purpose model like Gemini can perform image classification tasks that once required huge datasets and months of work. Even more importantly, it does so while explaining its reasoning in plain language.

In the near future, astronomers may use AI systems not only to sift through data but also as collaborators, sharing a dialogue about what’s in the sky.

Research Reference: Textual interpretation of transient image classifications from large language models – Nature Astronomy (2025)