Supercomputer-Powered AI Learns to Decode the Language of Biomolecules

A group of scientists at the University of Glasgow has achieved something remarkable — they’ve trained an artificial intelligence model to understand how proteins “talk” to each other. The model, called PLM-Interact, was built using one of the UK’s most powerful supercomputers, and it’s opening new doors in understanding diseases, viruses, and the fundamental language of life itself.

This study, recently published in Nature Communications (2025), shows how the team repurposed a supercomputer originally used by physicists to study the universe and turned it toward biology. The result: an AI that can predict how proteins interact, how mutations affect these interactions, and even how viruses engage with human cells.

What Exactly Is PLM-Interact?

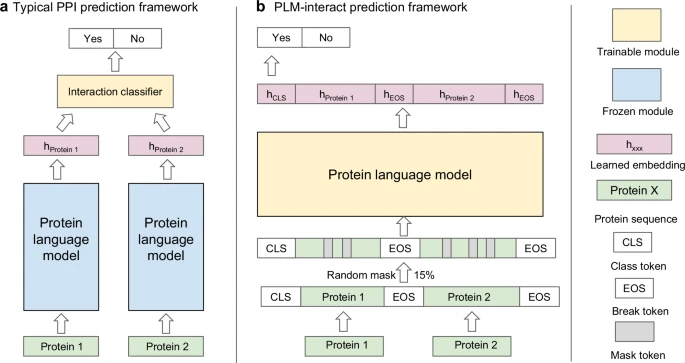

PLM-Interact stands for Protein Language Model–Interact. It’s a large language model (LLM) — the same general AI framework used to power chatbots and translation systems — but instead of learning human languages, this one learns the language of biomolecules.

Proteins are made of long chains of amino acids. Just as letters combine into words and sentences, amino acids combine into sequences that fold into shapes and interact to carry out functions in biological systems. Understanding how two proteins interact (a process called protein-protein interaction, or PPI) is key to understanding how cells work, and how things go wrong in diseases.
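Protein language models read an amino acid sequence much like a sentence, with one token per residue. The toy sketch below shows how a pair of proteins could be packed into a single input so that a model sees both partners at once. It is an illustration, not PLM-Interact's actual tokenizer: the vocabulary layout and the special tokens `<cls>`, `<sep>` and `<eos>` are hypothetical choices made here.

```python
# Toy tokenizer for a protein pair (illustrative assumption, not PLM-Interact's code).
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard amino acids
SPECIAL = ["<cls>", "<sep>", "<eos>"]  # hypothetical special tokens
VOCAB = {tok: i for i, tok in enumerate(SPECIAL + list(AMINO_ACIDS))}


def encode_pair(protein_a: str, protein_b: str) -> list[int]:
    """Join two amino acid sequences into one list of token IDs.

    Layout (assumed): <cls> A-residues <sep> B-residues <eos>
    """
    tokens = ["<cls>"] + list(protein_a) + ["<sep>"] + list(protein_b) + ["<eos>"]
    unknown = [t for t in tokens if t not in VOCAB]
    if unknown:
        raise ValueError(f"non-standard residues: {sorted(set(unknown))}")
    return [VOCAB[t] for t in tokens]
```

For example, `encode_pair("MKV", "GA")` returns `[0, 13, 11, 20, 1, 8, 3, 2]`: both sequences in one input, separated by the `<sep>` ID (1).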

The problem is that finding PPIs experimentally is slow, expensive, and complex: it can take months or years of lab work to confirm how two proteins connect. That’s where PLM-Interact comes in. It uses machine learning to predict these interactions far faster than lab experiments, and more accurately than existing computational methods.

How the Model Was Built

To train this molecular translator, the Glasgow team tapped into DiRAC, the UK’s high-performance computing facility for theoretical physics. They used Tursa, a GPU-powered cluster originally built for physics simulations. Instead of modeling the universe, it was now analyzing proteins, training a model with 650 million parameters.
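For a rough sense of scale, the sketch below estimates the memory needed just to store 650 million parameters. The byte counts are assumptions, not figures from the study: 4 bytes per parameter for 32-bit weights, and a common rule of thumb of about 16 bytes per parameter when training with the Adam optimizer (weights, gradients and Adam's two moment estimates). Activations, which grow with sequence length and batch size, are not counted.

```python
# Back-of-the-envelope memory estimate for model parameters.
# Bytes-per-parameter values are assumed rules of thumb, not values from the study.

PARAMS = 650_000_000  # model size reported for PLM-Interact

BYTES_FP32_WEIGHTS = 4    # one 32-bit float per parameter
BYTES_ADAM_TRAINING = 16  # weights + gradients + two Adam moment estimates (approx.)


def memory_gb(n_params: int, bytes_per_param: int) -> float:
    """Memory in gigabytes (10**9 bytes) for n_params at bytes_per_param each."""
    return n_params * bytes_per_param / 1e9


if __name__ == "__main__":
    print(f"weights only: {memory_gb(PARAMS, BYTES_FP32_WEIGHTS):.1f} GB")
    print(f"training state (excl. activations): {memory_gb(PARAMS, BYTES_ADAM_TRAINING):.1f} GB")
```

This prints about 2.6 GB for the weights and 10.4 GB of training state. The parameters alone fit on a single modern data-centre GPU, so much of the demand for a cluster likely comes from activations and from passing hundreds of thousands of paired sequences through the model many times.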

The model was trained on more than 421,000 pairs of human proteins with known interaction data. The team then extended training to 22,383 human–virus protein interactions, involving nearly 6,900 proteins (5,882 human and 996 viral).

This enormous dataset allowed the model to learn the “grammar” of how proteins communicate — how one protein binds to another, how shape and charge influence bonding, and how tiny mutations can change everything.

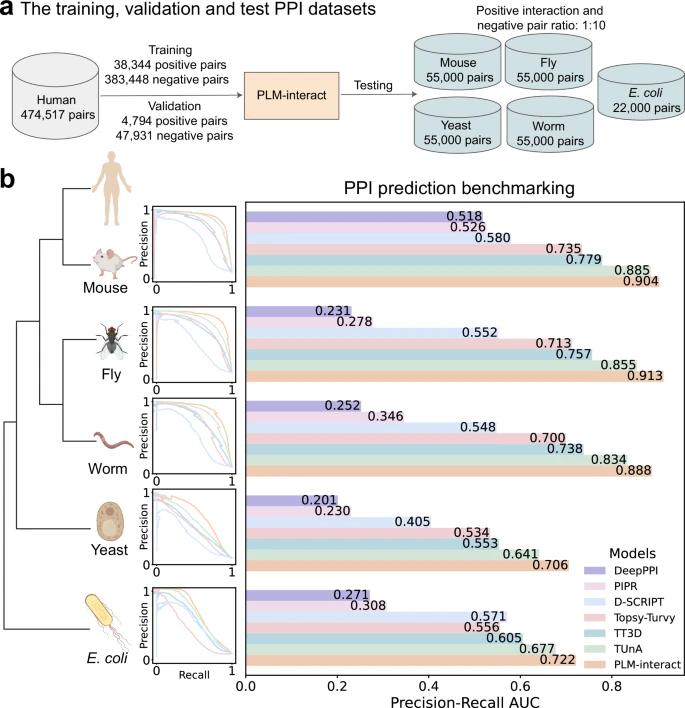

b) Taxonomic tree of the training and test species, aligned with each model’s precision–recall curve and AUPR (area under the precision–recall curve) benchmark. Predicted interaction probability distributions appear in Supplementary Fig. 3. Species icons created in BioRender (Liu, D., 2025). Source: Nature Communications (2025)

Outperforming the Competition

The results speak volumes. PLM-Interact predicted protein interactions with 16% to 28% higher accuracy than other top AI protein models. When tested against tools like Google DeepMind’s AlphaFold3, which has been a major name in structural biology, PLM-Interact showed stronger results in specific interaction tests.
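The benchmark in the figure caption above compares models by AUPR, the area under the precision–recall curve, which rewards a model for placing truly interacting pairs at the top of its ranked predictions. Below is a minimal sketch of one standard way to compute it, average precision (this matches scikit-learn's `average_precision_score` when no scores are tied); it is not necessarily the exact procedure used in the study. The article does not say whether the 16% to 28% gains are relative or absolute, so the `relative_improvement` helper assumes a relative reading.

```python
def average_precision(labels: list[int], scores: list[float]) -> float:
    """Area under the precision-recall curve, computed as average precision.

    labels: 1 for a truly interacting pair, 0 for a non-interacting pair.
    scores: predicted interaction probability for each pair.
    Tied scores keep their input order (a simplification).
    """
    total_pos = sum(labels)
    if total_pos == 0:
        raise ValueError("need at least one positive pair")
    # Rank pairs from most to least confident prediction.
    ranked = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    hits, ap = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            ap += hits / rank  # precision at each point where recall increases
    return ap / total_pos


def relative_improvement(new: float, baseline: float) -> float:
    """Percentage improvement of `new` over `baseline` (assumed relative reading)."""
    return 100.0 * (new - baseline) / baseline
```

For example, `average_precision([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1])` gives (1/1 + 2/3) / 2 ≈ 0.833, and `relative_improvement(0.75, 0.5)` returns `50.0`.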

For example, for five crucial protein-protein interactions involved in key biological processes, including RNA polymerization and protein transport, PLM-Interact correctly predicted all five. AlphaFold3, by contrast, correctly identified only one.

This improvement isn’t just about bragging rights. It shows that AI is learning to go beyond predicting protein structure (what proteins look like) to predicting behavior (how they interact and function). That’s a major leap forward.

Predicting the Impact of Mutations

Beyond identifying whether two proteins interact, PLM-Interact can also predict what happens when one of them mutates. Mutations (changes in DNA that can alter a protein’s amino acid sequence) are at the root of many diseases, including cancers and genetic disorders.

PLM-Interact can model whether a mutation will strengthen or weaken a protein interaction, offering insights into whether that mutation might cause disease or block an essential biological process.
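One simple way to frame this question, assuming a model that outputs an interaction probability for a pair of sequences, is to score the original (wild-type) pair and the mutated pair and compare the two. The sketch below does that with a pluggable scoring function. `score` is a hypothetical stand-in for a trained model, and this is not presented as PLM-Interact's own mutation-effect procedure.

```python
from typing import Callable

# A scorer maps (protein_a, protein_b) to a predicted interaction probability.
# Hypothetical placeholder type: in practice this would wrap a trained model.
Scorer = Callable[[str, str], float]


def apply_point_mutation(seq: str, position: int, new_residue: str) -> str:
    """Return seq with the residue at 1-based `position` replaced by new_residue."""
    if not 1 <= position <= len(seq):
        raise IndexError("position out of range")
    if len(new_residue) != 1:
        raise ValueError("a point mutation replaces exactly one residue")
    return seq[: position - 1] + new_residue + seq[position:]


def mutation_effect(score: Scorer, protein_a: str, protein_b: str,
                    position: int, new_residue: str) -> float:
    """Change in predicted interaction probability after mutating protein_a.

    Positive: the mutation is predicted to strengthen the interaction.
    Negative: the mutation is predicted to weaken it.
    """
    wild_type = score(protein_a, protein_b)
    mutant = score(apply_point_mutation(protein_a, position, new_residue), protein_b)
    return mutant - wild_type


if __name__ == "__main__":
    # Toy scorer for demonstration only: fraction of lysine (K) residues in protein_a.
    def toy_score(a: str, b: str) -> float:
        return a.count("K") / len(a)

    print(mutation_effect(toy_score, "MKVA", "GG", 2, "A"))  # -0.25
```

The toy scorer has no biological meaning; it only exercises the code. Keeping the scorer as a parameter means the same comparison works with any model that returns a probability for a pair.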

This feature could become incredibly valuable in personalized medicine, where doctors and researchers look at a patient’s unique genetic mutations to design targeted treatments. Knowing in advance how a mutation might affect cellular communication could save both time and lives.

Understanding Virus–Host Interactions

The team also explored how PLM-Interact handles virus–host interactions — in other words, how viruses hijack human proteins to infect cells. During the COVID-19 pandemic, scientists realized how critical it is to understand these interactions quickly.

By feeding the model thousands of examples of human and viral proteins, PLM-Interact learned to predict how viral proteins attach to human ones. This could eventually help researchers identify which viruses have the potential to cause outbreaks or even pandemics, long before they emerge in the real world.

The researchers behind the model hope that in the future, this technology could be used to map viral threats, find drug targets, and speed up the development of vaccines or antivirals.

The People Behind the Project

PLM-Interact is the result of collaboration between biologists, computer scientists, and physicists at the University of Glasgow. The project was led by Dr. Ke Yuan from the School of Cancer Sciences, Prof. Craig Macdonald from the School of Computing Science, and Prof. David L. Robertson from the MRC–University of Glasgow Centre for Virus Research (CVR).

The first author of the paper, Dan Liu, developed the model as part of their PhD work — a clear example of how interdisciplinary training and access to cutting-edge technology can lead to major breakthroughs.

Why Protein-Protein Interactions Matter

Proteins are the workhorses of the cell. They carry out almost every function you can imagine — from building structures and transporting molecules to defending the body from pathogens.

When two proteins interact, they often trigger biological pathways. For example, one protein might signal another to start copying DNA, or to trigger immune responses. But when these interactions go wrong — due to a mutation, infection, or chemical imbalance — diseases can emerge.

Cancers, Alzheimer’s disease, and many genetic disorders are linked to miscommunication between proteins. So, the ability to map and predict these interactions is like getting a blueprint of how life operates at the molecular level.

The Role of Supercomputing in Modern Biology

Training PLM-Interact required massive computational power, which is why the team turned to the DiRAC supercomputer. Interestingly, DiRAC was originally built for theoretical physics, helping researchers simulate galaxies and subatomic particles.

Repurposing that technology for biology shows how scientific fields are increasingly overlapping. Supercomputing has already transformed astrophysics, and now it’s doing the same for biomedicine.

By providing access to high-performance GPUs, technical support, and software engineering expertise, DiRAC allowed the Glasgow team to train their model much faster and more efficiently than would otherwise be possible.

What’s Next for PLM-Interact

The researchers aren’t stopping here. They plan to expand the model’s dataset, integrate more types of biological data, and make predictions about other kinds of biomolecular interactions, such as protein–RNA or protein–DNA binding.

The ultimate goal is to create a system that can predict molecular behavior at scale, potentially even spotting future viral threats or identifying new treatments before clinical trials begin.

While PLM-Interact is still primarily a research tool, its implications stretch across medicine, virology, genetics, and drug discovery. It’s a clear example of how AI and supercomputing are reshaping biology.

The Bigger Picture

This study is part of a growing trend in AI-driven biology. Over the past few years, models like AlphaFold, ProtT5, and now PLM-Interact have shown that AI can uncover biological patterns that were once invisible to humans.

These models aren’t replacing experiments — they’re making them smarter. By narrowing down possibilities and highlighting the most likely interactions, scientists can focus their lab work on the most promising leads.
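In practice, narrowing down possibilities can be as simple as ranking candidate pairs by predicted interaction probability and sending only the strongest few for lab testing. A minimal sketch, assuming predictions are already available as a dictionary; the protein names and the 0.5 cut-off are hypothetical.

```python
import heapq


def shortlist(predictions: dict[tuple[str, str], float], k: int,
              min_prob: float = 0.5) -> list[tuple[str, str]]:
    """Return up to k protein pairs with the highest predicted interaction probability.

    Pairs scoring below min_prob (an assumed cut-off) are dropped first.
    """
    eligible = {pair: p for pair, p in predictions.items() if p >= min_prob}
    return heapq.nlargest(k, eligible, key=eligible.get)


if __name__ == "__main__":
    # Hypothetical predictions for illustration only.
    predictions = {
        ("viral_1", "human_A"): 0.91,
        ("viral_1", "human_B"): 0.42,
        ("viral_2", "human_A"): 0.77,
        ("viral_2", "human_C"): 0.58,
    }
    print(shortlist(predictions, k=2))
    # [('viral_1', 'human_A'), ('viral_2', 'human_A')]
```

Using `heapq.nlargest` avoids sorting every candidate when only a handful are needed, which matters if a model scores millions of possible pairs.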

The hope is that in the coming years, tools like PLM-Interact will be standard in labs around the world, helping researchers tackle diseases faster, predict viral mutations earlier, and understand life more deeply.

Research Paper: PLM-interact: Extending Protein Language Models to Predict Protein–Protein Interactions (Nature Communications, 2025)