AI Research Reveals How Covert Attention Works and Uncovers Previously Unknown Neuron Types

Understanding how the brain focuses on important information without moving the eyes has long been a challenge in neuroscience. This subtle ability, known as covert attention, allows humans and animals to mentally prioritize parts of a visual scene while their gaze remains fixed. A new study now shows that artificial intelligence can do more than mimic this behavior—it can actually help explain how it emerges in the brain and even point scientists toward new types of neurons that were previously overlooked.

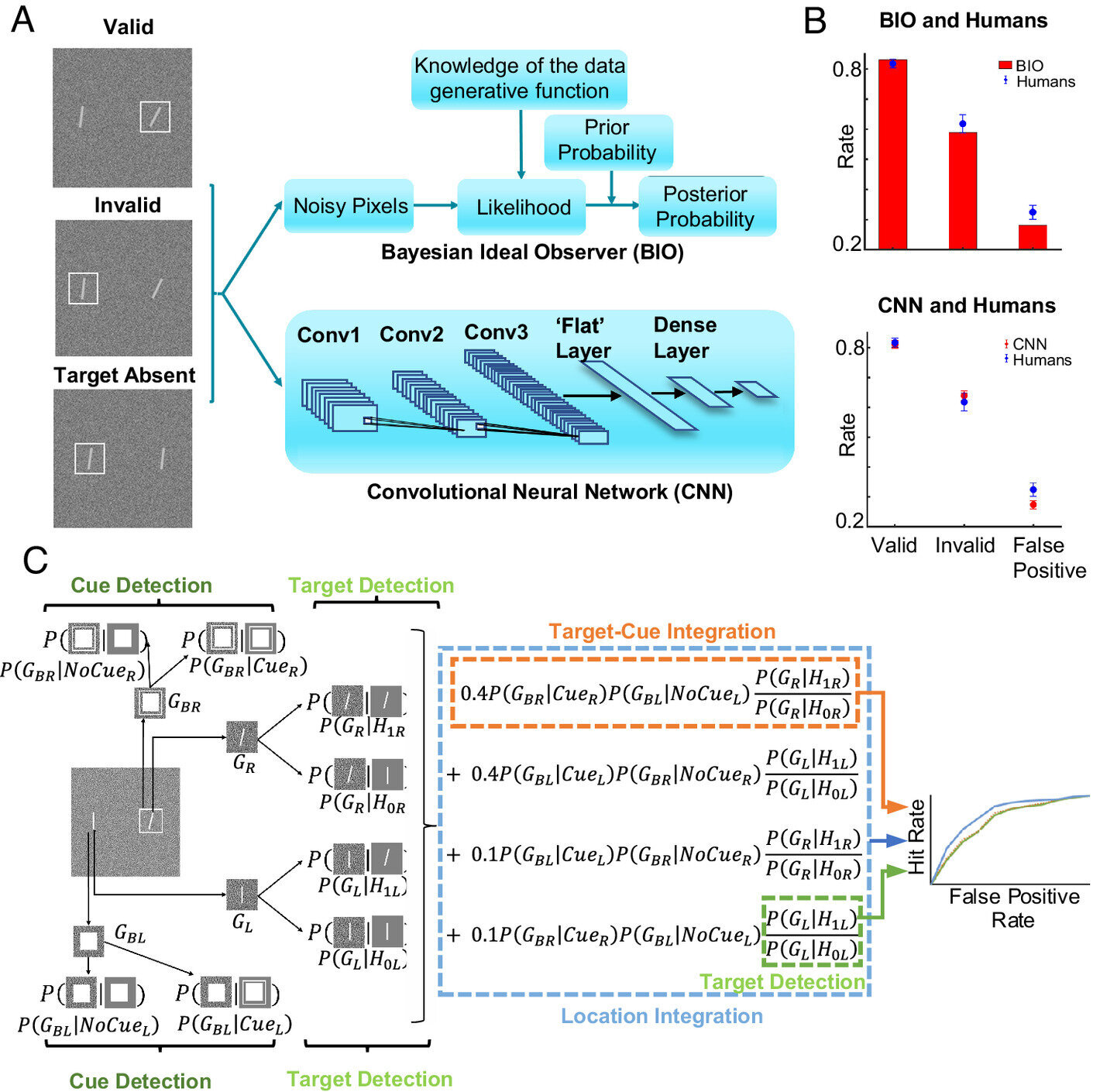

Researchers from the University of California, Santa Barbara used convolutional neural networks (CNNs), a form of AI inspired by biological vision, to explore how covert attention might arise naturally from learning rather than from specialized attention modules in the brain. Their findings, published in the Proceedings of the National Academy of Sciences (PNAS) in 2025, suggest that attention may be an emergent property of neural systems working together to optimize performance.

What Is Covert Attention and Why It Matters

Covert attention is the brain’s ability to shift focus without moving the eyes. Everyday examples include watching traffic while driving or scanning a crowd to see how people react—all without obvious eye movements. In laboratory settings, scientists often study this phenomenon using cueing tasks, where a brief signal such as an arrow or flash hints at where a target will appear. When attention is properly oriented, people detect the target faster and more accurately.
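To make the cueing benefit concrete, here is a minimal Python sketch of how it could be scored from trial data: accuracy on trials where the cue pointed to the target's location (valid) minus accuracy on trials where it pointed elsewhere (invalid). The function name, the trial fields (`cue_loc`, `target_loc`, `correct`), and the example trials are all hypothetical illustrations, not data or code from the study.

```python
def cueing_effect(trials):
    """Return (valid_accuracy, invalid_accuracy, difference).

    Each trial is a dict with hypothetical keys:
      'cue_loc' and 'target_loc' (location labels) and 'correct' (bool).
    A trial is 'valid' when the cue pointed at the target's location.
    """
    valid = [t["correct"] for t in trials if t["cue_loc"] == t["target_loc"]]
    invalid = [t["correct"] for t in trials if t["cue_loc"] != t["target_loc"]]
    if not valid or not invalid:
        raise ValueError("need at least one valid and one invalid trial")
    acc_valid = sum(1 for c in valid if c) / len(valid)
    acc_invalid = sum(1 for c in invalid if c) / len(invalid)
    return acc_valid, acc_invalid, acc_valid - acc_invalid


# Made-up example: four validly cued trials, two invalidly cued trials.
example_trials = [
    {"cue_loc": "left", "target_loc": "left", "correct": True},
    {"cue_loc": "left", "target_loc": "left", "correct": True},
    {"cue_loc": "left", "target_loc": "left", "correct": True},
    {"cue_loc": "left", "target_loc": "left", "correct": False},
    {"cue_loc": "left", "target_loc": "right", "correct": True},
    {"cue_loc": "left", "target_loc": "right", "correct": False},
]
result = cueing_effect(example_trials)
print(result)
```

A positive difference is the behavioral signature of covert attention in such a task. Reaction times could be compared the same way.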

For decades, this ability was thought to depend on specialized brain regions, particularly in primates, and was often linked to higher-level cognition and even consciousness. However, evidence has steadily accumulated showing that animals with much simpler brains—such as mice, archer fish, and bees—also exhibit forms of covert attention. This raised an intriguing question: could attention arise not from a dedicated “attention system,” but from learning itself?

Using AI as a Model Brain

Directly answering this question in biological brains is extremely difficult. The human brain contains billions of neurons, and current imaging techniques cannot monitor individual neurons at scale. AI models, however, offer a unique workaround. In artificial neural networks, every unit can be measured, analyzed, and categorized.

In earlier work published in 2024, the same research team showed that CNNs containing between 200,000 and one million artificial neurons naturally developed the behavioral signatures of covert attention when trained on visual detection tasks. Importantly, these networks had no built-in attention mechanism. Their only goal was to perform the task as accurately as possible.

The new study goes much deeper, focusing not just on behavior but on the internal neural mechanisms that made this emergent attention possible.

Peering Inside the Neural Network

Instead of treating the CNN as a black box, the researchers analyzed 1.8 million artificial neurons across ten independently trained networks. They exposed these networks to a classic Posner cueing task, measuring how individual units responded to cues and targets presented at different locations.

What they found was striking. Many artificial neurons showed response patterns similar to those previously reported in primate and mouse brains. Even more interestingly, the analysis revealed several neuron types that had not been emphasized—or in some cases even identified—in prior attention research.

Newly Identified Neuron Types

One of the key discoveries was the presence of cue-inhibitory neurons. While most attention studies focus on neurons that increase their activity when attention is directed somewhere, these neurons did the opposite. Their activity decreased when a cue appeared. This finding suggests that attention is not just about amplifying signals, but also about actively suppressing irrelevant information.

Another surprising discovery was a class of location-opponent neurons. These neurons increased their activity when the cue and target appeared at one location and decreased it when they appeared at other locations. This push-pull mechanism effectively sharpens perception where the target is expected and dampens noise elsewhere. While opponency is well known in other visual processes, such as color vision and motion detection, it had not previously been highlighted as a mechanism for covert attention.
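The push-pull idea can be illustrated with a toy unit that is excited by evidence at one location and inhibited by evidence at another. This is only a sketch under simple assumptions: the rectified-difference formula, the `gain` and `baseline` parameters, and the drive values are hypothetical choices, not the model reported in the paper.

```python
def relu(x):
    """Rectify: a firing rate cannot go below zero."""
    return max(0.0, x)


def opponent_response(drive_preferred, drive_other, gain=1.0, baseline=1.0):
    """Toy location-opponent unit (hypothetical form).

    drive_preferred: cue/target evidence at the unit's preferred location
    drive_other:     cue/target evidence at the other location
    The unit rises above baseline for the first and falls below it for the second.
    """
    return relu(baseline + gain * (drive_preferred - drive_other))


no_stimulus = opponent_response(0.0, 0.0)    # resting activity
at_preferred = opponent_response(0.5, 0.0)   # "push": above baseline
at_other = opponent_response(0.0, 0.5)       # "pull": below baseline
print(no_stimulus, at_preferred, at_other)
```

Because the same unit moves in opposite directions for the two locations, a downstream readout sees a larger difference between locations than it would from a unit that only increases its activity.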

The researchers also identified location-summation neurons, which combine information across multiple spatial locations, further contributing to flexible attentional control.

Interestingly, one neuron type found in the CNNs—combining cue opponency with target summation—was not found in mouse brain data, suggesting that biological systems may impose constraints that artificial networks do not.
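As a rough illustration of how units might be sorted into the categories described above, the sketch below labels a unit from its mean response to a cue at each of two locations, relative to its baseline. The thresholds and decision rules are simplified assumptions made for this example. The study's actual classification criteria are not described here and are likely more involved.

```python
def classify_unit(baseline, resp_a, resp_b, tol=0.1):
    """Label a unit from its mean response to a cue at location A or B.

    Hypothetical rules (not the paper's criteria):
      - up at one location, down at the other -> 'location-opponent'
      - up at both locations                  -> 'location-summation'
      - down at both locations                -> 'cue-inhibitory'
    'tol' is an arbitrary margin for treating a change as meaningful.
    """
    d_a = resp_a - baseline
    d_b = resp_b - baseline
    up_a, up_b = d_a > tol, d_b > tol
    down_a, down_b = d_a < -tol, d_b < -tol
    if (up_a and down_b) or (down_a and up_b):
        return "location-opponent"
    if up_a and up_b:
        return "location-summation"
    if down_a and down_b:
        return "cue-inhibitory"
    return "unclassified"


# Made-up units: (baseline, response to cue at A, response to cue at B)
units = [(1.0, 1.5, 0.5), (1.0, 1.5, 1.4), (1.0, 0.5, 0.6), (1.0, 1.05, 0.98)]
labels = [classify_unit(*u) for u in units]
print(labels)
```

In a fully observable network, a rule like this can be applied to every unit, which is what makes a census of response types feasible in a CNN but hard in a biological brain.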

Confirmation in Real Brains

To test whether these AI-predicted neuron types were biologically meaningful, the team analyzed existing neural recordings from mouse superior colliculus, a midbrain structure involved in visual processing and orienting behavior. Remarkably, they found clear evidence of location-opponent, cue-inhibitory, and location-summation neurons in real mouse brains.

This validation strongly supports the idea that AI models can do more than replicate behavior—they can predict neural mechanisms that exist in biological systems but may have been missed due to experimental limitations or research biases.

Rethinking Attention

Taken together, these findings challenge the traditional view that covert attention depends on specialized, hard-wired brain modules. Instead, attention may arise as a natural consequence of learning to optimize performance, emerging from interactions among many neurons rather than being imposed from the top down.

This perspective may also help explain why covert attention appears across species with vastly different brain architectures. If attention emerges from general learning principles, it does not require a large cortex or uniquely human brain structures.

Why This Matters for Neuroscience and AI

This research highlights a powerful new role for AI in neuroscience. By acting as a fully observable model brain, neural networks allow scientists to study population-level mechanisms that are currently inaccessible in biological experiments. These insights can then guide new hypotheses and experiments in animals and, eventually, humans.

At the same time, understanding emergent attention in AI could help improve artificial systems, leading to more efficient and biologically inspired approaches to perception and decision-making.

A Broader Look at Covert Attention

Covert attention is a fundamental cognitive process that influences everything from perception and learning to reaction time and accuracy. It is distinct from overt attention, which involves eye or head movements, but the two often work together. Disorders of attention, such as ADHD, may involve disruptions in these underlying neural mechanisms, making this line of research potentially relevant for future clinical studies.

By revealing that attention can emerge from simple learning systems, this study opens the door to rethinking how the brain allocates its limited resources—and how artificial systems might do the same.

Final Thoughts

This work represents a clear example of AI advancing neuroscience, not by replacing biological research, but by complementing it. By uncovering emergent neural mechanisms and predicting real neuron types, convolutional neural networks are proving to be valuable tools for understanding the mind.

As research at the intersection of human and machine intelligence continues, studies like this suggest that the boundary between artificial and biological systems may be far more informative—and far more interconnected—than previously imagined.

Research paper:

Emergent neuronal mechanisms mediating covert attention in convolutional neural networks – Proceedings of the National Academy of Sciences (2025)

https://doi.org/10.1073/pnas.2411909122