Quantum Theory Meets Computational Limits: How Realistic Constraints Are Reshaping Our Understanding of Entanglement

A major new study published in Nature Physics has revealed that our long-standing assumptions about quantum entanglement—one of the cornerstones of modern quantum theory—might need a serious update. The research, led by Professor Jens Eisert from Freie Universität Berlin, takes a closer look at how computational limitations change the way we understand and measure entanglement.

For decades, entanglement theory has assumed that any operation on a quantum system is allowed, however complex, as long as it follows the rules of locality (meaning each party acts only on its own subsystem and the parties communicate only through classical channels). But this new work argues that even when experiments are ideal, computational limits, meaning the sheer difficulty of performing certain operations efficiently, can change how much entanglement can be extracted from a state and how much is needed to create it.

In simpler terms: quantum entanglement doesn’t just depend on physics—it depends on what’s computationally possible.

The Core Idea Behind the Study

The paper, titled “Entanglement Theory with Limited Computational Resources,” introduces a new framework for studying entanglement when only efficient computations are allowed. In traditional theory, physicists assume that you can perform any mathematically valid quantum operation, no matter how complex it is. This assumption works fine on paper, but it doesn’t hold up in practice.

Eisert’s team shows that when we limit ourselves to operations that are computationally feasible (say, those that run in a time that grows only polynomially with the size of the system), the standard measures of entanglement no longer behave the same way.

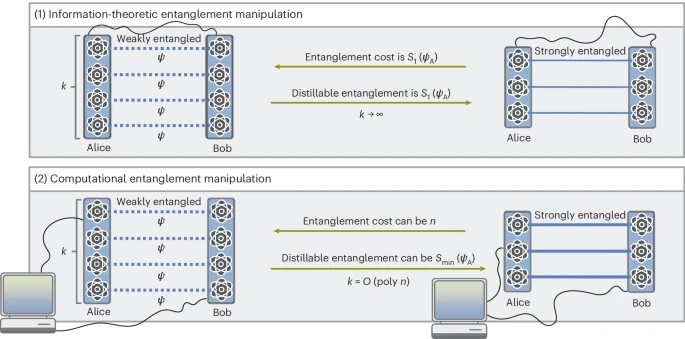

To capture this more realistic scenario, the researchers define two new quantities:

- Computational Distillable Entanglement – This measures how much usable entanglement (in the form of entangled bits, or ebits) can be extracted from many copies of a quantum state when operations are restricted to efficient algorithms.

- Computational Entanglement Cost – This quantifies how many ebits are required to create a given quantum state under the same efficiency constraints.

These two measures mirror older concepts in entanglement theory but add a layer of computational realism. They show that what’s theoretically possible and what’s computationally achievable can differ dramatically.

Why This Matters

Quantum theory is now more than a century old, and it’s been incredibly successful in explaining the behavior of atoms, photons, and other tiny particles. It has also given rise to quantum technologies—from lasers and semiconductors to the emerging fields of quantum computing and quantum communication.

However, this success has often relied on idealized assumptions. In practice, quantum systems are not only subject to noise and decoherence but also to the computational power of the devices and algorithms we use to control them.

Eisert’s work highlights this overlooked aspect. It suggests that entanglement manipulation—the process of converting quantum states into useful forms—may be much harder than previously believed, not because of experimental flaws, but because of inherent computational limits.

This discovery essentially bridges quantum physics and computer science. It shows that the boundary between what can be done in the quantum world and what can be computed efficiently is not just technical—it’s fundamental.

What the Researchers Found

The study focuses on bipartite pure states, a common setup in quantum information where two separate systems share a quantum connection. Traditionally, the amount of entanglement in such a system is given by the von Neumann entropy of one subsystem.

But under computational constraints, this no longer holds true. The researchers found that the min-entropy, a measure that depends only on the largest probability in a distribution (here, the largest squared Schmidt coefficient of the state), becomes more relevant when only efficient operations are allowed.
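To make the two entropies concrete, here is a minimal sketch in Python that computes both from the squared Schmidt coefficients of a bipartite pure state. The function names and the example state are illustrative assumptions, not taken from the paper.

```python
import math

def von_neumann_entropy(schmidt_probs):
    """Entropy in bits of one subsystem: -sum p * log2(p), skipping zeros."""
    return -sum(p * math.log2(p) for p in schmidt_probs if p > 0)

def min_entropy(schmidt_probs):
    """Min-entropy in bits: -log2 of the largest probability."""
    return -math.log2(max(schmidt_probs))

# Hypothetical two-qubit state sqrt(0.9)|00> + sqrt(0.1)|11>,
# whose squared Schmidt coefficients are 0.9 and 0.1.
probs = [0.9, 0.1]
print(f"von Neumann entropy: {von_neumann_entropy(probs):.3f} bits")  # 0.469
print(f"min-entropy:         {min_entropy(probs):.3f} bits")          # 0.152
```

The min-entropy is never larger than the von Neumann entropy, and the two coincide only when all nonzero probabilities are equal, as in a maximally entangled state.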

This subtle shift has big consequences. It means that under realistic, computationally limited conditions:

- The amount of extractable entanglement from a quantum state can be much smaller than predicted by standard theory.

- The cost of creating even weakly entangled states can skyrocket, requiring far more resources than idealized models suggest.

- Some states that appear nearly separable (barely entangled) might still demand a maximal number of ebits to prepare efficiently.
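How far apart the two entropy measures can drift is easy to see numerically. The sketch below uses a hypothetical Schmidt spectrum, chosen only for illustration and not taken from the paper: one outcome carries probability 1/2 and the remaining outcomes share the rest equally. The von Neumann entropy then grows with the number of qubits, while the min-entropy stays fixed at one bit.

```python
import math

def spiked_spectrum_entropies(n_qubits):
    """Entropies in bits of a hypothetical Schmidt spectrum on n_qubits >= 1
    qubits per party: one outcome has probability 1/2 and the other
    2**n_qubits - 1 outcomes share the remaining 1/2 equally."""
    tail_count = 2 ** n_qubits - 1
    tail_p = 0.5 / tail_count
    # Closed form, so we never build a list with 2**n_qubits entries.
    von_neumann = -0.5 * math.log2(0.5) - tail_count * tail_p * math.log2(tail_p)
    min_ent = -math.log2(max(0.5, tail_p))
    return von_neumann, min_ent

for n in (2, 10, 50):
    vn, me = spiked_spectrum_entropies(n)
    print(f"n={n:3d}  von Neumann = {vn:6.2f} bits  min-entropy = {me:.2f} bits")
```

For this spectrum the von Neumann entropy is roughly 1 + n/2 bits. If the efficiently extractable entanglement tracks the min-entropy, as the article describes, the shortfall relative to the standard prediction grows with system size.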

Even more surprisingly, the team found that in certain cases, knowing the exact description of a quantum state doesn’t help. If your operations are limited to efficient algorithms, it may be no easier to process a known state than an unknown one.

In short, efficiency changes everything.

Connecting Physics and Computer Science

The study’s broader message is about communication between disciplines. Eisert and his co-authors argue that there has been a gap—a kind of “cultural divide”—between two scientific communities:

- Entanglement theorists, who study quantum states under idealized mathematical assumptions.

- Quantum algorithm and complexity researchers, who focus on what can actually be computed with limited resources.

According to Eisert, these groups have not interacted enough, and as a result, some contradictions in quantum theory have gone unnoticed. By linking computational complexity with entanglement theory, the new research invites both sides to rethink how they define the limits of quantum operations.

This kind of cross-disciplinary work is becoming more important as the world marks 2025, which the United Nations has proclaimed the International Year of Quantum Science and Technology. It’s a reminder that quantum physics is not just about abstract equations; it’s also about what we can actually compute and build in the lab.

Implications for Quantum Computing and Communication

The findings have far-reaching implications for the design and efficiency of quantum computers and quantum networks.

In theory, quantum computers are expected to perform certain tasks exponentially faster than the best known classical methods by harnessing entanglement and superposition. But this study shows that the computational cost of manipulating entangled states may be higher than expected.

That means even with perfect quantum hardware, there might be fundamental algorithmic limits on what can be done efficiently. Tasks like state compression, quantum teleportation, and entanglement distillation could face bottlenecks that have nothing to do with noise or decoherence, but with computational feasibility itself.

The researchers also explored how these constraints affect quantum measurements and entropy estimation. They found new lower bounds on how many copies of a state are needed to accurately measure the von Neumann entropy when efficient operations are used. This has implications for quantum state tomography, error correction, and information transmission.

The Broader Context: What Is Quantum Entanglement?

To appreciate the impact of this study, it helps to understand what quantum entanglement actually is.

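Entanglement occurs when two or more particles become connected in such a way that the state of one cannot be described independently of the others. Measurement outcomes on entangled particles are correlated more strongly than any classical explanation allows, even if the particles are separated by vast distances, although these correlations cannot be used to send a signal faster than light.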

Albert Einstein famously called this “spooky action at a distance,” but today it’s one of the most tested and verified phenomena in physics. Entanglement underpins many technologies, including:

- Quantum computing, where entangled qubits perform coordinated operations.

- Quantum cryptography, where the security of key distribution rests on the laws of physics rather than on assumptions about an eavesdropper’s computing power.

- Quantum teleportation, which transfers a quantum state to a distant particle using shared entanglement and a classical message, without physically moving the original particle.

However, entanglement is not a free resource. Creating and maintaining it requires precision, energy, and now—as Eisert’s study shows—computational efficiency.
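The definition of entanglement given above, a joint state that cannot be described particle by particle, can be checked directly for two qubits. The sketch below uses the standard concurrence formula for a two-qubit pure state, which is zero exactly when the state factorizes into a product. The function name and the example states are illustrative choices.

```python
import math

def concurrence(a, b, c, d):
    """Concurrence 2*|a*d - b*c| of the two-qubit pure state
    a|00> + b|01> + c|10> + d|11> (assumed normalised).
    It is 0 for product states and 1 for maximally entangled states."""
    return 2 * abs(a * d - b * c)

s = 1 / math.sqrt(2)
bell = (s, 0, 0, s)               # (|00> + |11>) / sqrt(2), maximally entangled
product = (0.5, 0.5, 0.5, 0.5)    # |+>|+>, each qubit has its own state

print(concurrence(*bell))     # close to 1, up to floating-point rounding
print(concurrence(*product))  # 0.0
```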

The Role of Computational Complexity

Computational complexity is a branch of theoretical computer science that studies how difficult problems are to solve. It asks questions like: how many steps does it take to perform a task? How much memory is needed?

In the quantum world, similar questions arise. Can we find efficient algorithms for manipulating entangled states? Are there problems that are theoretically solvable but computationally intractable?

The new study effectively imports these questions into quantum information theory. It suggests that entanglement transformations, the processes by which one quantum state is converted into another, can differ sharply in computational difficulty: some can be carried out efficiently, while others are possible in principle but intractable in practice.

This means that entanglement theory can no longer be treated as purely mathematical. It has to respect the same computational limits that govern every other field of science and engineering.
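A rough sense of why "any valid operation" and "any efficient operation" come apart: a general state of n qubits is described by 2^n complex amplitudes, whereas an efficient procedure may use only a number of steps that grows polynomially with n. The sketch below compares the two growth rates. The cubic budget is an arbitrary stand-in for "polynomial" and is not a figure from the study.

```python
def amplitude_count(n_qubits):
    """Number of complex amplitudes in a general n-qubit pure state."""
    return 2 ** n_qubits

def polynomial_budget(n_qubits, degree=3):
    """Hypothetical step budget n**degree standing in for 'efficient'."""
    return n_qubits ** degree

for n in (10, 50, 100):
    print(f"{n:3d} qubits: {amplitude_count(n):.3e} amplitudes vs "
          f"{polynomial_budget(n):.3e} steps in the cubic budget")
```

At 10 qubits the two numbers are comparable, but by 100 qubits the amplitude count exceeds the cubic budget by more than twenty orders of magnitude.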

The Takeaway

Eisert’s team has not just introduced new mathematical tools—they’ve redefined the playing field. By incorporating computational constraints into entanglement theory, they’ve shown that our understanding of quantum correlations must evolve.

What we once thought were purely physical limits are now seen as partly computational. And that realization could reshape how researchers design algorithms, interpret measurements, and even define quantum resources.

The work also serves as a call for greater collaboration between physicists and computer scientists. Many breakthroughs happen not in isolated disciplines but where they overlap—in those “cultural gaps” where new ideas emerge.

As quantum technologies mature, this intersection between physics and computation will become even more crucial. The future of quantum science will depend not only on how powerful our machines are, but also on how smart and efficient our algorithms can be.

Reference:

Leone, L., Rizzo, J., Jerbi, S., & Eisert, J. (2025). Entanglement theory with limited computational resources. Nature Physics.

https://www.nature.com/articles/s41567-025-03048-8