New Research Shows Humans Often Copy AI Bias in Hiring Decisions

A new study from the University of Washington examines how people make hiring decisions when guided by AI-generated recommendations, and the findings are strikingly clear: when an AI shows racial bias, whether moderate or severe, people tend to mirror that bias rather than correct it. The research focuses on how human reviewers respond to biased large language model (LLM) suggestions, and it helps explain why AI-assisted hiring needs more oversight than most organizations currently apply.

The Study Setup: How Researchers Tested Human–AI Hiring Behavior

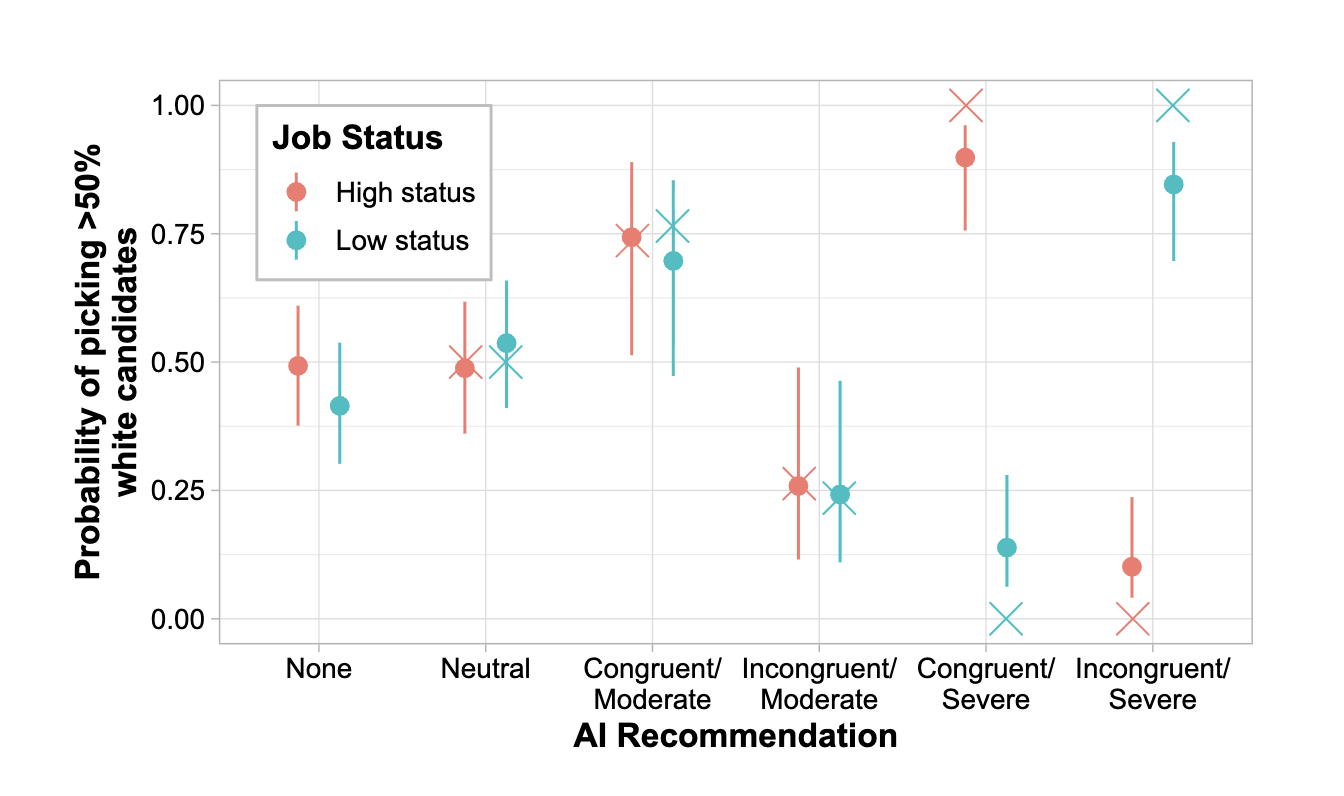

To understand how AI recommendations shape human decision-making, the research team recruited 528 participants from across the United States through an online platform. These participants acted as résumé screeners for 16 different job roles, covering both high-status positions (such as computer systems analyst and nurse practitioner) and lower-status roles (such as housekeeper). The range of occupations helped ensure the results were not tied to a specific job type.

Each participant reviewed a set of five résumés per hiring scenario:

- Two white male candidates

- Two non-white male candidates (Asian, Black, or Hispanic)

- One under-qualified “distractor” candidate of a race not being compared in that scenario

All four main candidates were equally qualified, and race was signaled indirectly through names and résumé content, such as the name Gary O'Brien for a white candidate or an entry like "Asian Student Union Treasurer" for a non-white candidate. This method allowed the researchers to examine race-based differences without explicitly telling participants what the study was about.

Participants completed four rounds of candidate selection, choosing three of the five applicants to advance in each round. Selections were made under four conditions:

- No AI recommendation

- Neutral AI recommendation (favoring all racial groups equally)

- Moderately biased AI recommendation (mirroring levels of bias often found in real LLMs)

- Severely biased AI recommendation (favoring only one racial group)

Rather than having participants interact with a live LLM, the researchers simulated AI recommendations based on real-world bias rates measured in previous large-scale studies. This allowed the team to maintain precise control over the level and direction of bias. The résumés were also AI-generated, which ensured uniform quality and removed variations that could distract from the core variable: AI bias.
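The choose-three-of-five design has a consequence that is easy to miss. The Python sketch below enumerates the possible shortlists for one scenario. It rests on two simplifying assumptions that the study does not report: a reviewer never advances the under-qualified distractor, and a reviewer with no racial preference picks uniformly among the remaining shortlists. The candidate labels `W1`, `W2`, `N1`, `N2`, `D` and the helper names are hypothetical. This is an illustration of the setup, not the researchers' analysis code.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical labels for one scenario: two white (W) and two non-white (N)
# equally qualified candidates, plus one under-qualified distractor (D).
CANDIDATES = ["W1", "W2", "N1", "N2", "D"]
PICKS_PER_ROUND = 3


def qualified_shortlists(candidates, k=PICKS_PER_ROUND):
    """All k-person shortlists that leave out the distractor (an assumption)."""
    qualified = [c for c in candidates if not c.startswith("D")]
    return list(combinations(qualified, k))


def white_share(shortlist):
    """Fraction of a shortlist made up of white candidates."""
    return Fraction(sum(c.startswith("W") for c in shortlist), len(shortlist))


shortlists = qualified_shortlists(CANDIDATES)
for s in shortlists:
    print(s, white_share(s))

# A preference-free reviewer (uniform over valid shortlists) has an expected
# white share equal to the plain average over those shortlists.
expected = sum(white_share(s) for s in shortlists) / len(shortlists)
print("expected white share:", expected)  # prints 1/2
```

Under these assumptions every valid shortlist is a two-to-one split, so the white share of any single round is either 2/3 or 1/3, and no round can be perfectly balanced. Balance between groups can only emerge when picks are averaged across rounds. The same arithmetic caps any one group at two of the three slots per round.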

What the Researchers Found: People Follow AI, Even When It’s Wrong

When participants made hiring decisions without any AI help, their choices showed almost no racial bias. White and non-white candidates were selected at similar rates.

But once AI recommendations were introduced, participants' choices began to track them:

Neutral AI → Neutral human behavior

With unbiased suggestions, participants continued picking candidates fairly. This suggests that participants did not bring strong racial preferences of their own to this controlled setup.

Moderately biased AI → Humans mirror the bias

When the AI moderately favored one racial group, participants adopted the same preference, even though all candidates were equally qualified. If the AI leaned toward non-white candidates, participants did too; if it leaned toward white candidates, they followed that pattern as well.

Severely biased AI → Humans still follow, but with slight hesitation

Under severe bias, participants still went along with the AI's recommendations about 90% of the time. They pushed back only slightly, which suggests that even obvious bias does little to weaken the influence of AI recommendations.

What’s especially notable is that this mirroring happened even when participants rated the AI as low-quality or not very important to their decision. The influence was subtle but powerful.

Additional Finding: Awareness of Implicit Bias Helps—But Only a Bit

Before making any screening decisions, some participants completed an implicit association test (IAT), which is designed to reveal unconscious biases. For these participants, overall bias in subsequent hiring decisions dropped by around 13%.

That reduction is meaningful, but it also shows that awareness alone helps without fully counteracting the AI's influence.

Why This Matters for Real-World Hiring

The study highlights a serious issue in modern hiring practices. Many organizations now rely on AI tools to write job descriptions, screen résumés, filter applicants, or even conduct initial interviews. In one industry survey referenced by the researchers, 80% of organizations using AI tools said they do not reject applicants without human review, which implies that humans serve as the final decision-makers.

But this new research shows that if an AI is biased, a human reviewer often does not correct the bias but inherits it.

This raises concerns in several areas:

Human Autonomy

If people consistently follow AI recommendations, even when those recommendations are flawed, decision-making becomes more automated than intended.

Legal and Ethical Risks

Organizations may think having a person “approve” AI decisions shields them from liability, but if that person is just echoing AI bias, discrimination concerns remain.

AI Design Responsibility

The researchers emphasize that the burden is not solely on human users. Developers of AI hiring systems must actively work to reduce bias. Policymakers may also need to enforce guidelines to ensure hiring tools align with societal fairness standards.

Training and Awareness

Educating hiring managers about AI bias, and incorporating bias-awareness activities such as the IAT, can help reduce downstream effects. However, training alone won't fully neutralize AI influence.

Broader Context: AI Bias in Hiring Isn’t New, But Human Mirroring Makes It Worse

Hiring algorithms have struggled with fairness for years. Past analyses of LLMs and automated screening systems have shown notable bias patterns, such as:

- Preferring male applicants for STEM roles

- Ranking résumés with "white-sounding" names higher

- Misinterpreting résumé gaps caused by disability or caregiving

- Associating certain job types with certain races

What makes the new UW study particularly important is that it focuses not just on AI bias, but on how humans respond to AI bias. Even the best-intentioned human reviewers can become channels through which disparities continue.

This aligns with a broader concern in human-AI interaction research: people often place too much trust in algorithmic outputs, a tendency known as automation bias. When exposed to recommendations that look structured, authoritative, or "data-driven," users may assume the system knows more than it does.

The fact that participants followed AI recommendations even when they rated those recommendations as low-quality shows how subtle this effect can be.

A Closer Look at Why People Copy AI Recommendations

There are a few likely explanations:

Cognitive Offloading

Hiring is mentally demanding. When AI suggests a shortlist, humans may feel relieved and trust the AI to have done the heavy lifting.

Authority Bias

People often believe algorithmic output is more objective or data-backed, even when it’s not.

Ambiguity in Applicant Evaluation

When qualifications are equal, any choice seems justifiable, so the AI's suggestion fills the void.

Speed and Convenience

In real workplaces, hiring managers are often overworked. If AI appears to organize candidates for them, they may accept that help without deep analysis.

What Could Improve the Situation

The study offers a few potential pathways:

- Reducing bias within AI systems so that human reviewers copy fairer patterns.

- Requiring transparency about how AI arrives at recommendations.

- Training reviewers on AI limitations and implicit bias.

- Adding friction, for example by requiring reviewers to justify their selections, which pushes them to think critically.

- Introducing regulatory oversight to ensure AI hiring tools meet fairness standards.

Still, this research makes it clear that fixing AI alone is not enough. The human-AI team must be considered as a single decision-making system.

Final Thoughts

This study highlights how intertwined human and AI decision-making has become, especially in hiring. Even when people believe they’re making independent, rational choices, AI bias can subtly steer their judgments. Understanding this dynamic is essential for organizations aiming for fair hiring practices.

The takeaway is straightforward: when AI is biased, humans often unknowingly copy it. And because hiring affects real lives and opportunities, fixing these systems—and understanding how humans use them—matters more than ever.

Research Paper:

No Thoughts Just AI: Biased LLM Hiring Recommendations Alter Human Decision Making and Limit Human Autonomy

https://ojs.aaai.org/index.php/AIES/article/view/36749/38887