A New Algorithm Makes Language Model Outputs Faster, Smarter, and Much Easier to Control

Researchers from Yale University and collaborating institutions have introduced a new algorithm that significantly improves how language models follow strict rules while generating text. The work, led by Assistant Professor Alex Lew, has earned major recognition in the AI research community and is already influencing how developers think about controlling large language models.

The study, titled Fast Controlled Generation from Language Models with Adaptive Weighted Rejection Sampling, was selected as one of just four Outstanding Papers at the Conference on Language Modeling (COLM 2025), held in Montreal in October 2025. This recognition highlights both the technical strength of the research and its practical relevance to real-world language model applications.

At its core, the paper tackles a persistent and frustrating problem in artificial intelligence: how to make language models reliably follow hard constraints without slowing them down or distorting their output.

Why Controlling Language Models Is Hard

Modern language models are inherently probabilistic systems. Each token they generate (roughly, a word or a piece of a word) is sampled from a probability distribution over what might come next. This randomness gives models creativity and flexibility, but it also means they can ignore instructions or break rules, even when explicitly asked not to.

For example, you might want a model to:

- Generate valid Python code or JSON

- Use only simple words

- Follow a strict poetic structure, like a haiku

- Produce chemically valid molecules

- Output text that fits a precise format, such as SQL queries or structured data

Simply asking the model to follow these constraints is not enough. There is always some probability that it will fail.
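To make "hard constraint" concrete, here is a minimal Python sketch that assumes constraints are written as yes/no checks on text. The function names `only_short_words` and `is_valid_json` are illustrative, not from the paper, and the five-letter rule is an assumed stand-in for "simple words".

```python
import json

def only_short_words(text, max_letters=5):
    """True if every whitespace-separated word has at most `max_letters` letters.
    Punctuation such as apostrophes is not counted, so "It's" has 3 letters."""
    return all(sum(ch.isalpha() for ch in word) <= max_letters for word in text.split())

def is_valid_json(text):
    """True if `text` parses as one complete JSON document."""
    try:
        json.loads(text)
    except json.JSONDecodeError:
        return False
    return True

print(only_short_words("Fed Chair Says It's Time to Bite the"))         # True
print(only_short_words("Fed Chair Says It's Time to Bite the Bullet"))  # False
print(is_valid_json('{"rate": 4.5}'))  # True
print(is_valid_json('{"rate": 4.5'))   # False
```

A decoder actually needs prefix versions of such checks: `'{"rate": 4.5'` fails `is_valid_json` even though it can still be completed, which is part of what makes constrained generation harder than validating finished output.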

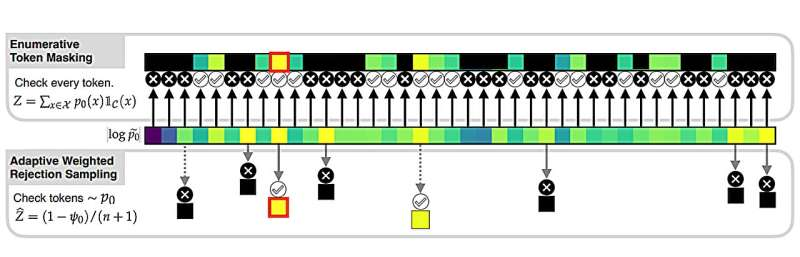

To solve this, researchers have traditionally relied on a technique known as locally constrained decoding. This approach acts like a muzzle on the model, preventing it from ever selecting a token that would violate the constraint. At every step of generation, the algorithm checks which of the tens of thousands of possible next tokens are allowed and blocks the rest.
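A toy Python sketch of token masking follows, assuming the model's next-token distribution is available as a plain dictionary; `masked_sample` and the three-word vocabulary are hypothetical. The key detail is the loop: the constraint is evaluated once for every token in the vocabulary before anything is sampled.

```python
import random

def masked_sample(probs, allowed, rng):
    """Locally constrained decoding by token masking (toy sketch).

    probs:   dict mapping every vocabulary token to its model probability
    allowed: function returning True if appending the token keeps the output valid
    Returns (sampled token, number of constraint checks performed).
    """
    kept = {}
    checks = 0
    for token, p in probs.items():  # the constraint runs on the WHOLE vocabulary
        checks += 1
        if allowed(token):
            kept[token] = p
    if not kept:
        raise ValueError("no allowed token: generation is stuck")
    tokens = list(kept)
    # random.choices treats the weights as relative, which renormalises them.
    token = rng.choices(tokens, weights=[kept[t] for t in tokens], k=1)[0]
    return token, checks

# Hypothetical three-token vocabulary; real vocabularies can exceed 100,000 tokens.
probs = {"bullet": 0.90, "dust": 0.06, "hand": 0.04}
token, checks = masked_sample(probs, lambda t: len(t) <= 5, random.Random(0))
print(token, checks)  # "dust" or "hand", always after 3 checks
```

With a real vocabulary, `checks` would equal the vocabulary size at every generation step.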

While this guarantees correctness, it comes with serious downsides.

The Limitations of Locally Constrained Decoding

The first issue is speed. Checking every possible next token at each step is computationally expensive, especially when vocabularies can exceed 100,000 tokens. This makes generation slow and inefficient, particularly for longer outputs.

The second issue is more subtle but just as important: local constraints can lead to globally poor results. Because the model is only prevented from making illegal moves at the last possible moment, it can wander into situations where no good continuation exists.

A simple example illustrates the problem. Suppose a language model is asked to write a headline about the economy using only words with five letters or fewer. Locally constrained decoding might allow the model to generate a phrase like “Fed Chair Says It’s Time to Bite the,” only to discover that it cannot complete the idiom, because “Bullet” has six letters and violates the constraint. At that point, the model is stuck with no natural way to continue.

The output is technically valid, but linguistically broken.
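The same dead-end effect can be reproduced with a hypothetical two-step toy model (tokens and probabilities made up for illustration, not taken from the paper). The sketch compares the exact output distribution of token-by-token masking with the model's own distribution restricted to valid sequences.

```python
# Hypothetical two-step toy model: p(first token) and p(second token | first token).
P_FIRST = {"A": 0.5, "B": 0.5}
P_SECOND = {
    "A": {"ok": 0.01, "bad": 0.99},  # after "A", the valid ending is unlikely
    "B": {"ok": 1.00},               # after "B", the valid ending is certain
}

def ends_ok(second):
    """Constraint: the sequence must end with the token "ok"."""
    return second == "ok"

def masked_distribution():
    """Exact output distribution of token-by-token masking."""
    out = {}
    for first, p1 in P_FIRST.items():
        # Both first tokens still have a valid continuation, so masking keeps both.
        allowed = {s: p for s, p in P_SECOND[first].items() if ends_ok(s)}
        z = sum(allowed.values())  # mass left after masking the second step
        for second, p2 in allowed.items():
            out[(first, second)] = p1 * (p2 / z)
    return out

def true_conditional():
    """The model's own distribution over valid sequences, renormalised."""
    joint = {
        (first, second): p1 * p2
        for first, p1 in P_FIRST.items()
        for second, p2 in P_SECOND[first].items()
        if ends_ok(second)
    }
    total = sum(joint.values())
    return {seq: p / total for seq, p in joint.items()}

print(masked_distribution()[("A", "ok")])         # 0.5
print(round(true_conditional()[("A", "ok")], 4))  # 0.0099
```

Masking picks "A" half the time, yet the model itself, conditioned on ending validly, would choose it only about 1% of the time. Multiplying each masked path's probability by the allowed mass at its second step (0.01 after "A", 1.0 after "B") and renormalising recovers the true conditional, which is the kind of correction importance weights provide.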

A Faster and More Global Solution

Instead of muzzling the model at every step, Lew and his co-authors propose a fundamentally different approach: Adaptive Weighted Rejection Sampling, or AWRS.

This method comes from classical computational statistics, not traditional natural language processing. Rather than checking every possible token, the algorithm samples only a small subset of candidate tokens and checks whether they satisfy the constraint. If a sampled token fails, it is rejected and another is sampled. Crucially, this process is done in a way that preserves the true underlying probability distribution of the language model.
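A minimal Python sketch of the rejection idea follows, assuming a dictionary of next-token probabilities and a yes/no constraint check. It draws one candidate at a time and removes each rejected token from the pool (sampling without replacement), so the accepted token is distributed exactly as the model's probabilities restricted to allowed tokens. This is plain rejection sampling without replacement, not the authors' AWRS implementation, which additionally estimates importance weights; `rejection_sample` is a hypothetical name.

```python
import random

def rejection_sample(probs, allowed, rng):
    """Sample one token from `probs` restricted to allowed tokens by rejection.

    Candidates are drawn one at a time in proportion to their probability and
    checked; a rejected token is removed from the pool (sampling without
    replacement), so no token is ever checked twice.
    Returns (accepted token, number of constraint checks performed).
    """
    pool = dict(probs)
    checks = 0
    while pool:
        tokens = list(pool)
        token = rng.choices(tokens, weights=[pool[t] for t in tokens], k=1)[0]
        checks += 1
        if allowed(token):
            return token, checks
        del pool[token]  # reject and never propose this token again
    raise ValueError("no allowed token: every candidate was rejected")

# Hypothetical vocabulary where the model already prefers valid tokens.
probs = {"dust": 0.6, "hand": 0.3, "bullet": 0.1}
rng = random.Random(0)
draws = [rejection_sample(probs, lambda t: len(t) <= 5, rng) for _ in range(10_000)]
print(sum(t == "dust" for t, _ in draws) / len(draws))  # close to 0.6 / 0.9 ≈ 0.667
print(sum(c for _, c in draws) / len(draws))            # close to 1.1 checks per token
```

The sketch counts only constraint checks, which are the expensive part when each check runs a parser or grammar; rebuilding the weight list on every draw is a simplification for readability.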

In practical terms, this means:

- The algorithm might only check two or three tokens instead of tens of thousands

- Combined with importance weighting, constraints are accounted for globally, not just at the last moment

- The resulting text remains faithful to what the model actually believes is likely

The judges at COLM emphasized that this approach solves a real and widespread problem and does so in a way that actually works in practice.

How the Algorithm Maintains Accuracy

One of the key technical achievements of the paper is that AWRS provides unbiased estimates of the probabilities involved in generation. This matters because enforcing a constraint one token at a time, whether by masking or by rejection, can skew the output distribution, making some sequences more likely than they should be.

The researchers show how to compute low-variance, unbiased estimates of importance weights at very little additional cost. These weights can be used within sequential Monte Carlo algorithms to correct for the short-sighted behavior of token-by-token constraint enforcement, so the algorithm can be safely integrated into sequential generation without sacrificing correctness.
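In sequential Monte Carlo, when each token is proposed from the model restricted to allowed tokens, the importance weight for that step works out to Z, the total probability the model places on allowed tokens. Computing Z exactly means checking every token. The sketch below contrasts that with a textbook Monte Carlo estimator, which is unbiased because each draw is allowed with probability exactly Z. It is a deliberately simple stand-in with far higher variance than the estimator developed in the paper, and the function names are hypothetical.

```python
import random

def exact_allowed_mass(probs, allowed):
    """Z: total model probability of allowed tokens (checks every token)."""
    return sum(p for t, p in probs.items() if allowed(t))

def mc_allowed_mass(probs, allowed, n, rng):
    """Unbiased Monte Carlo estimate of Z from n draws (checks only n tokens).

    Each draw is allowed with probability exactly Z, so the expected
    fraction of allowed draws is Z.
    """
    tokens = list(probs)
    draws = rng.choices(tokens, weights=[probs[t] for t in tokens], k=n)
    return sum(allowed(t) for t in draws) / n

def short_word(token):
    return len(token) <= 5

probs = {"bullet": 0.90, "dust": 0.06, "hand": 0.04}
print(round(exact_allowed_mass(probs, short_word), 6))  # 0.1
rng = random.Random(0)
estimates = [mc_allowed_mass(probs, short_word, 10, rng) for _ in range(5_000)]
print(sum(estimates) / len(estimates))  # close to 0.1; single estimates vary a lot
```

Unbiasedness is what lets a random estimate stand in for the exact weight; the paper's contribution is an estimator that is also low-variance and obtained at small extra cost on top of the rejection loop.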

Another important insight is that the algorithm becomes faster as language models get better. If a model already tends to produce valid outputs, AWRS rejects fewer samples and runs extremely efficiently. This makes it especially well-suited for modern large language models.
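A back-of-the-envelope calculation, which is a simplification rather than the paper's own runtime analysis, shows why better models mean fewer checks. Let Z be the probability the model puts on allowed tokens and K the number of candidates checked before one is accepted. If rejected candidates were redrawn with replacement, K would be geometric:

```latex
Z = \sum_{x \,:\, \mathrm{allowed}(x)} p(x), \qquad
\Pr[K = k] = (1 - Z)^{k-1} Z, \qquad
\mathbb{E}[K] = \frac{1}{Z}
```

So Z = 0.95 gives about 1.05 checks on average, while Z = 0.25 gives 4. Removing rejected tokens from the pool can only raise the acceptance probability at later draws, so the expected count is then at most 1/Z, and never more than the number of disallowed tokens plus one.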

Demonstrated Applications Across Domains

The paper does not stop at theory. The authors demonstrate substantial speedups and quality improvements across a wide range of tasks, including:

- Valid Python code generation

- Structured JSON output

- Text-to-SQL translation

- Molecular synthesis

- Pattern-constrained text generation

In each case, the algorithm dramatically reduces the number of constraint checks required while maintaining strict correctness guarantees.

Open-Source and Ready for Use

The algorithm has already been implemented as part of the open-source GenLM toolkit, making it accessible to researchers and developers. This lowers the barrier for adoption and allows others to experiment with controlled generation without reinventing the underlying machinery.

The research team includes contributors from multiple leading institutions, reflecting the collaborative nature of modern AI research.

Why This Matters for the Future of AI

Controlled generation is becoming increasingly important as language models are deployed in high-stakes environments, such as software development, scientific research, data processing, and automated reasoning systems. In these settings, being almost correct is not good enough.

This work shows that it is possible to:

- Enforce strict rules

- Preserve natural language quality

- Avoid performance bottlenecks

- Ground modern AI techniques in well-understood statistical principles

It also serves as a reminder that some of the most powerful ideas in today’s AI systems come from revisiting and adapting classical methods, rather than inventing entirely new ones.

Additional Context: Controlled Generation in Modern AI

Controlled text generation is a growing research area because language models are increasingly used as general-purpose engines, not just chatbots. From code assistants to chemistry tools, models must often operate within rigid boundaries. Techniques like AWRS represent a shift away from brute-force enforcement toward smarter probabilistic control, a direction that is likely to shape future language model architectures and decoding strategies.

As models continue to scale and move into more structured domains, approaches like this may become standard components of language model toolkits.

Research Paper:

https://arxiv.org/abs/2504.05410