AI Can Learn Cultural Values by Watching Humans, Just Like Children Do

Artificial intelligence systems are only as good as the data they learn from. For years, researchers have worried about one major problem: human values are not universal. What is considered helpful, polite, or altruistic in one culture may not be seen the same way in another. Training AI on massive, mixed datasets from across the internet risks flattening these differences or, worse, misrepresenting entire communities.

A new study from the University of Washington offers a compelling alternative. Instead of hard-coding moral rules or relying on generic data, researchers found that AI can learn cultural values simply by observing how people behave, in much the same way children absorb social norms while growing up.

The findings, published in PLOS One in 2025, show that AI systems can pick up culturally specific levels of altruism, apply those values consistently, and even transfer them to new situations they were never explicitly trained on.

Why Cultural Values Are a Challenge for AI

Modern AI systems are typically trained on huge datasets scraped from the internet. While this approach gives models impressive general knowledge, it also creates a problem: the internet does not represent cultures equally. Some values are overrepresented, others are marginalized, and many are misunderstood.

Researchers involved in this study argue that forcing a single, “universal” value system onto AI is unrealistic and potentially harmful. Human societies are diverse, and values develop within cultural contexts. If AI is meant to interact with people globally, it needs a way to adapt to those differences.

That challenge led the University of Washington team to ask a simple but powerful question: can AI learn values the same way humans do?

Learning Values the Way Children Do

Human children are rarely taught moral values through formal instructions alone. Instead, they observe how adults behave, how they treat others, and how they respond to social situations. Over time, children infer what behaviors are rewarded, encouraged, or discouraged within their community.

The research team designed their AI systems to learn in a similar way. Rather than telling the AI what altruism looks like, they let it watch humans make decisions and infer the underlying values driving those choices.

This idea was inspired by earlier developmental psychology research showing that very young children raised in different cultural environments display different levels of altruism, even before formal schooling begins.

The Role of Inverse Reinforcement Learning

To make this possible, the researchers used a technique called inverse reinforcement learning, or IRL.

In traditional reinforcement learning, an AI is given a clear goal and rewarded when it moves closer to that goal. For example, an AI trained to win a game earns points when it succeeds and loses points when it fails.
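For readers who want the formal version, here is the standard textbook statement of this setup. It is general background, not an equation taken from the paper. The reward function R is known in advance, and the learner searches for a policy π (a rule for choosing actions) that maximizes the expected discounted sum of rewards. Here s_t and a_t are the state and action at step t, and γ is a discount factor:

```latex
\pi^{*} = \arg\max_{\pi} \; \mathbb{E}_{\pi}\left[ \sum_{t=0}^{\infty} \gamma^{t} \, R(s_t, a_t) \right], \qquad 0 \le \gamma < 1
```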

Inverse reinforcement learning works in the opposite direction. Instead of being told the goal, the AI observes behavior and infers what goal or reward structure must be driving it. In other words, it watches what people do and figures out why they are doing it.
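To make the idea concrete, here is a minimal Python sketch of inverse reinforcement learning in its simplest maximum-likelihood form. It rests on assumptions of our own, which may not match the paper's exact model. A person is assumed to score each option as their own points plus an unknown "altruism weight" times the partner's points. They are also assumed to pick options with probability proportional to the exponential of that score. The function names and the toy demonstrations are hypothetical. The code searches for the weight under which the observed choices are most likely.

```python
import math

# Hypothetical sketch: one-parameter inverse RL by maximum likelihood.
# Assumed reward for an option: own_points + w * partner_points, where w is
# the unknown "altruism weight" we want to recover from observed behavior.


def log_likelihood(w, demos, beta=1.0):
    """Log-probability of the observed choices for a Boltzmann-rational chooser.

    demos: list of (options, chosen_index); each option is (own_points, partner_points).
    beta: assumed rationality (inverse temperature); higher means less noisy choices.
    """
    total = 0.0
    for options, chosen in demos:
        scores = [beta * (own + w * partner) for own, partner in options]
        top = max(scores)  # subtract the max before exponentiating, for stability
        log_norm = top + math.log(sum(math.exp(s - top) for s in scores))
        total += scores[chosen] - log_norm
    return total


def infer_altruism_weight(demos, candidates=None):
    """Return the candidate weight that best explains the demonstrations."""
    if candidates is None:
        candidates = [i / 100 for i in range(0, 301)]  # 0.00 .. 3.00
    return max(candidates, key=lambda w: log_likelihood(w, demos))


# Toy demonstrations (invented for illustration, not study data):
# option 0 keeps an onion (+1 point for me), option 1 gives it away (+1 for the partner).
KEEP_OR_GIVE = [(1.0, 0.0), (0.0, 1.0)]
mostly_keeps = [(KEEP_OR_GIVE, 1)] * 3 + [(KEEP_OR_GIVE, 0)] * 7
mostly_gives = [(KEEP_OR_GIVE, 1)] * 7 + [(KEEP_OR_GIVE, 0)] * 3

if __name__ == "__main__":
    print(infer_altruism_weight(mostly_keeps))
    print(infer_altruism_weight(mostly_gives))
```

In the first toy set, the onion goes to the partner 3 times out of 10. In the second, it goes to the partner 7 times out of 10. The second set yields a much larger weight (about 1.85, versus about 0.15). The absolute numbers depend entirely on the assumed reward form and on beta. Only the ordering carries meaning here.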

This approach is much closer to how humans learn social norms and values. The AI is not copying actions blindly; it is extracting meaning from behavior.

Using a Video Game to Study Altruism

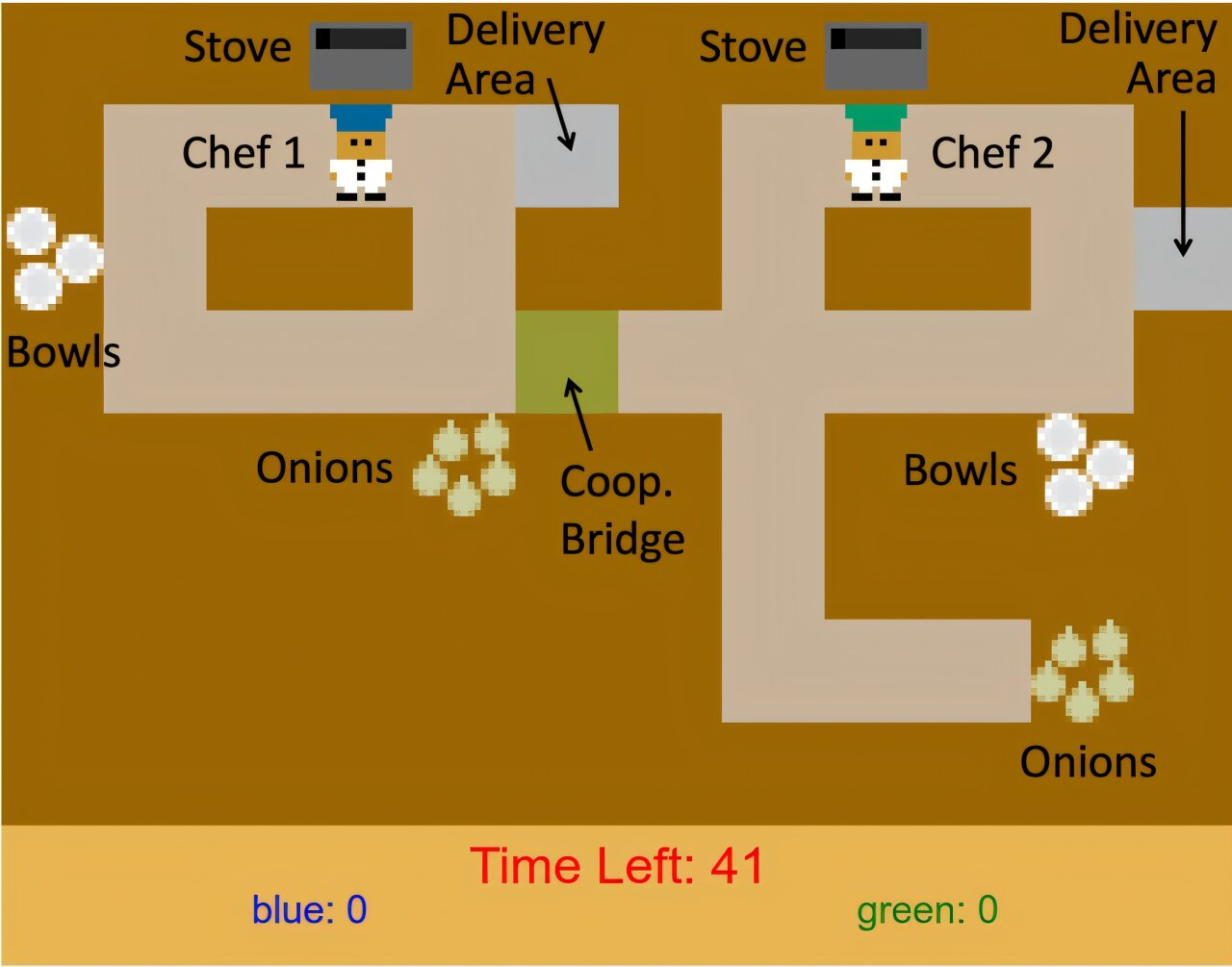

To test their idea, the researchers designed an experiment using a modified version of the cooperative video game Overcooked. In the game, players work together to prepare and deliver onion soup as efficiently as possible.

In the study’s version of the game, players could see into a neighboring kitchen where another player faced a clear disadvantage. That second player had to travel farther to complete the same tasks and occasionally requested help.

Participants had a choice: they could give away onions to help the other player, but doing so reduced their own ability to score points and deliver soup. Helping came at a personal cost, making it a meaningful test of altruistic behavior.
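The dilemma can be written as a simple utility comparison. The sketch below is our own illustration, not the scoring used in the study. It assumes that giving an onion away costs the helper some expected points and gains the partner some. An agent then helps when its altruism weight times the partner's gain outweighs its own loss. The HelpRequest fields and the point values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class HelpRequest:
    """A hypothetical request for an onion, described by its expected point effects."""
    cost_to_self: float        # points the helper expects to lose by giving the onion away
    benefit_to_partner: float  # points the partner expects to gain from receiving it


def utility(own_points: float, partner_points: float, altruism_weight: float) -> float:
    """Assumed utility: own payoff plus a weighted share of the partner's payoff."""
    return own_points + altruism_weight * partner_points


def decides_to_help(request: HelpRequest, altruism_weight: float) -> bool:
    """Help only if giving the onion away scores strictly higher than keeping it."""
    help_score = utility(-request.cost_to_self, request.benefit_to_partner, altruism_weight)
    keep_score = utility(0.0, 0.0, altruism_weight)
    return help_score > keep_score


def break_even_weight(request: HelpRequest) -> float:
    """Weight at which helping and keeping score exactly the same."""
    return request.cost_to_self / request.benefit_to_partner


if __name__ == "__main__":
    request = HelpRequest(cost_to_self=2.0, benefit_to_partner=4.0)
    print(break_even_weight(request))     # 0.5
    print(decides_to_help(request, 0.3))  # False
    print(decides_to_help(request, 0.8))  # True
```

Because helping has a real cost, only agents whose weight exceeds the break-even point choose to help. This is what makes the choice informative about the value behind it.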

Unbeknownst to the participants, the disadvantaged player was actually a bot programmed to ask for assistance in a consistent way.

Who Participated in the Study

The research team recruited 300 adult participants divided into two self-identified cultural groups:

- 190 participants who identified as white

- 110 participants who identified as Latino

Each group’s gameplay data was used to train a separate AI agent. The AI systems observed how often participants chose to help, how much help they gave, and how they balanced personal success against assisting others.
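Below is a minimal sketch of the "one agent per group" idea. It uses a simple logistic choice model that we chose for illustration, and the paper's IRL formulation may differ. The model assumes each person values helping as the altruism weight times the partner's gain, minus their own cost. It also assumes that the probability of helping rises smoothly with that net value. The sketch inverts this rule to turn each group's help-or-keep record into its own weight. The group names, counts, cost, and benefit are all hypothetical and do not reflect the study's data.

```python
import math
from dataclasses import dataclass


@dataclass
class GroupRecord:
    """Hypothetical summary of one group's help-or-keep decisions."""
    helped: int  # number of requests where the participant gave an onion away
    total: int   # number of help requests the group saw


def fit_altruism_weight(record: GroupRecord, cost: float, benefit: float,
                        beta: float = 1.0) -> float:
    """Invert an assumed logistic choice rule to recover the altruism weight.

    Assumed model: P(help) = 1 / (1 + exp(-beta * (w * benefit - cost))).
    Add-one (Laplace) smoothing keeps P(help) strictly between 0 and 1.
    """
    p_help = (record.helped + 1) / (record.total + 2)
    log_odds = math.log(p_help / (1 - p_help))
    return (cost + log_odds / beta) / benefit


def train_agents(records: dict[str, GroupRecord], cost: float,
                 benefit: float) -> dict[str, float]:
    """Fit a separate weight, and hence a separate agent, for each group."""
    return {name: fit_altruism_weight(rec, cost, benefit) for name, rec in records.items()}


if __name__ == "__main__":
    # Invented counts for two unnamed groups (not the study's data).
    toy_records = {"group_a": GroupRecord(helped=5, total=10),
                   "group_b": GroupRecord(helped=8, total=10)}
    print(train_agents(toy_records, cost=2.0, benefit=4.0))
```

Keeping the groups' data separate is the key design choice. Pooling all records into one fit would produce a single averaged weight and erase the very difference the study set out to capture.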

What the Researchers Found

The results were clear. On average, participants in the Latino group chose to help more often than participants in the white group. This difference aligned with earlier research on cultural patterns of altruism.

More importantly, the AI agents trained on each group’s data learned those same behavioral tendencies.

When the AI agents later played the game themselves:

- The agent trained on Latino participant data gave away more onions

- The agent trained on white participant data was less likely to sacrifice its own score

The AI did not just mimic individual actions. It appeared to have learned a general value for altruism associated with the cultural data it observed.

Testing Whether the AI Truly Learned Values

To see if the AI had learned something deeper than game-specific behavior, the researchers introduced a new and unrelated scenario.

In this second experiment, the AI agents were asked to decide whether to donate a portion of their money to someone in need. This task had nothing to do with cooking or video games.

Once again, the results matched expectations. The agent trained on Latino gameplay data was more willing to donate, while the agent trained on white participant data was more conservative. This indicated that the AI had generalized the learned value of altruism beyond the original context.
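Why would a weight learned in a cooking game say anything about donations? In the IRL view, what transfers is the reward parameter, not the actions. The sketch below is a hypothetical illustration, not the paper's donation task. It plugs a learned altruism weight into a new utility: money kept, plus the weight times an assumed diminishing-returns benefit to the recipient. The square-root form and the need_scale parameter are our assumptions. The function then picks the whole-unit donation with the highest score.

```python
import math


def best_donation(altruism_weight: float, endowment: int = 10,
                  need_scale: float = 4.0) -> int:
    """Pick the whole-unit donation that maximizes an assumed utility.

    Assumed utility: (endowment - d) + altruism_weight * need_scale * sqrt(d),
    i.e. money kept plus a weighted, diminishing-returns benefit to the recipient.
    """
    def score(d: int) -> float:
        return (endowment - d) + altruism_weight * need_scale * math.sqrt(d)

    return max(range(endowment + 1), key=score)


if __name__ == "__main__":
    # The same kind of weight an agent might have learned elsewhere, reused unchanged.
    for w in (0.2, 0.5, 1.0):
        print(w, best_donation(w))  # donations of 0, 1, and 4 units respectively
```

Nothing about onions appears in this function. The only thing carried over is the weight, which is the sense in which a learned value, rather than a learned action, can generalize.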

Why This Matters for the Future of AI

These findings suggest a promising path toward culturally attuned AI systems. Instead of embedding rigid moral rules, developers could expose AI models to culture-specific examples and allow them to learn values organically.

Such an approach could help AI systems:

- Adapt to local social norms

- Avoid cultural misunderstandings

- Interact more respectfully with diverse communities

The researchers emphasize that this was a proof-of-concept study. It focused on a limited number of cultural groups and relatively simple decision-making tasks. Real-world applications would involve more complexity, competing values, and ethical safeguards.

Still, the results show that value learning through observation is possible and surprisingly robust, at least in controlled settings like these.

How This Fits into Broader AI Research

Inverse reinforcement learning has long been studied in robotics and autonomous systems, especially for tasks where goals are difficult to define explicitly. This study extends IRL into the social and cultural domain, showing it can capture abstract human values, not just physical actions.

It also connects AI research with developmental psychology, reinforcing the idea that learning from observation is a powerful and natural way to acquire social knowledge.

As AI systems become more integrated into daily life, from virtual assistants to healthcare tools, understanding how they learn values will become increasingly important.

What Comes Next

The researchers note several open questions that need further exploration:

- How this method performs across many more cultural groups

- How AI handles conflicting values within the same society

- Whether observational learning can scale to complex, real-world decisions

Despite these challenges, the study offers a hopeful message. Rather than imposing a one-size-fits-all moral framework, AI systems may one day learn to understand us by watching us, just as children do.

Research paper:

Culturally-attuned AI: Implicit learning of altruistic cultural values through inverse reinforcement learning

https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0337914