AI “CHEF” Could Help People With Cognitive Decline Complete Everyday Home Tasks

In the United States, about 11% of adults over the age of 45 report experiencing some level of cognitive decline. While this decline may be mild, it can still interfere with everyday activities that many people take for granted, such as cooking meals, managing medications, paying bills, or making phone calls. These challenges often reduce independence and increase reliance on caregivers. A new research project led by scientists and clinicians at Washington University in St. Louis explores how artificial intelligence could help bridge this gap and allow people to live independently for longer.

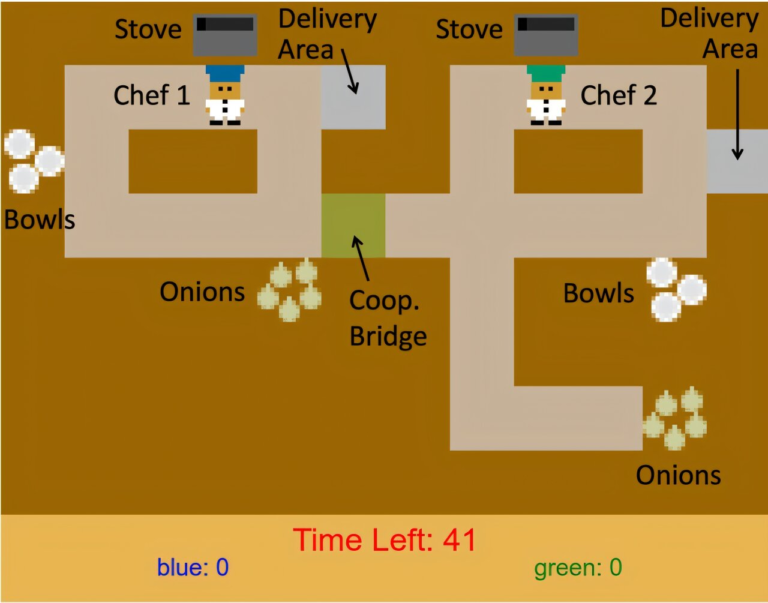

Researchers have developed a system known as CHEF-VL, short for Cognitive Human Error Detection Framework with Vision-Language models. This AI-based framework is designed to observe how people complete everyday tasks and identify when something goes wrong, particularly errors related to task sequencing, which are common in individuals experiencing cognitive decline.

A Collaboration Between Computer Science and Occupational Therapy

The CHEF-VL project is the result of a close collaboration between computer scientists and occupational therapists, an approach that allowed the team to combine technical expertise with clinical insight. The research was led by Ruiqi Wang, a doctoral student working under Chenyang Lu, the Fullgraf Professor of Computer Science at the McKelvey School of Engineering and director of WashU’s AI for Health Institute. On the clinical side, Wang worked closely with Lisa Tabor Connor, associate dean and director of occupational therapy at WashU Medicine, along with her research team.

To develop and test the system, the team collected video data from more than 100 individuals, including people with and without self-reported cognitive decline. Each participant was asked to complete a structured everyday task while being observed and recorded. This dataset became the foundation for training and evaluating the AI system.

Why Cooking Was Chosen as the Test Task

Occupational therapists often assess daily functioning using the Executive Function Performance Test, which includes four core activities: cooking, making a phone call, paying bills, and taking medications. For this study, the team selected cooking because it is a complex activity that involves planning, sequencing, memory, and safety awareness.

The researchers built a smart kitchen equipped with an overhead camera system. Participants were asked to prepare a simple meal: oatmeal cooked on a stovetop. While the task sounds straightforward, it involves many steps that must be completed in the correct order.

Participants were given step-by-step instructions, including gathering ingredients, boiling water, adding oats, cooking the mixture for two minutes, stirring, serving the oatmeal, and finally returning used dishes to the sink. Throughout the task, occupational therapy students closely monitored performance, watching for errors or safety issues such as boiling water spilling over the pot. When needed, they provided supportive cues to keep participants safe.

How the CHEF-VL System Works

The CHEF-VL framework analyzes cooking performance using vision-language models, a class of AI systems that can process text, images, and video together. This departs from earlier approaches, which relied heavily on hand-crafted rules or wearable sensors.

One part of the system focuses on recognizing human actions, such as stirring, pouring, or placing items on the stove. Another component tracks environmental changes, such as whether water is boiling or ingredients have been added. These streams of information are combined using an algorithm designed to detect cognitive sequencing errors, such as skipping a step, repeating a step, or performing actions out of order.
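To make the three error types concrete, here is a minimal sketch of how skipped, repeated, and out-of-order steps could be flagged once the actions have already been recognized. It is an illustration only, not the algorithm from the CHEF-VL paper: the step labels, the `detect_sequencing_errors` function, and the rule used for "out of order" (a step first performed after a step that should come later) are all assumptions made for this example.

```python
# Hypothetical step labels, loosely following the oatmeal instructions
# described in the article. These names are assumptions, not from the paper.
EXPECTED_STEPS = [
    "gather_ingredients",
    "boil_water",
    "add_oats",
    "cook_two_minutes",
    "stir",
    "serve",
    "return_dishes",
]


def detect_sequencing_errors(expected, observed):
    """Return a list of (error_type, step) tuples.

    expected: the scripted order of steps.
    observed: step labels in the order they were recognized.
    """
    position = {step: i for i, step in enumerate(expected)}
    errors = []
    seen = set()
    furthest = -1  # highest scripted position reached so far

    for step in observed:
        if step not in position:
            continue  # ignore actions that are not part of the script
        if step in seen:
            errors.append(("repeated", step))
            continue
        seen.add(step)
        if position[step] < furthest:
            # First performed after a step that belongs later in the script.
            errors.append(("out_of_order", step))
        else:
            furthest = position[step]

    # Any scripted step that never appeared is treated as omitted.
    for step in expected:
        if step not in seen:
            errors.append(("omitted", step))
    return errors


observed = ["gather_ingredients", "add_oats", "boil_water", "stir", "stir", "serve"]
for error_type, step in detect_sequencing_errors(EXPECTED_STEPS, observed):
    print(error_type, step)
```

In this toy run the oats go in before the water boils, stirring happens twice, and two steps never occur, so all three error types appear. A real system would also have to cope with uncertain action recognition and with the environmental cues the article mentions, which this sketch leaves out.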

Importantly, the system accounts for the fact that even people without cognitive decline make mistakes while cooking. The goal is not to eliminate all errors, but to identify patterns that suggest cognitive difficulty and to understand which errors are more challenging for AI systems to detect reliably.

Validating AI Against Human Observation

To measure the system’s accuracy, Connor’s occupational therapy team manually coded every error made during the cooking task. These human annotations served as the reference point for evaluating how well the AI detected the same mistakes.

By comparing AI predictions with expert human observations, the researchers were able to determine which types of errors were detected successfully and which required further refinement. This iterative process allowed both teams to improve the system’s performance and align it more closely with real-world clinical assessments.
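One common way to run this kind of comparison is to compute precision and recall separately for each error type, which shows at a glance which errors the model catches and which it misses. The sketch below assumes that approach; the article does not say which metrics the team used, and the `per_type_scores` function, the tuple layout, and the sample data are all invented for illustration.

```python
def per_type_scores(predicted, annotated):
    """Compare predicted errors with human-coded errors, per error type.

    predicted, annotated: sets of (participant_id, error_type, step) tuples.
    Returns {error_type: (precision, recall)}.
    """
    error_types = {item[1] for item in predicted | annotated}
    scores = {}
    for error_type in sorted(error_types):
        pred = {item for item in predicted if item[1] == error_type}
        gold = {item for item in annotated if item[1] == error_type}
        true_positives = len(pred & gold)
        # Define the score as 0.0 when there is nothing to divide by.
        precision = true_positives / len(pred) if pred else 0.0
        recall = true_positives / len(gold) if gold else 0.0
        scores[error_type] = (precision, recall)
    return scores


# Made-up example data, not results from the study.
annotated = {
    ("p1", "omitted", "stir"),
    ("p1", "repeated", "add_oats"),
    ("p2", "omitted", "serve"),
}
predicted = {
    ("p1", "omitted", "stir"),
    ("p2", "repeated", "stir"),
}

for error_type, (precision, recall) in per_type_scores(predicted, annotated).items():
    print(f"{error_type}: precision={precision:.2f} recall={recall:.2f}")
```

Breaking the scores out by error type, rather than reporting a single overall number, matches the article's point that some kinds of errors were detected well while others needed further refinement.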

Why Vision-Language Models Matter

Traditional cognitive assessments are often paper-based tests administered in clinical settings. While useful, these tests do not always reflect how a person functions in everyday life. According to the researchers, vision-language models offer a way to observe real-world behavior in natural environments, providing insights that conventional tests may miss.

These models also allow for flexibility in how tasks are completed, recognizing that people may perform the same activity in different ways. This adaptability is crucial for building AI systems that work across diverse households and routines.

Recognition and Research Impact

The CHEF-VL system was formally introduced in a research paper published in December in the Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies. The work will also be presented at UbiComp/ISWC 2026, a major international conference focused on ubiquitous and wearable computing.

The impact of the research extends beyond publications. Ruiqi Wang received a 2025 Google Ph.D. Fellowship, becoming the first student from McKelvey Engineering to earn this highly competitive award. The fellowship recognizes innovative research with strong real-world potential, particularly in health-related fields.

Future Directions and Real-World Use

While promising, the CHEF-VL system is not yet ready for everyday home deployment. Researchers emphasize that additional work is needed to improve reliability, expand the range of tasks the system can handle, and ensure safety in real-world settings.

The long-term vision is to create a nonintrusive AI assistant that supports people with mild cognitive decline without replacing human caregivers. By offering timely prompts or feedback, such systems could help individuals remain in their own homes longer while maintaining confidence and independence.

How This Fits Into the Broader Assistive Technology Landscape

AI-based assistive technologies are becoming increasingly important as populations age worldwide. Systems like CHEF-VL represent a growing trend toward context-aware AI, where technology adapts to human behavior rather than forcing people to adapt to rigid systems.

In the future, similar frameworks could be applied to tasks beyond cooking, including medication management, financial tasks, and household organization. Combined with advances in privacy-preserving AI and smart home infrastructure, these systems may play a key role in community health and aging in place.

Looking Ahead

The researchers involved in CHEF-VL see this project as an initial step rather than a finished product. Their goal is to continue refining the technology so it can support independence, improve self-confidence, and reduce caregiver burden. With ongoing collaboration between clinicians and technologists, AI systems like CHEF-VL could eventually become practical tools in everyday homes.

Research paper: https://doi.org/10.1145/3770714