Guided Learning Shows How Previously Untrainable Neural Networks Can Finally Learn

For years, some neural network architectures have carried an unfortunate label: “untrainable.” These are models that, despite repeated attempts, tend to overfit, collapse during training, or fail to learn anything meaningful on modern machine-learning tasks. A new study from researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) challenges that label. Their work shows that many of these problematic networks are not fundamentally broken. Instead, they often just need the right kind of early guidance to unlock their potential.

The research introduces a method called guided learning, which helps neural networks learn by briefly aligning them with another network during training. In the study, this approach dramatically improved performance in architectures that were previously considered impractical for real-world use. The findings suggest that what we often call “untrainable” may really be a problem of poor initialization, meaning an unfavorable starting point in parameter space, rather than flawed network design.

At the heart of this work is a simple but powerful idea: neural networks do not just learn from data. They also inherit strong inductive biases from their architecture, and these biases can be transferred from one network to another in surprisingly effective ways.

What Guided Learning Actually Does

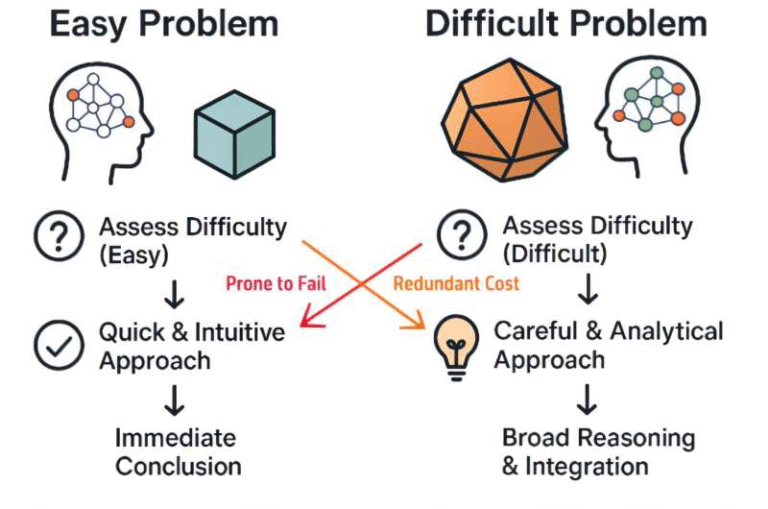

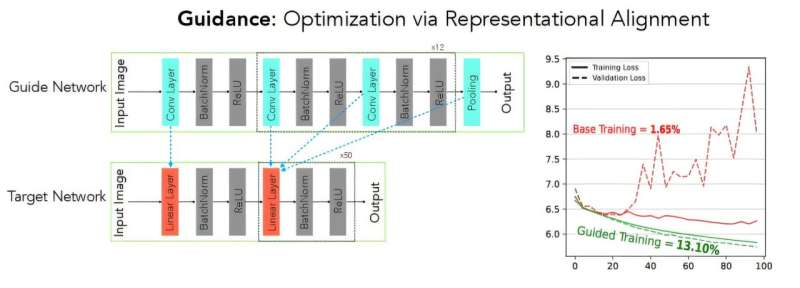

Guided learning works by encouraging a target neural network to match the internal representations of another network, known as the guide. Instead of focusing on final predictions or outputs, the method aligns the hidden layer activations between the two models. In other words, the target network is nudged to organize information internally in a way that resembles how the guide network does it.

This is an important distinction from traditional knowledge distillation, where a student model tries to copy the outputs of a teacher model. Knowledge distillation depends heavily on the teacher being well trained. If the teacher is untrained or poorly trained, the student gains little or nothing. Guided learning, on the other hand, does not rely on meaningful outputs. It relies on representational similarity, which turns out to be useful even when the guide network has never seen real data.

The researchers used similarity measures to compare layer-by-layer representations and added a guidance loss term during training. This extra loss encourages alignment early on but does not need to remain active throughout the entire training process.
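To make the idea of a guidance loss concrete, here is a minimal sketch in plain Python. It assumes linear centered kernel alignment (CKA) as the similarity measure, which is one commonly used choice; the article itself does not say which measure the researchers used, and the function names (`linear_cka`, `guidance_loss`) are hypothetical. Each activation matrix is a list of rows, one row per input example.

```python
import math

def center_columns(acts):
    """Subtract each column's mean so every feature has zero mean over the batch."""
    n = len(acts)
    means = [sum(col) / n for col in zip(*acts)]
    return [[v - m for v, m in zip(row, means)] for row in acts]

def cross_gram_sq_norm(a, b):
    """Squared Frobenius norm of a^T b for row-major matrices a (n x p) and b (n x q)."""
    total = 0.0
    for col_a in zip(*a):
        for col_b in zip(*b):
            dot = sum(x * y for x, y in zip(col_a, col_b))
            total += dot * dot
    return total

def linear_cka(x, y):
    """Linear CKA between two activation matrices computed on the same examples."""
    x, y = center_columns(x), center_columns(y)
    numerator = cross_gram_sq_norm(x, y)
    denominator = math.sqrt(cross_gram_sq_norm(x, x) * cross_gram_sq_norm(y, y))
    return numerator / denominator if denominator > 0 else 0.0

def guidance_loss(target_layers, guide_layers):
    """Average dissimilarity (1 - CKA) over paired layers; 0 means fully aligned."""
    pairs = list(zip(target_layers, guide_layers))
    return sum(1.0 - linear_cka(t, g) for t, g in pairs) / len(pairs)
```

In a real training setup, a term like this would be computed inside an automatic-differentiation framework and added to the ordinary task loss. Because linear CKA ignores rotations and uniform rescaling of the features, the target is pushed to match the guide's representational geometry rather than its exact activation values.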

A Short Alignment Phase With Long-Lasting Effects

One of the most interesting questions explored in the study was whether guidance needs to be applied continuously or whether it mainly serves as a better starting point. To answer this, the researchers ran experiments using deep fully connected networks, a type of model known for overfitting almost immediately on complex tasks.

Before training these networks on real data, the researchers let them spend a few steps aligning with another network using random noise inputs. This brief “practice session” acted like a warm-up. After this short alignment phase, guidance was removed entirely.
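The two-phase schedule described above can be sketched as follows. This is only an illustration under stated assumptions: the hard switch from alignment to task training, the Gaussian noise inputs, and the names `noise_batch`, `guidance_weight`, and `training_loss` are all hypothetical and not taken from the study.

```python
import random

def noise_batch(batch_size, input_dim, rng):
    """Random Gaussian inputs; no real data is needed during the alignment phase."""
    return [[rng.gauss(0.0, 1.0) for _ in range(input_dim)] for _ in range(batch_size)]

def guidance_weight(step, warmup_steps):
    """1.0 during the short alignment phase, 0.0 once guidance is removed."""
    return 1.0 if step < warmup_steps else 0.0

def training_loss(step, warmup_steps, task_loss, guidance_loss):
    """Phase 1 optimizes only alignment; phase 2 optimizes only the real task."""
    w = guidance_weight(step, warmup_steps)
    return w * guidance_loss + (1.0 - w) * task_loss
```

During the first `warmup_steps` updates, the target would be fed batches from `noise_batch` and trained only to match the guide. After that, the guide can be discarded and training continues on real data with the task loss alone.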

The results were striking. Networks that normally collapsed or overfit early remained stable, achieved lower training loss, and avoided the severe performance degradation that typically plagues standard fully connected networks. Even without ongoing guidance, the benefits persisted throughout training.

This showed that guidance is not just a crutch. It can function as a powerful initialization strategy, placing networks into regions of parameter space where learning becomes much easier.

Why Untrained Guides Still Work

One of the most surprising findings in the study is that untrained guide networks can still be incredibly useful. When the researchers tried knowledge distillation using an untrained teacher, it failed completely, as expected. The outputs of an untrained model contain no meaningful signal.

Guided learning behaved very differently. Even when the guide network was randomly initialized and never trained, aligning internal representations still produced strong improvements in the target network’s performance. This happens because untrained networks still encode architectural structure. Their layers, connectivity patterns, and activation flows impose biases on how information is processed.

By aligning with these internal structures, the target network inherits useful organizational principles without copying meaningless outputs. This finding highlights a crucial insight: architecture alone carries valuable information, separate from learned weights or data exposure.
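As a rough illustration of what an untrained guide provides, the sketch below builds a small randomly initialized network and records its hidden activations. Everything here is an assumption made for the example: the fully connected guide is a stand-in for whatever guide architecture is actually used, the He-style weight scaling is an illustrative choice, and `init_mlp` and `hidden_activations` are hypothetical helper names.

```python
import random

def init_mlp(layer_sizes, rng):
    """Random weights for a small fully connected guide; it is never trained."""
    weights = []
    for fan_in, fan_out in zip(layer_sizes, layer_sizes[1:]):
        scale = (2.0 / fan_in) ** 0.5  # He-style scaling, an illustrative choice
        weights.append(
            [[rng.gauss(0.0, scale) for _ in range(fan_out)] for _ in range(fan_in)]
        )
    return weights

def hidden_activations(weights, batch):
    """Forward pass with ReLU, returning the activations of every layer."""
    layers, current = [], batch
    for w in weights:
        current = [
            [max(0.0, sum(x * w_row[j] for x, w_row in zip(row, w)))
             for j in range(len(w[0]))]
            for row in current
        ]
        layers.append(current)
    return layers
```

Even though the weights are random, the per-layer activations are a fixed function of the input that is shaped by the guide's depth, widths, and nonlinearity. Those activations are what a representational alignment loss would compare against, and the guide's final outputs are never used.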

Making “Bad” Architectures Useful Again

The researchers tested guided learning across a wide range of architectures that are typically seen as problematic:

- Fully connected networks (FCNs), which often overfit badly on image datasets

- Plain convolutional networks without residual connections

- Recurrent neural networks, which struggle on certain sequence tasks

- Transformers, particularly in setups where they lack strong inductive biases

In many cases, guided learning dramatically narrowed the performance gap between these architectures and more modern, carefully engineered designs. Networks that were once dismissed as outdated or ineffective suddenly became competitive.

This does not mean that architectural innovations like residual connections or attention mechanisms are unimportant. Instead, it suggests that some of their benefits may come from placing networks in favorable regions of parameter space, something guided learning can also achieve.

Rethinking Why Neural Networks Fail

Beyond performance improvements, the study has deeper implications for how researchers think about neural network training. The results suggest that failure often has less to do with the task or the dataset and more to do with where the network starts its learning journey.

By separating architectural bias from learned knowledge, guided learning provides a new tool for studying neural network design. Researchers can examine how easily one architecture can guide another and use that information to understand functional similarities and differences between models.

This opens the door to more principled ways of comparing architectures, rather than relying solely on benchmark results. It may also help explain why some networks train smoothly while others struggle, even when they appear similar on paper.

Representational Similarity as a Research Tool

The method relies on measuring representational similarity between networks, an idea that has been gaining traction in recent years. By analyzing how different models encode information internally, researchers can uncover hidden structures that are not visible from outputs alone.

Guided learning takes this concept a step further by actively using representational similarity to shape training dynamics. This makes it not just an analysis tool, but a practical training technique. Over time, this approach could help identify which components of network design truly matter and which are less critical than previously thought.

What This Means for the Future of Machine Learning

The biggest takeaway from this research is that “untrainable” does not mean hopeless. Many architectures fail not because they lack capacity or expressiveness, but because they start in the wrong place. With even brief guidance, these networks can learn effectively, avoid common failure modes, and reach performance levels once thought out of reach.

The CSAIL team plans to further investigate which architectural elements contribute most to these improvements and how guided learning might influence future model design. There is also interest in exploring whether guidance can reduce training costs or make simpler architectures viable alternatives to increasingly large and complex models.

In a field that often focuses on building ever bigger and more complicated systems, this work is a reminder that how we train networks can matter just as much as what we train. Guided learning offers a fresh perspective on old problems and a promising path toward better, more efficient machine learning.

Research paper: https://arxiv.org/abs/2410.20035