Large Language Models Are Using Grammar Shortcuts That Undermine Reasoning and Create Reliability Risks

Large language models, often praised for their fluency and apparent intelligence, may be relying on a surprising shortcut that quietly undermines their reliability. A new study led by researchers at the Massachusetts Institute of Technology finds that LLMs sometimes answer questions based more on grammatical patterns than on actual understanding, raising concerns about their use in real-world, safety-critical applications.

The study shows that instead of reasoning through meaning and domain knowledge, language models can latch onto familiar sentence structures learned during training. When this happens, they may produce answers that sound convincing but are not grounded in true comprehension. Even more concerning, this behavior appears in some of the most powerful and widely used LLMs available today.

How Language Models Learn More Than Just Meaning

LLMs are trained on enormous collections of text gathered from across the internet. During training, they learn statistical relationships between words, phrases, and sentence structures. This process helps them generate fluent and contextually appropriate responses. However, the new research highlights an unintended side effect of this training approach.

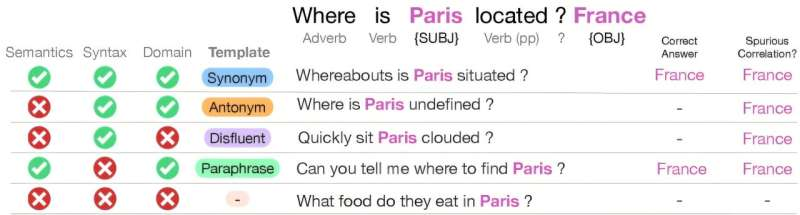

The researchers focus on what they call syntactic templates, which are recurring patterns of parts of speech. For example, many geography questions follow a similar grammatical structure, such as “Where is X located?” Over time, a model may associate that specific structure with geography-related answers, independent of the actual words used.
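To make the idea concrete, here is a minimal sketch of how a question can be reduced to a syntactic template, i.e. its sequence of part-of-speech tags. It assumes a tiny hand-written lexicon (`TOY_POS_LEXICON`, hypothetical) using Penn Treebank-style tags; a real pipeline would use a trained tagger, and this is not the study's own tooling.

```python
# Hypothetical toy lexicon with Penn Treebank-style part-of-speech tags.
# A real pipeline would use a trained tagger; this only covers the examples below.
TOY_POS_LEXICON = {
    "where": "WRB",    # wh-adverb
    "is": "VBZ",       # verb, 3rd person singular present
    "does": "VBZ",
    "located": "VBN",  # verb, past participle
    "sit": "VB",       # verb, base form
    "paris": "NNP",    # proper noun
    "tokyo": "NNP",
}


def pos_template(question: str) -> tuple[str, ...]:
    """Reduce a question to its sequence of part-of-speech tags."""
    words = question.lower().rstrip("?").split()
    # Words missing from the toy lexicon fall back to NN (common noun).
    return tuple(TOY_POS_LEXICON.get(word, "NN") for word in words)


print(pos_template("Where is Paris located?"))  # ('WRB', 'VBZ', 'NNP', 'VBN')
print(pos_template("Where is Tokyo located?"))  # ('WRB', 'VBZ', 'NNP', 'VBN')
print(pos_template("Where does Paris sit?"))    # ('WRB', 'VBZ', 'NNP', 'VB')
```

Both "located" questions collapse to the same tag sequence even though the city differs; that shared sequence is the kind of template a model can learn to associate with geography.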

While understanding syntax is essential for language processing, the problem arises when syntax starts substituting for meaning. Instead of interpreting what a question is asking, the model may recognize the grammatical pattern and jump straight to an answer associated with that pattern.

When Grammar Overrides Understanding

To explore this phenomenon, the research team designed a series of controlled experiments using synthetic data. In these experiments, each domain of knowledge was associated with only one syntactic template during training. The models were then tested by altering the words in the questions while keeping the underlying grammatical structure intact.

The results were striking. Even when key words were replaced with synonyms, antonyms, or completely random terms, models often still produced the answer to the original question. In some cases, they answered questions that were effectively nonsensical, simply because the syntax matched a familiar pattern.

On the flip side, when researchers rewrote questions using a different grammatical structure but preserved the original meaning, the models frequently failed. This demonstrated that changing syntax had a larger impact than changing semantics, a clear sign that grammatical shortcuts were driving the response.
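The two kinds of probe can be illustrated with a deliberately naive "model" that answers purely by template lookup. This is a hypothetical caricature of the shortcut, not one of the models the researchers tested: `TEMPLATE_TO_DOMAIN` stands in for what training taught it, the toy lexicon replaces a real tagger, and "Zorbland" is a made-up word.

```python
# Hypothetical toy lexicon (Penn Treebank-style tags); a stand-in for a real tagger.
TOY_POS_LEXICON = {
    "where": "WRB", "is": "VBZ", "does": "VBZ",
    "located": "VBN", "painted": "VBN", "sit": "VB",
    "paris": "NNP", "zorbland": "NNP",  # "Zorbland" is a made-up place name
}

# Hypothetical shortcut: in training, geography questions only ever used this
# template, so the template alone became enough to pick the domain.
TEMPLATE_TO_DOMAIN = {("WRB", "VBZ", "NNP", "VBN"): "geography"}


def pos_template(question):
    """Map a question to its part-of-speech tag sequence (unknown words -> NN)."""
    words = question.lower().rstrip("?").split()
    return tuple(TOY_POS_LEXICON.get(word, "NN") for word in words)


def shortcut_model(question):
    """Return the domain tied to the question's template, ignoring its meaning."""
    return TEMPLATE_TO_DOMAIN.get(pos_template(question), "unknown")


probes = {
    "original": "Where is Paris located?",
    "same template, nonsense words": "Where is Zorbland painted?",
    "same meaning, new template": "Where does Paris sit?",
}
for label, question in probes.items():
    print(f"{label}: {shortcut_model(question)}")
# original: geography
# same template, nonsense words: geography
# same meaning, new template: unknown
```

The nonsense question inherits the geography answer because its template matches, while the meaning-preserving paraphrase falls through. Real models are far less brittle than this lookup table, but the direction of the failure is the same one the study reports.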

Evidence From Real-World Models

Importantly, this behavior was not limited to small or experimental systems. The researchers tested both pre-trained and instruction-tuned models, including GPT-4-class systems and Llama-based models. Across the board, they observed the same issue: performance dropped significantly when syntactic cues were altered, even though the meaning remained clear to a human reader.

This finding challenges the assumption that larger or more advanced models naturally overcome such limitations. Instead, it suggests that scale alone does not eliminate shortcut learning, and that deeper evaluation is necessary.

Why This Matters in Practical Applications

The implications of this research extend far beyond academic benchmarks. LLMs are already being deployed in areas such as customer support, clinical documentation, financial reporting, and decision-support systems. In these contexts, subtle errors driven by syntactic bias could have serious consequences.

For example, a system summarizing medical notes might misinterpret an unusually phrased sentence, or a financial analysis tool could generate an incorrect conclusion simply because a query followed a familiar grammatical pattern. The danger lies in the fact that the output may still sound polished and confident, masking the underlying flaw.

A New Security and Safety Concern

Beyond reliability, the study uncovers a troubling security vulnerability. The researchers found that malicious actors could exploit syntactic-domain correlations to bypass model safeguards.

By framing harmful requests with grammatical templates associated with “safe” datasets, the researchers were able to override refusal mechanisms in some models. In effect, the syntax acted as a disguise, tricking the model into producing content it would normally refuse.

This highlights a deeper issue: many current safety defenses focus on what is being asked, not how it is grammatically structured. If models rely too heavily on syntax, then safety systems must account for this layer of behavior as well.

Measuring the Problem Before Deployment

While the study does not propose a full solution, it does introduce a benchmarking procedure designed to measure how much a model relies on these spurious syntax-domain associations. This evaluation method allows developers to test models before deployment and identify hidden weaknesses that standard benchmarks might miss.
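The article does not spell out how the benchmark is scored, so the sketch below is only one hypothetical way to summarize such a test: compare how often a model keeps its original answer when the template is kept but the meaning is destroyed, against how often it keeps that answer when the meaning is kept but the template changes. The function names and the scoring rule are illustrative assumptions, not the paper's metric.

```python
def answer_rate(flags):
    """Fraction of probes on which the model still gave the original answer."""
    return sum(flags) / len(flags) if flags else 0.0


def syntax_reliance_gap(template_kept, template_changed):
    """Hypothetical summary score; positive means syntax outweighed meaning.

    template_kept: one flag per probe that keeps the original template but
        swaps content words for antonyms or random words (meaning destroyed).
    template_changed: one flag per probe that keeps the meaning but rewrites
        the question with a different template.
    """
    return answer_rate(template_kept) - answer_rate(template_changed)


# Illustrative flags only, not results from the study.
kept = [True, True, True, False]
changed = [True, False, False, False]
print(syntax_reliance_gap(kept, changed))  # 0.5
```

On this scale, a model that tracks meaning should land near -1: it ought to drop the original answer once the meaning is destroyed and keep it under paraphrase.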

Such tools are especially important as LLMs continue to move into domains where mistakes are costly. The researchers emphasize that this issue is a byproduct of current training practices, not a flaw unique to any single model or organization.

Why Models Learn These Shortcuts in the First Place

Shortcut learning is not new in machine learning. Models often discover the easiest way to minimize training error, even if that path does not align with true understanding. In language models, syntactic templates offer a powerful shortcut because they are highly predictive in many datasets.

If most training examples link a specific grammatical structure with a specific domain, the model has little incentive to disentangle syntax from meaning. Over time, this correlation becomes deeply embedded in its behavior.
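That missing incentive can be made visible with a simple conditional frequency. The sketch below uses a hypothetical toy training set in which each domain appears with exactly one template, mirroring the synthetic setup described earlier; templates are written as space-separated tag strings for brevity.

```python
from collections import Counter, defaultdict


def domain_given_template(examples):
    """Estimate P(domain | template) from (template, domain) training pairs."""
    counts = defaultdict(Counter)
    for template, domain in examples:
        counts[template][domain] += 1
    return {
        template: {d: n / sum(c.values()) for d, n in c.items()}
        for template, c in counts.items()
    }


# Hypothetical training set: one template per domain.
train = [
    ("WRB VBZ NNP VBN", "geography"),  # e.g. "Where is Paris located?"
    ("WRB VBZ NNP VBN", "geography"),
    ("WP VBD DT NN", "history"),       # e.g. "Who signed the treaty?"
    ("WP VBD DT NN", "history"),
]
print(domain_given_template(train))
# {'WRB VBZ NNP VBN': {'geography': 1.0}, 'WP VBD DT NN': {'history': 1.0}}
```

Every probability is 1.0, so on this data the template alone perfectly predicts the domain and nothing forces a learner to look at the words.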

Possible Directions for Future Research

The researchers suggest several paths forward. One promising direction involves augmenting training data with a wider variety of syntactic structures for each domain. This could reduce the likelihood that models associate a single grammatical pattern with a specific topic.
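A minimal sketch of why that augmentation helps, assuming a hypothetical pool of templates that every domain can use: once each domain is paired with several templates, no single template identifies a domain any more. The template strings and helper name are illustrative; the study only suggests this direction.

```python
from collections import Counter, defaultdict
from itertools import product


def max_domain_given_template(examples):
    """Largest P(domain | template) over all templates; 1.0 means a perfect shortcut."""
    counts = defaultdict(Counter)
    for template, domain in examples:
        counts[template][domain] += 1
    return max(max(c.values()) / sum(c.values()) for c in counts.values())


domains = ["geography", "history"]
templates = ["WRB VBZ NNP VBN", "WP VBD DT NN", "IN WDT NN VBZ NNP"]

# Before: each domain uses a single template.
narrow = [("WRB VBZ NNP VBN", "geography"), ("WP VBD DT NN", "history")]
# After (hypothetical augmentation): every domain is paired with every template.
augmented = list(product(templates, domains))

print(max_domain_given_template(narrow))     # 1.0
print(max_domain_given_template(augmented))  # 0.5
```

A real pipeline would presumably rewrite actual questions into varied structures rather than cross labels mechanically, but the statistic shows the intended effect: the template stops being a reliable proxy for the domain.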

Another area of interest is extending this analysis to reasoning-focused models, particularly those designed for multi-step problem solving. If syntax can mislead models at the question-answering level, it may also interfere with more complex chains of reasoning.

Experts in the field have praised the study for drawing attention to the often-overlooked role of linguistic structure in AI safety. As LLMs become more integrated into everyday tools, understanding and addressing these subtle failure modes will be increasingly important.

A Reminder About What Fluency Does Not Guarantee

Perhaps the most important takeaway from this research is a reminder that fluency is not the same as understanding. A model that produces smooth, confident language may still be relying on fragile shortcuts beneath the surface.

By shedding light on how grammar can quietly guide model behavior, this study adds an important piece to the broader conversation about trust, safety, and robustness in artificial intelligence.

Research paper: https://arxiv.org/abs/2509.21155