MIT Researchers Bring Tensor Programming Into the Continuous World

Tensor programming has been at the heart of scientific computing for decades, quietly shaping how engineers, scientists, and AI researchers work with data. Now, researchers at the Massachusetts Institute of Technology have taken a major step forward by extending tensor programming beyond rigid grids and into the continuous world, where data lives at real-number coordinates rather than neat integer points.

This new work, developed at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), introduces what the researchers call the Continuous Tensor Abstraction (CTA). In simple terms, CTA allows programmers to work with continuous data using the same concise, math-like tensor notation that already powers tools such as NumPy and PyTorch. The result is a system that dramatically reduces code complexity while remaining fast and compatible with modern hardware.

How Tensor Programming Got Stuck on Grids

To understand why this matters, it helps to look at how tensor programming evolved. When FORTRAN first appeared in 1957, it revolutionized computing by letting programmers express calculations using arrays rather than low-level machine instructions. Over time, these arrays evolved into tensors, which are now fundamental to machine learning, physics simulations, and large-scale numerical analysis.

However, traditional tensor systems make a critical assumption: all data lives on an integer grid. Values are stored at locations like (1, 2) or (5, 7), and this regular structure has let hardware and software developers optimize tensor operations extensively. GPUs, tensor cores, and compilers are all built around this idea.

The problem is that many real-world datasets do not naturally fit into this grid-based model.

The Challenge of Continuous Data

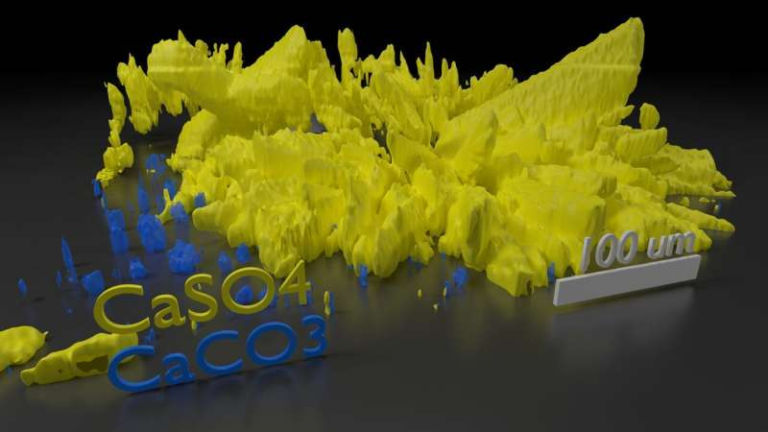

Data from 3D point clouds, geometric models, physical simulations, and scientific measurements often exists in continuous space. Coordinates can take on any real value, not just whole numbers. Examples include LiDAR scans, fluid dynamics simulations, geographic information systems, and even genomic data.

Until now, programmers working with these datasets have had to rely on specialized data structures and large amounts of custom code, often written outside mainstream tensor frameworks. This created a clear divide between the “tensor world” and the “non-tensor world,” with each developing its own tools, abstractions, and optimization strategies.

The MIT researchers set out to close this gap.

What the Continuous Tensor Abstraction Does Differently

The Continuous Tensor Abstraction allows tensor indices to be real numbers. Instead of writing something like A[3], programmers can write A[3.14]. This may sound simple, but it represents a fundamental shift in how tensors are defined and accessed.

At first glance, representing continuous data as an array seems impossible. Any stretch of the real line contains infinitely many points, while an array holds only finitely many values. The MIT team solved this problem using piecewise-constant tensors. Continuous space is divided into regions, and the tensor's value stays the same throughout each region, so only the region boundaries and one value per region need to be stored. This preserves the essential structure of the data while keeping it finite and manageable for hardware.

An intuitive way to picture this is a collage made from colored rectangles. Even though the original image is continuous, breaking it into carefully chosen blocks still captures its important structure in a form computers can work with efficiently.
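To make the idea concrete, here is a minimal one-dimensional sketch in Python of a piecewise-constant tensor that accepts a real-valued index such as A[3.14]. It is the simplest version of the collage, using intervals instead of rectangles. The class name `PiecewiseConstant1D`, the half-open regions, and the zero value outside all regions are assumptions made for illustration. This is not the CTA implementation or its API.

```python
import bisect


class PiecewiseConstant1D:
    """Hypothetical 1D tensor indexed by real numbers.

    Region i covers the half-open interval [breaks[i], breaks[i + 1])
    and holds values[i]. Outside every region the tensor is zero, an
    assumption made for this sketch.
    """

    def __init__(self, breaks, values):
        if len(breaks) != len(values) + 1:
            raise ValueError("need exactly one more breakpoint than values")
        if any(a >= b for a, b in zip(breaks, breaks[1:])):
            raise ValueError("breakpoints must be strictly increasing")
        self.breaks = list(breaks)
        self.values = list(values)

    def __getitem__(self, x):
        # Binary search for the last breakpoint <= x; that is the region's left edge.
        i = bisect.bisect_right(self.breaks, x) - 1
        if 0 <= i < len(self.values):
            return self.values[i]
        return 0.0


A = PiecewiseConstant1D(breaks=[0.0, 1.0, 2.5, 4.0], values=[5.0, 7.0, 2.0])
print(A[3.14])  # 2.0
print(A[0.5])   # 5.0
print(A[10.0])  # 0.0
```

Four boundaries and three values are enough to answer a query at any of the infinitely many real coordinates in [0, 4). Each lookup costs a binary search over the boundaries.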

Introducing Continuous Einsums

One of the most powerful aspects of the new framework is the introduction of continuous Einsums. Traditional Einstein summation notation, or Einsum, is already widely used in tensor programming to express complex operations concisely.
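For reference, this is discrete Einsum notation as it already exists in NumPy's `np.einsum`. A subscript string names each operand's indices, and any index that appears in the inputs but not in the output is summed over. This is ordinary NumPy, shown only to illustrate the notation that CTA generalizes.

```python
import numpy as np

A = np.arange(6).reshape(2, 3)   # shape (2, 3)
B = np.arange(12).reshape(3, 4)  # shape (3, 4)

# "ij,jk->ik": the shared index j is summed over, which is a matrix product.
C = np.einsum("ij,jk->ik", A, B)
assert np.array_equal(C, A @ B)

# "ii->": sum the diagonal entries, which is the trace.
M = np.arange(9).reshape(3, 3)
print(np.einsum("ii->", M))  # 12
```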

Continuous Einsums generalize this idea to continuous domains. Programmers can now describe computations over real-valued spaces using the same compact mathematical notation they already know. According to the researchers, continuous Einsums behave intuitively like their discrete counterparts, making them easier to learn and adopt.
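Here is one way to picture the continuous version. Assume that summing over a continuous index means integrating over it, which is the natural limit of a discrete sum. Then the continuous analogue of the dot product `np.einsum("i,i->", f, g)` is the integral of f(x) * g(x). For piecewise-constant operands, that integral can be computed exactly by visiting only the region boundaries. The plain-Python sketch below is a hypothetical illustration of that idea under this assumption. The names `value_at` and `continuous_dot` are invented here and are not part of CTA.

```python
import bisect


def value_at(breaks, values, x):
    """Value of a piecewise-constant function at real coordinate x (0 outside)."""
    i = bisect.bisect_right(breaks, x) - 1
    return values[i] if 0 <= i < len(values) else 0.0


def continuous_dot(f, g):
    """Exact integral of f(x) * g(x) over the real line.

    f and g are (breaks, values) pairs for piecewise-constant functions
    that are zero outside [breaks[0], breaks[-1]).
    """
    # Merge both sets of boundaries: between neighbors the product is constant.
    points = sorted(set(f[0]) | set(g[0]))
    total = 0.0
    for left, right in zip(points, points[1:]):
        # Both functions are constant on [left, right), so sample the left edge
        # and multiply by the interval width.
        total += value_at(*f, left) * value_at(*g, left) * (right - left)
    return total


f = ([0.0, 1.0, 3.0], [2.0, 1.0])  # 2 on [0, 1), 1 on [1, 3)
g = ([0.5, 2.0], [4.0])            # 4 on [0.5, 2)
print(continuous_dot(f, g))        # 2*4*0.5 + 1*4*1.0 = 8.0
```

The loop runs once per region boundary, not once per point. This is why piecewise-constant storage makes a reduction over a continuous index finite.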

This design choice means scientists and engineers do not need to abandon familiar programming patterns to work with continuous data.

Massive Reductions in Code Size

One of the most striking outcomes of CTA is how much it reduces the amount of code needed to express complex algorithms.

In several case studies, tasks that previously required thousands of lines of specialized code were rewritten using just a few dozen lines of tensor expressions. In some examples, programs with around 2,000 lines of code were condensed into a single line of continuous Einsum notation.

This is not just a cosmetic improvement. Shorter, clearer code is easier to debug, maintain, and adapt to new problems.

Performance That Competes With Specialized Tools

Concise code is only useful if it runs efficiently, and the researchers made performance a central focus of their evaluation.

In geospatial search tasks, such as finding all points within a square or circular region (similar to what mapping tools do), CTA programs were 62 times shorter than code written with comparable Python tools. For circular, or radius-based, searches, CTA was also about nine times faster.
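The queries themselves are simple to state. For comparison, here is a brute-force NumPy sketch of the two searches described above: an axis-aligned box and a circle. It is not CTA code and makes no claim about the tools used in the benchmark. In continuous-tensor terms, each query region can be seen as a piecewise-constant indicator that is 1 inside the region and 0 outside, and a point is returned when that indicator is 1 at its coordinates. The function names are hypothetical.

```python
import numpy as np


def box_query(points, lo, hi):
    """Indices of points with lo <= p < hi in every coordinate (square search)."""
    inside = np.all((points >= lo) & (points < hi), axis=1)
    return np.nonzero(inside)[0]


def radius_query(points, center, r):
    """Indices of points within distance r of center (circular search)."""
    # Compare squared distances to avoid one square root per point.
    d2 = np.sum((points - center) ** 2, axis=1)
    return np.nonzero(d2 <= r * r)[0]


pts = np.array([[0.0, 0.0], [1.0, 1.0], [2.5, 0.5], [3.0, 3.0]])
print(box_query(pts, lo=np.array([0.5, 0.0]), hi=np.array([3.0, 2.0])))  # [1 2]
print(radius_query(pts, center=np.array([0.0, 0.0]), r=1.5))             # [0 1]
```

Each query scans every point. Specialized geospatial libraries avoid that with spatial indexes, which is part of what makes matching their speed nontrivial.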

In 3D point cloud processing, commonly used in robotics and autonomous systems, a machine learning algorithm known as Kernel Point Convolution (KPConv) normally requires more than 2,300 lines of code. With CTA, the same task was implemented in just 23 lines, making the code over 100 times more concise.
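To show what is being expressed, here is a compact NumPy sketch of rigid KPConv as it is usually formulated. Each input point within a radius of the query contributes its features. Each contribution is weighted by a linear correlation max(0, 1 - ||y - x̃_k|| / σ) with each kernel point x̃_k and multiplied by a per-kernel-point weight matrix W_k. The sketch uses brute-force neighbor search and omits the deformable variant and all training machinery. It is not the CTA program or the 2,300-line implementation from the study, and the function signature is hypothetical.

```python
import numpy as np


def kpconv(query, points, feats, kernel_pts, weights, radius, sigma):
    """Rigid Kernel Point Convolution with brute-force neighbor search.

    query:      (Q, 3) output locations x
    points:     (N, 3) input point locations x_i
    feats:      (N, C_in) input features f_i
    kernel_pts: (K, 3) kernel point offsets x~_k
    weights:    (K, C_in, C_out) one weight matrix W_k per kernel point
    """
    # Offsets y = x_i - x for every (query, point) pair: shape (Q, N, 3).
    y = points[None, :, :] - query[:, None, :]
    # Only points within `radius` of the query are neighbors: shape (Q, N).
    in_ball = np.linalg.norm(y, axis=-1) <= radius
    # Distance from each offset to each kernel point: shape (Q, N, K).
    dist = np.linalg.norm(y[:, :, None, :] - kernel_pts[None, None, :, :], axis=-1)
    # Linear correlation max(0, 1 - dist / sigma), zeroed outside the ball.
    h = np.maximum(0.0, 1.0 - dist / sigma) * in_ball[:, :, None]
    # out[q, o] = sum over n, k, c of h[q, n, k] * feats[n, c] * weights[k, c, o]
    return np.einsum("qnk,nc,kco->qo", h, feats, weights)


rng = np.random.default_rng(0)
pts = rng.uniform(-1.0, 1.0, size=(50, 3))
feats = rng.normal(size=(50, 4))
kernel = rng.uniform(-0.5, 0.5, size=(15, 3))
W = rng.normal(size=(15, 4, 8))
out = kpconv(pts[:5], pts, feats, kernel, W, radius=0.6, sigma=0.3)
print(out.shape)  # (5, 8)
```

The final `np.einsum` call does the real work. Once the correlation tensor `h` is built, the convolution is a single contraction over neighbors, kernel points, and input channels. This hints at why an Einsum-based formulation can be so short.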

The framework was also tested on genomic data analysis, where it searched for features in specific chromosome regions. Here, CTA produced code that was 18 times shorter and slightly faster than existing approaches.
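A chromosome-region search of this kind amounts to an interval-overlap query. The minimal NumPy sketch here assumes features are stored as half-open [start, end) intervals on a single chromosome. The coordinates are made up, and the function name `overlapping` is hypothetical. It scans every feature, whereas dedicated genomics tools typically rely on sorted or indexed interval structures.

```python
import numpy as np

# Made-up features on one chromosome, as half-open [start, end) intervals.
starts = np.array([100, 250, 400, 900])
ends = np.array([200, 450, 600, 1000])


def overlapping(starts, ends, q_start, q_end):
    """Indices of intervals that overlap the query region [q_start, q_end)."""
    # Two half-open intervals overlap when each one starts before the other ends.
    return np.nonzero((starts < q_end) & (ends > q_start))[0]


print(overlapping(starts, ends, 300, 500))  # [1 2]
```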

In another test involving neural radiance fields (NeRFs), which are used to reconstruct 3D scenes from 2D images, CTA ran nearly twice as fast as a comparable PyTorch-based solution, while using roughly 70 fewer lines of code.
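NeRF rendering is a natural fit for piecewise-constant data. The standard NeRF renderer splits each camera ray into segments and treats density and color as constant within each segment. That assumption turns the rendering integral into the weighted sum sketched below in NumPy. This is the widely used NeRF quadrature rule written from scratch, not the CTA or PyTorch code from the benchmark, and the function name `render_ray` is hypothetical.

```python
import numpy as np


def render_ray(sigma, color, t):
    """Composite the color seen along one ray, NeRF-style.

    sigma: (S,) density on each segment [t[i], t[i + 1])
    color: (S, 3) RGB color on each segment
    t:     (S + 1,) segment boundaries along the ray
    Density and color are assumed constant on each segment, which makes
    this sum the exact value of the rendering integral.
    """
    delta = np.diff(t)                 # segment lengths
    tau = sigma * delta                # optical depth of each segment
    # Transmittance T_i = exp(-sum_{j<i} tau_j): light that survives to segment i.
    trans = np.exp(-np.concatenate([[0.0], np.cumsum(tau)[:-1]]))
    alpha = 1.0 - np.exp(-tau)         # fraction of light absorbed in segment i
    weights = trans * alpha
    return np.einsum("s,sc->c", weights, color)


# A transparent red segment followed by an opaque green one: the ray sees green.
print(render_ray(np.array([0.0, 1e9]),
                 np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]),
                 np.array([0.0, 1.0, 2.0])))  # [0. 1. 0.]
```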

Why This Matters for Science and AI

The significance of this work goes beyond convenience. By extending tensor programming into continuous spaces, CTA acts as a bridge between two previously separate programming worlds.

Researchers can now express geometric algorithms, physical simulations, and machine learning models within a single, unified tensor framework. This opens the door to applying decades of tensor compiler optimizations to problems that were previously outside their reach.

It also raises intriguing possibilities for hardware acceleration. The researchers suggest that specialized components, such as ray-tracing cores, might one day be repurposed to accelerate continuous tensor programs.

A Broader Perspective on Continuous Computation

Continuous data is everywhere in science and engineering. From modeling airflow over aircraft wings to reconstructing 3D environments for robots, many of the hardest problems involve real-valued spaces.

By making continuous data a first-class citizen in tensor programming, CTA encourages researchers to rethink how these problems are expressed and optimized. Instead of crafting custom solutions for each domain, developers can rely on a shared mathematical language with strong compiler support.

Looking ahead, the team plans to explore even more advanced representations, including regions where tensor values are defined by variables rather than constants. This could further expand CTA’s usefulness in deep learning, computer graphics, and scientific visualization.

The Bigger Picture

For decades, tensor programming has been synonymous with grids, indices, and discrete structures. This research challenges that assumption and shows that tensors can naturally extend into continuous, infinite spaces without sacrificing performance or clarity.

By combining expressive mathematics, practical performance, and developer-friendly design, the Continuous Tensor Abstraction represents a meaningful step forward for both scientific computing and AI research.

As continuous data becomes increasingly important across disciplines, tools like CTA may well redefine how future systems are built and optimized.

Research paper:

https://doi.org/10.1145/3763146