MIT Researchers Build a Speech-to-Reality System That Turns Spoken Commands Into Real Objects Using AI and Robotics

Researchers at MIT have taken a major step toward a future where physical objects can be created almost as easily as asking for them out loud. A new speech-to-reality system developed by the team lets a person describe an object in natural speech; the system then designs the object, and a robotic arm builds it in the real world in as little as five minutes.

The project brings together multiple rapidly advancing technologies, including natural language processing, 3D generative AI, and robotic assembly, into a single workflow. The result is a system where users can say something as simple as “I want a stool,” and then watch as a robot assembles that stool from modular components right in front of them.

How the Speech-to-Reality System Works

At its core, the system is designed to translate spoken language directly into physical fabrication. It begins with speech recognition software that converts the user’s voice into text. This text input is then interpreted by a large language model, which extracts the intent and key characteristics of the requested object.
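The article does not say how the language model's output is passed to the design stage. One plausible hand-off, sketched below, is to prompt the model to reply with a small JSON object and validate it before any geometry is generated. The `ObjectSpec` class, the `parse_llm_reply` function, and the `object_type` and `attributes` fields are all hypothetical, not the team's actual schema.

```python
import json
from dataclasses import dataclass, field


@dataclass
class ObjectSpec:
    """Hypothetical structured request handed from the language model to the design stage."""
    object_type: str
    attributes: dict = field(default_factory=dict)


def parse_llm_reply(reply: str) -> ObjectSpec:
    """Parse the JSON reply that an (assumed) prompt asks the language model to return.

    Raises ValueError if the reply is not a JSON object or names no object type.
    """
    try:
        data = json.loads(reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("model reply must be a JSON object")
    object_type = str(data.get("object_type", "")).strip().lower()
    if not object_type:
        raise ValueError("model reply has no 'object_type'")
    attributes = data.get("attributes") or {}
    if not isinstance(attributes, dict):
        raise ValueError("'attributes' must be a JSON object")
    return ObjectSpec(object_type, attributes)


# After speech recognition, "I want a stool" might come back from the model as:
spec = parse_llm_reply('{"object_type": "Stool", "attributes": {"legs": 4}}')
print(spec)  # ObjectSpec(object_type='stool', attributes={'legs': 4})
```

Validating the reply up front means a malformed or ambiguous answer from the model fails with a clear error instead of producing a nonsensical design later in the pipeline.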

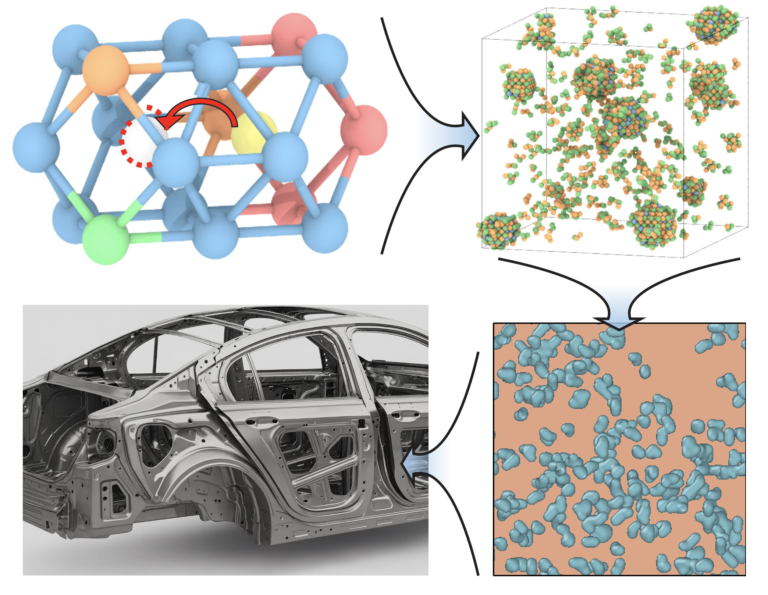

Once the system understands what the user wants, the next stage involves 3D generative AI. The AI produces a digital 3D mesh representing the object’s shape and structure. This mesh is not meant for visual display alone — it becomes the blueprint for physical construction.

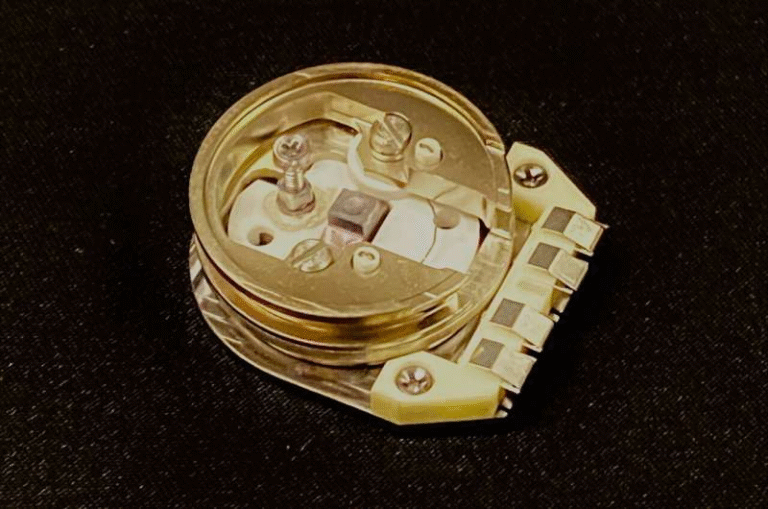

After generating the 3D model, the system applies a voxelization algorithm, which breaks the mesh down into discrete, block-like components. These components are designed to be physically assembled by a robot, rather than manufactured as a single continuous piece.
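To illustrate what voxelization produces, the sketch below samples an occupancy test at the center of every cell in a regular grid and keeps the cells that fall inside the shape. As a simplifying assumption, the AI-generated mesh is replaced by a union of axis-aligned boxes describing a made-up stool; a real pipeline would test points against the mesh itself. The function names, dimensions, and block size (`pitch`) are hypothetical.

```python
from typing import Callable

Point = tuple[float, float, float]
Box = tuple[Point, Point]          # (min corner, max corner), half-open
Voxel = tuple[int, int, int]       # integer grid cell (i, j, k), k pointing up


def union_of_boxes(boxes: list[Box]) -> Callable[[float, float, float], bool]:
    """Occupancy test for a union of axis-aligned boxes, standing in for a mesh."""
    def inside(x: float, y: float, z: float) -> bool:
        return any(lo[0] <= x < hi[0] and lo[1] <= y < hi[1] and lo[2] <= z < hi[2]
                   for lo, hi in boxes)
    return inside


def voxelize(inside: Callable[[float, float, float], bool],
             size: Voxel, pitch: float) -> set[Voxel]:
    """Keep every grid cell whose center point lies inside the shape."""
    nx, ny, nz = size
    cells = set()
    for i in range(nx):
        for j in range(ny):
            for k in range(nz):
                # Sample at the cell center; `pitch` is the edge length of one block.
                center = ((i + 0.5) * pitch, (j + 0.5) * pitch, (k + 0.5) * pitch)
                if inside(*center):
                    cells.add((i, j, k))
    return cells


# A made-up stool, in units of one block: a 3 x 3 seat on top of four 2-block legs.
STOOL = [
    ((0, 0, 2), (3, 3, 3)),                            # seat slab
    ((0, 0, 0), (1, 1, 2)), ((2, 0, 0), (3, 1, 2)),    # front legs
    ((0, 2, 0), (1, 3, 2)), ((2, 2, 0), (3, 3, 2)),    # back legs
]
stool = voxelize(union_of_boxes(STOOL), size=(3, 3, 3), pitch=1.0)
print(len(stool))  # 17: nine seat blocks plus two blocks in each of the four legs
```

Each resulting cell corresponds to one physical block, so the block count and the grid resolution are directly linked: a smaller `pitch` captures more detail but needs more parts and more robot moves.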

The system then performs geometric processing to adapt the AI-generated design to real-world constraints. This step ensures the object can actually be built by a robotic arm. Factors such as the number of parts, structural connectivity, overhang limitations, and stability are all accounted for at this stage.
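Some of these constraints can be expressed as simple checks on the voxel set. The sketch below assumes blocks sit on a grid with z pointing up and z = 0 on the table, that blocks join only through shared faces, that every block must connect to the table through other blocks, that overhang is measured as sideways steps from the nearest block with support directly beneath it, and that the part budget is a fixed inventory count. The function names and the `max_blocks` and `max_overhang` limits are hypothetical; the paper's actual geometric processing may work quite differently.

```python
from collections import deque

Voxel = tuple[int, int, int]   # grid cell (x, y, z), z pointing up, z == 0 on the table
FACE_STEPS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
SIDE_STEPS = [(1, 0), (-1, 0), (0, 1), (0, -1)]


def grounded(voxels: set[Voxel]) -> set[Voxel]:
    """Blocks linked to the table through a chain of face-sharing blocks (BFS)."""
    reached = {v for v in voxels if v[2] == 0}
    queue = deque(reached)
    while queue:
        x, y, z = queue.popleft()
        for dx, dy, dz in FACE_STEPS:
            n = (x + dx, y + dy, z + dz)
            if n in voxels and n not in reached:
                reached.add(n)
                queue.append(n)
    return reached


def overhang_distances(voxels: set[Voxel]) -> dict[Voxel, int]:
    """Sideways steps from each block to the nearest block with support directly below.

    Blocks missing from the result have no supported block anywhere in their layer.
    """
    dist = {v: 0 for v in voxels if v[2] == 0 or (v[0], v[1], v[2] - 1) in voxels}
    queue = deque(dist)
    while queue:
        x, y, z = queue.popleft()
        for dx, dy in SIDE_STEPS:
            n = (x + dx, y + dy, z)
            if n in voxels and n not in dist:
                dist[n] = dist[(x, y, z)] + 1
                queue.append(n)
    return dist


def check_buildable(voxels: set[Voxel], max_blocks: int, max_overhang: int) -> list[str]:
    """Return human-readable problems; an empty list means all checks passed."""
    problems = []
    if len(voxels) > max_blocks:
        problems.append(f"needs {len(voxels)} blocks, only {max_blocks} available")
    floating = voxels - grounded(voxels)
    if floating:
        problems.append(f"{len(floating)} block(s) not connected to the table")
    dist = overhang_distances(voxels)
    unsupported = [v for v in voxels if v not in dist]
    if unsupported:
        problems.append(f"{len(unsupported)} block(s) with no support in their layer")
    too_far = [v for v, d in dist.items() if d > max_overhang]
    if too_far:
        problems.append(f"{len(too_far)} block(s) overhang more than {max_overhang} step(s)")
    return problems


# The same made-up stool: four 2-block legs under a 3 x 3 seat.
legs = {(x, y, z) for x in (0, 2) for y in (0, 2) for z in (0, 1)}
seat = {(x, y, 2) for x in range(3) for y in range(3)}
stool = legs | seat
print(check_buildable(stool, max_blocks=20, max_overhang=2))  # []
print(check_buildable(stool, max_blocks=16, max_overhang=1))
# ['needs 17 blocks, only 16 available', '1 block(s) overhang more than 1 step(s)']
```

The center of the seat is two sideways steps from the nearest leg, so a tighter overhang limit flags it. A real system would then modify the design, for example by adding a central support, rather than just report the problem.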

Next, the software creates a feasible assembly sequence. This determines the exact order in which components must be put together so that the structure remains stable throughout construction. Along with this, the system generates automated path-planning instructions that guide the robotic arm’s movements as it picks up and places each component.
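Both steps can be sketched in a few lines under assumptions that are not taken from the paper. Blocks are placed from the table upward, and a block is placed only once it rests on the table or on a placed block, or touches a placed block in its own layer. The arm then follows a fixed rise, travel, descend, release, retreat pattern for each block, starting from a single hypothetical pick-up point. The function names and the `pitch` and `travel_z` values are illustrative; a real planner would also limit cantilever length and check for collisions between the arm and the partly built object.

```python
Voxel = tuple[int, int, int]   # grid cell (x, y, z), z pointing up, z == 0 on the table
Waypoint = tuple[str, tuple[float, float, float]]   # (action, Cartesian position)
SIDE_STEPS = [(1, 0), (-1, 0), (0, 1), (0, -1)]


def assembly_sequence(voxels: set[Voxel]) -> list[Voxel]:
    """Greedy bottom-up order in which every block is stable when it is placed.

    A block may be placed once it sits on the table, sits on a placed block,
    or touches a placed block in its own layer (a short cantilever).
    """
    remaining = sorted(voxels, key=lambda v: (v[2], v[0], v[1]))  # lowest layers first
    placed: set[Voxel] = set()
    order: list[Voxel] = []
    while remaining:
        for idx, (x, y, z) in enumerate(remaining):
            supported = z == 0 or (x, y, z - 1) in placed
            braced = any((x + dx, y + dy, z) in placed for dx, dy in SIDE_STEPS)
            if supported or braced:
                break
        else:
            raise ValueError(f"no stable placement for {len(remaining)} remaining block(s)")
        block = remaining.pop(idx)
        placed.add(block)
        order.append(block)
    return order


def pick_place_waypoints(block: Voxel, pick_xyz: tuple[float, float, float],
                         pitch: float, travel_z: float) -> list[Waypoint]:
    """One pick-and-place cycle: rise to a safe height, travel, descend, release, retreat."""
    px, py, pz = pick_xyz
    # Target is the center of the block's grid cell; `pitch` is the block edge length.
    tx, ty, tz = ((block[0] + 0.5) * pitch, (block[1] + 0.5) * pitch, (block[2] + 0.5) * pitch)
    return [
        ("move", (px, py, travel_z)),     # above the pick-up point
        ("grip", (px, py, pz)),           # descend and grab a block
        ("move", (px, py, travel_z)),     # lift clear
        ("move", (tx, ty, travel_z)),     # travel above the target cell
        ("release", (tx, ty, tz)),        # descend and let go
        ("move", (tx, ty, travel_z)),     # retreat upward
    ]


legs = {(x, y, z) for x in (0, 2) for y in (0, 2) for z in (0, 1)}
seat = {(x, y, 2) for x in range(3) for y in range(3)}
order = assembly_sequence(legs | seat)
print(order[:4])  # [(0, 0, 0), (0, 2, 0), (2, 0, 0), (2, 2, 0)]: the four feet go down first
path = [step for b in order
        for step in pick_place_waypoints(b, pick_xyz=(-0.1, 0.0, 0.0), pitch=0.05, travel_z=0.25)]
print(len(path))  # 102: six waypoints for each of the 17 blocks
```

Traveling at one fixed height above the finished object is the simplest way to avoid knocking over blocks that are already placed, at the cost of some extra motion on every cycle.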

Finally, the robotic arm assembles the object on a tabletop workspace using modular building blocks. Unlike 3D printing, which can take hours or even days, this discrete assembly approach allows objects to be built within minutes.

Objects Built So Far

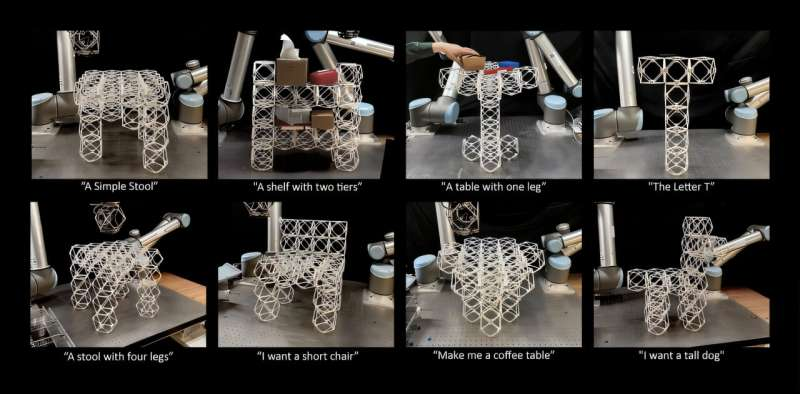

The researchers have already demonstrated the system by creating a variety of objects, including:

- Stools

- Chairs

- Shelving units

- Small tables

- Decorative objects, such as a stylized dog statue

These examples show that the system can handle both functional furniture and more expressive, artistic forms. Each object is assembled in real time in response to a spoken command, with no manual intervention once the process begins.

Who Built the System

The project was led by Alexander Htet Kyaw, a graduate student at MIT affiliated with both the Department of Architecture and the Department of Electrical Engineering and Computer Science. Kyaw is also a fellow at the Morningside Academy for Design.

The idea for the system originated during Kyaw’s time in MIT professor Neil Gershenfeld’s well-known course, How to Make Almost Anything. Kyaw later continued developing the project at the MIT Center for Bits and Atoms (CBA), which is directed by Gershenfeld.

Additional collaborators include Se Hwan Jeon, a graduate student in MIT’s Department of Mechanical Engineering, and Miana Smith, a researcher at the Center for Bits and Atoms.

Why Modular Assembly Matters

One of the most important design choices behind the speech-to-reality system is its use of modular components instead of traditional fabrication techniques. Each object is made from individual blocks that can be assembled, disassembled, and reused.

This approach has several advantages. First, it significantly reduces material waste, since components are not permanently fused together. Second, it allows objects to be reconfigured over time — for example, disassembling a sofa and reassembling the same parts into a bed when needs change.
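Because the blocks are interchangeable, turning one object into another is largely a bookkeeping problem. The sketch below assumes identical blocks on a shared grid and uses toy voxel sets rather than the team's actual designs. It works out how many blocks can stay in place, how many must be moved, and how many must be fetched from or returned to storage. `ReusePlan` and `plan_reuse` are hypothetical names.

```python
from dataclasses import dataclass

Voxel = tuple[int, int, int]   # grid cell occupied by one identical block


@dataclass
class ReusePlan:
    keep: set[Voxel]     # cells both designs use: those blocks stay put
    remove: set[Voxel]   # cells only the old design uses: blocks to pick up
    add: set[Voxel]      # cells only the new design uses: blocks to place

    @property
    def moved(self) -> int:
        """Removed blocks that can be placed straight into new cells."""
        return min(len(self.remove), len(self.add))

    @property
    def extra_needed(self) -> int:
        """Blocks to fetch from storage when the new design is larger."""
        return max(0, len(self.add) - len(self.remove))

    @property
    def left_over(self) -> int:
        """Blocks returned to storage when the new design is smaller."""
        return max(0, len(self.remove) - len(self.add))


def plan_reuse(old: set[Voxel], new: set[Voxel]) -> ReusePlan:
    """Compare two designs cell by cell using set intersection and differences."""
    return ReusePlan(keep=old & new, remove=old - new, add=new - old)


# Toy shapes: a sofa (2-deep seat plus a backrest) becomes a 3-deep flat bed.
sofa = {(x, y, 0) for x in range(4) for y in range(2)} | {(x, 1, 1) for x in range(4)}
bed = {(x, y, 0) for x in range(4) for y in range(3)}
plan = plan_reuse(sofa, bed)
print(len(plan.keep), plan.moved, plan.extra_needed, plan.left_over)  # 8 4 0 0
```

In this toy case every one of the 12 blocks is reused: eight stay where they are and the four backrest blocks move to extend the bed, so nothing is discarded.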

This modular philosophy aligns with broader efforts in sustainable manufacturing, where flexibility and reuse are becoming increasingly important.

Current Limitations and Planned Improvements

While the system is impressive, it is still a research prototype with some limitations. The objects built so far rely on magnetic connections between components, which limits how much weight the furniture can safely support.

The team plans to improve the system’s structural strength by replacing the magnets with more robust connection mechanisms. Stronger joints would let the robot build furniture that supports greater loads, expanding the system’s practical uses.

The researchers have also developed pipelines that convert voxel-based structures into assembly sequences for small, distributed mobile robots. This opens the door to scaling the system beyond a single tabletop robotic arm, potentially enabling construction at much larger scales.
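The article does not describe how that pipeline divides the work among robots. One simple way to picture it is to split a layer-by-layer build across several robots: each layer's blocks are dealt out round-robin, and no robot starts the next layer until the current one is finished. The sketch below is a hypothetical illustration of that scheduling idea, not the researchers' method. It ignores ordering constraints within a layer, robot travel time, and collisions between robots.

```python
from collections import defaultdict

Voxel = tuple[int, int, int]   # grid cell (x, y, z), z pointing up


def schedule_layers(voxels: set[Voxel], n_robots: int) -> list[list[list[Voxel]]]:
    """Return schedule[layer][robot], the blocks each robot places in each layer.

    Layers act as barriers: every robot finishes layer z before any robot
    starts layer z + 1. Blocks within a layer are dealt out round-robin.
    """
    if n_robots < 1:
        raise ValueError("need at least one robot")
    layers: dict[int, list[Voxel]] = defaultdict(list)
    for v in voxels:
        layers[v[2]].append(v)
    schedule = []
    for z in sorted(layers):
        tasks: list[list[Voxel]] = [[] for _ in range(n_robots)]
        for i, block in enumerate(sorted(layers[z])):
            tasks[i % n_robots].append(block)
        schedule.append(tasks)
    return schedule


def placement_rounds(schedule: list[list[list[Voxel]]]) -> int:
    """Rounds needed if every placement takes the same time: the busiest robot per layer."""
    return sum(max(len(tasks) for tasks in layer) for layer in schedule)


slab = {(x, y, z) for x in range(4) for y in range(4) for z in range(2)}   # 32 blocks
plan = schedule_layers(slab, n_robots=4)
print([len(tasks) for tasks in plan[0]])                                    # [4, 4, 4, 4]
print(placement_rounds(plan), placement_rounds(schedule_layers(slab, 1)))   # 8 32
```

Under the equal-time assumption, four robots finish this 32-block slab in 8 placement rounds instead of 32. That speed-up is what makes larger structures attractive for a team of small robots.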

Expanding Human-Robot Interaction

Kyaw has previously worked with gesture recognition and augmented reality in robotic fabrication. Building on that work, future versions of the speech-to-reality system are expected to combine speech with gestural control.

This would allow users not only to describe objects verbally, but also to refine designs through hand gestures or visual cues, creating a more intuitive and interactive fabrication process.

How This Fits Into the Bigger Picture

The speech-to-reality system reflects a growing trend in technology toward natural interfaces — systems that allow humans to interact with machines using everyday language and behavior instead of specialized tools or programming languages.

By removing the need for expertise in CAD software, robotics, or manufacturing processes, the system lowers the barrier to personal fabrication. In practical terms, it points to a future where individuals can design and build physical objects as easily as they generate digital content today.

The project also demonstrates how AI-generated designs can be directly connected to physical action, rather than remaining confined to screens and simulations.

Inspiration Behind the Vision

Kyaw has described his long-term vision as one inspired by science fiction, including the replicator devices seen in Star Trek and the friendly robots portrayed in Big Hero 6. The goal is not just faster fabrication, but greater access — enabling people everywhere to shape the physical world quickly, sustainably, and creatively.

The research team envisions a future where matter itself becomes programmable, allowing reality to be generated on demand through simple human input.

Academic Presentation and Publication

The team presented their work in a paper titled “Speech to Reality: On-Demand Production using Natural Language, 3D Generative AI, and Discrete Robotic Assembly” at the ACM Symposium on Computational Fabrication (SCF ’25). The event was held at MIT on November 21, 2025.

The paper formally documents the system’s architecture, algorithms, and experimental results, contributing to research in computational fabrication, human-robot interaction, and AI-driven manufacturing.

Research Paper:

https://dl.acm.org/doi/10.1145/3745778.3766670