MIT Researchers Develop a Smarter Way for Large Language Models to Think Through Hard Problems

Large language models are getting better at answering questions, writing code, and solving complex problems, but behind the scenes there is a growing challenge: these models often spend the same amount of computation on every task, whether the problem is trivial or extremely difficult. That inefficiency has caught the attention of researchers at MIT, who have developed a new approach that lets AI systems adapt how much "thinking" they do to the difficulty of the task.

This research, presented at NeurIPS 2025, introduces a method that lets large language models decide dynamically how much computation to spend while solving a problem. The result is a system that uses compute more efficiently without sacrificing accuracy, especially on complex reasoning tasks.

Why Fixed Reasoning Budgets Are a Problem for AI

Most modern reasoning techniques for large language models rely on what is known as inference-time scaling. In simple terms, this means the model generates multiple possible solution paths or reasoning steps and then evaluates which ones are most promising.
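To make the idea concrete, here is a minimal sketch of the simplest form of inference-time scaling, often called best-of-N sampling: draw N candidate solutions and keep the one a scorer rates highest. The `generate` and `score` callables are hypothetical stand-ins for a language model and a verifier; this is a generic illustration, not the MIT team's code.

```python
from typing import Callable, List


def best_of_n(
    generate: Callable[[str], str],
    score: Callable[[str, str], float],
    question: str,
    n: int,
) -> str:
    """Fixed-budget inference-time scaling: sample n solutions, keep the best.

    `generate` (a model sampler) and `score` (a verifier or reward model)
    are hypothetical callables supplied by the caller.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    # Spend the same budget n on every question, easy or hard.
    candidates: List[str] = [generate(question) for _ in range(n)]
    # Return the candidate the scorer rates most promising.
    return max(candidates, key=lambda c: score(question, c))


# Toy usage with deterministic stand-ins for the model and the scorer.
samples = iter(["5", "4", "3"])
answer = best_of_n(
    generate=lambda q: next(samples),
    score=lambda q, c: 1.0 if c == "4" else 0.0,
    question="What is 2 + 2?",
    n=3,
)
print(answer)  # 4
```

Note that `n` is chosen before the question is seen, which is exactly the fixed budget discussed next.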

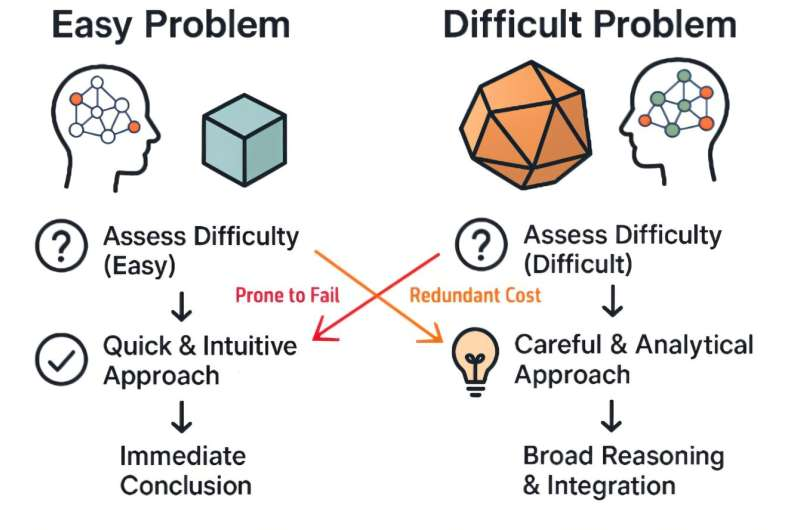

However, almost all existing approaches use a fixed computational budget. Whether the question is easy or extremely difficult, the model is allowed the same number of reasoning attempts or tokens. This leads to two major issues:

- Easy questions get overprocessed, wasting time, energy, and compute.

- Hard questions may not get enough reasoning steps, leading to incorrect or incomplete answers.
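A short calculation shows why one budget cannot fit every question. Assume, for illustration only, that each sampled solution is independently correct with probability p and that a correct answer is recognized whenever one is sampled. The smallest N with 1 - (1 - p)^N at or above a target then grows sharply as p falls. The success rates below are hypothetical.

```python
def samples_needed(p: float, target: float, max_n: int = 10_000) -> int:
    """Smallest N with 1 - (1 - p)**N >= target.

    Assumes each sampled solution is independently correct with
    probability p and that a correct one is recognized when present.
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    if not 0.0 < target < 1.0:
        raise ValueError("target must be in (0, 1)")
    n = 1
    while 1.0 - (1.0 - p) ** n < target:
        n += 1
        if n > max_n:
            raise ValueError("target not reachable within max_n samples")
    return n


def coverage(p: float, n: int) -> float:
    """Probability that at least one of n independent samples is correct."""
    return 1.0 - (1.0 - p) ** n


# Hypothetical per-sample success rates for an easy and a hard question.
easy, hard = 0.9, 0.05
print(samples_needed(easy, 0.95))  # 2
print(samples_needed(hard, 0.95))  # 59
# A fixed budget of 8 spends 6 more samples than needed on the easy question
# but reaches only about 34% coverage on the hard one.
print(round(coverage(hard, 8), 3))  # 0.337
```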

In practice, this inefficiency is costly. Inference, the computation performed every time a user asks a question, has become one of the biggest cost bottlenecks for large AI systems, so reducing unnecessary computation without sacrificing accuracy is now a top priority for AI researchers and companies alike.

Introducing Instance-Adaptive Scaling

To solve this problem, the MIT research team developed a framework called instance-adaptive scaling. Instead of deciding upfront how much computation to use, the system adjusts its reasoning effort step by step as it works through the problem.

This approach is inspired by how humans solve problems. When a task seems straightforward, we move quickly. When it becomes complicated, we slow down, reconsider our steps, and explore alternative solutions. The MIT method gives large language models a similar capability.

At the heart of this system is something called a Process Reward Model, or PRM.

What Is a Process Reward Model and Why It Matters

A Process Reward Model is a separate model that evaluates partial reasoning steps generated by the main language model. Rather than scoring only the final answer, it estimates how likely each intermediate solution is to eventually lead to the correct outcome.

In traditional inference-time scaling methods, PRMs are used to rank possible reasoning paths. The language model then focuses its computation on the most promising ones.
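One common way to use a PRM for ranking is a beam-search style loop: extend each partial solution by one step, score every extension, and keep only the top few. The sketch below shows a single round of that loop. `expand` and `prm` are hypothetical callables, and this is a generic illustration rather than the specific search procedure used in the paper.

```python
from typing import Callable, List, Tuple

Path = Tuple[str, ...]  # a partial solution as a sequence of reasoning steps


def prm_beam_step(
    paths: List[Path],
    expand: Callable[[Path], List[str]],
    prm: Callable[[Path], float],
    beam_width: int,
) -> List[Path]:
    """One round of PRM-guided search over partial reasoning paths.

    `expand` proposes candidate next steps (the language model's job) and
    `prm` scores a partial path; both are hypothetical callables.
    """
    # Extend every surviving path by each proposed next step.
    children = [path + (step,) for path in paths for step in expand(path)]
    # Rank the partial paths by the PRM's estimate and keep the top ones.
    return sorted(children, key=prm, reverse=True)[:beam_width]


# Toy PRM that scores a path by its most recent step.
step_scores = {"x": 0.2, "y": 0.9, "z": 0.5}
kept = prm_beam_step(
    paths=[("a",)],
    expand=lambda path: ["x", "y", "z"],
    prm=lambda path: step_scores[path[-1]],
    beam_width=2,
)
print(kept)  # [('a', 'y'), ('a', 'z')]
```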

But there is a major flaw: existing PRMs tend to be overconfident.

When a PRM overestimates the chance that a partial solution is correct, the system may prematurely stop exploring alternative paths. This leads to reduced accuracy, especially on difficult problems.
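A small worked example shows how overconfidence translates into too little exploration. Suppose, hypothetically, a PRM claims a partial solution will succeed 60% of the time when the true rate is 20%. If the budget is sized from the PRM's number (using the same simplified independence assumption as a plain best-of-N model), far too few paths get explored.

```python
def samples_needed(p: float, target: float, max_n: int = 10_000) -> int:
    """Smallest N with 1 - (1 - p)**N >= target, assuming independent samples."""
    if not 0.0 < p <= 1.0 or not 0.0 < target < 1.0:
        raise ValueError("need 0 < p <= 1 and 0 < target < 1")
    n = 1
    while 1.0 - (1.0 - p) ** n < target:
        n += 1
        if n > max_n:
            raise ValueError("target not reachable within max_n samples")
    return n


def coverage(p: float, n: int) -> float:
    """Probability that at least one of n independent samples is correct."""
    return 1.0 - (1.0 - p) ** n


claimed_p, true_p, target = 0.6, 0.2, 0.95  # hypothetical values

n_planned = samples_needed(claimed_p, target)  # budget sized from the PRM
print(n_planned)  # 4
print(round(coverage(true_p, n_planned), 3))  # 0.59, far below the 0.95 target
print(samples_needed(true_p, target))  # 14 samples would actually be needed
```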

Fixing Overconfidence Through Better Calibration

One of the most important contributions of this research is a new way to calibrate Process Reward Models.

Instead of producing a single probability score for a reasoning step, the calibrated PRM generates a range of probability estimates. This allows the system to better understand its own uncertainty. In other words, the model becomes more aware of what it does not know.
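The article does not spell out here how the range is produced. One standard technique for predicting a range instead of a single number is quantile regression: a model is trained to output, say, the 10th and 90th percentiles of the success probability, using the pinball (quantile) loss. The sketch below shows that loss and checks that the constant minimizing it recovers the requested quantile. The success rates are hypothetical, and this is an illustration of the general technique, not the paper's training setup.

```python
from typing import List, Sequence


def pinball_loss(y_true: float, y_pred: float, tau: float) -> float:
    """Quantile (pinball) loss at quantile level tau in (0, 1).

    Under-predictions are penalized with weight tau and over-predictions
    with weight (1 - tau), so the minimizer is the tau-quantile.
    """
    diff = y_true - y_pred
    return max(tau * diff, (tau - 1.0) * diff)


def best_constant(ys: Sequence[float], tau: float, grid: List[float]) -> float:
    """Constant prediction with the lowest mean pinball loss on ys."""
    return min(grid, key=lambda q: sum(pinball_loss(y, q, tau) for y in ys) / len(ys))


# Hypothetical empirical success rates for prefixes that a PRM scores alike.
ys = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
grid = [i / 100 for i in range(101)]
low = best_constant(ys, tau=0.1, grid=grid)   # lower end of the range
high = best_constant(ys, tau=0.9, grid=grid)  # upper end of the range
print(low, high)  # 0.1 0.9
```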

With better uncertainty estimates, the instance-adaptive scaling framework can make smarter decisions:

- If confidence is genuinely high, it reduces computation.

- If uncertainty remains, it allocates more reasoning steps and explores additional solution paths.
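The two rules above can be sketched as one budget function. The assumption here, for illustration, is that the budget is sized from the lower end of the calibrated range, so a wide range (high uncertainty) automatically buys more exploration. `adaptive_budget`, its defaults, and the independence model behind it are hypothetical choices, not the paper's exact rule.

```python
def adaptive_budget(p_low: float, target: float = 0.95, max_n: int = 64) -> int:
    """Number of trajectories to sample, chosen from a conservative estimate.

    p_low is the lower end of the calibrated PRM's range for the chance that
    a single trajectory succeeds; the rule assumes independent samples.
    """
    if p_low <= 0.0:
        return max_n  # no evidence of success: spend the full budget
    n = 1
    # Grow the budget until at least one success is likely enough, or cap out.
    while 1.0 - (1.0 - p_low) ** n < target and n < max_n:
        n += 1
    return n


print(adaptive_budget(0.97))  # 1: genuinely confident, stop early
print(adaptive_budget(0.6))   # 4
print(adaptive_budget(0.3))   # 9: same point estimate, wider uncertainty
print(adaptive_budget(0.01))  # 64: capped at max_n
```

Using the lower bound instead of the point estimate is the design choice that makes uncertainty matter: two partial solutions that both look 60% likely to succeed get different budgets if one of those estimates is much shakier.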

This calibration step turns out to be essential. Without it, adaptive reasoning would shrink computation too aggressively, harming accuracy.

How the System Adjusts Computation in Real Time

Unlike fixed-budget methods, the MIT framework does not make a single decision at the beginning of the reasoning process. Instead, adaptation happens continuously.

At every stage of reasoning:

- The PRM evaluates the problem and the partial answers.

- The system estimates how likely each path is to succeed.

- The system decides whether to keep exploring, narrow its focus, or stop.
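The three steps above can be sketched as a loop that re-chooses its search width at every reasoning step. Everything here is a simplified illustration under stated assumptions: `expand`, `prm_low` (a lower-bound success estimate), and `is_complete` are hypothetical callables, and the width rule reuses a simple independent-samples formula rather than the paper's exact procedure.

```python
from typing import Callable, List, Tuple

Path = Tuple[str, ...]


def budget_from(p_low: float, target: float, max_width: int) -> int:
    """Paths to keep so that at least one succeeds with prob >= target."""
    if p_low <= 0.0:
        return max_width
    n = 1
    while 1.0 - (1.0 - p_low) ** n < target and n < max_width:
        n += 1
    return n


def adaptive_search(
    expand: Callable[[Path], List[str]],
    prm_low: Callable[[Path], float],
    is_complete: Callable[[Path], bool],
    stop_at: float = 0.85,
    target: float = 0.95,
    max_width: int = 8,
    max_steps: int = 10,
) -> Tuple[Path, int]:
    """Step-wise search whose width is re-chosen at every reasoning step.

    Returns the best path found and the number of steps generated.
    """
    paths: List[Path] = [()]
    generated = 0
    for _ in range(max_steps):
        # Generate one more reasoning step for every surviving path.
        children = [p + (s,) for p in paths for s in expand(p)]
        if not children:
            break
        generated += len(children)
        # Score the partial paths with the (calibrated) PRM.
        ranked = sorted(children, key=prm_low, reverse=True)
        best = ranked[0]
        # Stop when the best path is confidently good or finished.
        if prm_low(best) >= stop_at or is_complete(best):
            return best, generated
        # Otherwise narrow or widen the search based on current confidence.
        paths = ranked[: budget_from(prm_low(best), target, max_width)]
    return paths[0], generated


# Toy problem: every "good" step raises the PRM's lower bound by 0.2.
best, cost = adaptive_search(
    expand=lambda path: ["good", "bad"],
    prm_low=lambda path: min(1.0, 0.5 + 0.2 * path.count("good")),
    is_complete=lambda path: len(path) >= 5,
)
print(best, cost)  # ('good', 'good') 6
```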

This dynamic adjustment allows the model to use as little as half the computation required by existing inference-time scaling methods while maintaining similar accuracy.

Strong Results on Mathematical Reasoning Benchmarks

The researchers evaluated their method on challenging mathematical reasoning tasks, including MATH500 and AIME24-25. These benchmarks are known for requiring multi-step reasoning and careful problem decomposition.

The results showed that:

- Instance-adaptive scaling significantly reduced computational cost.

- Accuracy remained comparable to or better than fixed-budget methods.

- The team's calibration method outperformed popular alternatives such as temperature scaling, isotonic regression, and histogram binning.
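For readers unfamiliar with these baselines, the sketch below implements one of them, histogram binning, together with expected calibration error (ECE), a common way to measure how far predicted probabilities drift from observed success rates. The article does not say which calibration metrics the paper reports, so ECE is an illustrative choice, and the overconfident PRM scores are hypothetical. In practice the binning table is fit on held-out data; here it is fit in-sample for brevity.

```python
from typing import List, Sequence


def _bin(p: float, n_bins: int) -> int:
    """Index of the equal-width bin on [0, 1] that contains p."""
    return min(int(p * n_bins), n_bins - 1)


def expected_calibration_error(
    probs: Sequence[float], labels: Sequence[int], n_bins: int = 10
) -> float:
    """ECE: bin-size-weighted gap between mean confidence and accuracy."""
    conf_sum = [0.0] * n_bins
    hit_sum = [0.0] * n_bins
    for p, y in zip(probs, labels):
        b = _bin(p, n_bins)
        conf_sum[b] += p
        hit_sum[b] += y
    # (n_b / n) * |acc_b - conf_b| simplifies to |hits_b - conf_b| / n.
    return sum(abs(h - c) for h, c in zip(hit_sum, conf_sum)) / len(probs)


def fit_histogram_binning(
    probs: Sequence[float], labels: Sequence[int], n_bins: int = 10
) -> List[float]:
    """Map each bin to the observed success rate of the examples in it."""
    hits = [0.0] * n_bins
    counts = [0] * n_bins
    for p, y in zip(probs, labels):
        b = _bin(p, n_bins)
        hits[b] += y
        counts[b] += 1
    # Empty bins fall back to their midpoint.
    return [hits[b] / counts[b] if counts[b] else (b + 0.5) / n_bins for b in range(n_bins)]


def apply_histogram_binning(probs: Sequence[float], table: List[float]) -> List[float]:
    """Replace each raw score with the success rate learned for its bin."""
    return [table[_bin(p, len(table))] for p in probs]


# Hypothetical overconfident PRM: always says 0.9, but only 3 of 10 paths succeed.
probs = [0.9] * 10
labels = [1, 0, 0, 0, 1, 0, 0, 0, 1, 0]
print(round(expected_calibration_error(probs, labels), 3))  # 0.6
table = fit_histogram_binning(probs, labels)
calibrated = apply_histogram_binning(probs, table)
print(round(expected_calibration_error(calibrated, labels), 3))  # 0.0
```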

Perhaps most interestingly, smaller language models using this method were able to perform as well as—or even better than—larger models on complex problems.

Why This Matters for Energy Use and AI Deployment

Reducing computation has consequences beyond performance metrics. Large-scale AI systems consume enormous amounts of energy, especially during inference when millions of users are interacting with models simultaneously.

By allowing models to:

- Use fewer tokens on easy tasks

- Spend more compute only where it is truly needed

this approach could significantly lower energy consumption. Cheaper, faster inference would also make advanced reasoning systems more practical for high-stakes and time-sensitive applications, such as scientific analysis, engineering design, or safety-critical decision-making.

Broader Implications for AI Agents and Future Systems

The researchers believe this technique can be applied far beyond math problems. Potential future applications include:

- Code generation, where reasoning depth varies widely between tasks

- AI agents, which must make sequential decisions over time

- Reinforcement learning, where uncertainty estimation is critical

- Fine-tuning and alignment, where understanding model confidence is essential

The underlying idea—helping AI systems know what they don’t know—is seen as a key step toward building agents that can adapt, improve, and operate safely in real-world environments.

A Step Toward More Human-Like Reasoning in AI

One of the most compelling aspects of this research is how closely it mirrors human problem-solving behavior. Humans naturally adjust effort based on difficulty, revisiting steps when unsure and stopping early when confident.

By embedding this behavior into large language models, the MIT team has taken an important step toward more flexible, efficient, and self-aware AI systems—systems that can reason deeply when needed, but stay light and fast when they can.

Research Paper:

Know What You Don’t Know: Uncertainty Calibration of Process Reward Models

https://arxiv.org/abs/2506.09338