MIT’s New Flexible Mapping Technique Helps Robots Navigate Unpredictable Environments with Speed and Accuracy

Robots navigating disaster zones face a major challenge: they must quickly build a map of their surroundings while figuring out their own location within that map. This process, known as simultaneous localization and mapping, or SLAM, becomes even harder in places like collapsed mines, unstable buildings, or cluttered office corridors, where lighting, structure, and camera orientation can be unpredictable. A team of MIT researchers has introduced an AI-driven mapping system that tackles these obstacles quickly and accurately, without needing finely calibrated cameras.

This new system, developed by MIT graduate student Dominic Maggio, postdoctoral researcher Hyungtae Lim, and Luca Carlone, an associate professor in MIT’s Department of Aeronautics and Astronautics, promises faster map construction, better alignment of visual information, and wider usability across real-world scenarios. Their research will be presented at the Conference on Neural Information Processing Systems, and the full findings are available on the arXiv preprint server.

What Problem the Researchers Wanted to Solve

Roboticists have been refining SLAM technology for decades, but it still struggles with several consistent problems:

- Traditional SLAM methods often fail in visually complex or challenging scenes.

- Many require carefully calibrated cameras, which is impractical in disaster-response situations.

- Newer machine-learning approaches can process only a limited number of images at once (around 60, even on a high-end GPU), which makes them impractical for robots that must map large areas quickly.

In real disasters, a robot may need to process thousands of images quickly. If it can’t, rescue workers lose valuable time.

How the New System Works

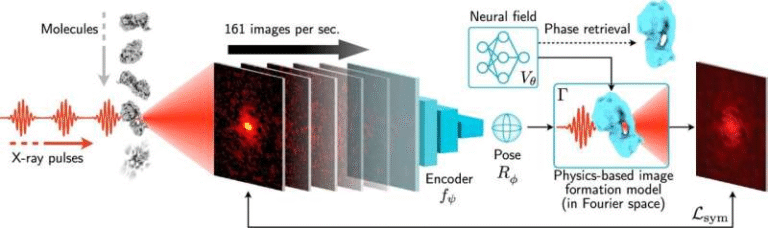

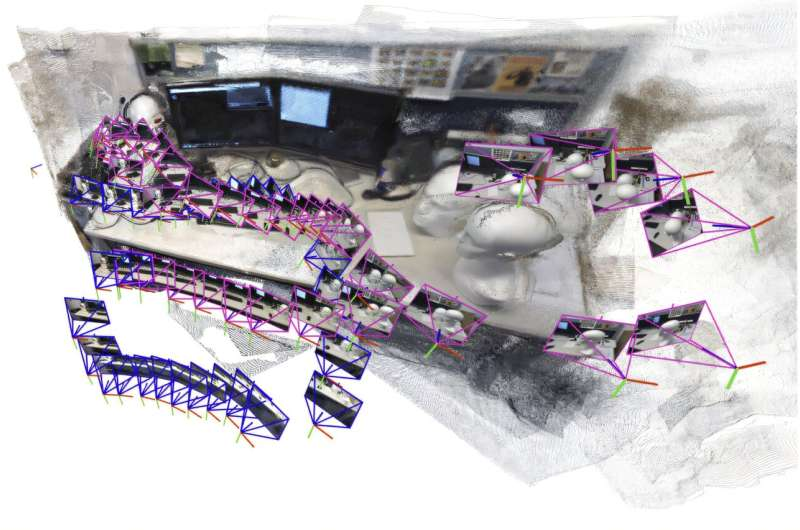

Instead of trying to build one huge map from all the images, the MIT team designed a method that breaks the environment into smaller submaps. Each submap is built from a batch of images small enough for the model to process at once, and the system then aligns and stitches these submaps together to produce a full 3D reconstruction of the environment.
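Here is a minimal sketch of the submap idea. It assumes frames arrive one at a time from a camera and groups them into consecutive chunks small enough for the model. Neighboring chunks overlap slightly, so adjacent submaps share content to align on. The function name, the 60-frame cap (borrowed from the roughly 60-image limit mentioned earlier), and the one-frame overlap are illustrative assumptions, not the paper's settings.

```python
from typing import Iterable, Iterator, List

def stream_submaps(frames: Iterable[str], max_frames: int = 60,
                   overlap: int = 1) -> Iterator[List[str]]:
    """Group incoming frames into submaps of at most `max_frames` frames.

    Hypothetical helper: consecutive submaps share `overlap` frames so that
    neighboring submaps have common content to align on.
    """
    if not 0 <= overlap < max_frames:
        raise ValueError("need 0 <= overlap < max_frames")
    chunk: List[str] = []
    new_frames = 0                      # frames not yet emitted in any submap
    for frame in frames:
        chunk.append(frame)
        new_frames += 1
        if len(chunk) == max_frames:
            yield chunk
            chunk = chunk[max_frames - overlap:]   # carry the overlap forward
            new_frames = 0
    if new_frames:                      # flush a final, shorter submap
        yield chunk

frames = [f"frame_{i:04d}.jpg" for i in range(200)]   # hypothetical file names
submaps = list(stream_submaps(frames))
print([len(s) for s in submaps])   # [60, 60, 60, 23]
```

Because the function is a generator, each submap can be handed off as soon as it fills rather than after the whole sequence has been recorded. That suits a robot that needs a map while it is still moving.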

This may sound simple, but when the researchers first tried it, they ran into an unexpected issue: the submaps produced by machine-learning models are often slightly deformed, with small geometric distortions. A wall might look bent, or a room might appear stretched. Traditional alignment methods only rotate and shift submaps, and no amount of rotating and shifting can straighten a bent wall or undo a stretch. As a result, aligning these imperfect submaps proved much harder than expected.
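The alignment problem can be seen in a few lines of NumPy. The sketch below uses the Kabsch algorithm to find the best rotation plus translation between a submap and a copy of it. If the copy was only rotated and shifted, the fit is essentially exact. If the copy was stretched by a hypothetical 10% along one axis, some error remains no matter which rotation and translation are chosen. The point cloud, the stretch factor, and the function name are made up for illustration.

```python
import numpy as np

def rigid_fit_rms(src: np.ndarray, dst: np.ndarray) -> float:
    """Fit dst ≈ R @ src + t (rotation R, translation t) by least squares
    using the Kabsch algorithm, and return the RMS residual of the fit."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)          # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))        # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    residuals = (src @ R.T + t) - dst
    return float(np.sqrt(np.mean(np.sum(residuals**2, axis=1))))

rng = np.random.default_rng(0)
submap = rng.uniform(-1.0, 1.0, size=(200, 3))   # hypothetical submap points

# Case 1: the same points, rotated 30 degrees about z and shifted.
a = np.deg2rad(30.0)
Rz = np.array([[np.cos(a), -np.sin(a), 0.0],
               [np.sin(a),  np.cos(a), 0.0],
               [0.0,        0.0,       1.0]])
moved = submap @ Rz.T + np.array([1.0, 2.0, 3.0])

# Case 2: the same points, stretched 10% along z (a mock "deformed" submap).
stretched = submap * np.array([1.0, 1.0, 1.1])

print(rigid_fit_rms(submap, moved))      # close to 0
print(rigid_fit_rms(submap, stretched))  # clearly above 0
```

Adding a single uniform scale factor, as similarity-based alignment does, would not remove the leftover error either, because the distortion differs from one axis to another.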

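The breakthrough came when Maggio revisited classical computer-vision research from the 1980s and 1990s. Those early papers showed that when a camera's calibration is unknown, the 3D geometry of a scene can be recovered only up to a projective transformation, a more general mapping that can warp shapes in exactly these ways, and they worked out the mathematics for handling it. By combining modern AI mapping with this classical geometry, the team developed a much more flexible alignment strategy.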

This improved technique can handle the small twists, tilts, stretches, and other distortions that appear in AI-generated submaps. As a result, the system can reliably align them into a unified, accurate 3D map—even in difficult scenes.
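The paper's title refers to SL(4), the group of 4×4 real matrices with determinant 1. Applied to 3D points in homogeneous coordinates, such a matrix is a projective transformation (a 3D homography) with 15 degrees of freedom. That is enough to express rotation, translation, scaling, shear, and perspective-style warping.

The sketch below estimates one such transform from matched points using the classical direct linear transform (DLT), then rescales it to determinant 1. It makes several simplifying assumptions:

- the correspondences between the two submaps are known and noise-free;
- the distortion is made up for illustration;
- the function names are hypothetical.

It shows the kind of transform involved, not the paper's actual method. According to its title, that method optimizes on the SL(4) manifold rather than using a one-shot linear solve.

```python
import numpy as np

def to_homogeneous(points: np.ndarray) -> np.ndarray:
    return np.hstack([points, np.ones((len(points), 1))])

def apply_homography(H: np.ndarray, points: np.ndarray) -> np.ndarray:
    """Map Nx3 points through a 4x4 projective transform."""
    mapped = to_homogeneous(points) @ H.T
    return mapped[:, :3] / mapped[:, 3:4]

def estimate_sl4(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """DLT estimate of H with dst ~ H @ src (homogeneous), scaled so det(H) = 1.
    Needs at least 5 correspondences in general position (3 equations each, 15 DOF)."""
    rows = []
    for X, x in zip(to_homogeneous(src), dst):
        for i in range(3):
            # x_i * (h4 . X) - (h_i . X) = 0, linear in the 16 entries of H
            row = np.zeros(16)
            row[4 * i:4 * i + 4] = -X
            row[12:16] = x[i] * X
            rows.append(row)
    _, _, Vt = np.linalg.svd(np.asarray(rows))
    H = Vt[-1].reshape(4, 4)                      # null vector -> 4x4 matrix
    det = np.linalg.det(H)
    if det <= 0:
        raise ValueError("orientation-reversing estimate; not in SL(4)")
    return H / det ** 0.25                        # det(cH) = c^4 det(H)

rng = np.random.default_rng(1)
submap_a = rng.uniform(-1.0, 1.0, size=(50, 3))
# Hypothetical distortion: shear, non-uniform stretch, shift and a mild projective term.
H_true = np.array([[1.00,  0.05, 0.00,  0.30],
                   [0.00,  0.95, 0.02, -0.10],
                   [0.01,  0.00, 1.10,  0.20],
                   [0.01, -0.02, 0.00,  1.00]])
submap_b = apply_homography(H_true, submap_a)

H_est = estimate_sl4(submap_a, submap_b)
print(np.linalg.det(H_est))                                         # ~1.0
print(np.abs(apply_homography(H_est, submap_a) - submap_b).max())   # ~0
```

With real, noisy submaps, the coordinates would normally be normalized first, and the estimate refined with a robust nonlinear optimization. The one-shot solve above only shows that a single 15-degree-of-freedom transform can absorb shear and stretching that a rotation plus translation cannot.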

What Makes This New Approach Stand Out

Here are the most significant improvements:

- Faster processing: The system can reconstruct a 3D scene in seconds.

- No camera calibration required: It works with standard cameras, even uncalibrated ones.

- High accuracy: The reconstructed scenes had an average error of under 5 centimeters.

- Real-time performance: Robots can estimate their position as the system builds the map.

- Simpler implementation: There is no need for complex tuning or specialized sensors.
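The article does not say exactly how the average error was measured. One common definition is the mean Euclidean distance between matched reconstructed and ground-truth points, computed after the reconstruction has been aligned to the ground truth. Here is a minimal sketch of that metric, assuming known correspondences and coordinates in meters. The function name and the sample points are hypothetical.

```python
import numpy as np

def mean_error_cm(estimated_m: np.ndarray, ground_truth_m: np.ndarray) -> float:
    """Mean point-to-point Euclidean error, converted from meters to centimeters."""
    distances = np.linalg.norm(estimated_m - ground_truth_m, axis=1)
    return 100.0 * float(distances.mean())

gt = np.array([[0.0, 0.0, 0.0], [1.0, 2.0, 0.5], [3.0, -1.0, 2.0]])
est = gt + np.array([0.03, 0.0, 0.04])   # every point off by 3 cm in x and 4 cm in z
print(mean_error_cm(est, gt))             # about 5.0: each point is 5 cm from its match
```

Many SLAM benchmarks report a root-mean-square version instead. It weights large errors more heavily, so the two numbers can differ on the same reconstruction.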

The system was tested on scenes such as a crowded office corridor, and it handled long sequences effectively. In one demonstration, the model reconstructed a 55-meter loop around an office hallway using 22 submaps. It also produced detailed reconstructions of spaces like the interior of the MIT Chapel, using only short video clips captured on an ordinary smartphone.
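Stitching submaps into one map means expressing every submap in a common coordinate frame. The article does not describe how the office loop was assembled. A simple scheme, assumed here, is to register each submap to its predecessor and chain the relative 4×4 transforms.

The sketch lays out a hypothetical closed loop of 22 identical steps of 2.5 m (22 × 2.5 m = 55 m), each followed by a turn of 360°/22. Chaining all 22 steps should therefore return exactly to the start. A second run adds a small, made-up heading error to each step to show how errors compound around a loop.

The helper names are hypothetical. The sketch also omits the loop-closure constraints and global optimization that a full SLAM system would add.

```python
import numpy as np

def step_transform(length_m: float, yaw_rad: float) -> np.ndarray:
    """Hypothetical relative transform: the next submap frame sits `length_m`
    ahead along x and is rotated by `yaw_rad` about z."""
    c, s = np.cos(yaw_rad), np.sin(yaw_rad)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = [length_m, 0.0, 0.0]
    return T

def chain_to_global(relative: list[np.ndarray]) -> list[np.ndarray]:
    """relative[k] maps submap k+1 coordinates into submap k's frame.
    Returns poses mapping each submap into submap 0's frame (poses[0] = identity)."""
    poses = [np.eye(4)]
    for T in relative:
        poses.append(poses[-1] @ T)
    return poses

n_submaps, step_m = 22, 2.5
turn = 2 * np.pi / n_submaps

# 22 steps: submap 0 -> 1 -> ... -> 21 -> back to 0
exact = chain_to_global([step_transform(step_m, turn)] * n_submaps)
print(np.linalg.norm(exact[-1][:3, 3]))    # ~0: the loop closes

drift = chain_to_global([step_transform(step_m, turn + np.deg2rad(0.5))] * n_submaps)
print(np.linalg.norm(drift[-1][:3, 3]))    # gap between start and end, in meters
```

With a 0.5° heading error per step, the end of the chain lands roughly 1.6 m from where it started. This illustrates why small per-submap errors matter on long loops.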

Why This Matters for Search-and-Rescue Robots

In emergency-response robotics, every second matters. A robot exploring a partially collapsed structure must:

- navigate debris,

- rapidly build a usable map,

- locate possible survivors,

- and transmit clear information to human responders.

If a mapping system is slow, brittle, or dependent on perfect conditions, it becomes a liability instead of an asset.

The MIT technique addresses several key problems:

- Scalability – robots can explore large areas without overwhelming the system.

- Robustness – it works despite distortions introduced by rough camera movement, poor lighting, or challenging visual surfaces.

- Speed – real-time processing means robots can move continuously.

- Accessibility – no special hardware is needed, making the system practical for field deployment.

The approach is promising for use in autonomous drones, underground exploration robots, and mobile ground robots operating in unstable environments.

Other Applications Beyond Rescue Work

Although disaster zones are a clear use case, the researchers highlight several additional areas where this mapping method could make a significant impact.

Extended Reality (XR) and Wearable Devices

Future AR and VR headsets must map environments in real time as users move. Traditional SLAM approaches struggle with complex indoor spaces or fast movements. The MIT method can help generate high-quality environmental maps quickly and could lead to more seamless mixed-reality experiences.

Industrial and Warehouse Robotics

Robots in warehouses often need to identify and move goods in spaces that change over time. A fast, adaptable mapping system allows these robots to reorient quickly and work efficiently, especially when shelves or objects shift between tasks.

Consumer Robotics

Home robots could use this technique for more reliable navigation, handling cluttered environments and unpredictable layouts.

How This Fits Into the Evolution of SLAM

The field of SLAM has gone through several major eras:

- Classical geometric SLAM (using features like edges and corners).

- Sensor-heavy SLAM using lidar or stereo-depth cameras.

- Machine-learning-based SLAM, which uses neural networks to interpret scenes directly from images.

- Now, a hybrid era that blends learning with classical computer vision—exactly the approach this MIT project uses.

The insight behind this latest work is straightforward but powerful: machine-learning models are excellent at interpreting visual content, while classical geometry provides rigorous tools for correcting distortions and enforcing consistency across views. By bridging these worlds, the researchers created a flexible system that is both fast and mathematically grounded.

What Comes Next for the Research Team

While the system already performs impressively, the researchers plan to strengthen it even further. Their future goals include:

- making the system more reliable in extremely complicated environments,

- integrating it directly into real robots,

- testing it in field conditions such as rubble piles, mines, or multi-floor structures, and

- refining the mathematical components to reduce rare alignment failures.

If successful, this technique could become a standard tool in robotic navigation.

Link to the Research Paper

VGGT-SLAM: Dense RGB SLAM Optimized on the SL(4) Manifold

https://arxiv.org/abs/2505.12549