Neuromorphic Computer Prototype Learns Patterns With Far Fewer Computations Than Traditional AI

Neuromorphic computing has been gaining attention as researchers search for ways to make computers learn more like the human brain—efficiently, adaptively, and without enormous energy consumption. A new prototype from a team at The University of Texas at Dallas takes a meaningful step in that direction. Their experimental system demonstrates that brain-inspired hardware can learn patterns and make predictions using dramatically fewer training computations than conventional AI. This kind of work could eventually reshape how devices—from smartphones to robots—process information and learn from experience.

The project is led by Dr. Joseph S. Friedman, an associate professor of electrical and computer engineering and head of the NeuroSpinCompute Laboratory. His team worked with researchers from Everspin Technologies Inc. and Texas Instruments, and the results were published in Communications Engineering. Their prototype showcases the potential of neuromorphic hardware built on magnetic tunnel junctions (MTJs) to support more energy-efficient machine learning.

Why Neuromorphic Computing Matters

Traditional AI relies heavily on massive datasets and computationally expensive training cycles. Graphics processing units (GPUs) and specialized accelerators can handle this workload, but at a steep energy cost: training a large modern AI model can cost hundreds of millions of dollars in compute resources and consume a correspondingly large amount of electricity.

Neuromorphic computing takes inspiration from the human brain, where neurons and synapses work together to process and store information in the same place. Instead of constantly moving information back and forth between separate memory and processing units, neuromorphic systems integrate the two. This can lead to significantly lower power consumption, faster adaptation, and the ability to perform learning at the device level.

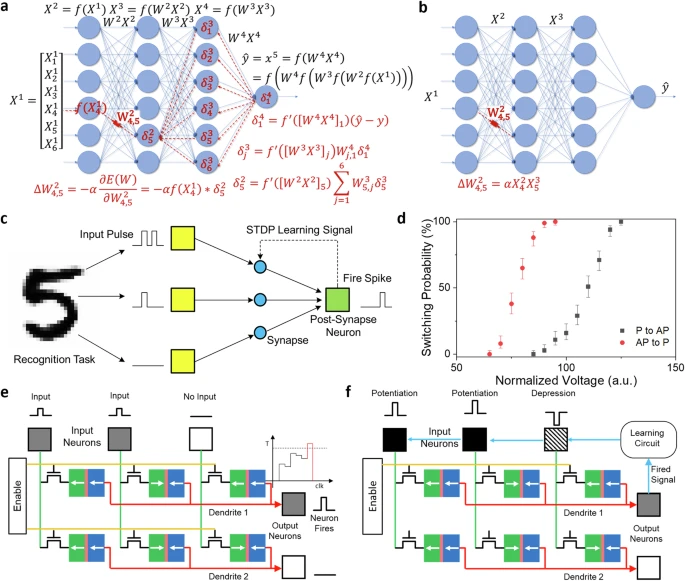

The prototype designed by Friedman’s team shows how this could be achieved using MTJ-based synapses. The system can strengthen or weaken its internal connections based on patterns it encounters—mimicking how biological synapses adjust during learning.

The Core Idea: Learning Based on Hebbian Principles

The device’s learning mechanism draws from a well-known principle proposed by neuropsychologist Dr. Donald Hebb. Often summarized as “neurons that fire together, wire together,” this rule describes how simultaneous activity between neurons strengthens their connection. Friedman’s team adapted this into hardware logic: when one artificial neuron triggers another, the synapse connecting them becomes more conductive.

This setup gives the prototype the ability to update itself based on experience. It doesn’t require external supervision or labels. Instead, it adapts organically as it receives data, similar to how a biological neural circuit might evolve when repeatedly exposed to a pattern.
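
To make the rule concrete, here is a minimal software sketch of a Hebbian update. It is not the team's hardware logic: the function name `hebbian_step`, the learning rate `lr`, and the cap `w_max` are assumptions chosen for illustration. A connection grows only when its input and output are active at the same time, and no labels are involved.

```python
def hebbian_step(weights, pre, post, lr=0.5, w_max=1.0):
    """Strengthen weights[i][j] when input i and output j are active together.

    lr and w_max are illustrative assumptions, not values from the paper.
    """
    return [
        [min(w_max, w + lr * pre[i] * post[j]) for j, w in enumerate(row)]
        for i, row in enumerate(weights)
    ]

weights = [[0.0, 0.0], [0.0, 0.0]]
# Input 0 and output 1 fire together twice; nothing else is co-active.
for _ in range(2):
    weights = hebbian_step(weights, pre=[1, 0], post=[0, 1])
print(weights)  # [[0.0, 1.0], [0.0, 0.0]]
```

Only the connection between the two co-active units changes, which is the "fire together, wire together" behavior in its simplest form.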

Why Magnetic Tunnel Junctions Are a Big Deal

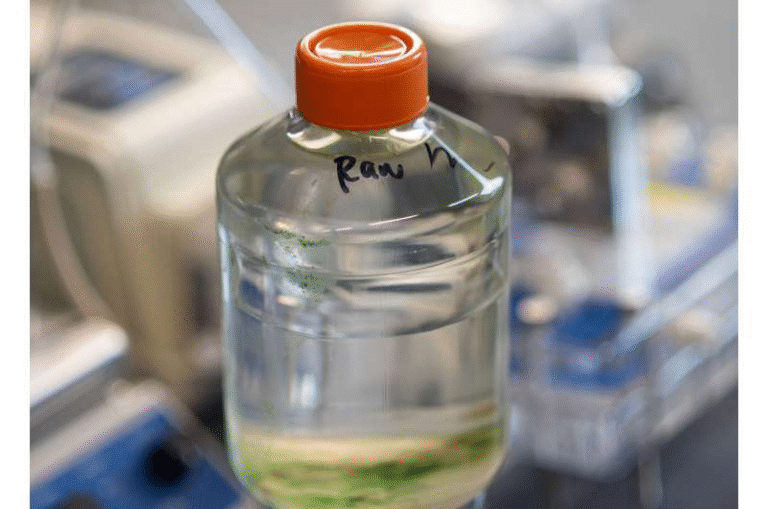

A major innovation in this project is the use of magnetic tunnel junctions. MTJs are nanoscale structures composed of two magnetic layers separated by an insulating barrier. Electrons pass—or “tunnel”—through the barrier more easily when the magnetic orientations of the two layers align, and less easily when they oppose each other. This produces two stable, binary states that can function as reliable synaptic weights.
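
The two-state behavior can be modeled in a few lines. The sketch below is an idealized model, not a description of the team's devices: the class name `MTJ`, the 5 kΩ aligned resistance, and the resistance ratio `tmr` are assumed values chosen only to show how alignment maps to a low-resistance (high-conductance) state.

```python
from dataclasses import dataclass

@dataclass
class MTJ:
    """Two-state model of a magnetic tunnel junction (illustrative values only)."""
    r_parallel_ohm: float = 5_000.0  # assumed resistance with layers aligned
    tmr: float = 1.0                 # assumed ratio (R_AP - R_P) / R_P
    parallel: bool = False           # False = antiparallel (high resistance)

    def resistance_ohm(self) -> float:
        if self.parallel:
            return self.r_parallel_ohm
        return self.r_parallel_ohm * (1.0 + self.tmr)

    def conductance_siemens(self) -> float:
        return 1.0 / self.resistance_ohm()

aligned = MTJ(parallel=True)
opposed = MTJ(parallel=False)
print(aligned.resistance_ohm(), opposed.resistance_ohm())  # 5000.0 10000.0
```

In this simplified picture, the aligned state plays the role of a strong synapse and the opposed state a weak one.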

The binary switching of MTJs provides stability that previous neuromorphic approaches struggled to achieve. Memristive devices, for example, can drift or become noisy over time. MTJs, commonly used in MRAM technologies, have well-characterized switching behavior and strong endurance, making them appealing for long-term neuromorphic hardware.

In the team’s neuromorphic setup, MTJs form the synaptic network. As signals pass through the network, the MTJs adjust their conductance states based on coordinated activity. This reinforces specific pathways much like synaptic strengthening in the brain. Because the devices are small and energy-efficient, they offer a path toward scalable neuromorphic hardware.
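
As a rough illustration of how binary synapses could reinforce a pathway, the sketch below treats the network as a small grid of two-state synapses. The relative conductances `G_LOW` and `G_HIGH`, the helper names, and the deterministic switching are all assumptions made for this example; the actual circuit and switching behavior are described in the paper.

```python
G_LOW, G_HIGH = 1.0, 2.0  # assumed relative conductances of the two MTJ states

def output_currents(states, inputs):
    """Sum the input-driven current flowing into each output neuron."""
    n_out = len(states[0])
    return [
        sum(inputs[i] * (G_HIGH if states[i][j] else G_LOW)
            for i in range(len(inputs)))
        for j in range(n_out)
    ]

def reinforce(states, inputs, fired):
    """Hebbian step: switch synapse (i, j) to the high-conductance state
    when input i is active and output j fired."""
    for i, x in enumerate(inputs):
        for j, y in enumerate(fired):
            if x and y:
                states[i][j] = True

states = [[False] * 2 for _ in range(3)]  # 3 inputs, 2 outputs, all low
pattern = [1, 1, 0]
reinforce(states, pattern, fired=[1, 0])  # output 0 fired alongside the pattern
print(output_currents(states, pattern))   # [4.0, 2.0]
```

After a single reinforcement, the same input pattern drives twice as much current into the output that fired with it, so that pathway is now favored.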

What the Prototype Accomplished

The researchers built a small-scale neuromorphic system that successfully learned patterns and made predictions. The key achievement is not just that it worked, but that it achieved learning with far fewer training computations than traditional AI systems require.

This reduction in computation is particularly important because it implies a future where smart devices—phones, drones, sensors, household robots—could learn directly on-device without reliance on cloud training or large data centers. This would cut down energy usage dramatically while also enhancing privacy, speed, and autonomy.

The team will now focus on scaling the prototype. Building neuromorphic hardware that mirrors the brain’s complexity requires expanding the number of neurons, synapses, and layers while keeping the system stable and energy-efficient. Scaling also involves developing circuitry that supports real-time learning without losing reliability.

The Broader Promise of Neuromorphic Hardware

Neuromorphic computing isn’t new as a concept, but materials and fabrication methods have only recently caught up to theory. Today, the field spans several key research directions:

- Spiking neural networks, which communicate through discrete spikes, as biological neurons do, rather than continuous activation values

- Memristive devices, which adjust resistance based on charge flow

- Spintronics, which uses electron spin (as in MTJs) to store and process data

- Event-driven computing, where computation happens only when necessary, reducing power

MTJ-based synapses belong to the spintronics family and offer an interesting combination of durability, speed, and extremely low energy consumption. This makes them especially compelling for edge devices or battery-powered hardware.

If neuromorphic hardware becomes mature enough for mainstream use, it could transform how we build AI systems. Instead of centralizing everything in cloud servers, learning could move out into the world—embedded into cars, wearables, home devices, and industrial sensors.

Comparing Neuromorphic and Traditional AI

It’s helpful to highlight where neuromorphic computing differs most from traditional AI:

Energy Efficiency

Neuromorphic systems largely avoid the constant shuttling of data between memory and compute units, one of the biggest energy drains in traditional AI workflows.

Adaptability

Neuromorphic devices can adapt continuously, learning new patterns in real time. Traditional AI models usually require retraining, which is expensive.

Data Requirements

Hebbian learning does not require labeled data. This could reduce data collection and annotation efforts, which are major bottlenecks in machine learning.

Physical Footprint

Neuromorphic chips can be compact enough to be potentially suitable for mobile devices. Large AI models, by contrast, often require server-scale infrastructure.

However, neuromorphic computing is not yet positioned to replace modern deep learning for complex tasks. Instead, it might complement it—offloading tasks to efficient local learners while reserving heavy computation for the cloud.

What Comes Next

The next big challenge is scaling. The prototype demonstrates the principles, but full neuromorphic computers need millions or billions of synapses. Ensuring uniform behavior across large arrays, integrating circuitry, and maintaining consistent switching thresholds are all hurdles the researchers must address.

Still, this work represents a promising milestone. Each advancement brings neuromorphic computing closer to real-world applications where efficiency, adaptability, and low power matter.

Research Reference

Neuromorphic Hebbian learning with magnetic tunnel junction synapses. Communications Engineering.