New Computer Vision Method Accurately Links Real-World Photos to Floor Plans at the Pixel Level

Matching what we see around us to a map feels effortless for humans. Walk into a building, glance at a floor plan, and you can usually tell where you are within seconds. For computers, however, making this same connection has been a long-standing challenge. A new research effort from Cornell University is now pushing that boundary forward with a method that allows machines to connect ground-level photographs to architectural floor plans with pixel-level precision.

This work introduces both a new dataset and a new model, designed specifically to address one of the biggest weaknesses in modern computer vision: understanding scenes across dramatically different visual formats.

Why Photos and Floor Plans Are Hard to Match

Most current computer vision systems are trained almost entirely on photographs. They perform well when comparing one photo to another, even from different angles or lighting conditions. The problem arises when the input is no longer a photo but something abstract, like a floor plan.

Floor plans strip away textures, colors, and perspective. They reduce spaces to lines, symbols, and simple shapes. A camera photo, on the other hand, is full of visual clutter: furniture, lighting variations, reflections, and occlusions. Bridging this gap between real-world imagery and schematic representations has proven extremely difficult.

As a result, existing methods often fail badly, producing location errors that exceed 10% of the image size, which is far too inaccurate for real-world use in robotics or navigation.
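To make the "10% of the image size" figure concrete, here is a minimal Python sketch of one plausible way to express a localization error relative to image size. It assumes, for illustration only, that the error is the Euclidean distance between the predicted and true locations on the floor-plan image, divided by that image's diagonal. The article does not specify the exact normalization, and `normalized_error` is a hypothetical helper.

```python
import math

def normalized_error(pred_xy, true_xy, width, height):
    """Distance between predicted and true plan locations as a fraction of
    the plan image's diagonal (an assumed normalization, for illustration)."""
    return math.dist(pred_xy, true_xy) / math.hypot(width, height)

# On an 800 x 600 plan (diagonal 1000 px), a 100 px miss is a 10% error.
print(normalized_error((100, 100), (160, 180), 800, 600))
```

Under this convention, the failures described above correspond to values above 0.1.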

Introducing C3Po and the C3 Dataset

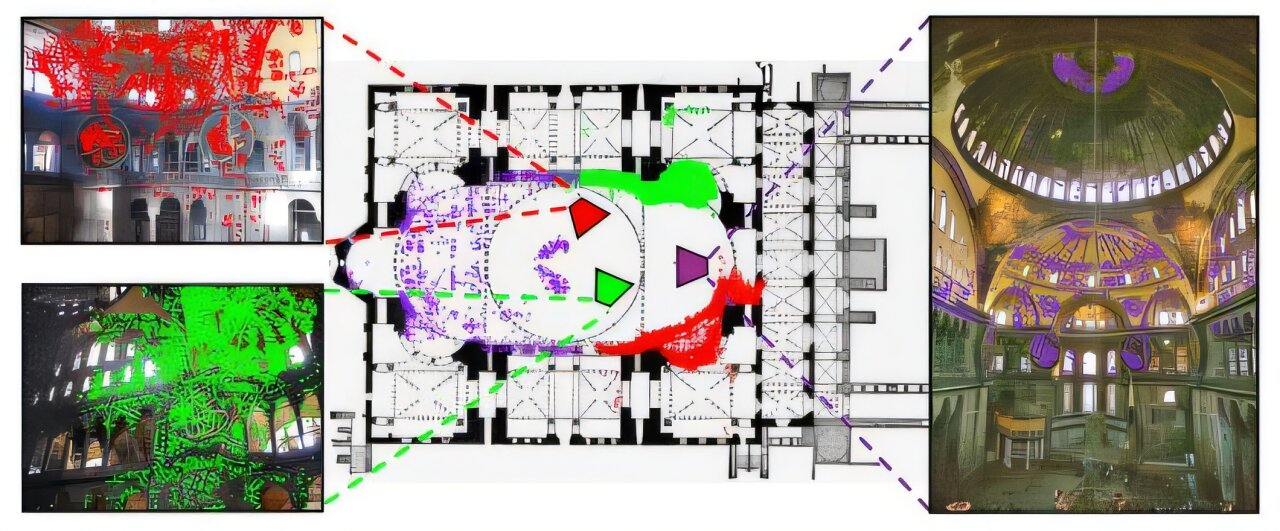

To tackle this problem, the Cornell team developed a system they call C3Po, short for Cross-View Cross-Modality Correspondence by Pointmap Prediction. Alongside the model, they created a massive new dataset known simply as C3.

The C3 dataset is one of the most detailed resources ever built for this task. It includes:

- 90,000 paired floor plan and photo examples

- 597 unique indoor scenes

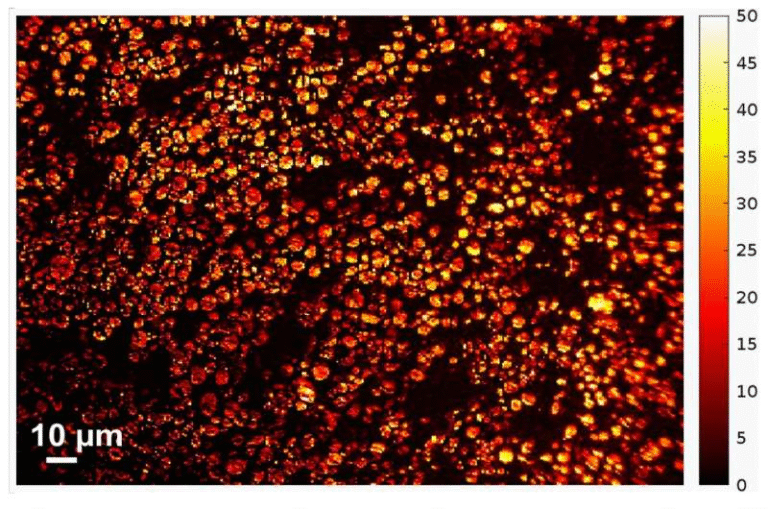

- 153 million pixel-level correspondences

- 85,000 precisely estimated camera poses

Each photo in the dataset is linked to a specific floor plan, with detailed annotations showing exactly which pixel in the image corresponds to which point on the plan. This level of detail is something earlier datasets simply did not provide at scale.
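Pixel-level correspondences of this kind are naturally stored as two aligned arrays of coordinates: row i of one array is a photo pixel, and row i of the other is its matching point on the plan. The sketch below is a hypothetical container, not the official C3 file format; the class and field names (`PlanPhotoPair`, `photo_uv`, `plan_xy`) are assumptions chosen for illustration.

```python
from dataclasses import dataclass

import numpy as np

@dataclass
class PlanPhotoPair:
    """Hypothetical container for one photo/floor-plan pair (not the C3 format)."""
    photo_path: str
    plan_path: str
    photo_uv: np.ndarray  # (N, 2) pixel coordinates (u, v) in the photo
    plan_xy: np.ndarray   # (N, 2) matching (x, y) coordinates on the floor plan

    def __post_init__(self):
        # Row i of photo_uv must correspond to row i of plan_xy.
        if self.photo_uv.shape != self.plan_xy.shape or self.photo_uv.shape[1:] != (2,):
            raise ValueError("photo_uv and plan_xy must both be (N, 2) with equal N")

    def plan_location(self, u, v):
        """Return the annotated plan coordinate for photo pixel (u, v), or None."""
        hits = np.flatnonzero((self.photo_uv[:, 0] == u) & (self.photo_uv[:, 1] == v))
        if hits.size == 0:
            return None
        return tuple(float(c) for c in self.plan_xy[hits[0]])

pair = PlanPhotoPair("photo.jpg", "plan.png",
                     photo_uv=np.array([[10, 20], [30, 40]]),
                     plan_xy=np.array([[1.5, 2.5], [3.5, 4.5]]))
print(pair.plan_location(30, 40))  # (3.5, 4.5)
```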

How the Dataset Was Built

Creating C3 was not a simple scraping exercise. The researchers followed a multi-step pipeline designed to ensure geometric accuracy.

First, they gathered large collections of photos of indoor spaces from public image sources. Using these images, they reconstructed each scene in 3D with structure-from-motion techniques. This process estimates camera positions and builds a sparse 3D point cloud of the environment.
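The camera positions recovered by structure from motion are what tie each photo to the 3D points: projecting a reconstructed point through a photo's estimated camera gives the pixel that observes it. Below is a minimal pinhole-projection sketch. It assumes a world-to-camera pose (`R`, `t`) and a 3x3 intrinsic matrix `K`; the function name and conventions are illustrative assumptions, not the researchers' code.

```python
import numpy as np

def project_points(points_world, R, t, K):
    """Project (N, 3) world points into (N, 2) pixel coordinates.

    R (3x3) and t (3,) are an assumed world-to-camera pose, X_cam = R @ X + t;
    K is the 3x3 intrinsic matrix. Also returns a mask of points lying in
    front of the camera. Occlusion and image bounds are not checked here.
    """
    X_cam = points_world @ R.T + t
    in_front = X_cam[:, 2] > 0
    uvw = X_cam @ K.T                  # homogeneous pixel coordinates
    pixels = uvw[:, :2] / uvw[:, 2:3]  # perspective divide
    return pixels, in_front

K = np.array([[100.0, 0.0, 50.0], [0.0, 100.0, 40.0], [0.0, 0.0, 1.0]])
pts = np.array([[1.0, 2.0, 10.0], [0.0, 0.0, -5.0]])
pixels, visible = project_points(pts, np.eye(3), np.zeros(3), K)
print(pixels[visible])  # the first point lands at pixel (60, 60)
```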

Next, the researchers manually aligned these 3D reconstructions with publicly available floor plans. This alignment step is crucial. Once the 3D model is matched to the floor plan, every photo pixel that observes a reconstructed 3D point can be mapped to an exact coordinate on the plan.

The result is a dataset that supports true geometric correspondence, rather than loose or approximate associations.
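The article does not say how the manual alignment is parameterized. One plausible sketch, assuming the reconstruction is gravity-aligned with z pointing up and that the alignment is a 2D similarity (scale, rotation, translation) on the ground plane, fits that transform by least squares (the Umeyama method) from a few hand-picked point pairs and then maps 3D points onto the plan. The helper names are hypothetical, and the sketch assumes the plan frame has the same handedness as the ground plane (flip the plan's y axis first if it points down).

```python
import numpy as np

def fit_similarity_2d(src, dst):
    """Least-squares 2D similarity with dst ~= s * R @ src + t (Umeyama, 1991).

    src, dst: (N, 2) matched points, N >= 2, not all coincident.
    """
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    a, b = src - mu_s, dst - mu_d
    cov = b.T @ a / len(src)           # 2x2 cross-covariance
    U, S, Vt = np.linalg.svd(cov)
    D = np.eye(2)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[1, 1] = -1.0                 # force a proper rotation, not a reflection
    R = U @ D @ Vt
    s = np.trace(np.diag(S) @ D) / ((a ** 2).sum() / len(src))
    t = mu_d - s * R @ mu_s
    return s, R, t

def to_plan(points_3d, s, R, t):
    """Map (N, 3) reconstruction points to plan coordinates (z assumed vertical, dropped)."""
    return s * points_3d[:, :2] @ R.T + t

src = np.array([[0.0, 0.0], [4.0, 0.0], [4.0, 3.0], [0.0, 3.0]])  # ground-plane picks
angle = np.deg2rad(30)
R_true = np.array([[np.cos(angle), -np.sin(angle)], [np.sin(angle), np.cos(angle)]])
dst = 2.0 * src @ R_true.T + np.array([5.0, -3.0])  # matching plan clicks (synthetic)
s, R, t = fit_similarity_2d(src, dst)
print(round(float(s), 6))  # 2.0
print(to_plan(np.array([[1.0, 1.0, 2.5]]), s, R, t))
```

A similarity transform keeps the sketch to four parameters, which a handful of manual clicks can pin down; a real pipeline might need more freedom if plans are not drawn to a uniform scale.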

How C3Po Works

Instead of trying to directly match visual features between a photo and a floor plan, C3Po takes a different approach.

The model predicts a pointmap for each image. This pointmap assigns every pixel in the photo a corresponding location in the floor plan’s coordinate system. In other words, the model learns to answer a simple but powerful question: Where on the floor plan does this pixel belong?

By framing the task this way, C3Po avoids many of the pitfalls that plague traditional matching techniques. The model is trained on the C3 dataset, which exposes it to a wide range of building layouts, lighting conditions, and camera viewpoints.
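Concretely, a pointmap for an H x W photo can be held as an H x W x 2 array whose entry at row v, column u is the predicted (x, y) location on the floor plan. The sketch below assumes that array layout and uses a hypothetical helper name; it flattens such a pointmap into explicit pixel-to-plan correspondences, optionally subsampled with a stride.

```python
import numpy as np

def pointmap_to_correspondences(pointmap, stride=1):
    """Flatten an (H, W, 2) pointmap into explicit correspondences.

    pointmap[v, u] is assumed to hold the predicted (x, y) plan location of
    photo pixel (u, v). Returns (N, 2) photo pixels as (u, v) and the (N, 2)
    plan locations in the same order, sampling every `stride`-th pixel.
    """
    H, W, _ = pointmap.shape
    v, u = np.mgrid[0:H:stride, 0:W:stride]  # row and column index grids
    pixels = np.stack([u.ravel(), v.ravel()], axis=1)
    plan_xy = pointmap[v, u].reshape(-1, 2)
    return pixels, plan_xy

pointmap = np.random.default_rng(0).random((4, 6, 2))  # stand-in for a model output
pixels, plan_xy = pointmap_to_correspondences(pointmap, stride=2)
print(pixels.shape, plan_xy.shape)  # (6, 2) (6, 2)
```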

Performance Improvements Over Existing Methods

When C3Po was tested against prior state-of-the-art models, the results were striking.

- Existing methods struggled to produce usable matches, often with large spatial errors.

- C3Po reduced prediction errors by about 34% compared to the best previous approach.

- The model also produced more reliable confidence estimates, meaning its predictions were especially accurate when it indicated high confidence.
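The two evaluation ideas in this list, a relative error reduction and confidence that tracks accuracy, can be illustrated with a few lines of Python. This is a sketch with toy inputs, not the paper's evaluation protocol: `relative_reduction` and `error_by_confidence` are hypothetical helpers, and the quantile thresholds are an arbitrary choice.

```python
import numpy as np

def relative_reduction(baseline_err, new_err):
    """Fractional error reduction; 0.34 means 34% lower error than the baseline."""
    return (baseline_err - new_err) / baseline_err

def error_by_confidence(errors, confidence, quantiles=(0.0, 0.5, 0.9)):
    """Mean error of the predictions whose confidence is at or above each
    quantile of the confidence distribution. For a well-calibrated model,
    the mean error should fall as the threshold rises."""
    errors = np.asarray(errors, dtype=float)
    confidence = np.asarray(confidence, dtype=float)
    result = {}
    for q in quantiles:
        threshold = np.quantile(confidence, q)
        result[q] = float(errors[confidence >= threshold].mean())
    return result

# Toy data only: a method whose error drops from 10.0 to 6.6 is about 34% better.
print(relative_reduction(10.0, 6.6))
print(error_by_confidence([5.0, 4.0, 3.0, 2.0, 1.0], [0.1, 0.2, 0.3, 0.4, 0.5]))
```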

While the task remains challenging (symmetrical layouts and visually ambiguous spaces still cause problems), the improvement represents a major step forward.

Why This Matters for Robotics and Navigation

The ability to connect photos to floor plans has wide-ranging implications.

For indoor navigation, this technology could allow smartphones, AR headsets, or assistive devices to localize users inside complex buildings without relying on GPS.

For robots, understanding where a camera image fits within a known layout is essential for safe and efficient movement. A robot equipped with this capability could navigate offices, hospitals, or warehouses with far greater autonomy.

In 3D reconstruction, linking photos to architectural plans can help create more accurate digital twins of real spaces, which are increasingly important in construction, real estate, and virtual design.

A Step Toward Multi-Modal 3D Vision

One of the broader goals behind this research is pushing computer vision beyond single-modality training. Today’s large vision models are powerful, but they are limited by the data they see. When trained only on photographs, they struggle with drawings, maps, or diagrams.

By combining images, floor plans, and 3D geometry, the C3 project points toward a future where models can reason across many different representations of the same space. This multi-modal direction mirrors trends already underway in other areas of artificial intelligence.

Extra Context: Why Pixel-Level Correspondence Is So Important

Pixel-level correspondence is more than just a technical benchmark. It enables downstream tasks such as:

- Precise camera localization within buildings

- Automatic annotation of indoor spaces

- Cross-referencing architectural plans with visual inspections

- Improved simulation environments for AI training

Without pixel-level accuracy, small errors compound quickly, making systems unreliable in real-world settings. C3Po’s focus on dense, per-pixel mapping is what sets it apart from earlier work.
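Precise camera localization, the first task in the list above, shows why dense correspondences are useful. As a minimal sketch under strong assumptions (a camera held level, with no pitch or roll, so that a pixel's column depends only on where the observed point sits on the plan), each pixel-to-plan correspondence constrains a 1D pinhole camera, and five or more of them determine the camera's position on the plan through a direct linear transform (DLT). This is a textbook resection technique offered for illustration, not the method used by C3Po; `resect_level_camera` is a hypothetical name.

```python
import numpy as np

def resect_level_camera(u, plan_xy):
    """Estimate a level camera's (x, y) position on the floor plan.

    u:       (N,) horizontal pixel coordinates, N >= 5
    plan_xy: (N, 2) floor-plan coordinates matched to those pixels
    Assumes zero pitch and roll, so the camera acts as a 1D pinhole camera:
    [u * w, w]^T = P @ [x, y, 1]^T for some 2x3 matrix P.
    """
    u = np.asarray(u, dtype=float)
    plan_xy = np.asarray(plan_xy, dtype=float)
    # Rescaling u only left-multiplies P by an invertible 2x2 matrix, which
    # leaves the camera centre (P's null space) unchanged; it helps conditioning.
    u_n = (u - u.mean()) / u.std()
    X = np.column_stack([plan_xy, np.ones(len(u_n))])  # homogeneous plan points
    # Each match gives p1 . X - u * (p2 . X) = 0, linear in the 6 entries of P.
    A = np.hstack([X, -u_n[:, None] * X])
    _, _, Vt = np.linalg.svd(A)
    P = Vt[-1].reshape(2, 3)  # least-squares solution with ||P|| = 1
    # The camera centre C satisfies P @ [C_x, C_y, 1]^T = 0.
    _, _, Vt_p = np.linalg.svd(P)
    c = Vt_p[-1]
    return c[:2] / c[2]

# Synthetic check: a camera at (2, 3) facing 0.3 rad, focal length 500 px.
rng = np.random.default_rng(0)
center, heading = np.array([2.0, 3.0]), 0.3
forward = np.array([np.cos(heading), np.sin(heading)])
right = np.array([np.sin(heading), -np.cos(heading)])
depth, lateral = rng.uniform(2, 10, 12), rng.uniform(-3, 3, 12)
points = center + depth[:, None] * forward + lateral[:, None] * right
u = 500 * lateral / depth + 320  # pinhole projection onto the image row
print(resect_level_camera(u, points))  # recovers (2, 3) up to rounding
```

With noisy predicted correspondences, a practical version would wrap this in a robust estimator such as RANSAC; the linear solve above only illustrates how many per-pixel matches constrain a single camera position.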

Looking Ahead

While C3Po does not solve every challenge in cross-modal vision, it establishes a strong foundation. The release of the C3 dataset is likely to have a lasting impact, giving other researchers the tools they need to explore new models and ideas in this space.

As indoor environments become increasingly important for robotics, augmented reality, and smart infrastructure, methods like this will play a key role in helping machines understand the built world the way humans already do.

Research Paper:

https://arxiv.org/abs/2511.18559