Patient Privacy in the Age of Clinical AI: Why Scientists Are Worried About Memorization Risk

Patient privacy has always been one of the core pillars of medical practice. Long before digital records, cloud storage, or artificial intelligence existed, doctors were expected to protect what they learned about their patients. The Hippocratic Oath, one of the oldest ethical guides in medicine, makes this expectation clear by emphasizing that personal information seen or heard during treatment must remain private. That idea of confidentiality has helped patients trust doctors with their most sensitive details for centuries.

Today, however, medicine operates in a very different environment. Health care systems increasingly rely on data-driven technologies, electronic health records, and large-scale artificial intelligence models. While these tools promise better predictions, improved diagnostics, and more efficient care, they also introduce new risks. A recent study by researchers affiliated with MIT closely examines one of the most concerning of these risks: the possibility that clinical AI systems may memorize individual patient data, even when trained on supposedly de-identified records.

Why Patient Privacy Still Matters So Much

In an era where personal data is constantly collected, analyzed, and sometimes exploited, health information remains uniquely sensitive. Medical records can reveal diagnoses, mental health conditions, genetic risks, substance use history, and other details that could deeply affect a person’s life if exposed. Privacy is not just a legal requirement; it is essential for patient trust. Without confidence that their data will remain confidential, patients may avoid care, withhold information, or disengage from the health system altogether.

As AI becomes more embedded in clinical decision-making, this trust faces new challenges. Large “foundation models” trained on massive health datasets are capable of extracting subtle patterns from patient data. But with that power comes the risk that these models may retain more information than intended.

The Risk of Memorization in Clinical AI

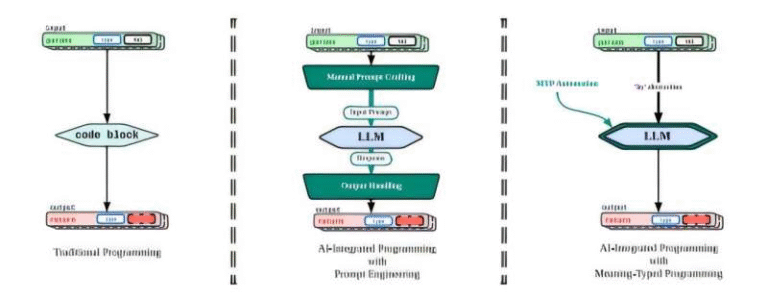

The research at the center of this discussion focuses on the difference between generalization and memorization in AI models. Generalization is what developers aim for: models learn broad trends across many patients and apply that knowledge to new cases. Memorization, on the other hand, occurs when a model draws on a single patient’s record, rather than patterns shared across patients, to produce its output.

This distinction is crucial. If a model memorizes patient-specific details, it could reveal sensitive information when prompted in certain ways. Such leakage is especially concerning because foundation models in other domains are already known to expose training data in response to targeted or adversarial prompts.
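A toy example can make the difference concrete. The sketch below is a hypothetical illustration, not the study’s method. A simple count-based “model” estimates how likely a patient profile is to carry a sensitive diagnosis. A leave-one-out comparison then measures how much one patient’s own record shifts the prediction for that same patient. The records, field values, and helper names (`train`, `predict`, `memorization_gap`) are invented for illustration.

```python
from collections import Counter

# Hypothetical toy data: each record is (profile, has_sensitive_diagnosis),
# where profile = (age_band, sex, condition_flag). All values are invented.
Profile = tuple[str, str, str]
Record = tuple[Profile, bool]


def train(records: list[Record]) -> tuple[Counter, Counter]:
    """'Train' a lookup model by counting records and positives per profile."""
    totals = Counter(profile for profile, _ in records)
    positives = Counter(profile for profile, dx in records if dx)
    return totals, positives


def predict(model: tuple[Counter, Counter], profile: Profile) -> float:
    """Laplace-smoothed estimate of P(diagnosis | profile)."""
    totals, positives = model
    return (positives[profile] + 1) / (totals[profile] + 2)


def memorization_gap(records: list[Record], index: int) -> float:
    """Prediction for one patient with vs. without that patient's own record.

    A large gap means the output leans on that single record (memorization);
    a small gap means it reflects patterns shared by many patients.
    """
    profile, _ = records[index]
    with_record = predict(train(records), profile)
    without_record = predict(train(records[:index] + records[index + 1:]), profile)
    return with_record - without_record


# 50 patients share a common profile (10 of them have the diagnosis);
# one additional patient has a unique profile.
records: list[Record] = [(("40-49", "F", "none"), i < 10) for i in range(50)]
records.append((("40-49", "M", "rare"), True))

print(round(memorization_gap(records, 0), 3))   # common profile -> 0.015
print(round(memorization_gap(records, 50), 3))  # unique profile -> 0.167
```

For a patient who shares a profile with 49 others, removing their record barely moves the estimate, which is what generalization looks like. For the single patient with a unique profile, the prediction depends heavily on their own record. This illustrates why patients with unusual health profiles are especially vulnerable.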

The study, co-authored by MIT researchers and published as a preprint on arXiv, was presented at NeurIPS 2025, one of the world’s leading conferences on machine learning. The researchers argue that current evaluation practices are not sufficient to detect or measure privacy risks in health care AI.

How the Researchers Studied the Problem

To better understand the real-world risks, lead author Sana Tonekaboni, a postdoctoral researcher at the Broad Institute of MIT and Harvard, collaborated with Marzyeh Ghassemi, an associate professor at MIT and a principal investigator at the Abdul Latif Jameel Clinic for Machine Learning in Health. Ghassemi leads the Healthy ML group, which focuses on building robust and responsible machine learning systems for medical use.

The team designed a structured testing framework aimed at answering a key question: how much information does an attacker need to extract sensitive patient data from a clinical AI model? Instead of treating all data leaks as equally harmful, their approach evaluates privacy risks in context.

They created multiple tiers of attack scenarios, each reflecting a different level of prior knowledge. For example, an attacker who already knows the values and dates of many lab tests from a patient’s record might be able to coax more information out of a model. But if extracting meaningful data requires such extensive prior access, the practical risk may be low: an attacker who already holds that level of protected information gains little by targeting the model.
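The logic of tiered attacker knowledge can be illustrated with a simplified, hypothetical sketch. It does not attack a model. Instead, it counts how many records in an invented cohort remain consistent with what an attacker knows about a target patient, tier by tier. The cohort, field names, and tiers are assumptions made for illustration and are not taken from the study.

```python
# Hypothetical de-identified cohort; every field and value is invented.
COHORT = [
    {"id": 1, "age_band": "60-69", "sex": "F", "lab_date": "2021-03-02", "lab_value": 6.1},
    {"id": 2, "age_band": "60-69", "sex": "F", "lab_date": "2021-03-02", "lab_value": 7.4},
    {"id": 3, "age_band": "60-69", "sex": "F", "lab_date": "2021-05-10", "lab_value": 6.1},
    {"id": 4, "age_band": "60-69", "sex": "M", "lab_date": "2021-03-02", "lab_value": 6.1},
    {"id": 5, "age_band": "50-59", "sex": "F", "lab_date": "2021-03-02", "lab_value": 6.1},
    {"id": 6, "age_band": "60-69", "sex": "F", "lab_date": "2021-03-02", "lab_value": 5.8},
]

# Each tier adds one more piece of prior knowledge about the target patient.
TIERS = [
    ("tier 1: age band", ["age_band"]),
    ("tier 2: + sex", ["age_band", "sex"]),
    ("tier 3: + lab date", ["age_band", "sex", "lab_date"]),
    ("tier 4: + lab value", ["age_band", "sex", "lab_date", "lab_value"]),
]


def candidate_set(cohort: list[dict], target: dict, known_fields: list[str]) -> list[dict]:
    """Records consistent with everything the attacker already knows about the target."""
    return [r for r in cohort if all(r[f] == target[f] for f in known_fields)]


def tier_report(cohort: list[dict], target_id: int) -> list[tuple[str, int]]:
    """Size of the candidate set at each knowledge tier (1 = target singled out)."""
    target = next(r for r in cohort if r["id"] == target_id)
    return [(name, len(candidate_set(cohort, target, fields))) for name, fields in TIERS]


# Prints 5, 4, 3, and 1 matching record(s) for tiers 1 through 4.
for name, size in tier_report(COHORT, target_id=1):
    print(f"{name}: {size} matching record(s)")
```

By the final tier the attacker can single out the target, but only after already holding that patient’s lab history. That is exactly the situation in which attacking the model adds little.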

What the Tests Revealed

The researchers found a clear pattern: the more information an attacker already has about a patient, the more likely the model is to leak additional details. This reinforces the idea that privacy risks cannot be assessed in isolation. Context matters, especially in health care.

Importantly, the study shows how to distinguish true model generalization from patient-level memorization. That distinction helps determine whether a given leak represents a meaningful threat or merely a theoretical one.

Another key insight is that not all data leaks carry the same level of harm. Revealing basic demographic details such as age or sex is generally less damaging than exposing highly sensitive information like an HIV diagnosis, alcohol abuse history, or rare medical conditions. Patients with unusual or unique health profiles are particularly vulnerable, as they are easier to identify even in de-identified datasets.

The Broader Context of Health Data Breaches

These concerns are not occurring in a vacuum. Health data breaches are already a significant problem. Over the past two years alone, the U.S. Department of Health and Human Services has recorded 747 breaches affecting more than 500 individuals each, with most incidents classified as hacking or IT-related attacks. As medical records become increasingly digitized, the attack surface continues to grow.

AI systems trained on these records represent a new and complex layer of risk. Even if data is de-identified, memorization could undermine those safeguards once models are deployed or shared.

Why This Matters for the Future of Health Care AI

The researchers stress that their goal is not to halt progress in clinical AI, but to encourage practical and rigorous evaluation before models are released. Privacy testing should be treated as seriously as accuracy testing, especially when patient safety and trust are at stake.

They also emphasize the need for interdisciplinary collaboration. Addressing these challenges will require input from clinicians, privacy experts, legal scholars, and policymakers. Health data is protected for a reason, and AI developers must respect those boundaries as technology evolves.

Extra Context: AI and Privacy Beyond Health Care

Memorization risk is not unique to medicine. Large language models trained on internet-scale data have previously been shown to reproduce private text, phone numbers, or other personal details when given the right prompts. In health care, however, the stakes are much higher. A leaked diagnosis or treatment history can lead to discrimination, stigma, or emotional harm.

Techniques such as differential privacy, data minimization, and controlled access are increasingly discussed as possible safeguards. However, this study highlights that technical solutions must be paired with realistic threat modeling and domain-specific evaluation.
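As one concrete example of these safeguards, the sketch below applies the classic Laplace mechanism from differential privacy to a single count query, such as how many patients in a cohort have a particular diagnosis. This is a textbook building block, not a technique evaluated in the study. Training an entire model with differential privacy (for example with DP-SGD) is considerably more involved. The count and epsilon values are hypothetical.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from Laplace(0, scale).

    The difference of two independent Exp(1) draws follows Laplace(0, 1);
    multiplying by `scale` gives Laplace(0, scale).
    """
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    Adding or removing one patient changes a count by at most 1
    (sensitivity 1), so the noise scale is sensitivity / epsilon.
    """
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(0)
true_count = 12  # hypothetical number of patients with a rare diagnosis
for eps in (0.1, 1.0, 10.0):
    print(f"epsilon={eps}: released count {private_count(true_count, eps, rng):.2f}")
```

Smaller values of `epsilon` add more noise and give stronger privacy guarantees at the cost of accuracy. Any realistic threat model has to weigh that trade-off.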

What Comes Next

The research team plans to expand this work by incorporating broader expertise and refining their evaluation methods. Their hope is that these tools will become standard practice for assessing privacy risks in health care foundation models.

As AI continues to reshape medicine, one principle remains clear: innovation should not come at the cost of patient privacy. Trust, once lost, is difficult to regain, and safeguarding sensitive health data must remain a top priority.

Research paper:

https://arxiv.org/abs/2510.12950