Researchers Warn That AI Virtual Staining Can Sometimes Reduce Accuracy in Medical Image Analysis

Artificial intelligence keeps finding its way into nearly every corner of healthcare, and virtual staining is one of the most talked-about ideas in medical imaging right now. The concept sounds almost too good to be true: feed a label-free microscopy image into an AI model and get back a synthetic “stained” version that resembles a traditional fluorescence-stained image, with no time spent on chemical staining and no risk of damaging delicate samples.

But a new study from the Beckman Institute for Advanced Science and Technology and the Center for Label-free Imaging and Multiscale Biophotonics (CLIMB) shows that looking impressive doesn’t always mean performing better. The researchers evaluated how helpful these AI-generated images actually are for real scientific and clinical tasks. Their conclusion: virtual staining can help in some scenarios but may actually hurt accuracy in others, especially when powerful neural networks are used for downstream analysis.

This article walks through the full details of the findings, how the study was conducted, why the results matter, and what they reveal about the broader limitations of AI in biomedical imaging.

What Virtual Staining Is and Why It Exists

Microscopy images are essential in everything from disease diagnosis to drug development. Traditionally, scientists use chemical stains or fluorescent dyes to make specific structures, such as cell nuclei, stand out. Staining adds contrast and reveals important biological features, but it has several drawbacks:

- It can be time-consuming.

- It can damage or kill cells.

- It prevents researchers from reusing the same sample for other types of analysis.

To avoid these issues, many labs rely on label-free imaging, which visualizes cells through their intrinsic interactions with light rather than through added dyes. However, label-free images typically have lower contrast, making subtle structures harder to see.

Virtual staining tries to combine the best of both worlds. Using deep learning–based image-to-image translation, a model predicts what a label-free image would look like if it had been chemically stained. Ideally, the result should show high-contrast cellular features without any wet-lab staining.
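At its core, virtual staining is supervised regression. Given paired examples, a model learns to map label-free pixel values to stained pixel values. The sketch below is a deliberately tiny stand-in that makes three assumptions:

- The paired data are synthetic.
- The model is a per-pixel quadratic fit, solved with NumPy least squares.
- The names `features` and `virtual_stain` are hypothetical.

Real virtual staining models are deep neural networks, for example Pix2pixHD-style conditional GANs, and they exploit spatial context that this toy ignores.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical synthetic paired data: low-contrast "label-free" intensities and a
# high-contrast "stained" target that depends on them (plus a little noise).
label_free = rng.uniform(0.4, 0.6, size=(64, 64))
stained = 25.0 * (label_free - 0.4) ** 2 + rng.normal(0.0, 0.01, size=(64, 64))

def features(x):
    # Per-pixel polynomial features [1, x, x^2]; a real model would use
    # neighbourhoods of pixels, not single values.
    return np.stack([np.ones_like(x), x, x ** 2], axis=-1).reshape(-1, 3)

# "Training": least-squares fit on the paired images.
weights, *_ = np.linalg.lstsq(features(label_free), stained.ravel(), rcond=None)

def virtual_stain(x):
    # "Inference": predict a stained image from a label-free one.
    return (features(x) @ weights).reshape(x.shape)

pred = virtual_stain(label_free)
mse_model = np.mean((pred - stained) ** 2)          # error of the learned mapping
mse_identity = np.mean((label_free - stained) ** 2)  # error of using the raw input as-is
print(f"MSE (learned mapping): {mse_model:.5f}, MSE (raw input): {mse_identity:.5f}")
```

Because the synthetic target is an exact quadratic of the input plus small noise, the fitted mapping's error lands near the noise level. The example shows only the paired-training setup, not realistic performance.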

Because this approach promises speed, safety, and scalability, it has become a popular AI application in biomedical imaging. But the question the researchers asked is fundamental: Do virtual stains actually improve downstream tasks, or do they just look good to the human eye?

How the Study Was Designed

To answer this, the team needed large amounts of paired data: label-free images matched with ground-truth fluorescently stained images of the same cells. Fortunately, the lab of Yang Liu had recently developed the Omni-Mesoscope, a high-throughput imaging system capable of capturing tens of thousands of cells within minutes. It provided a massive dataset covering many different cell states, including responses to drug treatments.

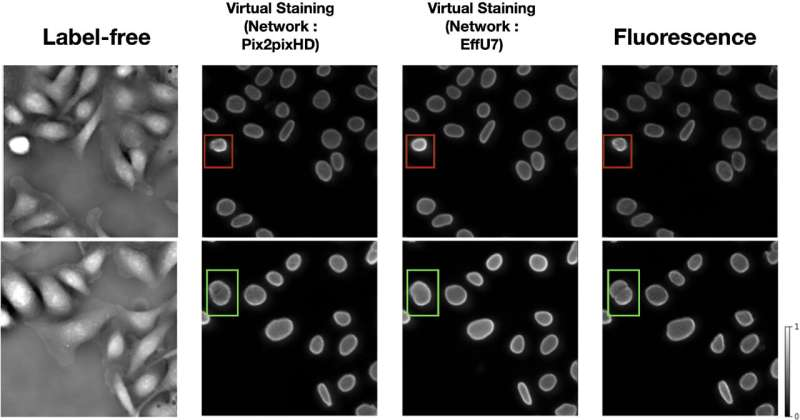

The researchers used these paired images to train and evaluate virtual staining models, including Pix2pixHD-based and EffU7-based networks. They then compared three image types:

- Label-free images

- Virtually stained images

- Ground-truth fluorescent stained images

To test how useful each image type actually is, they evaluated two downstream tasks that scientists and clinicians commonly rely on:

1. Segmentation Task

Networks had to identify individual cell nuclei and delineate each one, much like isolating objects in a photograph. Good segmentation is essential for analyzing cell shape, counting cells, and running quantitative measurements.
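Segmentation quality is commonly scored by comparing a predicted mask against a ground-truth mask. The article does not say which metric the study used. The sketch below therefore assumes two standard overlap scores, the Dice coefficient and intersection over union (IoU), computed on binary foreground masks with NumPy.

Per-nucleus (instance-level) evaluation would also need to match predicted and true nuclei one-to-one, which this sketch omits. The function name `dice_and_iou` is hypothetical.

```python
import numpy as np

def dice_and_iou(pred, truth):
    """Return (Dice, IoU) for two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    intersection = np.logical_and(pred, truth).sum()
    union = np.logical_or(pred, truth).sum()
    total = pred.sum() + truth.sum()
    # Convention: two empty masks count as a perfect match.
    dice = 2.0 * intersection / total if total else 1.0
    iou = intersection / union if union else 1.0
    return float(dice), float(iou)

# Toy example: a 3x3 "nucleus" and a prediction shifted down by one row.
truth = np.zeros((6, 6), dtype=bool)
truth[1:4, 1:4] = True
pred = np.zeros((6, 6), dtype=bool)
pred[2:5, 1:4] = True

dice, iou = dice_and_iou(pred, truth)
print(f"Dice = {dice:.3f}, IoU = {iou:.3f}")  # Dice = 0.667, IoU = 0.500
```

The shifted prediction overlaps the true nucleus in 6 of its 9 pixels. Dice weights that overlap against the combined mask sizes, while IoU weights it against their union, so IoU is always the stricter of the two.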

2. Cell Classification Task

Networks were also asked to determine what state each cell was in, such as whether it had undergone drug treatment. This mimics real workflows in drug testing and disease research.

For each task, the team evaluated performance using five different neural networks with varying capacities. Some were simple, low-capacity models; others were high-capacity networks capable of learning very complex relationships.

What the Researchers Found

The results turned out to be far from straightforward, and in some cases surprising.

Virtual Staining Helps Simple Models

When low-capacity networks processed the images:

- They performed significantly better on virtually stained images than on label-free images.

- This is likely because virtual staining emphasizes prominent features, making them easier for simple models to pick up.

In these situations, AI-generated staining essentially acts as a helpful preprocessing step.

But With More Powerful Networks, the Advantages Disappear

For high-capacity networks, virtual staining did not improve segmentation accuracy: they performed about equally well on label-free and virtually stained images.

And in the cell classification task, the findings were even more striking:

- High-capacity networks performed substantially worse on virtually stained images than on label-free images.

This suggests that the virtual staining process removed or altered information that the more advanced models needed to distinguish subtle biological states.

The Importance of the Data Processing Inequality

The team points to a well-known concept in information theory: the data processing inequality. In simple terms, it states that:

No processing step can increase the information that raw data carry about the underlying quantity of interest.
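The principle can be stated formally. The variable names below are an illustrative mapping onto the study's setting, not notation from the paper:

- X is a cell's true biological state.
- Y is the label-free image.
- Z = g(Y) is the virtually stained image produced by a staining model g.
- h is any downstream task network.

Because Z is computed from Y alone, X → Y → Z forms a Markov chain, and the mutual information I obeys:

```latex
X \to Y \to Z = g(Y) \;\Longrightarrow\; I(X;Z) \le I(X;Y)

\hat{X} = h(Z) \;\Longrightarrow\; I(X;\hat{X}) \le I(X;Z) \le I(X;Y)
```

The inequality bounds how much information is available, not how easily a particular network can extract it. That is why it is consistent with low-capacity models still benefiting from a more legible input.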

Virtual staining is a form of image processing. Even if it produces an image that looks clearer to a human viewer, it cannot add real biological information. In some cases, it may unintentionally remove or distort subtle patterns that matter for high-level analysis.

The researchers liken it to editing a photo: you can brighten it, sharpen it, or blur the background, but you cannot recreate detail that was never captured in the original shot.
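The photo-editing point can be made concrete with a toy calculation. The sketch below uses a made-up discrete model, not data from the study:

- X is a binary cell state.
- Y is a four-level raw measurement whose class signal lives in a fine-grained pattern.
- Z = `process(Y)` is a processing step that keeps only the coarse brightness band.

The code computes the mutual information I(X;Y) and I(X;Z) exactly from the joint distributions, in pure Python. The names `mutual_information` and `process` are hypothetical.

```python
import math
from collections import defaultdict

def mutual_information(joint):
    """I(A;B) in bits, given a dict mapping (a, b) -> joint probability."""
    p_a, p_b = defaultdict(float), defaultdict(float)
    for (a, b), p in joint.items():
        p_a[a] += p
        p_b[b] += p
    return sum(p * math.log2(p / (p_a[a] * p_b[b]))
               for (a, b), p in joint.items() if p > 0)

# Hypothetical model: X = cell state (0 = untreated, 1 = treated), equally likely.
p_x = {0: 0.5, 1: 0.5}
# Raw measurement Y in {0, 1, 2, 3}; the state shows up only in a subtle
# alternating pattern across the four levels.
p_y_given_x = {0: [0.4, 0.1, 0.4, 0.1], 1: [0.1, 0.4, 0.1, 0.4]}

def process(y):
    # Hypothetical processing: keep the coarse band (levels 0-1 vs 2-3),
    # discard the fine detail within each band.
    return y // 2

joint_xy = {(x, y): p_x[x] * p_y_given_x[x][y] for x in p_x for y in range(4)}
joint_xz = defaultdict(float)
for (x, y), p in joint_xy.items():
    joint_xz[(x, process(y))] += p  # Z depends on X only through Y

i_xy = mutual_information(joint_xy)
i_xz = mutual_information(dict(joint_xz))
print(f"I(X;Y) = {i_xy:.3f} bits, I(X;Z) = {i_xz:.3f} bits")
```

Here I(X;Y) comes out to roughly 0.278 bits, while I(X;Z) is zero. The processed signal keeps the prominent contrast but has lost everything that distinguished the two states. No downstream network, however high its capacity, can recover that from Z alone.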

What This Means for AI in Medicine

This study is a reminder that AI must be applied carefully in clinical or biological workflows. The researchers emphasize several important takeaways:

- Good-looking images are not automatically useful images.

- AI-generated content should be validated on the exact task it is meant to support.

- Virtual staining may suit analysis pipelines built on low-capacity models but is risky when high-capacity networks must make subtle biological distinctions.

- Overreliance on AI without performance validation can lead to incorrect conclusions in sensitive fields like drug development or medical diagnosis.

While the team believes virtual staining remains a promising tool, they urge clinicians and researchers to evaluate it cautiously and scientifically before adopting it.

Extra Context: How Virtual Staining Fits Into the Broader AI Landscape

Virtual staining is part of a larger wave of AI innovations in microscopy and pathology, such as:

- Super-resolution imaging models that aim to recover detail beyond the optical resolution of the microscope.

- Cell-type prediction models that infer molecular markers from brightfield images.

- Histopathology translation models that emulate H&E stains or immunohistochemistry.

All of these techniques face similar challenges:

- They require large paired datasets, which are difficult to obtain.

- They risk hallucinating or suppressing biologically relevant information.

- They need extensive validation before they can gain regulatory approval for medical use.

As AI continues to expand into biomedical imaging, studies like this help define where the technology is trustworthy and where caution is necessary. The combination of powerful models and delicate biological data means unintended distortions can have real-world consequences.

Research Paper

On the Utility of Virtual Staining for Downstream Applications as It Relates to Task Network Capacity

https://doi.org/10.1364/BOE.576061