Robot Learns to Lip Sync by Watching YouTube Videos and Studying Its Own Face

Almost half of human attention during face-to-face conversation is directed toward the mouth. We instinctively watch lips move to better understand speech, emotion, and intent. Yet for decades, robots have struggled to replicate this seemingly simple behavior. Even advanced humanoid robots often rely on stiff, exaggerated mouth movements that feel unnatural. Now, researchers at Columbia University’s School of Engineering and Applied Science have taken a major step toward solving this problem by creating a robot that can learn realistic lip movements simply by watching humans and observing itself.

The research team, led by Hod Lipson at Columbia’s Creative Machines Lab, has developed a humanoid robotic face that can learn how to move its lips for speech and singing without being explicitly programmed with rules. Instead of following prewritten instructions, the robot learns by observation, much like a human child. This work was recently published in the journal Science Robotics and demonstrates what may be a turning point in how robots learn facial communication.

At the center of the study is a robotic face equipped with 26 small motors placed beneath a flexible artificial skin. These motors act like facial muscles, allowing the robot to stretch, compress, and shape its lips in complex ways. Building such hardware is already a challenge, as the motors must move quickly, precisely, and quietly while coordinating with one another. But hardware alone is not enough. The harder problem is figuring out how to control those motors so the face looks natural rather than unsettling.

To solve this, the researchers avoided traditional rule-based systems that map sounds to fixed mouth shapes. Those systems often produce robotic, puppet-like expressions. Instead, they let the robot learn directly from visual experience.
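
For contrast, the rule-based approach the researchers moved away from can be pictured as a fixed lookup table from sounds to mouth shapes. The sketch below is purely illustrative: the phoneme symbols follow the common ARPAbet convention, while the mouth-shape labels, the table contents, and the function name are assumptions made for this example and are not taken from the paper.

```python
# Hypothetical lookup table: each phoneme always maps to the same mouth shape.
# The shape labels are invented for illustration only.
VISEME_TABLE = {
    "B": "lips_closed", "P": "lips_closed", "M": "lips_closed",
    "W": "lips_puckered", "UW": "lips_puckered",
    "AA": "jaw_open", "AE": "jaw_open",
    "F": "lip_to_teeth", "V": "lip_to_teeth",
}


def rule_based_visemes(phonemes, default="neutral"):
    """Map each phoneme to a fixed mouth shape, ignoring context and timing."""
    return [VISEME_TABLE.get(p, default) for p in phonemes]


shapes = rule_based_visemes(["B", "AA", "W", "S"])
print(shapes)
```

Because every sound snaps to one preset shape regardless of what comes before or after it, a scheme like this tends to produce the puppet-like motion described above.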

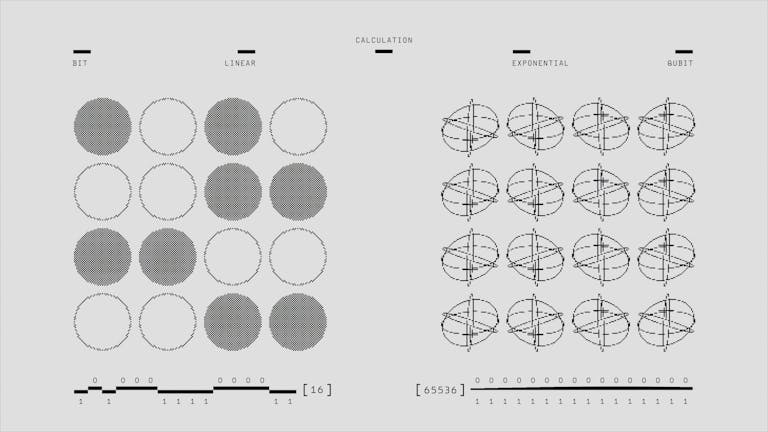

The learning process unfolded in two major stages. First, the robot was placed in front of a mirror. With no prior knowledge of how its face worked, it began making thousands of random facial expressions and lip movements. As it watched its reflection, the robot learned how specific motor actions changed its facial appearance. Over time, it built an internal understanding of how to move its own face to achieve certain visual outcomes. What it learns in this stage is known as a vision-to-action model, which links what the system sees to how it moves.
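
The mirror stage can be sketched in a few lines, under heavy simplifying assumptions. Here a made-up two-motor "face" stands in for the real 26-motor hardware and camera, and a nearest-neighbor lookup stands in for whatever learned model the researchers actually used. The function names, the two appearance features, and the numeric coefficients are all hypothetical.

```python
import random


def simulated_face(motors):
    """Hypothetical stand-in for mirror + camera: motor values -> visible lip shape."""
    jaw, corner = motors
    mouth_open = 0.8 * jaw + 0.1 * corner
    lip_width = 0.5 + 0.4 * corner - 0.1 * jaw
    return (mouth_open, lip_width)


def babble(n_samples, seed=0):
    """Try random motor commands and remember what each one looked like."""
    rng = random.Random(seed)
    memory = []
    for _ in range(n_samples):
        motors = (rng.random(), rng.random())
        memory.append((motors, simulated_face(motors)))
    return memory


def vision_to_action(memory, target):
    """Return the remembered motor command whose observed result is closest to target."""
    def dist2(observed):
        return sum((o - t) ** 2 for o, t in zip(observed, target))
    motors, _ = min(memory, key=lambda pair: dist2(pair[1]))
    return motors


memory = babble(5000)
target = simulated_face((0.3, 0.7))   # a lip shape we would like to reproduce
motors = vision_to_action(memory, target)
achieved = simulated_face(motors)
print(target, achieved)
```

The point of the sketch is the direction of the mapping: the robot is never told how its motors shape its face, yet after enough random trials it can go from a desired appearance back to a motor command that approximately produces it.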

Once the robot understood its own face, the second stage began. The researchers exposed it to hours of videos of humans speaking and singing, many of them sourced from YouTube. The robot did not receive transcripts or explanations of what the words meant. It simply watched how human lips moved while listening to the corresponding sounds. By combining what it learned from the mirror stage with what it observed from humans, the robot developed the ability to translate audio directly into facial motor actions.
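
One way to picture how the two stages combine is as a chain: audio is first matched to a human lip shape, and that lip shape is then matched to the robot's own motor commands. The toy sketch below uses hand-written example pairs and nearest-neighbor lookup in place of trained models; the audio features, lip-shape values, and motor values are all invented for illustration and do not come from the paper.

```python
def nearest(pairs, query):
    """Return the value whose key is closest to query (squared Euclidean distance)."""
    return min(
        pairs,
        key=lambda kv: sum((a - b) ** 2 for a, b in zip(kv[0], query)),
    )[1]


# Assumed output of the mirror stage: observed lip shape -> motor command.
self_model = [
    ((0.0, 0.5), (0.0, 0.0)),   # closed mouth, neutral width
    ((0.8, 0.5), (1.0, 0.0)),   # wide open
    ((0.1, 0.2), (0.1, 1.0)),   # puckered
]

# Assumed data from human videos: audio feature -> human lip shape.
human_examples = [
    ((0.05, 0.1), (0.0, 0.5)),  # near silence -> closed
    ((0.9, 0.3), (0.7, 0.5)),   # loud open vowel -> open
    ((0.4, 0.8), (0.1, 0.25)),  # "oo"-like sound -> puckered
]


def audio_to_motors(audio_frame):
    """Chain the two lookups: sound -> human lip shape -> robot motor command."""
    lip_shape = nearest(human_examples, audio_frame)
    return nearest(self_model, lip_shape)


frames = [(0.0, 0.1), (0.85, 0.35), (0.45, 0.75)]
commands = [audio_to_motors(frame) for frame in frames]
print(commands)
```

Note that nothing in this chain involves words or meaning, which matches the article's point that the robot works from sound patterns and visible lip motion alone.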

Using this method, the robot was able to lip sync speech in multiple languages and even match its mouth movements to songs. One demonstration involved the robot performing a song from its own AI-generated debut album, titled “hello world”. Importantly, the robot did not understand the language or the music in a semantic sense. It learned only the relationship between sound patterns and visible lip movements.

The researchers tested the system across a wide range of sounds, languages, and contexts. In many cases, the resulting lip movements were noticeably more natural than those produced by older, rule-based approaches. Human observers were able to recognize the improvement, even though the lip syncing is still not perfect.

Certain sounds remain challenging. The robot struggled with hard consonants like “B”, which require precise lip closure, and puckering sounds like “W”. These limitations are partly due to the complexity of lip dynamics and the mechanical constraints of the artificial face. However, the researchers expect these issues to improve as the robot continues to learn from more data and interactions.

Beyond technical performance, the research highlights the importance of facial movement in human-robot interaction. Humans are surprisingly forgiving of robotic flaws in walking or grasping, but even small errors in facial expression can feel disturbing. This phenomenon is often described as the uncanny valley, where near-human appearances provoke discomfort instead of familiarity. Poor lip motion is a major contributor to this effect.

By enabling robots to learn facial gestures naturally, the researchers believe they are addressing a missing piece in humanoid robotics. Much of the field has focused on legs, arms, and hands, optimizing robots for walking, lifting, and manipulation. Facial expression, however, is just as important for robots meant to interact with people in social environments.

When combined with conversational AI systems, realistic lip syncing could dramatically change how humans perceive robots. A robot that speaks with synchronized facial expressions can appear more attentive, emotionally aware, and responsive. As conversational systems grow more capable and context-aware, facial gestures may also become more nuanced and situation-dependent.

This has important implications for real-world applications. Robots with lifelike faces could play meaningful roles in education, entertainment, healthcare, and elder care. In settings where trust, comfort, and emotional connection matter, facial communication becomes essential. Some economists predict that over a billion humanoid robots could be manufactured in the coming decade, making these design choices increasingly relevant.

At the same time, the researchers acknowledge the risks. As robots become better at forming emotional connections with humans, ethical concerns grow. There is a need to carefully consider how such technology is deployed and how people relate to machines that appear expressive and socially aware. The team emphasizes that progress should be deliberate and responsible.

This work is part of a longer research effort by the Creative Machines Lab to help robots acquire human-like behaviors through learning rather than rigid programming. Smiling, gazing, speaking, and now lip syncing are all being approached as skills that robots can develop by watching and listening to humans.

From a broader perspective, this study also reflects a shift in artificial intelligence and robotics toward self-supervised and observational learning. Instead of telling machines exactly what to do, researchers are increasingly allowing them to explore, observe, and adapt. This approach mirrors how humans and animals learn and may prove essential for creating machines that can function naturally in human environments.

Human faces remain one of the most information-rich interfaces we have. Subtle movements of the lips convey not only words but emotion, intention, and timing. By teaching robots to understand and reproduce these movements, researchers are unlocking a powerful new channel of communication.

While the robot’s lip syncing is not flawless, the progress is clear. The combination of flexible hardware, visual learning, and large-scale observational data marks a significant step toward robots that look and feel less mechanical. As this technology matures, the line between artificial and natural interaction may continue to blur.

Research paper:

https://www.science.org/doi/10.1126/scirobotics.adx3017