Rosetta Stone for Database Inputs Exposes a Serious Security Flaw in Modern AI Systems

Modern search engines, recommendation systems, and AI assistants all rely on a behind-the-scenes technique called embeddings. These embeddings convert text, images, audio, or other data into long lists of numbers that capture meaning rather than exact content. For years, embeddings were widely treated as safe to share, almost like encrypted data. A new research study from Cornell Tech shows that assumption was wrong—and the implications are significant.

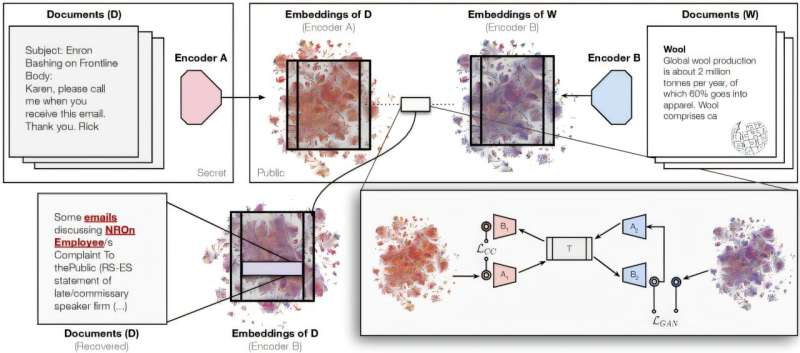

Researchers have developed a new algorithm called vec2vec that can reverse-engineer sensitive information from embedding databases, even when the original model and source data are completely unknown. The work reveals a serious and previously underestimated security and privacy risk in how modern AI systems store and exchange data.

Why embeddings were assumed to be safe

Embeddings are foundational to modern AI. When you search for a product, get a recommendation, or ask an AI chatbot a question, embeddings are what allow the system to compare meaning rather than exact words. Each document or query is transformed into a numerical vector, and similar meanings cluster close together in this vector space.
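To make "close together in vector space" concrete, here is a minimal sketch using cosine similarity, a common way to compare embedding vectors. The three-dimensional vectors are made-up toy values, not output from any real model. Production embeddings typically have hundreds or thousands of dimensions and come from a trained encoder.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy embeddings; a real encoder would compute these from the text.
embeddings = {
    "cheap flights to Paris": [0.82, 0.51, 0.10],
    "low-cost airfare to France": [0.79, 0.56, 0.14],
    "chocolate cake recipe": [0.05, 0.20, 0.97],
}

query = embeddings["cheap flights to Paris"]
for text, vector in embeddings.items():
    # Paraphrases score near 1.0; unrelated text scores much lower.
    print(f"{cosine_similarity(query, vector):.3f}  {text}")
```

The two travel queries share almost no words, yet their toy vectors point in nearly the same direction. That property is what search and recommendation systems rely on.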

Because embeddings are not human-readable and are highly abstract, companies long believed that storing or sharing them posed little risk. In practice, embeddings were often treated as non-sensitive artifacts, separate from the original data. Many organizations shared embedding databases with third parties under the assumption that the underlying information could not be reconstructed.

The Cornell Tech research challenges this belief head-on.

What vec2vec actually does

The vec2vec algorithm can translate a database of embeddings from an unknown model into the embedding space of a known model. Crucially, it does this without access to the original text, without knowing how the embeddings were created, and without paired examples of the same data across models.

Instead, vec2vec relies entirely on the latent geometric structure shared across embedding spaces. The working hypothesis is that models trained on human language tend to converge on similar internal representations of meaning. Vec2vec exploits this shared structure to align one embedding space with another.
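According to the paper, vec2vec trains neural adapters that map each space into a shared latent space, using adversarial and cycle-consistency objectives among others. That is well beyond a short snippet. The toy sketch below illustrates only the underlying principle: if two spaces share the same geometry, the pattern of similarities *within* each space can reveal which vectors correspond, with no paired examples. The brute-force permutation search, the 2-D vectors, and the function names are assumptions made for illustration. This is not how vec2vec works.

```python
import itertools
import math

def unit(angle_deg):
    """2-D unit vector at the given angle: a toy stand-in for an embedding."""
    r = math.radians(angle_deg)
    return (math.cos(r), math.sin(r))

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def similarity_matrix(vectors):
    """Pairwise cosine similarities within ONE space (its internal geometry)."""
    return [[cosine_similarity(u, v) for v in vectors] for u in vectors]

def match_by_geometry(space_a, space_b):
    """For each vector in space_a, guess its counterpart's index in space_b.

    Uses no paired examples: it tries every permutation and keeps the one
    whose similarity matrices agree best. Factorial cost, so toy sizes only.
    """
    sa, sb = similarity_matrix(space_a), similarity_matrix(space_b)
    n = len(space_a)
    best, best_cost = None, float("inf")
    for perm in itertools.permutations(range(n)):
        cost = sum((sa[i][j] - sb[perm[i]][perm[j]]) ** 2
                   for i in range(n) for j in range(n))
        if cost < best_cost:
            best, best_cost = perm, cost
    return list(best)

# Angles chosen so every pairwise similarity differs (no ambiguous matches).
angles = (0, 20, 70, 160)
space_a = [unit(a) for a in angles]  # "unknown model"

# "Known model": the same four concepts, rotated 45 degrees (which preserves
# every cosine similarity) and stored in an order the attacker does not know:
# space_b[k] comes from space_a[hidden[k]].
hidden = [2, 0, 3, 1]
space_b = [unit(angles[i] + 45) for i in hidden]

print(match_by_geometry(space_a, space_b))  # prints [1, 3, 0, 2]
```

The output says vector 0 of the unknown space matches vector 1 of the known space, and so on, which is exactly the hidden reordering. Real embedding spaces are much larger and only approximately share their geometry, one reason a learned mapping is used instead of a search like this.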

The result is something the researchers describe as a Rosetta Stone for embeddings: a universal translation layer that makes it possible to convert embeddings across models and, more importantly, to approximate their original meaning.

From translated embeddings to recovered data

Once embeddings are translated into the space of a known model, they become vulnerable to well-established techniques such as embedding inversion and attribute inference. These methods attempt to reconstruct or infer the original content that produced the embeddings.
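A minimal sketch of one simple form of attribute inference follows. It assumes the attacker already holds a record's embedding translated into the known model's space, and has embedded a list of candidate labels with that same known model. Candidate labels are then ranked by cosine similarity. All vectors and labels are hypothetical toy values, and full text inversion, which generates the original wording, requires a trained model and is not shown.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def infer_attributes(translated, label_embeddings):
    """Rank candidate labels from most to least similar to the embedding."""
    scores = {label: cosine_similarity(translated, vec)
              for label, vec in label_embeddings.items()}
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical label embeddings, as if produced by the attacker's known model.
label_embeddings = {
    "diabetes": [0.90, 0.10, 0.00],
    "bone fracture": [0.00, 0.95, 0.10],
    "alveolar periostitis": [0.10, 0.00, 0.99],
}

# Hypothetical record embedding after translation into the known space.
translated = [0.15, 0.05, 0.90]
print(infer_attributes(translated, label_embeddings))
```

The attacker never sees the record's text; the top-ranked label is the inferred attribute.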

Using vec2vec, the researchers demonstrated that they could recover sensitive information from several real-world datasets:

- Tweets, where the general topics and themes were accurately identified

- Anonymized hospital records, where specific medical conditions could be inferred

- Corporate emails, including those from the now-defunct Enron Corporation, which revealed names, dates, financial details, and even lunch orders

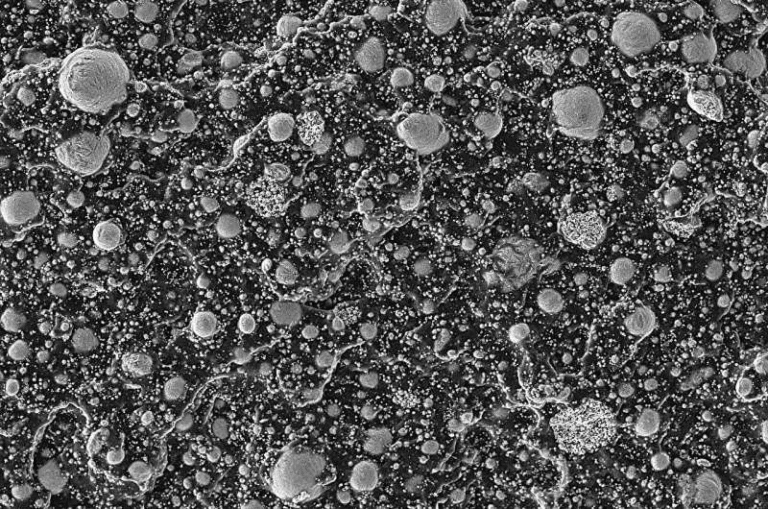

The recovered content was not a perfect word-for-word reconstruction; it contained distortion and noise. However, the outputs consistently preserved the core meaning of the original data. In some cases, the algorithm recovered highly specific details, including medical conditions as specific as alveolar periostitis.

This level of recovery is more than enough to constitute a serious privacy breach.

Why this works across different models

A key insight behind vec2vec is that different AI models—even those developed by separate companies and trained on different datasets—often learn similar conceptual structures. This idea is sometimes referred to as a universal or shared geometry of embeddings.

The researchers argue that this helps explain a common observation: different AI chatbots asked the same question often give strikingly similar answers. Despite architectural differences, the models appear to encode the same human concepts and relationships.

Vec2vec provides experimental support for this idea. By aligning embeddings purely through structural similarities, it shows that meaning is preserved across models far more consistently than previously assumed.
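One way to quantify "meaning is preserved" is a retrieval-style check: for each translated vector, is its most similar vector among the known model's embeddings of the same inputs the correct counterpart? The fraction of hits is called top-1 accuracy. It needs ground-truth pairs, so it is an evaluation tool, not part of an attack. The sketch assumes `translated[i]` and `targets[i]` come from the same input; the vectors are made up.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top1_accuracy(translated, targets):
    """Fraction of translated vectors whose most similar target is the true
    counterpart (targets[i] is assumed to be the ground truth for translated[i])."""
    hits = 0
    for i, vec in enumerate(translated):
        scores = [cosine_similarity(vec, t) for t in targets]
        nearest = max(range(len(targets)), key=scores.__getitem__)
        if nearest == i:
            hits += 1
    return hits / len(translated)

# Toy data: known-model embeddings and their (imperfect) translated versions.
targets = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
translated = [[0.9, 0.2, 0.1], [0.1, 0.8, 0.3], [0.6, 0.1, 0.5]]
print(top1_accuracy(translated, targets))
```

Here the third translated vector lands slightly closer to the wrong target, so two of three pairs are matched and the score is 2/3.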

Why this is a security problem, not just a technical curiosity

The most important takeaway from the research is simple: embeddings should be treated as sensitive data.

Handing over an embedding database to a third party effectively hands over at least part of the original text, images, or records. The long-standing assumption that embeddings function like encrypted data is no longer defensible.

If an attacker gains access to an embedding database, vec2vec makes it possible to extract meaningful information without needing the original model or training process. This dramatically lowers the barrier for exploitation.

Implications for companies and AI developers

This discovery forces a rethink of how vector databases are handled in production systems. Organizations that rely on embeddings for search, personalization, or analytics may need to reconsider several practices:

- Treating embeddings as regulated data, similar to personal or confidential information

- Encrypting embeddings both at rest and in transit

- Restricting access to raw embedding databases

- Limiting embedding sharing across organizational boundaries

The findings are especially relevant for industries dealing with health data, financial records, legal documents, or private communications.

Potential positive applications of vec2vec

While the security implications are serious, the researchers also point out constructive uses for vec2vec.

One promising application is cross-model interoperability. For example, embeddings from an encoder trained on one language could be translated into the space of an encoder for another language, even if no bilingual training data exists. Similarly, embeddings from different data formats, such as text, images, or audio, could potentially be aligned in a shared semantic space.

In theory, this could enable entirely new forms of multimodal AI systems. The team has even speculated, cautiously, about using similar techniques to translate non-human communication—such as whale vocalizations—into human-interpretable representations. For now, that idea remains purely theoretical.

A broader shift in how AI security is understood

The vec2vec research highlights a deeper issue in AI security: opacity is not the same as protection. Just because data is hard to interpret does not mean it is safe.

As AI systems become more interconnected and embeddings are increasingly shared across platforms, this type of vulnerability becomes harder to ignore. The study suggests that the AI community needs to move beyond informal assumptions and adopt more rigorous security models for representation learning.

The bottom line

Vec2vec shows that embeddings are not the harmless numerical abstractions they were once believed to be. They encode meaning deeply enough that sensitive information can be partially recovered—even across different models.

For developers, companies, and policymakers, the message is clear: embeddings deserve the same level of care and protection as the data that created them.

Research paper: https://arxiv.org/abs/2505.12540