Small Architectural Changes Are Making AI Systems More Brain-Like Than Ever

Artificial intelligence research has long been dominated by a simple idea: feed machines enormous amounts of data, train them on massive computing infrastructure, and intelligence will emerge. A new study from Johns Hopkins University, however, suggests that this approach may be overlooking something fundamental. According to the research, architectural design alone can make an AI system's internal activity more closely resemble that of the human brain, even before any training happens.

The findings were published in Nature Machine Intelligence and point toward a shift in how researchers may think about building future AI systems. Instead of relying almost entirely on data and compute power, the study highlights how biologically inspired architectural choices can give AI a major head start.

Why Architecture Matters More Than We Thought

Modern AI development often focuses on training models with millions or billions of examples. This process is expensive, energy-intensive, and slow. Humans, on the other hand, learn to recognize the world with relatively little data. The Johns Hopkins team wanted to understand why.

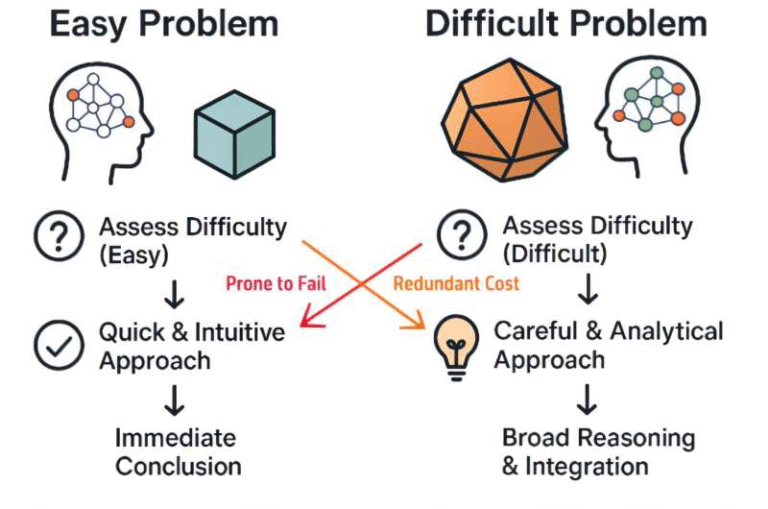

Their central idea was simple but powerful: what if the structure of the network itself already resembles how the brain works? If that is true, an AI system might not need enormous amounts of training to start behaving in brain-like ways.

To test this idea, the researchers studied how different AI architectures respond to visual information before any learning takes place.

The Three AI Architectures Under the Microscope

The research team focused on three widely used types of neural network architectures:

- Transformers, which currently dominate language models and are increasingly used in vision tasks

- Fully connected networks, a more traditional and flexible neural network design

- Convolutional neural networks (CNNs), which are inspired by the organization of the visual cortex and are commonly used in image recognition

Each of these architectures serves as a blueprint rather than a finished model. The researchers created dozens of untrained networks by systematically modifying these blueprints, especially by adjusting the number of artificial neurons and the internal structure of the layers.

Importantly, none of these models were trained on datasets. They were tested in their raw, untrained state.
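To make "untrained" concrete, here is a minimal sketch of a single convolutional layer whose filters are random numbers that are never updated. Everything in it is an illustrative assumption rather than the study's actual setup: the function name `random_conv_layer`, the 8x8 random "image", and the channel counts 4, 16, and 64 are all hypothetical.

```python
import numpy as np

def random_conv_layer(image, n_channels, kernel_size=3, seed=0):
    """Apply one untrained convolutional layer: random filters followed by ReLU."""
    rng = np.random.default_rng(seed)
    # Filters are drawn once at random and never trained.
    filters = rng.standard_normal((n_channels, kernel_size, kernel_size))
    h, w = image.shape
    out_h, out_w = h - kernel_size + 1, w - kernel_size + 1
    out = np.zeros((n_channels, out_h, out_w))
    for c in range(n_channels):
        for i in range(out_h):
            for j in range(out_w):
                patch = image[i:i + kernel_size, j:j + kernel_size]
                out[c, i, j] = np.sum(patch * filters[c])
    return np.maximum(out, 0.0)  # ReLU keeps only positive responses

# Hypothetical stand-in for a grayscale image.
image = np.random.default_rng(1).random((8, 8))

# Vary the number of feature channels while leaving the weights untrained.
features = {n: random_conv_layer(image, n) for n in (4, 16, 64)}
for n, f in features.items():
    print(n, f.shape)
```

Each entry in `features` is the layer's response to the same image at a different width, which is the kind of raw, untrained activity that could then be compared against brain recordings.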

Comparing AI Activity to Real Brains

Once the models were built, the researchers exposed them to images of objects, animals, and people. They then compared the internal activity patterns of these AI systems to recorded brain activity from humans and non-human primates viewing the same images.

The focus was on how closely the internal representations of the AI models matched the patterns seen in the visual cortex, particularly areas responsible for object recognition.
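The article does not say which metric the researchers used for this comparison. One common approach in this area is representational similarity analysis (RSA), sketched below purely as an assumption. The data are random placeholders, not real recordings, and the function names `rdm` and `rsa_score` are hypothetical.

```python
import numpy as np

def rdm(responses):
    """Representational dissimilarity matrix.

    responses has shape (n_stimuli, n_units). Entry (a, b) of the result is
    1 minus the Pearson correlation between the response patterns evoked by
    stimulus a and stimulus b.
    """
    return 1.0 - np.corrcoef(responses)

def rsa_score(model_responses, brain_responses):
    """Correlate the upper triangles of the model RDM and the brain RDM."""
    m, b = rdm(model_responses), rdm(brain_responses)
    upper = np.triu_indices_from(m, k=1)  # skip the diagonal and duplicates
    return np.corrcoef(m[upper], b[upper])[0, 1]

rng = np.random.default_rng(0)
brain = rng.standard_normal((20, 50))      # placeholder: 20 images, 50 recording sites
same_geometry = 2.0 * brain + 3.0          # rescaled copy with identical pairwise geometry
unrelated = rng.standard_normal((20, 30))  # placeholder: unrelated model with 30 units

print(rsa_score(same_geometry, brain))  # close to 1.0
print(rsa_score(unrelated, brain))
```

Because the score compares the pattern of similarities between images rather than individual units, the model and the brain data do not need the same number of units.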

This comparison revealed a striking result.

Convolutional Networks Stand Out

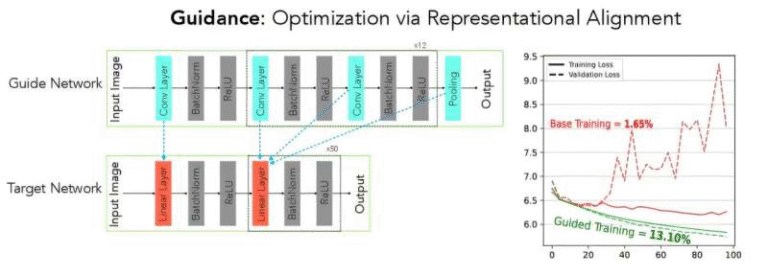

When the researchers modified transformers and fully connected networks by increasing their size, the models showed little improvement in how brain-like their activity patterns were. Simply adding more neurons did not make these systems align better with biological brains.

In contrast, small architectural tweaks to convolutional neural networks made a major difference.

As CNNs were adjusted, particularly in how they handled spatial information and feature channels, their internal activity began to closely resemble patterns seen in the human and primate visual cortex. In some cases, these untrained CNNs rivaled the brain alignment of fully trained AI systems that had been exposed to massive image datasets.

This finding strongly suggests that architecture itself can encode powerful inductive biases, shaping how information is processed even before learning begins.
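One well-known inductive bias of convolution is translation equivariance: shifting the input shifts the output by the same amount, whatever the weights happen to be. The sketch below checks this for a random, untrained filter and contrasts it with a random fully connected layer. It is an illustration under assumptions, not the study's analysis. The helper `circular_conv1d` is hypothetical, and wrap-around padding is used so that border effects do not blur the comparison.

```python
import numpy as np

def circular_conv1d(signal, kernel):
    """Slide the kernel over the signal with wrap-around padding."""
    n, k = len(signal), len(kernel)
    return np.array([
        sum(signal[(i + t) % n] * kernel[t] for t in range(k))
        for i in range(n)
    ])

rng = np.random.default_rng(0)
signal = rng.standard_normal(10)
kernel = rng.standard_normal(3)        # random, untrained convolutional filter
dense = rng.standard_normal((10, 10))  # random, untrained fully connected layer

shift = 2
shifted = np.roll(signal, shift)

# Convolution: processing a shifted input equals shifting the processed output.
conv_equivariant = np.allclose(
    circular_conv1d(shifted, kernel),
    np.roll(circular_conv1d(signal, kernel), shift),
)

# Fully connected layer: no such guarantee holds for arbitrary weights.
dense_equivariant = np.allclose(dense @ shifted, np.roll(dense @ signal, shift))

print(conv_equivariant, dense_equivariant)
```

The convolutional layer has this property by construction, with no learning involved, while the fully connected layer would have to learn it from data.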

A Challenge to Data-First AI Development

One of the most important implications of this study is how it challenges the dominant philosophy of AI development. If massive datasets were the only way to achieve brain-like representations, then untrained models should not perform well in these comparisons.

But the results show otherwise.

By starting with a brain-aligned blueprint, AI systems can begin their learning journey from a much stronger position. This could significantly reduce the amount of data and energy required to train effective models.

In a world where AI training consumes enormous computational resources, this insight could have economic, environmental, and practical consequences.

Learning from Evolution

The researchers argue that biological evolution may already have solved many of the design problems AI engineers are still struggling with. The human brain did not evolve by training on billions of labeled examples. Instead, its structure gradually adapted to efficiently process sensory information.

By borrowing these structural principles, AI designers may be able to build systems that learn faster and generalize better.

This does not mean copying the brain neuron by neuron. Instead, it means identifying the key architectural features—such as hierarchical processing and spatial organization—that make biological intelligence so effective.

What Comes Next for Brain-Inspired AI

The study does not suggest that architecture alone is enough. Training and learning still matter. However, the researchers believe that starting with the right design can dramatically accelerate learning.

The next step for the team is to explore simple, biologically inspired learning rules that could complement these brain-aligned architectures. Rather than relying exclusively on backpropagation and massive datasets, future AI systems may incorporate learning mechanisms closer to those used by biological brains.

This could lead to a new generation of deep learning frameworks that are faster, more efficient, and more adaptable.

Understanding Convolutional Neural Networks in Context

To appreciate why CNNs performed so well in this study, it helps to understand their basic principles. Convolutional networks process visual information by detecting simple features first—such as edges and textures—and gradually building up to more complex representations.

This layered approach closely mirrors how the visual cortex works, moving from basic sensory input to higher-level object recognition. CNNs also preserve spatial relationships, which is critical for understanding images.
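As a small illustration of the "simple features first" idea, the sketch below slides a hand-written vertical-edge filter over a tiny image that is dark on the left and bright on the right. The filter, the image, and the helper `conv2d_valid` are all illustrative assumptions. Like most deep learning libraries, the helper computes a cross-correlation (the kernel is not flipped).

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the kernel over the image without padding."""
    kh, kw = kernel.shape
    out = np.zeros((image.shape[0] - kh + 1, image.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# 5x6 image: columns 0-2 are dark (0), columns 3-5 are bright (1).
image = np.zeros((5, 6))
image[:, 3:] = 1.0

# Responds when the right side of a patch is brighter than the left side.
vertical_edge = np.array([[-1.0, 0.0, 1.0],
                          [-1.0, 0.0, 1.0],
                          [-1.0, 0.0, 1.0]])

response = conv2d_valid(image, vertical_edge)
print(response[0])  # [0. 3. 3. 0.]
```

The response is zero over the flat regions and peaks where the filter straddles the boundary, and its position in the output tells you where in the image the edge sits. That is the sense in which a convolutional layer preserves spatial relationships.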

Transformers, while incredibly powerful for language and sequence processing, do not naturally encode spatial structure in the same way. This may explain why architectural scaling alone did not make them more brain-like in this specific task.
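The point about spatial structure can be checked directly. In the sketch below, a single self-attention layer with random, untrained weights and no positional encoding processes a set of image patches. Shuffling the patches merely shuffles the output rows in the same way, so the layer on its own has no notion of where each patch came from. This is a simplified illustration under assumptions: real vision transformers typically add positional embeddings, and the sizes and names here are hypothetical.

```python
import numpy as np

def self_attention(x, wq, wk, wv):
    """Single-head self-attention over the rows of x, with no positional encoding."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(k.shape[1])
    scores = scores - scores.max(axis=1, keepdims=True)  # for numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)  # softmax over each row
    return weights @ v

rng = np.random.default_rng(0)
n_patches, dim = 6, 4
x = rng.standard_normal((n_patches, dim))  # placeholder patch embeddings
wq, wk, wv = (rng.standard_normal((dim, dim)) for _ in range(3))  # untrained weights

perm = rng.permutation(n_patches)  # scramble the spatial order of the patches
out = self_attention(x, wq, wk, wv)
out_shuffled = self_attention(x[perm], wq, wk, wv)

# The shuffled input yields the same outputs, just in the shuffled order.
print(np.allclose(out[perm], out_shuffled))  # True
```

A convolutional layer behaves differently: scrambling the patches changes which pixels are neighbors and therefore changes what each filter sees.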

Why This Research Matters Beyond Vision

Although this study focused on visual processing, its implications extend beyond image recognition. The idea that architecture can embed useful structure before any learning takes place could influence fields such as robotics, natural language processing, and neuroscience-inspired computing.

For example, robots operating in real-world environments could benefit from architectures that naturally align with how sensory information is processed in animals. Language models might also improve by incorporating structural biases that reflect how humans process meaning and context.

A Subtle but Important Shift in AI Thinking

This research does not claim that AI systems are becoming conscious or truly human-like. Instead, it shows that certain designs naturally produce brain-aligned activity patterns, even in the absence of learning.

That distinction matters. It reframes intelligence not just as a product of data, but as a combination of structure and experience.

As AI continues to evolve, this study serves as a reminder that sometimes, small changes in design can lead to surprisingly big insights.

Research paper:

https://www.nature.com/articles/s42256-025-01142-3