Sony AI Introduces a Large Human-Centric Photo Dataset to Identify and Reduce Bias in Computer Vision

Sony AI has released a new human-centric image dataset, the Fair Human-Centric Image Benchmark (FHIBE), aimed at long-standing concerns about bias in AI systems, particularly computer vision models that interpret images of people. Published in Nature, FHIBE brings together over 10,000 ethically sourced photos, all collected with informed consent, rigorous documentation, and a level of diversity that many existing datasets don’t match. For anyone wondering how datasets affect the way AI “sees” people, the benchmark offers a concrete example of what responsible data collection can look like.

At its core, FHIBE contains 10,318 images featuring 1,981 individuals from 81 different countries and regions. That range alone makes it one of the most globally diverse datasets designed specifically for fairness evaluation. Unlike older datasets—many scraped from the internet without approval—FHIBE is completely consent-driven, privacy-respecting, and deeply annotated with participant-reported attributes. With AI systems increasingly being used in areas like security, health, transportation, and image generation, the demand for more ethical, unbiased, and balanced sources of training and evaluation data has never been higher.

A major problem in the world of computer vision is that models often inherit biases from the data they were trained on. If most images in a dataset contain people of a similar gender presentation, ancestry, or age group, models may end up performing poorly on individuals outside that narrow demographic. More seriously, models may produce harmful stereotypes or incorrect conclusions—something that has already been documented in studies of vision-language models. Sony’s FHIBE sets out not only to highlight where those biases appear, but also to offer the kind of detailed annotations needed to trace bias back to specific attributes.

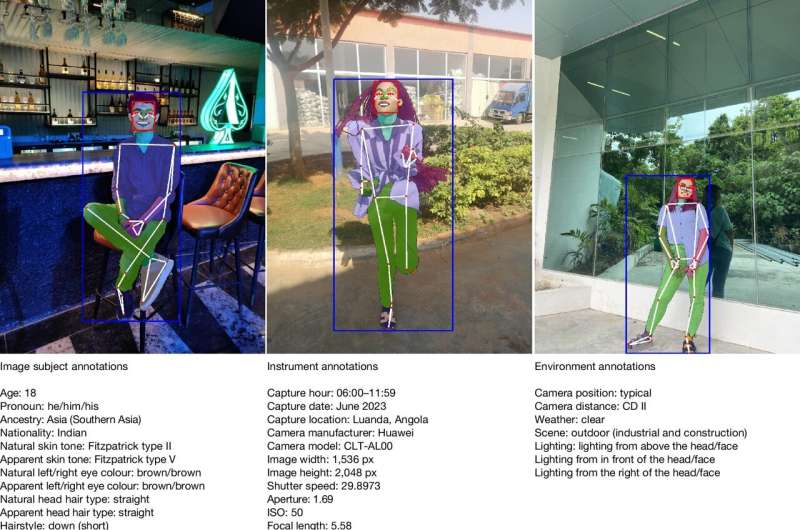

FHIBE does this through a combination of self-reported metadata and structured labeling. Participants provided demographic details such as age range, pronoun category, ancestry, hair type, hair color, and skin tone. There are also environmental and photography-related details, which help researchers understand how background and lighting may influence model behavior. Each photo goes beyond basic tagging, using pixel-level segmentation across 28 categories, 33 keypoints for pose and face landmarks, and bounding boxes for both faces and full bodies. Most datasets don’t come close to this level of granularity.

One of the reasons FHIBE is so valuable is that AI researchers can use its depth to uncover biases that may have gone unnoticed. For instance, early testing with existing AI systems using FHIBE revealed that some models tended to associate certain demographic groups with negative or stereotypical professions or behaviors—even when given neutral prompts. Biases also emerged in seemingly unrelated factors such as hairstyle variability, which was shown to affect accuracy, especially among people who self-identified with she/her/hers pronouns. These discoveries underline the importance of datasets that separate out such attributes rather than treating all people as homogeneous groups.

FHIBE wasn’t easy to build. Collecting thousands of images with ethical sourcing is time-consuming and expensive. Sony AI had to ensure participants understood how their images would be used, outline the risks, and allow withdrawal at any time. Compensation was also handled carefully—participants, annotators, and reviewers were paid fairly, with minimum wage as the baseline. All of this makes FHIBE a rare example of responsible data sourcing done at scale.

At roughly 10,000 images, FHIBE isn’t meant to train large AI systems. Instead, it serves as a benchmark for evaluating fairness, giving developers a structured way to test whether their models treat all demographic groups equitably. Comparing FHIBE with 27 existing datasets, the creators report greater diversity, better representation of often-underrepresented groups, and stronger consent practices. This positions FHIBE as one of the most comprehensive ethically sourced image datasets available today.

FHIBE also sets the stage for a new generation of fairness-focused datasets. For years, the AI field prioritized quantity over quality, pulling millions of unverified images from the web. While vast datasets fueled impressive breakthroughs, they simultaneously introduced harmful blind spots. The industry is now recognizing that smaller but carefully curated datasets can sometimes offer more meaningful insights, especially during evaluation. FHIBE demonstrates how structured, transparent, and respectfully collected data can help rebuild trust in AI systems.

Why Bias in Human-Centric Computer Vision Matters

When AI models misinterpret or misclassify humans, the consequences can be serious. Biases in computer vision have already led to failures in facial recognition, misidentification by security systems, and unequal treatment in seemingly harmless applications like photo editing tools. These systems are often embedded into everyday technologies, meaning an unnoticed bias can end up affecting millions of people.

Bias also damages user trust. If AI systems work well for some users but poorly for others, adoption slows down. FHIBE provides researchers with a way to pinpoint where fairness gaps occur, whether those gaps are linked to skin tone, facial structure, hairstyle, clothing, or environmental conditions.

How FHIBE May Influence Future AI Development

Sony AI plans to keep the dataset updated. If a participant decides they no longer want their images included, those images can be removed and replaced—something almost impossible to do with web-scraped datasets. This living dataset approach allows FHIBE to evolve with shifting cultural expectations and legal standards around privacy.

Researchers can already access the dataset, though registration and agreement to responsible-use guidelines are required. Developers may use FHIBE to evaluate fairness in tasks such as facial detection, body pose recognition, image segmentation, and multimodal (image-plus-text) reasoning. The fine-grained metadata also makes FHIBE a useful tool for studying intersectional fairness—for example, how accuracy differs for combinations of attributes rather than just one at a time.
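To make the idea of intersectional fairness concrete, here is a minimal Python sketch that compares accuracy for single attributes against accuracy for combinations of attributes. It is an illustration only: the record layout, the field names (`skin_tone`, `pronoun`, `correct`), and the toy values are assumptions made for this example and do not reflect FHIBE's actual schema or any published results.

```python
from collections import defaultdict


def accuracy_by_group(records, attributes):
    """Return {group_key: accuracy}, where group_key is a tuple of attribute values."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for rec in records:
        key = tuple(rec[a] for a in attributes)
        totals[key] += 1
        hits[key] += int(rec["correct"])
    return {key: hits[key] / totals[key] for key in totals}


def accuracy_gap(group_accuracy):
    """Difference between the best- and worst-performing groups."""
    values = list(group_accuracy.values())
    return max(values) - min(values)


# Toy per-image results, invented for illustration (hypothetical field names).
records = [
    {"skin_tone": "lighter", "pronoun": "she/her/hers", "correct": True},
    {"skin_tone": "lighter", "pronoun": "she/her/hers", "correct": True},
    {"skin_tone": "lighter", "pronoun": "he/him/his", "correct": True},
    {"skin_tone": "lighter", "pronoun": "he/him/his", "correct": True},
    {"skin_tone": "darker", "pronoun": "she/her/hers", "correct": False},
    {"skin_tone": "darker", "pronoun": "she/her/hers", "correct": True},
    {"skin_tone": "darker", "pronoun": "he/him/his", "correct": True},
    {"skin_tone": "darker", "pronoun": "he/him/his", "correct": True},
]

# One attribute at a time versus a combination of two attributes.
single = accuracy_by_group(records, ["skin_tone"])
intersectional = accuracy_by_group(records, ["skin_tone", "pronoun"])

print("gap by skin tone:", accuracy_gap(single))
print("gap by skin tone x pronoun:", accuracy_gap(intersectional))
```

In this toy data the gap across skin tone alone is smaller than the gap across skin tone and pronoun together, which is the kind of difference that a single-attribute breakdown can hide.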

FHIBE’s release also pushes the AI industry toward better practices. It shows that consent-based, privacy-focused datasets are not only possible but also well suited to fairness analysis. Larger companies and research labs may feel pressure to adopt similar standards, and regulators may point to FHIBE as an example of what responsible data collection looks like.

Additional Insight: How Datasets Shape AI Behavior

To understand why FHIBE matters so much, it helps to know how datasets shape computer vision systems. AI models learn patterns through exposure to large volumes of images. If most training photos represent only certain groups, the model may learn skewed patterns. For example:

- If most faces in a dataset are lighter-skinned, the model may struggle with darker-skinned individuals.

- If body pose data mostly shows certain clothing styles or body types, the model may misinterpret or fail to detect others.

- If annotations lack specificity, the model cannot distinguish between different demographic attributes, causing bias to hide beneath broad categories.
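The skew described in the list above can be checked before any model is trained. The following is a minimal sketch of a representation audit in Python. The attribute name `skin_tone`, the toy records, and the 25% threshold are all assumptions chosen for illustration, not values taken from FHIBE or the paper.

```python
from collections import Counter


def representation_shares(records, attribute):
    """Return {attribute_value: fraction of records carrying that value}."""
    counts = Counter(rec[attribute] for rec in records)
    total = sum(counts.values())
    return {value: count / total for value, count in counts.items()}


def underrepresented(shares, min_share):
    """List attribute values whose share falls below min_share (a chosen threshold)."""
    return sorted(value for value, share in shares.items() if share < min_share)


# Toy dataset, invented for illustration: 8 lighter-skinned and 2 darker-skinned subjects.
toy = [{"skin_tone": "lighter"}] * 8 + [{"skin_tone": "darker"}] * 2

shares = representation_shares(toy, "skin_tone")
flagged = underrepresented(shares, min_share=0.25)

print(shares)
print("below threshold:", flagged)
```

A check like this only works when the annotations are specific enough to group by, which is why detailed, self-reported attributes matter for this kind of analysis.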

Better data leads to better AI. FHIBE’s detailed annotations make it possible not only to measure fairness, but to understand the root causes behind unfair outputs.

Additional Insight: The Growing Role of Ethical AI Benchmarks

As AI becomes more intertwined with daily life, ethical benchmarks like FHIBE will play a crucial role in development. Instead of testing AI only for accuracy, benchmarks now evaluate fairness, robustness, transparency, and impact on society. FHIBE signals a shift from performance-driven AI toward responsibility-driven AI, aligning with global discussions on AI governance.

Research Paper:

Fair human-centric image dataset for ethical AI benchmarking (Nature, 2025)

https://doi.org/10.1038/s41586-025-09716-2