USC Researchers Create Artificial Neurons That Closely Mirror How the Human Brain Works

Researchers at the University of Southern California have unveiled a breakthrough that pushes neuromorphic computing further toward truly brain-like hardware. A team from the USC Viterbi School of Engineering and the School of Advanced Computing has designed artificial neurons that replicate the electrochemical behavior of biological neurons with impressive fidelity. What makes this especially exciting is that these neurons don’t just simulate brain activity mathematically—they physically emulate it using ion movement, much like real neurons do.

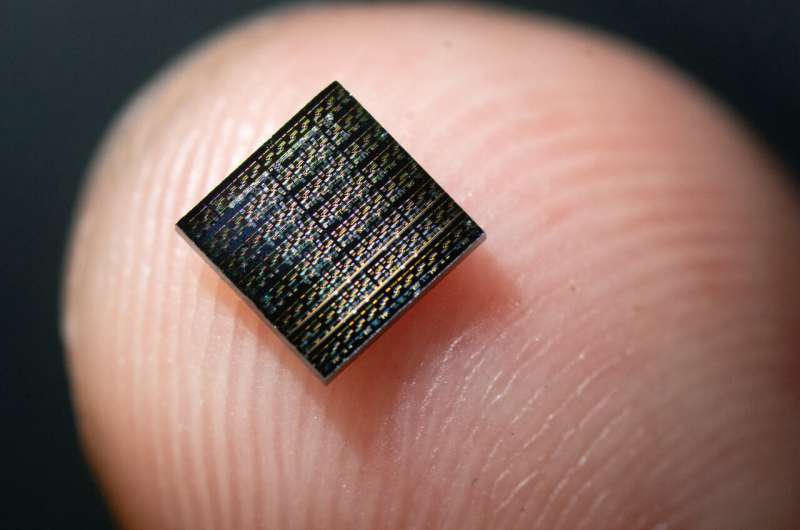

Their work, published in Nature Electronics, introduces a compact artificial neuron built from one diffusive memristor, one transistor, and one resistor. This simple three-component structure occupies the footprint of a single transistor, a massive reduction from the tens or hundreds of transistors used in conventional silicon-based neuromorphic neuron designs. Because of this, the new system can theoretically shrink chip size by orders of magnitude while cutting energy usage by similar margins.

How These Artificial Neurons Work

Biological neurons rely on a mix of electrical and chemical processes. Electrical signals travel along a neuron until they reach a synapse, where they’re converted into chemical signals. These chemicals then trigger electrical responses in the next neuron, creating the dynamic interplay that powers memory, movement, learning, and thought.

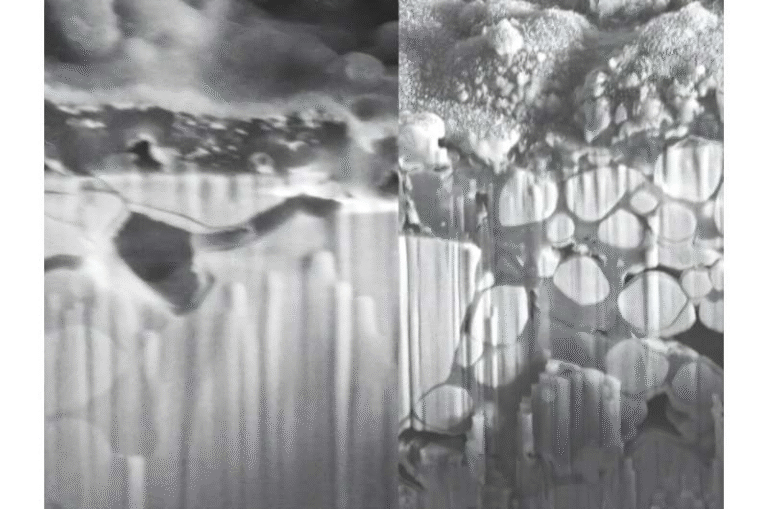

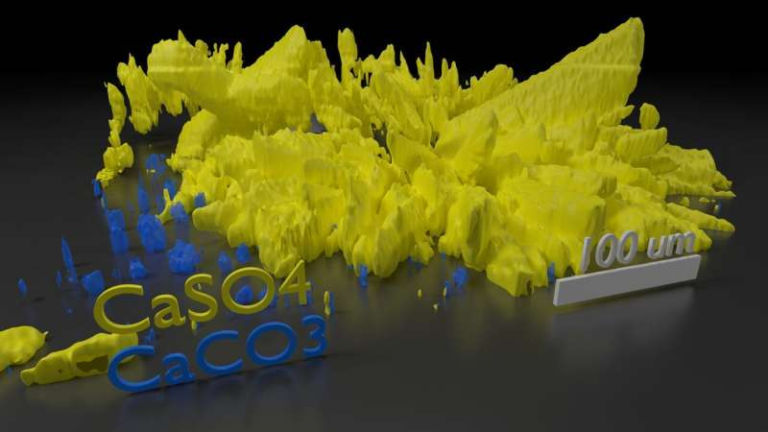

The USC team set out to replicate this process using hardware. Their neuron leverages ion dynamics, specifically silver ions moving through an oxide layer inside the diffusive memristor. This mirrors how ions like potassium, sodium, and calcium produce electrical activity in real neurons. The physics aren’t identical—brains use biological ions while the hardware uses silver—but the mechanisms are remarkably similar, and that’s the key. Because ions move and interact differently from electrons, they can embody brain-like behavior directly in hardware.

The diffusive memristor is especially important. It’s called “diffusive” because the silver ions inside it do not stay put: they drift into a conductive path under an applied voltage and diffuse away again once that voltage is removed. This volatility allows the device to naturally recreate neuron-like features such as spiking, threshold activation, and short-term memory effects. These aren’t coded in software; they emerge from the device’s physical behavior.

One major benefit is energy efficiency. Biological neurons are remarkably power-efficient, and this hardware aims to mimic that. Each spike generated by the memristor-based neuron requires very little energy, far less than a digital simulation of the same spike would need. For a world that increasingly relies on large AI systems consuming enormous amounts of electricity, this type of architecture could be transformative.

Why Ions Matter More Than Electrons

Today’s computing systems, including the chips powering artificial intelligence, are built around electrons. Electrons are fast, which is great for throughput, but they’re also volatile, which leads to a computational style that differs fundamentally from biology: learning is simulated in software rather than embedded in the hardware, and that requires huge amounts of memory and power.

In contrast, biological systems use ions to represent and store information in the physical structure of neurons and synapses. That means the learning is hardware-embedded, not software-simulated.

The USC researchers argue that ions are a better match for enabling true hardware intelligence, because their motion naturally reflects the mechanisms of learning and adaptation found in the brain. This difference may help close the gap between artificial systems and natural intelligence.

For instance, a child can learn to recognize handwritten digits after seeing only a handful of examples. A conventional machine-learning model would need thousands or tens of thousands of samples to reach similar accuracy. And while the brain uses roughly 20 watts, modern supercomputers need megawatts to run large models.
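To put the power gap described above in rough numbers, here is a back-of-envelope sketch. The 20 W figure comes from the text; the 1 MW value is only an assumed stand-in for "megawatts", and the function names are illustrative.

```python
# Back-of-envelope power comparison.
BRAIN_POWER_W = 20.0                 # figure quoted in the text
SUPERCOMPUTER_POWER_W = 1_000_000.0  # assumption: 1 MW as a stand-in for "megawatts"


def power_ratio(machine_w: float, brain_w: float = BRAIN_POWER_W) -> float:
    """Return how many times more power the machine draws than the brain."""
    return machine_w / brain_w


def daily_energy_kwh(power_w: float) -> float:
    """Return the energy used over 24 hours, in kilowatt-hours."""
    return power_w * 24 / 1000


print(power_ratio(SUPERCOMPUTER_POWER_W))  # 50000.0
print(daily_energy_kwh(BRAIN_POWER_W))     # 0.48
```

Under that assumption, a single megawatt is already 50,000 times the brain's power budget, which is the gap that low-energy spiking hardware is meant to narrow.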

By incorporating ion movement into the computation process, these new artificial neurons could support adaptive, low-power, hardware-level learning—a significant step toward more brain-like machines.

Physical Design and Materials Used

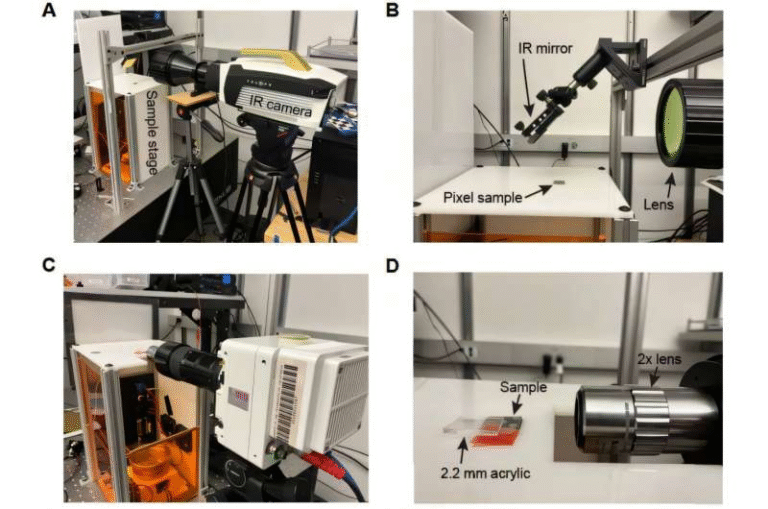

Each artificial neuron fits into a space of approximately 4 μm², which is extremely compact. The membranes, switching layers, and resistive elements are integrated using existing nano-fabrication techniques in a cleanroom environment.
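As a rough illustration of what a 4 μm² footprint implies, the sketch below converts footprint into an upper bound on neurons per square millimeter. It ignores wiring, synapses, and peripheral circuitry, so real densities would be lower. The comparison case, a 100-transistor neuron where each transistor takes the same 4 μm², is an assumption chosen for illustration, not a figure from the paper.

```python
UM2_PER_MM2 = 1_000_000  # 1 mm = 1000 um, so 1 mm^2 = 10^6 um^2


def neurons_per_mm2(footprint_um2: float) -> float:
    """Upper bound on neurons per mm^2 for a given per-neuron footprint."""
    if footprint_um2 <= 0:
        raise ValueError("footprint must be positive")
    return UM2_PER_MM2 / footprint_um2


# Footprint quoted in the text: about 4 um^2 per neuron.
print(neurons_per_mm2(4.0))        # 250000.0

# Assumed comparison: 100 transistors per neuron at 4 um^2 each.
print(neurons_per_mm2(4.0 * 100))  # 2500.0
```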

The device uses silver ions dispersed within an oxide matrix to generate electrical pulsing that mimics real neuronal activity. While silver works well for these experimental setups, it isn’t fully compatible with current semiconductor manufacturing methods. This means future versions may use alternative ion-based materials that can scale more easily in an industrial setting.

The diffusive memristor’s volatile behavior is central to reproducing key neural functions such as:

- Leaky integration

- Threshold-based spiking

- Signal propagation to neighboring artificial neurons

- Adaptive changes in firing behavior

- Refractory periods

- Stochastic firing patterns

These are the same behaviors essential in biological neural circuits.
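Several of the behaviors listed above can be illustrated with a textbook leaky integrate-and-fire model. The sketch below is a software abstraction, not the USC device or its circuit equations: the function name, parameters, and default values are all assumptions chosen for readability. It covers leaky integration, threshold-based spiking, refractory periods, and optional stochastic firing. Propagation to neighboring neurons and adaptive firing are left out.

```python
import random


def simulate_lif(inputs, leak=0.9, threshold=1.0, refractory_steps=2,
                 noise_std=0.0, seed=0):
    """Return a list of 0/1 spikes, one per input sample.

    All parameters are illustrative; none are taken from the paper.
    """
    rng = random.Random(seed)
    v = 0.0       # membrane-like state variable
    cooldown = 0  # remaining refractory steps
    spikes = []
    for x in inputs:
        if cooldown > 0:
            # Refractory period: ignore input and stay silent.
            cooldown -= 1
            v = 0.0
            spikes.append(0)
            continue
        # Leaky integration: the old state decays, the new input adds on.
        v = leak * v + x
        # Stochastic firing: jitter the threshold when noise is enabled.
        jitter = rng.gauss(0.0, noise_std) if noise_std > 0 else 0.0
        if v >= threshold + jitter:
            spikes.append(1)
            v = 0.0                      # reset after the spike
            cooldown = refractory_steps  # start the refractory period
        else:
            spikes.append(0)
    return spikes


print(simulate_lif([0.4] * 10))  # [0, 0, 1, 0, 0, 0, 0, 1, 0, 0]
```

With a constant input of 0.4, the state needs three steps to cross the threshold, fires, and then stays silent for two steps before integrating again. A much weaker input, such as 0.05, never fires, because the leak caps the state at 0.05 / (1 - 0.9) = 0.5. In the hardware described here, the article attributes these effects to ion motion in the memristor rather than to explicit code.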

What This Breakthrough Means for Neuromorphic Computing

Neuromorphic computing seeks to design hardware that functions more like the brain than like traditional CPUs or GPUs. Silicon chips operate with binary on–off switching, while the brain works through non-linear pulse patterns and electrochemical interactions.

By reducing artificial neuron size to a single-transistor footprint and enabling ion-based dynamics, the USC team lays the groundwork for systems that:

- Use far less power

- Pack more neurons per chip

- Support hardware-level learning

- Operate more like actual biological neural networks

This could eventually enable forms of Artificial General Intelligence (AGI) that aren’t feasible with current architectures. Instead of forcing software models to imitate the brain on top of digital hardware, the hardware itself would behave more like the brain.

The team’s next step is to integrate large numbers of these neurons along with artificial synapses to see how closely an entire system can match the brain’s efficiency and computational style.

Challenges and Next Steps

While promising, several issues must be addressed:

- Material compatibility: Silver is not industry-friendly for large-scale fabrication. Replacement ionic species are needed.

- Reliability: Ion-based devices can suffer from physical degradation or variability over time.

- Scalability: Building a fully functional brain-scale network requires coordination of millions or billions of these units.

- Learning mechanisms: While the hardware supports intrinsic plasticity, integrating complex learning algorithms remains an open challenge.

Despite these hurdles, this research defines a strong pathway toward next-generation neuromorphic hardware.

Understanding Memristors and Ion-Based Computing

To expand on the science behind this, it helps to know where memristors fit into the computing world. A memristor is essentially a resistor with memory: its resistance depends on the history of the voltage and current applied to it. This makes memristors well suited to representing synaptic weights in neuromorphic systems.

A diffusive memristor is a special type where mobile ions create temporary conductive pathways, producing volatility similar to short-term memory in neurons. This allows the device to spike, decay, and respond dynamically to input pulses.

These behaviors are fundamental to how biological systems compute. By stacking a diffusive memristor on top of a transistor and pairing it with a resistor, the USC team created a compact neuron-like device that captures both the transient and threshold-based characteristics needed for spiking activity.
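The volatility described in this section can be sketched with a toy state model. This is a simplified illustration under stated assumptions, not the device physics from the paper: the single state variable `w` (0 = no conductive path, 1 = fully formed path), the switching threshold `v_on`, the growth rate, and the decay time constant are all hypothetical.

```python
import math


def filament_trace(voltages, dt=1.0, v_on=0.5, grow_rate=0.5, tau_decay=3.0):
    """Track a conductive-path state w in [0, 1] over a voltage sequence.

    Toy model of a volatile (diffusive) memristor; all parameters are
    illustrative assumptions.
    """
    w = 0.0
    trace = []
    for v in voltages:
        if abs(v) >= v_on:
            # Above threshold: ions drift and the path grows toward 1.
            w = min(1.0, w + grow_rate * (1.0 - w) * dt)
        else:
            # Below threshold: ions diffuse away and the state relaxes to 0.
            w *= math.exp(-dt / tau_decay)
        trace.append(w)
    return trace


# Three input pulses followed by six steps with no drive.
trace = filament_trace([1.0] * 3 + [0.0] * 6)
print([round(w, 3) for w in trace])
```

The state builds up while pulses arrive and fades once they stop, which is the short-term-memory effect the text describes. A non-volatile memristor, the kind used for synaptic weights, would correspond to a very large `tau_decay`, so the state would persist instead of fading.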

The Bigger Picture: Why Brain-Like Hardware Matters

As AI models grow, they demand more energy and hardware resources. The world is already hitting limits in power consumption and chip miniaturization. Biological systems, on the other hand, show that advanced intelligence can operate at tiny scales with astonishing efficiency.

Hardware that mirrors the brain could support:

- Real-time learning without cloud-based processing

- Ultra-low-power devices and edge AI

- More human-like adaptability in robotics

- Scalable artificial intelligence with sustainable energy use

This research demonstrates that we’re getting closer to unlocking those possibilities.

Research Paper Link:

https://www.nature.com/articles/s41928-025-01488-x