Video Conferencing Apps May Be Exposing Your Location Through Audio Without You Realizing It

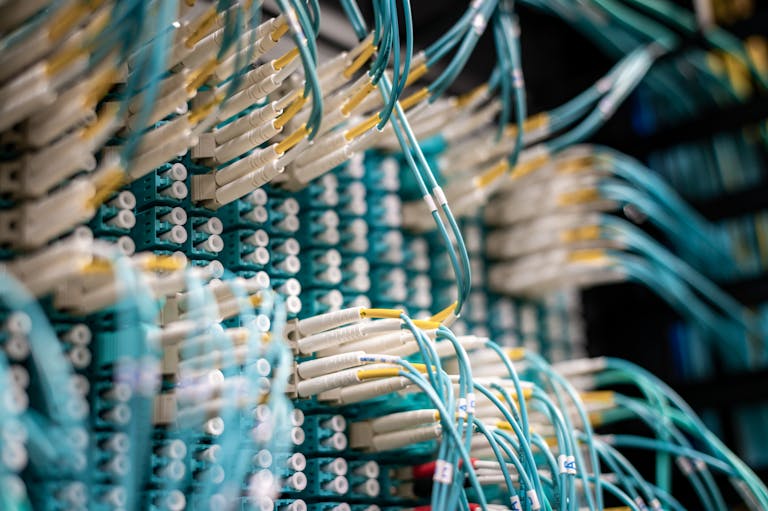

Video conferencing has become so normal in our everyday lives that most of us don’t even think twice before joining a call. We mute ourselves, turn off the camera when needed, pick a virtual background, and feel reasonably safe. But a new study from Southern Methodist University (SMU) shows that these familiar apps—such as Zoom, Microsoft Teams, and others—may be leaking far more about our environment than we ever expected. And the surprising part is that this exposure doesn’t come from the camera. It comes from the audio channel.

This article breaks down what the researchers discovered, how the attacks work, why the issue matters, and where the findings sit within the broader field of acoustic privacy and sensing technologies.

How Researchers Discovered Hidden Location Leaks in Video Calls

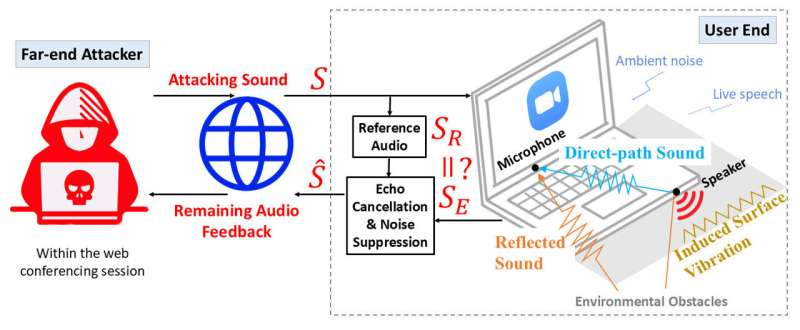

The SMU researchers investigated how audio moves through a normal video conferencing session. What they discovered is that an attacker can send short, specially designed sounds through the call and then analyze how those sounds echo back from the victim’s environment. This method—called remote acoustic sensing—lets the attacker infer the user’s physical surroundings with striking accuracy.

Using this approach, the researchers identified a user’s location or location context with about 88% accuracy, whether the user was calling from a place they had used repeatedly or from one they had never been in before. These results came from six months of experiments across 12 different types of locations, including offices, homes, hotel rooms, and even the inside of vehicles.

What makes this alarming is that the attack works even when the camera is turned off and even when a virtual background is used, because the camera isn’t the source of the leak at all. The attacker only needs access to the call like any other participant—no malware, no hacking into devices, no installation of anything.

Why Muting and “Being Careful” Isn’t Enough

Most people assume they’re safe if they keep their microphone muted and only unmute when speaking. But the researchers found that this natural behavior still gives an attacker enough opportunity to gather data.

Here’s why:

- Users typically unmute a second or two before speaking and mute a second or two after speaking.

- During this short window, low-energy probing sounds (sometimes only 100 milliseconds long) can be sent and analyzed.

- When a user starts speaking, the echo cancellation systems in conferencing apps suppress audio less aggressively. As a result, any malicious sound bouncing back from the room returns with higher energy, making it easier for the attacker to extract information.

So your own voice unintentionally boosts the strength of the attacker’s probing signal, helping them read your environment even better.
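
To give a sense of how small such a probe is, here is a minimal sketch of a 100 millisecond low-level test tone. The article does not describe the signal design used in the study, so everything here is an assumption for illustration: the 16 kHz sample rate, the 2 to 6 kHz sweep, the peak level, and the helper names `make_probe` and `rms_dbfs` are all hypothetical stand-ins.

```python
import math

SAMPLE_RATE = 16_000  # Hz; assumed value, common for speech audio


def make_probe(duration_s=0.1, f_start=2_000.0, f_end=6_000.0, peak=0.05):
    """Return a Hann-windowed linear chirp as a list of floats.

    All parameter defaults are illustrative assumptions, not values
    taken from the study.
    """
    n = int(round(duration_s * SAMPLE_RATE))
    sweep_rate = (f_end - f_start) / duration_s  # Hz per second
    samples = []
    for i in range(n):
        t = i / SAMPLE_RATE
        # Phase of a linear chirp: 2*pi * (f0*t + 0.5*k*t^2)
        phase = 2 * math.pi * (f_start * t + 0.5 * sweep_rate * t * t)
        # Hann window fades the tone in and out to avoid audible clicks
        window = 0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1))
        samples.append(peak * window * math.sin(phase))
    return samples


def rms_dbfs(samples):
    """Root-mean-square level relative to full scale (1.0), in decibels."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms)


probe = make_probe()
print(len(probe), "samples at", round(rms_dbfs(probe), 1), "dBFS")
```

At the assumed sample rate, a 100 millisecond probe is only 1,600 samples, which fits comfortably inside the one or two seconds a user typically stays unmuted around speech.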

Two Types of Echo-Based Attacks Identified

The researchers identified two distinct attack methods that can be used to slip past the built-in protections of video conferencing platforms:

1. In-Channel Echo Attack

This method injects carefully engineered acoustic signals into the call. These signals are specifically designed to bypass echo-cancellation algorithms. They may be barely noticeable or completely inaudible to the human ear, but they are highly effective at capturing the echo profile of a room.

2. Off-Channel Echo Attack

This technique doesn’t use suspicious custom sounds at all. Instead, it hijacks natural, everyday noises—like a notification ding, the sound of a new email, or any benign short tone. Because these sounds are normal, they easily slip past any security filters.

Both attacks let the attacker build a kind of location fingerprint that reveals whether the user is at home, at the office, in a hotel, or somewhere else. In some contexts, the acoustic signature could reveal even more detailed information about the environment.

Why This Threat Is Particularly Serious

There are a few key reasons why this research stands out compared to typical cybersecurity concerns:

- It requires no malware, meaning nothing has to be installed on the victim’s device.

- Any participant in the call can launch the attack, even in a large meeting with many people.

- The signals used can be extremely short, so most victims won’t notice a thing.

- Built-in conferencing protections can end up helping the attacker, because echo suppression relaxes while the user is speaking and the malicious echo comes back more clearly during that time.

Given how widespread remote work has become, this is a meaningful privacy problem. Location context can reveal patterns—such as when someone is at home, traveling, working from a sensitive site, or attending meetings from the same unknown location repeatedly. All of that could be useful to a thief, stalker, or other malicious actor.

The Role of Generative AI in These Attacks

One technical detail worth noting is that the researchers used generative AI encoders to counteract the effects of echo suppression. Video conferencing systems aggressively remove background noise and minimize feedback to create clean audio. This should theoretically destroy the data an attacker needs—but the study shows that new AI models can reconstruct or extract stable location embeddings even from heavily suppressed signals.

This combination of generative modeling and acoustic sensing is what makes the attack both modern and effective.
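
As a rough illustration of what a "location embedding" is good for, the sketch below matches one observed vector against a few labeled reference vectors using cosine similarity. This is not the researchers' model: the encoder is omitted entirely, the four-dimensional vectors are made up, and the function names `cosine_similarity` and `closest_label` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def closest_label(embedding, references):
    """Return (label, similarity) for the reference most similar to embedding."""
    scored = (
        (label, cosine_similarity(embedding, ref))
        for label, ref in references.items()
    )
    return max(scored, key=lambda pair: pair[1])


# Made-up 4-dimensional embeddings; a real encoder would emit many more
# dimensions, and these numbers carry no physical meaning.
references = {
    "home office": [0.9, 0.1, 0.3, 0.0],
    "hotel room": [0.1, 0.8, 0.2, 0.4],
    "car": [0.0, 0.2, 0.1, 0.9],
}
observed = [0.8, 0.2, 0.35, 0.05]

label, similarity = closest_label(observed, references)
print(label, round(similarity, 3))
```

The point of a stable embedding is that recordings from the same kind of place land close together under a measure like this, even after the conferencing software has processed the audio.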

What Can Be Done to Reduce These Risks?

Right now, there isn’t much a user can do on their own to fully prevent these attacks because the problem exists at the software infrastructure level. However, the SMU researchers are working on server-side defense algorithms that conferencing platforms could adopt.

These include:

- Detecting suspicious probing signals before they reach users.

- Automatically filtering out audio patterns that match known malicious signatures.

- Developing smarter echo-suppression models that cannot be easily reverse-engineered.

- Introducing optional hardened security modes for sensitive meetings.
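
One simple way to picture the "known malicious signatures" idea is a matched filter: slide a known signature along the audio and flag the stream if any window correlates strongly with it. The sketch below is an assumption-laden toy, not the defense the researchers are building. The signature shape, the 0.8 threshold, and the function names `normalized_correlation_peak` and `looks_like_probe` are all hypothetical.

```python
import math
import random


def normalized_correlation_peak(audio, signature):
    """Highest normalized cross-correlation between signature and any window."""
    n = len(signature)
    sig_norm = math.sqrt(sum(s * s for s in signature))
    best = 0.0
    for start in range(len(audio) - n + 1):
        window = audio[start:start + n]
        win_norm = math.sqrt(sum(w * w for w in window))
        if sig_norm == 0.0 or win_norm == 0.0:
            continue  # silent window or empty signature: nothing to compare
        dot = sum(s * w for s, w in zip(signature, window))
        best = max(best, dot / (sig_norm * win_norm))
    return best


def looks_like_probe(audio, signature, threshold=0.8):
    """Flag audio whose best match to the signature meets the threshold."""
    return normalized_correlation_peak(audio, signature) >= threshold


# Hypothetical signature: a 10 ms Hann-windowed 3 kHz tone at 16 kHz.
signature = [
    (0.5 - 0.5 * math.cos(2 * math.pi * i / 159))
    * math.sin(2 * math.pi * 3_000 * i / 16_000)
    for i in range(160)
]

rng = random.Random(0)
clean = [rng.gauss(0.0, 0.01) for _ in range(1_000)]  # faint background noise
probed = list(clean)
for i, s in enumerate(signature):
    probed[400 + i] += 0.5 * s  # bury a scaled copy of the signature

print(looks_like_probe(clean, signature), looks_like_probe(probed, signature))
```

Normalizing by the energy of each window makes the score independent of volume, so a quiet probe is flagged as readily as a loud one. A production system would also have to cope with codec distortion and with signatures it has never seen, which this sketch does not attempt.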

Until such protections become widely adopted, the best practical advice is simply to be aware of who is in your meetings and avoid untrusted participants when possible.

Understanding Acoustic Sensing: A Bit of Background

Since the research centers on remote acoustic sensing, here’s a quick overview of the broader field to give more context.

Acoustic sensing works by analyzing how sound waves behave in an environment:

- Echo delay can reveal distance to walls.

- Reverberation patterns can describe room size and material types.

- Frequency absorption can hint at objects present (soft fabrics absorb more high frequencies, for example).

- Unique echo signatures act almost like a fingerprint for a location.
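
The first item, echo delay, is the easiest to make concrete. Sound travels to a wall and back, so the distance is the speed of sound times the delay, divided by two. The sketch below simulates a single echo in a toy recording and recovers the delay with a plain cross-correlation. The 16 kHz sample rate, the chirp, the noise level, and the helper names are assumptions for illustration. A real call adds network latency, codec processing, and many overlapping reflections that this ignores.

```python
import math
import random

SAMPLE_RATE = 16_000    # Hz; assumed
SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees C


def chirp(n, f_start=2_000.0, f_end=6_000.0):
    """Hann-windowed linear chirp of n samples (illustrative probe)."""
    duration = n / SAMPLE_RATE
    rate = (f_end - f_start) / duration
    out = []
    for i in range(n):
        t = i / SAMPLE_RATE
        phase = 2 * math.pi * (f_start * t + 0.5 * rate * t * t)
        window = 0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1))
        out.append(window * math.sin(phase))
    return out


def simulate_echo(probe, delay_samples, gain=0.3, total=1_000, seed=0):
    """Toy recording: one attenuated echo plus faint Gaussian noise."""
    rng = random.Random(seed)
    recording = [rng.gauss(0.0, 0.01) for _ in range(total)]
    for i, p in enumerate(probe):
        recording[delay_samples + i] += gain * p
    return recording


def estimate_delay(probe, recording):
    """Return the lag, in samples, where the probe best matches the recording."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(len(recording) - len(probe) + 1):
        score = sum(p * recording[lag + i] for i, p in enumerate(probe))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


probe = chirp(160)                     # 10 ms probe
recording = simulate_echo(probe, 280)  # echo placed 280 samples after emission
lag = estimate_delay(probe, recording)
# Halve the travel time because the sound goes to the wall and back.
distance_m = SPEED_OF_SOUND * (lag / SAMPLE_RATE) / 2
print(lag, "samples ->", round(distance_m, 2), "m")
```

With these assumed numbers, a 280-sample delay corresponds to 17.5 milliseconds of travel time, or a reflecting surface roughly 3 meters away.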

Acoustic sensing is used in many fields: robotics, wildlife monitoring, structural engineering, and even health diagnostics. But this study highlights how these same techniques can be misused when applied covertly in everyday software systems.

Knowing how powerful these methods are helps explain why video conferencing apps need stronger safeguards.

What This Means for the Future of Remote Communication

The findings raise important questions about the next generation of communication tools. As AI becomes better at interpreting tiny signals, more hidden data might be extractable from what appear to be harmless channels.

Future remote work platforms may need to consider:

- Audio anonymization, similar to image blurring.

- Randomized echo masking to disrupt room-signature extraction.

- Better monitoring of passive audio patterns.

- Clearer user-side indicators when unusual audio activity occurs.
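
To make the "randomized echo masking" idea less abstract, here is one possible reading of it: mix a few synthetic reflections with random delays and gains into the outgoing audio so the real room response is harder to isolate. This is a speculative sketch, not a vetted defense. The function name `add_random_echoes` and every parameter default are hypothetical, and whether such masking would defeat a learned model is untested here.

```python
import random


def add_random_echoes(samples, sample_rate=16_000, n_echoes=3,
                      max_delay_s=0.03, max_gain=0.3, rng=None):
    """Return a copy of samples with n_echoes synthetic reflections mixed in.

    Each reflection is a delayed, attenuated copy of the input, with the
    delay and gain drawn at random.
    """
    rng = rng or random.Random()
    out = list(samples)
    max_delay = int(max_delay_s * sample_rate)
    for _ in range(n_echoes):
        delay = rng.randint(1, max_delay)
        gain = rng.uniform(0.05, max_gain)
        for i in range(delay, len(samples)):
            out[i] += gain * samples[i - delay]
    return out


# Feed in a single click to see which artificial reflections were added.
impulse = [1.0] + [0.0] * 999
masked_a = add_random_echoes(impulse, rng=random.Random(1))
masked_b = add_random_echoes(impulse, rng=random.Random(2))

taps_a = [(i, round(v, 3)) for i, v in enumerate(masked_a) if v != 0.0]
print(taps_a)
```

Because the delays and gains change from one call (or one audio frame) to the next, two recordings from the same room would no longer share the same echo pattern. The trade-off is audio quality, since added reflections can make speech sound more reverberant.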

Privacy expectations will likely evolve as people learn that microphones aren’t just for capturing voices—they can also map spaces.

Research Paper

Sniffing Location Privacy of Video Conference Users Using Free Audio Channels

https://doi.org/10.1109/sp61157.2025.00260