Yale Researchers Develop Scalable Brain-Like Chips That Could Change the Future of Neuromorphic Computing

Researchers at Yale University have taken an important step toward solving one of the biggest challenges in neuromorphic computing: how to build brain-inspired chips that can scale up efficiently while still producing repeatable, deterministic results. Their work introduces a new hardware architecture called NeuroScale, designed to overcome limitations that have slowed progress in large-scale neuromorphic systems for years.

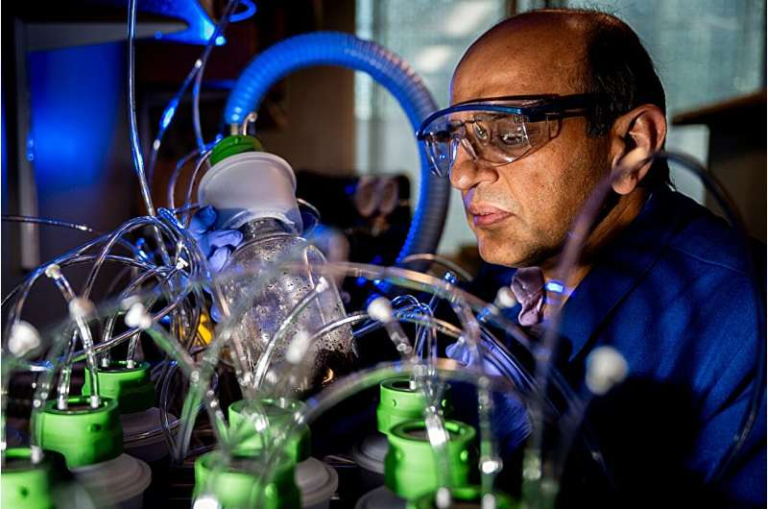

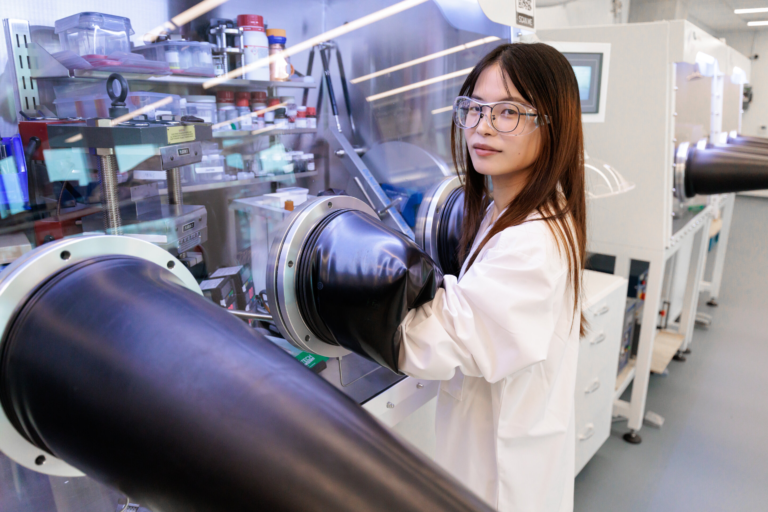

The research, spearheaded by Ph.D. candidate Congyang Li under the leadership of Professor Rajit Manohar at Yale, was published in Nature Communications in 2025. It focuses on rethinking how artificial neurons and synapses are synchronized across large computing systems that aim to mimic the way the human brain processes information.

What Neuromorphic Chips Are and Why They Matter

Neuromorphic chips are custom integrated circuits designed to imitate how biological brains work. Instead of advancing every circuit on each tick of a continuously running clock, as traditional processors do, these chips use spiking neurons that fire only when their inputs call for it. This event-driven approach allows neuromorphic systems to operate with significantly lower energy consumption, especially when handling sparse or distributed workloads.

Because of these advantages, neuromorphic hardware is being explored for applications in artificial intelligence, robotics, neuroscience research, and distributed computing. When interconnected, neuromorphic chips can form systems with hundreds of millions or even billions of artificial neurons, pushing closer to brain-scale computing.

However, despite their promise, neuromorphic systems face a fundamental technical obstacle: synchronization.

The Synchronization Problem That Limits Scaling

For neuromorphic systems to produce consistent and repeatable results, their neurons and synapses must be synchronized in time. Most existing designs rely on a global synchronization mechanism, often referred to as a global barrier. This barrier ensures that all parts of the system advance together in lockstep.

While this approach guarantees deterministic behavior, it comes at a cost. As systems grow larger, global synchronization becomes increasingly inefficient. Every component must wait for the slowest element to catch up before progressing. In large networks, this can dramatically reduce performance and increase overhead, since synchronization signals must propagate across the entire system.
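To make that cost concrete, here is a minimal Python sketch of a toy timing model. It is not taken from the paper: the function name `barrier_waiting_times` and the work values are invented for illustration, and the model simply assumes that each core's compute time per time step is known.

```python
def barrier_waiting_times(work):
    """Return (total_time, idle_per_core) under a global barrier.

    work[t][c] is the hypothetical time core c needs for time step t.
    With a global barrier, each step lasts as long as its slowest core.
    """
    total_time = sum(max(step) for step in work)
    cores = len(work[0])
    # Time each core actually spends computing across all steps.
    busy = [sum(step[c] for step in work) for c in range(cores)]
    # Everything else is time spent waiting at the barrier.
    idle = [total_time - b for b in busy]
    return total_time, idle


# Illustrative numbers only: four cores, three time steps.
work = [
    [1, 1, 1, 5],  # core 3 is slow in step 0
    [1, 1, 1, 1],
    [5, 1, 1, 1],  # core 0 is slow in step 2
]
total_time, idle = barrier_waiting_times(work)
print("total time:", total_time)
print("idle time per core:", idle)
```

In this toy model, the two middle cores spend most of the run waiting, even though they are never the slow ones. Adding cores can only keep the slowest core in each step the same or make it slower, which is the scaling problem in miniature.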

In simple terms, the larger the system, the more a global barrier costs, and that overhead keeps neuromorphic hardware from scaling the way the biological networks that inspire it do.

NeuroScale’s Key Innovation: Local Synchronization

The Yale team’s solution, NeuroScale, takes a fundamentally different approach. Instead of forcing the entire system to synchronize globally, NeuroScale uses a local, distributed synchronization mechanism.

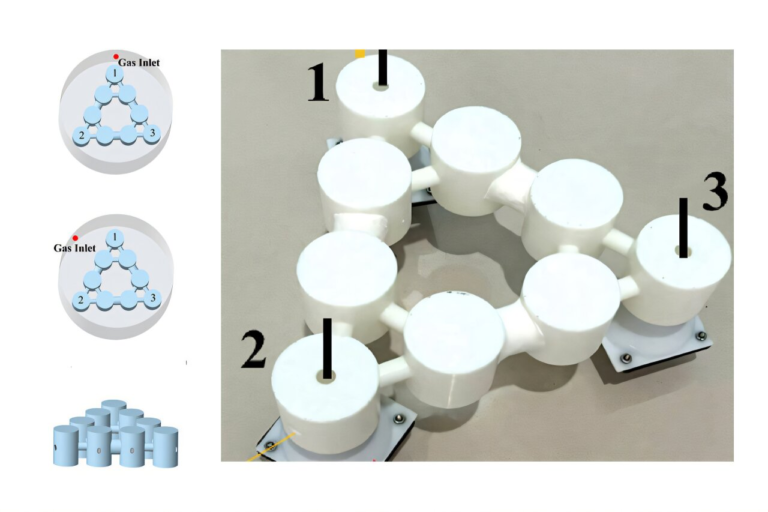

In this architecture, only directly connected clusters of neurons and synapses synchronize with each other. Each cluster operates semi-independently, coordinating timing only where communication is necessary. This removes the need for a system-wide global barrier.
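The idea can be sketched with a toy timing model in Python. This is an illustration under stated assumptions, not NeuroScale's actual protocol: the function `local_sync_finish_times`, the chain topology, and the work values are all hypothetical, and the model assumes a cluster may begin a time step as soon as it and its directly connected neighbors have finished the previous one.

```python
def local_sync_finish_times(work, neighbors):
    """Finish time of each cluster when it waits only for its neighbors.

    work[t][c]   : hypothetical time cluster c needs for time step t
    neighbors[c] : clusters that exchange spikes directly with cluster c
    """
    n = len(neighbors)
    finish = [0] * n
    for step in work:
        # A cluster is ready once it and all of its neighbors
        # have completed the previous time step.
        ready = [
            max([finish[c]] + [finish[m] for m in neighbors[c]])
            for c in range(n)
        ]
        finish = [ready[c] + step[c] for c in range(n)]
    return finish


# Illustrative setup: four clusters connected in a chain 0-1-2-3.
neighbors = [[1], [0, 2], [1, 3], [2]]
work = [
    [1, 1, 1, 5],  # cluster 3 is slow in step 0
    [1, 1, 1, 1],
    [5, 1, 1, 1],  # cluster 0 is slow in step 2
]

finish = local_sync_finish_times(work, neighbors)
# For comparison: a global barrier makes every step as long as its slowest cluster.
barrier_total = sum(max(step) for step in work)

print("local sync, finish time per cluster:", finish)
print("global barrier, total time:", barrier_total)
```

Because each cluster waits on a subset of the system instead of all of it, the finish times in this model can never exceed the global-barrier total. Here the slow step on cluster 3 overlaps with useful work on cluster 0, so the two delays partly hide each other instead of adding up.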

The result is a system that remains deterministic, producing repeatable outputs, while being far more scalable. Performance is no longer dictated by the slowest component in the entire network, and synchronization overhead does not grow uncontrollably as the system expands.

According to the researchers, NeuroScale’s scalability is limited only by the same constraints that apply to the biological neural networks being modeled, rather than by artificial hardware bottlenecks.

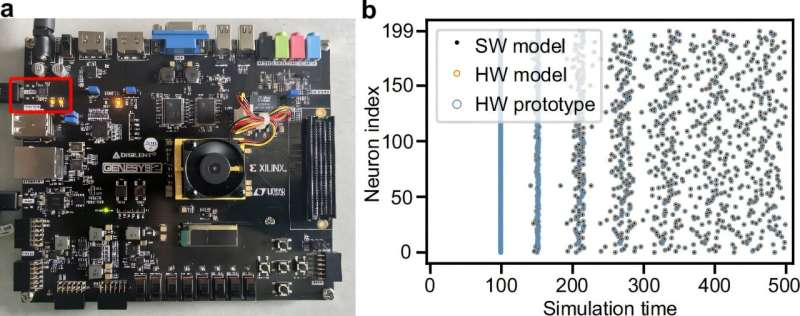

Demonstration Through Simulation and Prototypes

The NeuroScale architecture has been tested using simulations and hardware prototypes, including deterministic execution models that demonstrate how the system behaves under realistic workloads. These tests show that local synchronization can maintain precise spike timing across cores while avoiding the performance penalties associated with global barriers.

The prototype results suggest that NeuroScale can outperform conventional neuromorphic designs in scenarios involving distributed and sparse computation, where only small parts of the network are active at any given time. This aligns closely with how biological brains operate.

Why Deterministic Execution Still Matters

One of the most notable aspects of NeuroScale is that it preserves deterministic execution, a critical requirement for scientific research and reliable AI systems. Determinism ensures that the same input always produces the same output, which is essential for debugging, validation, and reproducibility.
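A small Python sketch shows what this property looks like in practice. It uses a deliberately simplified integer integrate-and-fire model, not the neuron model from the paper, and the names `simulate`, `weights`, `inputs`, and `order_seed` are hypothetical. The key assumption is that every neuron reads only the spikes from the previous time step, so the order in which neurons are processed within a step cannot change the outcome.

```python
import random


def simulate(weights, inputs, threshold, order_seed):
    """Run a tiny integrate-and-fire network and return its spike raster.

    weights[j][i] : integer weight of the connection from neuron j to neuron i
    inputs[t][i]  : integer external input to neuron i at time step t
    order_seed    : seeds a shuffled processing order, standing in for
                    unpredictable hardware scheduling
    """
    n = len(weights)
    potential = [0] * n
    prev_spikes = [0] * n
    rng = random.Random(order_seed)
    raster = []
    for external in inputs:
        order = list(range(n))
        rng.shuffle(order)  # visit neurons in an arbitrary order
        spikes = [0] * n
        for i in order:
            # Only spikes from the previous step are read, never the
            # partially updated spikes of the current step.
            drive = external[i] + sum(
                weights[j][i] * prev_spikes[j] for j in range(n)
            )
            potential[i] += drive
            if potential[i] >= threshold:
                spikes[i] = 1
                potential[i] = 0  # reset after firing
        prev_spikes = spikes
        raster.append(tuple(spikes))
    return raster


# Illustrative three-neuron ring with a constant input to neuron 0.
weights = [[0, 2, 0], [0, 0, 2], [1, 0, 0]]
inputs = [(1, 0, 0)] * 8

run_a = simulate(weights, inputs, threshold=2, order_seed=1)
run_b = simulate(weights, inputs, threshold=2, order_seed=2)
print("identical spike rasters:", run_a == run_b)
```

If the inner loop instead read spikes as they were being written in the current step, the raster could depend on the processing order, and two runs of the same input could disagree. Keeping time steps properly separated is what synchronization, global or local, has to guarantee.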

Many neuromorphic systems sacrifice determinism in favor of speed or flexibility. NeuroScale shows that it is possible to achieve both scalability and determinism, challenging the assumption that these goals are mutually exclusive.

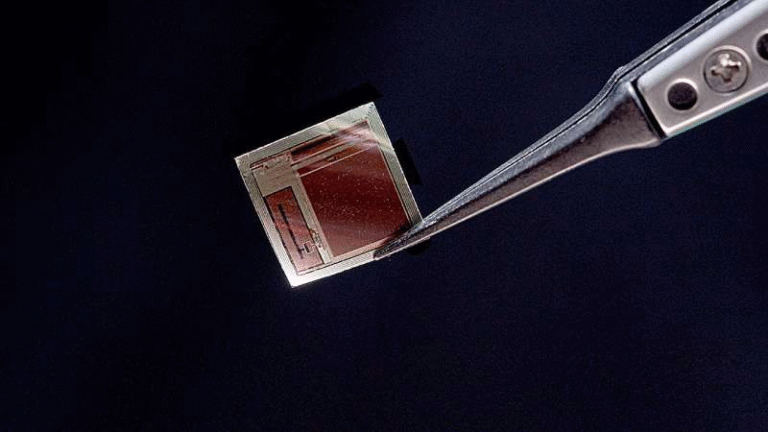

Plans for Silicon Implementation

So far, NeuroScale exists as a combination of simulation models and prototype hardware. The Yale team’s next major goal is to fabricate the NeuroScale chip, moving from experimental validation to real-world silicon implementation.

In parallel, the researchers are exploring a hybrid approach that blends NeuroScale’s local synchronization method with more traditional global synchronization techniques. This could allow future neuromorphic systems to adapt their synchronization strategy based on workload requirements, combining flexibility with reliability.

How This Fits Into the Broader Neuromorphic Landscape

Neuromorphic computing has seen growing interest over the past decade, with notable systems such as IBM’s TrueNorth and Intel’s Loihi pushing the field forward. While these platforms have demonstrated impressive energy efficiency and scalability, they still rely heavily on global timing mechanisms that limit performance at extreme scales.

NeuroScale’s architecture introduces a new design philosophy that could influence the next generation of neuromorphic hardware. By aligning synchronization more closely with biological principles, it opens the door to building systems that are both larger and more efficient.

Potential Applications Beyond AI

Beyond artificial intelligence, scalable neuromorphic hardware could have implications for real-time robotics, sensor processing, and edge computing, where power efficiency and responsiveness are critical. Systems based on NeuroScale-like designs could handle complex tasks locally, reducing reliance on centralized cloud infrastructure.

In neuroscience research, such hardware could also serve as a powerful platform for modeling large neural networks, helping scientists better understand how biological brains compute and adapt.

Why This Research Is Significant

The development of NeuroScale addresses a long-standing bottleneck in neuromorphic computing. By eliminating the need for global synchronization while maintaining deterministic behavior, the Yale team has provided a practical path toward truly large-scale brain-inspired computing systems.

As the field continues to evolve, architectures like NeuroScale may play a crucial role in bridging the gap between biological intelligence and artificial hardware.

Research Paper Reference:

https://www.nature.com/articles/s41467-025-65268-z